Researchers have developed a lightweight method to analyse and steer large language models using a fraction of the computing power, potentially making explainable and trustworthy AI accessible to smaller labs and startups.

A team of researchers has introduced a new technique that dramatically cuts the computational cost of analysing and controlling large language models (LLMs), potentially opening explainable AI to a much wider audience. Their approach can reduce the hardware and energy resources required for probing LLM behaviour by more than 90% compared with previous methods, according to research published this month.

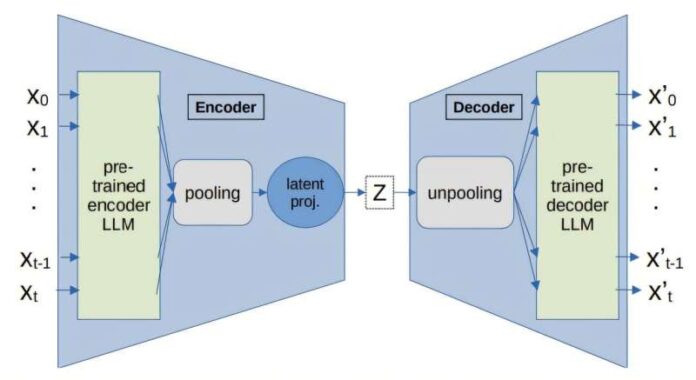

The work centres on two software frameworks, LangVAE and LangSpace, which construct compressed representations of a model’s internal language patterns. These representations allow scientists to map and manipulate how an LLM processes language using geometric tools, without modifying the model itself.

By “treating internal activations as points and shapes in a space that can be measured and adjusted,” the team can infer and steer behaviours far more efficiently than traditional, resource-intensive probing techniques.

This efficiency leap is significant because explainability, the ability to understand why AI systems make certain predictions or decisions, has long been bottlenecked by the sheer scale and opacity of models like GPT and Llama. Detailed behavioural analysis typically requires powerful clusters and extensive computational budgets, putting it beyond the reach of many university labs, startups, and smaller industrial teams.
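
To give a rough sense of what "measuring and adjusting" points in a compressed space can mean, here is a toy sketch in plain Python. It does not use the LangVAE or LangSpace APIs, and nothing in it comes from the researchers' code: the 2-D latent points, the `formal` and `casual` labels, and the helper names are all hypothetical, chosen only to illustrate the general geometric idea of finding a direction between two groups of points and moving one point along it.

```python
import math

# Hypothetical 2-D latent points (made-up values). In a real pipeline these
# would come from a trained encoder, which is not shown here.
formal = [(1.0, 2.0), (1.5, 2.5), (0.5, 1.5)]
casual = [(3.0, 0.5), (3.5, 1.0), (2.5, 0.0)]

def centroid(points):
    """Mean position of a group of points."""
    n = len(points)
    dims = len(points[0])
    return tuple(sum(p[i] for p in points) / n for i in range(dims))

def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def steer(point, direction, strength):
    """Shift a point along a direction; strength 1.0 applies the full offset."""
    return tuple(x + strength * d for x, d in zip(point, direction))

# "Measure": how far apart are the two groups, and along which direction?
c_formal = centroid(formal)
c_casual = centroid(casual)
direction = subtract(c_casual, c_formal)
distance = math.sqrt(dot(direction, direction))

def side(point):
    """Signed position relative to the midpoint between the two centroids.
    Negative means closer to the formal group, positive closer to casual."""
    midpoint = tuple((a + b) / 2 for a, b in zip(c_formal, c_casual))
    return dot(subtract(point, midpoint), direction)

# "Adjust": move one formal point toward the casual group.
start = formal[0]
moved = steer(start, direction, 1.0)

print("distance between groups:", distance)
print("side before steering:", side(start))
print("side after steering:", side(moved))
```

In this toy setup the steered point crosses from the formal side of the space to the casual side, and the language model itself is never touched. Only the point in the small latent space is moved.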

The Manchester group’s method could change that, lowering the cost of entry and enabling broader participation in trustworthy AI research. Lead researcher Dr. Danilo S. Carvalho says the team hopes that lowering these barriers “will accelerate the development of trustable and reliable AI for mission-critical applications, such as health care,” while also reducing the environmental footprint associated with AI research.

Experts in the field view this work as part of a broader shift toward more sustainable, accessible AI development. The need to balance advanced capabilities with explainability, fairness, and efficiency has driven a wave of innovations in model compression, interpretability, and resource-aware algorithms throughout 2025. If adopted widely, this geometric representation technique could make explainable AI tools a standard part of LLM toolchains, rather than a niche research specialty.