MLOps adds automation, organisation and reliability to the machine learning lifecycle. Open source MLOps tools do a great job of helping build a machine learning model, with each tool tackling a distinct challenge.

When building a machine learning (ML) model, what really makes the difference is how well the underlying data pipelines are architected, operated and aligned with the needs of the model. If behind-the-scenes processes such as data preparation, monitoring or workflow coordination are inconsistent or poorly executed, even the best algorithms and most capable teams may fail to deliver. A reliable machine learning system depends on a continuous flow of activities: data gathering and preprocessing, version control, model training and evaluation, deployment, and post-deployment monitoring. A single point of failure anywhere in this chain can cause delays, waste resources or sink the project altogether.

MLOps addresses this by bringing automation and clear structure to the process, making it more transparent and uniform. While most teams cannot afford commercial MLOps platforms, open source technology is redefining the space. These flexible, budget-friendly solutions make it possible for companies of all sizes to manage and scale machine learning projects, driving innovation and advancing AI research across industries.

Why MLOps is the backbone of sustainable machine learning

Machine learning operations, or MLOps, adds structure to the frequently disorganised process of creating and implementing machine learning models. It is more than just a collection of tools. While traditional machine learning often emphasises getting the model’s prediction right, MLOps takes a broader, more practical view, ensuring that models can be reliably trained, tested, deployed and maintained in real-world scenarios. Imagine a team of data scientists developing an impressive model, only to find it failing in production because of a minor data shift or an inability to reproduce results. In the absence of a robust operational framework, such failures are frequent.

To overcome this difficulty, MLOps adds dependability, consistency, and organisation to each phase of the ML lifecycle. Making sure models are reliable and provide actual value is more important than merely creating correct models. MLOps brings data scientists, machine learning engineers, and DevOps specialists into close collaboration. It supports accurate data versioning, thorough documentation of experiments, and scalable, repeatable model deployment. This well-organised workflow removes much of the confusion that manual procedures and scattered tools frequently cause.

Real-world challenges in ML data workflows

Most machine learning teams begin with zeal, data access, and the intention of developing a successful model. However, as the project expands, they start to run into issues that weren’t immediately apparent. The absence of organisation in data handling is one of the main problems. Data often exists in multiple versions across different team members’ systems. When someone updates a dataset or applies preprocessing changes, those changes are rarely documented or shared in a consistent way. It becomes difficult to replicate results reliably when teams lack a clear method to monitor progress or record what works.

The lack of communication between data scientists and the teams responsible for implementing the model is another frequent obstacle. A model that performs flawlessly during development may fail in production because it was trained on slightly different data or because the production pipeline differs from the training pipeline. These discrepancies frequently result in misunderstandings, unforeseen errors, and preventable delays. Even the best models can fail if they are not coordinated properly. In addition, teams often have difficulty monitoring experiments and learning from previous runs. Without a clear system to log parameters, code versions and results, they waste time repeating work. Worse, they may not even realise why one model version performed better than another.

As projects scale, manual workflows become harder to manage. Tasks like data preparation, model training and evaluation often have to be repeated for every new version of the dataset or code. Without automation, these tasks drain resources and introduce human error. The result is a fragile system. When a business needs to update a model or explain how predictions were made, the team cannot respond quickly. Trust in the model begins to erode. These challenges are not limited to large organisations. Start-ups, research teams and even solo developers experience them. That is why managing data workflows is not a luxury but a requirement for any serious machine learning initiative.

| Case study: How open source MLOps tools helped in COVID-19 diagnosis workflow management |

| Background: Healthcare systems around the world experienced an overwhelming influx of data during the COVID-19 pandemic, necessitating the quick creation and implementation of machine learning models to help with patient triage, disease diagnosis, and prediction. However, irregular processes, versioning problems, and a lack of scalable infrastructure hindered the practical application of these AI models for most healthcare teams.

Problem statement: To detect COVID-19 positive cases, a top government health research institute sought to use machine learning models to evaluate patient vitals and chest X-ray data. Even when promising model accuracy was attained in research labs, ad hoc pipelines and manual procedures made the deployment and update cycles sluggish and unreliable. The lack of a standardised way to manage experiments, version datasets, or monitor models in real time led to delays in interventions and ineffective use of resources.

Proposed solution: To address this difficulty, the organisation used open source MLOps tools to handle the entire machine learning pipeline.

Used together, these tools made the ML workflow more efficient, covering raw data collection, preprocessing, model training, evaluation, and deployment under a monitored, scalable infrastructure.

Outcome: With the help of MLOps, the time-to-deployment was drastically shortened from weeks to days. With updated models, the clinical teams could react to new variants more quickly, and model accuracy was constantly checked for declines in performance. Reproducible tests also produced more reliable medical results and helped secure authorisation for clinical deployment.

Key takeaways: This is how mission-critical health projects can achieve operational excellence using open source MLOps technologies, especially in the face of limited resources.

Source: NCBI COVID-19 AI Diagnosis Study (https://pmc.ncbi.nlm.nih.gov/articles/PMC7395236/) |

How open source tools power modern ML workflows

The machine learning lifecycle involves many moving parts, from data collection to model deployment. As teams and projects get bigger, it gets harder to manage this entire process manually or with bespoke scripts. For this reason, open source MLOps tools now sit at the core of contemporary ML processes. These tools can be used freely, are adaptable to different industries, and keep evolving through community contributions. Unlike commercial platforms, open source solutions are not tied to a single vendor, which gives teams greater freedom over how they develop and expand their machine learning systems.

Every tool in the ecosystem tackles a distinct problem. DVC, for instance, assists in managing pipeline definitions and data versioning, so that each dataset and experiment can be traced. MLflow makes it simple to log, compare, and reproduce experiments across teams. As an orchestration engine, Airflow schedules intricate processes and runs their steps in the right order. Alongside these more established tools, newer systems like ZenML and Feast are also having a significant impact. Feast concentrates on managing and serving features effectively, whereas ZenML assists teams in creating reusable pipeline components.
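To make the orchestration idea concrete, here is a minimal sketch of dependency-ordered execution, the core idea behind an orchestration engine. It is not Airflow code and does not use the Airflow API: it relies only on Python's standard `graphlib` module (Python 3.9 or later), and the task names and the `run_pipeline` helper are hypothetical, chosen purely for illustration.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task only after all the tasks it depends on have run.

    tasks: maps a task name to a zero-argument callable.
    dependencies: maps a task name to the set of task names it depends on.
    Returns the order in which the tasks were executed.
    """
    # static_order() raises graphlib.CycleError if the graph has a cycle,
    # so a badly defined pipeline fails before any task is executed.
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    # Hypothetical four-step workflow; each step just announces itself.
    steps = ("ingest", "preprocess", "train", "evaluate")
    demo_tasks = {step: (lambda step=step: print("running", step)) for step in steps}
    demo_dependencies = {
        "preprocess": {"ingest"},
        "train": {"preprocess"},
        "evaluate": {"train"},
    }
    run_pipeline(demo_tasks, demo_dependencies)
```

A real orchestrator adds scheduling, retries, logging and distributed execution on top of this ordering step, which is why teams adopt a dedicated tool rather than maintain such scripts themselves.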

The transparency that open source software provides is among its most significant advantages. Teams can examine the real source code, comprehend how it works, and alter it to suit the requirements of their projects. Even when team members have different levels of technical expertise, this transparency fosters trust and facilitates easier collaboration.

Open source MLOps is now much more than just a way to cut costs. It has emerged as a potent force for innovation, dismantling the obstacles of closed platforms and costly infrastructure. Open source technologies are enabling teams, from small startups to large corporations and academic researchers, to create scalable, production-ready machine learning pipelines with more control and flexibility.

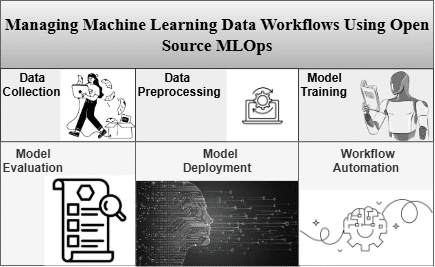

Conceptual workflow of ML project with open source MLOps

Building a model is only one aspect of managing a machine learning project. It entails several related actions that need to be meticulously planned and monitored. Open source MLOps tools give this process structure by defining precise, scalable, and repeatable routines. Data collection is frequently the first step in the process. At this stage, raw datasets are collected, labelled, and stored. These datasets are versioned with the aid of tools such as DVC, which enable teams to revert to an earlier version when necessary. When models later need to be retrained or audited, this versioning is essential. Preprocessing is the next step. This covers feature extraction, transformation, and cleaning. To manage feature stores that supply consistent features during both training and inference, some teams employ technologies like Feast.
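The dataset versioning step described above can be illustrated with a small sketch. It is not how DVC is implemented and does not use DVC's commands or API; it only shows the underlying idea of identifying each dataset version by a hash of its contents. The function names and the JSON registry file are assumptions made for this example.

```python
import hashlib
import json
from pathlib import Path


def dataset_fingerprint(path: Path) -> str:
    """Return the SHA-256 hex digest of the file's bytes."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large datasets do not have to fit in memory.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_version(path: Path, registry: Path, note: str) -> str:
    """Append the dataset's fingerprint to a JSON registry.

    Nothing is written if the fingerprint matches the latest entry,
    so unchanged data does not create a new version.
    """
    entries = json.loads(registry.read_text()) if registry.exists() else []
    fingerprint = dataset_fingerprint(path)
    if not entries or entries[-1]["sha256"] != fingerprint:
        entries.append({"file": path.name, "sha256": fingerprint, "note": note})
        registry.write_text(json.dumps(entries, indent=2))
    return fingerprint
```

Because the fingerprint depends only on the bytes of the file, two team members holding the same data get the same version identifier, and any silent change to the dataset shows up as a new entry in the registry.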

As models are built and trained, experiment tracking becomes essential. MLflow allows teams to log parameters, results and artifacts for each experiment. This visibility helps in comparing approaches and choosing the most effective one. The next phase is pipeline orchestration. Airflow and similar tools let teams schedule and automate workflows. From fetching new data to retraining models on a schedule, these tools ensure the process runs smoothly without manual effort. Deployment and monitoring are the final pieces. MLOps ensures that models move into production in a controlled, traceable way. Teams can monitor performance over time and trigger retraining when needed. Each tool in this pipeline plays a specific role, but together they create a reliable system. A well-managed workflow not only improves accuracy and efficiency but also boosts confidence in the ML solution. It allows teams to move fast without losing control.
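As a rough illustration of the experiment tracking step, the sketch below logs each run's parameters, metrics and code version to a JSON Lines file and then picks the best run. It is a simplified stand-in, not MLflow and not its API; the function names, the log file layout and the `code_version` field are assumptions made for this example.

```python
import json
import time
from pathlib import Path


def log_run(log_file: Path, params: dict, metrics: dict, code_version: str) -> None:
    """Append one experiment record as a single JSON line."""
    record = {
        "time": time.time(),
        "code_version": code_version,  # for example, a Git commit hash
        "params": params,
        "metrics": metrics,
    }
    with log_file.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record) + "\n")


def best_run(log_file: Path, metric: str) -> dict:
    """Return the logged run with the highest value for the given metric."""
    with log_file.open(encoding="utf-8") as handle:
        runs = [json.loads(line) for line in handle if line.strip()]
    return max(runs, key=lambda run: run["metrics"][metric])
```

Even a log this simple answers the question of why one model version performed better than another, because every result is stored alongside the parameters and code version that produced it. A dedicated tracking tool adds artifact storage, a comparison UI and shared access across the team.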

Machine learning success is not defined solely by the accuracy of a model. It is defined by how well the entire journey from raw data to real-world deployment is managed. Without structured workflows, reproducible processes and collaborative practices, even the most accurate models can fail to deliver value in production. MLOps changes that reality by shifting the focus from model-centric thinking to system-centric design. Through tools like DVC, MLflow and Airflow, teams can build ML workflows that are traceable and sustainable. Teams of all sizes and budgets can now use cutting-edge machine learning capabilities thanks to open source solutions that are removing the obstacles of high cost and technical complexity.

The ability of teams to grow and manage their machine learning workflows will be the true differentiator as we approach a future in which machine learning will influence decisions in every industry. Those who do it correctly will advance, while others run the risk of falling behind. Open source MLOps tools provide the blueprint. It is now up to teams and organisations to implement that blueprint and unlock the true potential of machine learning.