Everywhere we look in today’s data management landscape, the volume of information is soaring. According to one estimate, about 1,200 exabytes of data were created in 2010, and this will grow to nearly 8,000 exabytes by 2015, with the Internet/Web being the primary data driver and consumer.

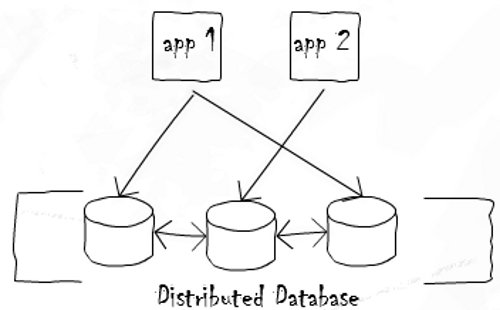

This growth is outpacing the growth of storage capacity, leading to the emergence of information management systems where data is stored in a distributed way, but accessed and analysed as if it resides on a single machine (Figure 1).

Besides resolving the data size problem, these solutions also need to cater to massive performance requirements to ensure timeliness of data processing. Unfortunately, an increase in processing power does not directly translate to faster data access, triggering a rethink on existing database systems.

To understand the enormity of data volumes, let’s look at a couple of figures: Facebook needs to store 135 billion messages a month. Twitter has to store 7 TB of data per day, with the prospect of this requirement doubling multiple times per year. The criticality of data and the continuity of data availability have become more important than ever. We expect data to be available 24×7 and from everywhere.

This brings in the third dimension of high availability and durability. So we have to factor in high availability without any single point of failure, which has traditionally been a telecom forte. These challenges have created a new wave in database processing solutions, which manage data in both structured and unstructured ways.

Legacy information management systems are characterised by monolithic relational databases, disk/tape-based stores (as memory in huge quantities is scarce or expensive), vendor lock-in and limited enterprise-level scalability. With the advent of the cloud, new data management solutions are emerging to handle distributed (relational/non-relational) content on open platforms at the speed of a mouse-click.

NoSQL

One of the key advances in resolving the “big-data” problem has been the emergence of NoSQL as an alternative database technology. NoSQL (sometimes expanded to “not only SQL”) is a broad class of DBMS that differ significantly from the classic RDBMS model. These data stores may not require fixed table schemas, usually avoid join operations and typically scale horizontally.
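As a rough illustration of the first two traits (no fixed schema, no joins), here is a minimal Python sketch that uses an in-memory dictionary as a stand-in for a document store. The class `TinyDocumentStore`, its methods and the sample records are hypothetical and invented for this example; they do not correspond to the API of any real NoSQL product.

```python
import json


class TinyDocumentStore:
    """Hypothetical in-memory stand-in for a document store (illustration only)."""

    def __init__(self):
        self._docs = {}  # key -> JSON string; no table schema is declared anywhere

    def put(self, key, document):
        # Documents are stored as opaque JSON, so each one may have different fields.
        self._docs[key] = json.dumps(document)

    def get(self, key):
        raw = self._docs.get(key)
        return None if raw is None else json.loads(raw)


store = TinyDocumentStore()

# Two "user" documents with different shapes: no fixed schema is enforced.
store.put("user:1", {"name": "Asha", "email": "asha@example.com"})
store.put("user:2", {"name": "Ravi", "games": ["chess", "carrom"], "city": "Pune"})

# Orders are embedded in the customer document (denormalised), so reading a
# customer together with their orders is one key lookup rather than a join.
store.put("customer:42", {
    "name": "Meera",
    "orders": [
        {"item": "keyboard", "qty": 1},
        {"item": "monitor", "qty": 2},
    ],
})

customer = store.get("customer:42")
total_items = sum(order["qty"] for order in customer["orders"])
print(total_items)
```

The trade-off of embedding is that the same data may be duplicated across documents, which is one reason such stores tend to relax the guarantees an RDBMS gives.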

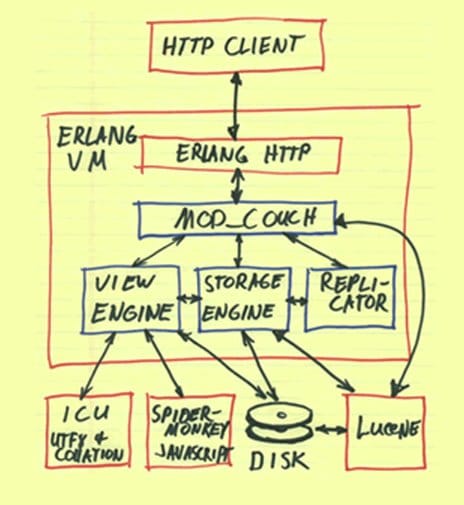

NoSQL solutions have carved a niche market for themselves as key-value stores, document databases, graph databases and big-tables. Use cases for these solutions abound in Web applications: Web-based social interactions like online gaming and networking, revenue-maximising decisions like ad offerings, and basic operations like searching the Internet. Figure 2 shows the architecture of a well-known NoSQL database, CouchDB, which is written in Erlang.

But is NoSQL the answer to all problems? In spite of the technological advances offered by NoSQL solutions, RDBMS users in enterprises are reluctant to switch to them. Why?

The greatest deployment of traditional RDBMS is primarily in enterprises, for everything the enterprise does, whether storing customer information, internal financials, employee information or anything else. Is such data growing? Yes, so big-data issues are relevant to them. So why not NoSQL?

Even though there is a variety of NoSQL offerings, they are typically characterised by a lack of SQL support and non-adherence to the ACID (Atomicity, Consistency, Isolation and Durability) properties. (Here, we will not go into the technical reasons for this.)

So while NoSQL could help enterprises manage large distributed data, enterprises cannot afford to lose the ACID properties — which were the key reasons for choosing an RDBMS in the first place. Also, since NoSQL solutions don’t provide SQL support, which most current enterprise applications require, this pushes enterprises away from NoSQL.
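To show what an enterprise application stands to lose, the following sketch demonstrates atomicity, the "A" in ACID, through a SQL interface. It uses Python's built-in `sqlite3` module purely as a convenient stand-in for an RDBMS; the `accounts` table, the `transfer` and `balances` helpers and the sample data are assumptions made for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move money between two accounts as one all-or-nothing transaction."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
            )
            # If this debit violates the CHECK constraint, the credit above
            # is rolled back as well, so no money is created or lost.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))


print(transfer(conn, "alice", "bob", 30), balances(conn))   # succeeds
print(transfer(conn, "alice", "bob", 500), balances(conn))  # fails, balances unchanged
```

The second transfer fails halfway through, yet the balances are identical before and after it. An application written against a store without transactions would have to implement this compensation logic itself.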

Hence, a new set of data-management solutions is emerging to address large-data OLTP concerns, without sacrificing ACID and SQL interfaces.

Why is traditional OLTP insufficient?

We have already discussed the explosion in the OLTP data space. For example, the more widely social networking sites (like Facebook and LinkedIn) are used, the larger their OLTP requirements become. Each user of such a site needs credentials and profile information to be stored, generally in an OLTP database, even though the actual user data is stored in a NoSQL data store. Facebook’s 750 million users require a very large OLTP database.

While growing OLTP data is a direct contributor, business requirements also force non-OLTP data to be managed as OLTP. Let’s look at the example of how analytics (which is the quintessential OLAP application) has led to the scalability requirements of OLTP.

Case study: Real-time analytics

In the earlier days, analytics were performed on historic data with specialised data warehouse solutions, mostly using an ETL (Extract, Transform and Load) approach. Data extracted from OLTP systems was fed to data warehouse systems capable of handling voluminous data. Real-time analytics is an approach that enables business users to get up-to-the-minute data by directly accessing business operational systems or feeding real-time transactions to analytics systems.

For example, Google Analytics previously analysed only past performance, but Google recently launched Google Analytics: Real Time, which offers a set of new reports showing what is happening on a user’s site, as it happens.

Even though traditional OLTP systems provide ACID guarantees, they are not well equipped to handle the data volumes generated by new business scenarios such as real-time analytics. When a traditional OLTP system feeds an analytics system, the scalability and performance limits of the OLTP side can leave the analytics system under-utilised.

Welcome NewSQL

To address big-data OLTP business scenarios that neither traditional OLTP systems nor NoSQL systems address, alternative database systems have evolved, collectively named NewSQL systems. This term was coined by the 451 Group, in their now famous report, “NoSQL, NewSQL and Beyond”.

The term NewSQL was used to categorise these new alternative database systems. 451 Group’s senior analyst, Matthew Aslett, clarified the meaning of the term NewSQL (in his blog) as follows: “NewSQL is our shorthand for the various new scalable/high-performance SQL database vendors. We have previously referred to these products as ‘ScalableSQL’ to differentiate them from the incumbent relational database products. Since this implies horizontal scalability, which is not necessarily a feature of all products, we adopted the term NewSQL in the new report. And to clarify, like NoSQL, NewSQL is not to be taken too literally: the new thing about the NewSQL vendors is the vendor, not the SQL. NewSQL is a set of various new scalable/high-performance SQL database vendors (or databases). These vendors have designed solutions to bring the benefits of the relational model to the distributed architecture, and improve the performance of relational databases to an extent that the scalability is no longer an issue.”

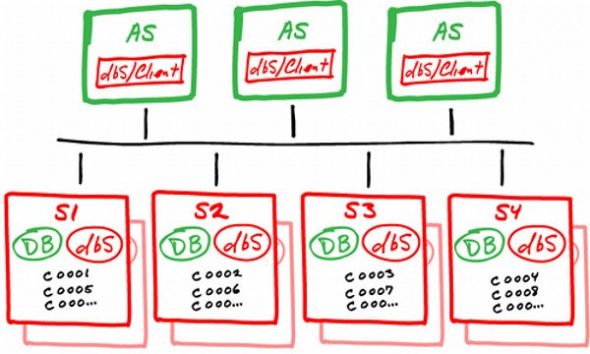

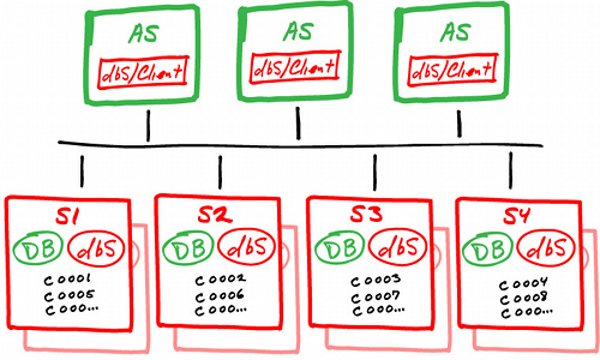

Figure 3 is an architectural example of one of the NewSQL solutions (dbShards).

Technical characteristics of NewSQL solutions

- SQL as the primary mechanism for application interaction.

- ACID support for transactions.

- A non-locking concurrency control mechanism, so that real-time reads do not conflict with writes and cause them to stall.

- An architecture providing much higher per-node performance than available from traditional RDBMS solutions.

- A scale-out, shared-nothing architecture, capable of running on a large number of nodes without suffering bottlenecks.
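The list above does not say which non-locking mechanism a given product uses. One common technique is multi-version concurrency control (MVCC), where a write appends a new version instead of overwriting the old one, and each reader works from a snapshot. The toy, single-threaded Python sketch below illustrates only that idea; the `MVCCStore` class and its method names are hypothetical and are not taken from any NewSQL product.

```python
import itertools


class MVCCStore:
    """Toy multi-version store: writers append versions, readers use snapshots."""

    def __init__(self):
        self._versions = {}               # key -> list of (commit_ts, value), ascending
        self._clock = itertools.count(1)  # source of increasing commit timestamps
        self._last_commit = 0

    def write(self, key, value):
        # A write never overwrites in place; it appends a new committed version.
        ts = next(self._clock)
        self._versions.setdefault(key, []).append((ts, value))
        self._last_commit = ts
        return ts

    def snapshot(self):
        # A reader remembers the latest commit timestamp at the moment it starts.
        return self._last_commit

    def read(self, key, snapshot_ts):
        # Return the newest version committed at or before the reader's snapshot.
        for ts, value in reversed(self._versions.get(key, [])):
            if ts <= snapshot_ts:
                return value
        return None


db = MVCCStore()
db.write("stock:widget", 10)
report_snapshot = db.snapshot()  # a long-running analytic read starts here
db.write("stock:widget", 7)      # an OLTP write arrives while the report runs

print(db.read("stock:widget", report_snapshot))  # the report still sees 10
print(db.read("stock:widget", db.snapshot()))    # a new reader sees 7
```

Because the reader never needs the latest version, the writer does not have to wait for it, and vice versa. A real engine would also need garbage collection of old versions and conflict detection between concurrent writers, both omitted here.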

The expectation is that NewSQL systems are about 50 times faster than traditional OLTP RDBMS.

Similar to NoSQL, there are many categories of NewSQL solutions. Now we can look at these categorisations and their characteristics.

NewSQL categorisation

Categorisation is based on the different approaches adopted by vendors to preserve the SQL interface, and address the scalability and performance concerns of traditional OLTP solutions.

- New databases: These NewSQL systems are designed from scratch for scalability and performance. Some (hopefully minor) changes to application code will be required, and existing data still has to be migrated. A key consideration in improving performance is making memory, or newer kinds of storage such as flash/SSD, the primary data store. Solutions can be software-only (VoltDB, NuoDB and Drizzle) or delivered as an appliance (Clustrix, Translattice). Clustrix, NuoDB and Translattice are commercial offerings; VoltDB, Drizzle and others are open source.

- New MySQL storage engines: MySQL is part of the LAMP stack and is used extensively in OLTP. To overcome MySQL’s scalability problems, a set of storage engines has been developed, including Xeround, Akiban, MySQL NDB Cluster, GenieDB and Tokutek. The advantage is that applications keep using the MySQL interface; the downside is that data migration from other databases (including old MySQL) is not supported. Xeround, GenieDB and Tokutek are commercial offerings; Akiban, MySQL NDB Cluster and others are open source.

- Transparent clustering: These solutions retain the OLTP databases in their original format, but provide a pluggable feature to cluster transparently, to ensure scalability. Another approach is to provide transparent sharding to improve scalability. Schooner MySQL, Continuent Tungsten and ScalArc follow the former approach, whereas ScaleBase and dbShards follow the latter approach. Both approaches allow reuse of existing skillsets and ecosystem, and avoid the need to rewrite code or perform any data migration. Examples of offerings are ScalArc, Schooner MySQL, dbShards and ScaleBase (commercial); and Continuent Tungsten (open source).
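The transparent sharding idea mentioned above can be sketched as a thin routing layer: the application keeps issuing SQL, and the layer decides which underlying database receives each statement. The Python sketch below is a simplified illustration under stated assumptions. In-memory SQLite databases stand in for separate database servers, and the `ShardRouter` class, the `orders` table and the CRC32-modulo placement rule are all invented for this example rather than taken from dbShards, ScaleBase or any other product.

```python
import sqlite3
import zlib


class ShardRouter:
    """Hypothetical router that hides several databases behind one SQL-style call."""

    def __init__(self, shard_count):
        # Each in-memory SQLite database stands in for a separate database server.
        self.shards = [sqlite3.connect(":memory:") for _ in range(shard_count)]
        for shard in self.shards:
            shard.execute("CREATE TABLE orders (customer_id TEXT, item TEXT)")

    def shard_for(self, shard_key):
        # A stable hash keeps a given key on the same shard across calls and runs.
        return zlib.crc32(shard_key.encode("utf-8")) % len(self.shards)

    def execute(self, shard_key, sql, params=()):
        # Single-shard statement: routed to exactly one database.
        shard = self.shards[self.shard_for(shard_key)]
        with shard:  # commit on success, roll back on error
            return shard.execute(sql, params).fetchall()

    def execute_all(self, sql, params=()):
        # Cross-shard query: fan out to every shard and concatenate the rows.
        rows = []
        for shard in self.shards:
            rows.extend(shard.execute(sql, params).fetchall())
        return rows


router = ShardRouter(shard_count=4)
for customer, item in [("c1", "keyboard"), ("c2", "mouse"), ("c1", "monitor")]:
    router.execute(customer, "INSERT INTO orders VALUES (?, ?)", (customer, item))

c1_items = router.execute(
    "c1", "SELECT item FROM orders WHERE customer_id = ? ORDER BY item", ("c1",)
)
per_shard_counts = router.execute_all("SELECT COUNT(*) FROM orders")
print(c1_items)
print(sum(count for (count,) in per_shard_counts))
```

All rows for one customer land on one shard, so single-customer statements touch a single database. Queries that span customers must be fanned out and merged, and a production system would also have to handle transactions that cross shards, which this sketch does not attempt.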

Summing up

Given the continuing trend of data growth in OLTP systems, a new generation of solutions is required to cater to them. DBMSs have to be rearchitected from scratch to meet this demand. However, unlike NoSQL, these DBMSs have to cater for applications already written for an earlier generation of RDBMS. Hence, drastic interface changes like throwing out SQL, or extensive changes to the data schema, are out of the question.

A new generation of information management systems, termed NewSQL systems, caters to this trend and these constraints. NewSQL is apt for businesses that are planning to:

- migrate existing applications to adapt to new trends of data growth,

- develop new applications on highly scalable OLTP systems, and

- rely on existing knowledge of OLTP usage.

We look forward to your comments on this introductory article, and we also plan a series of in-depth expert articles on NewSQL database technology.

Excellent introductory article. What’s really interesting about NewSQL is the rapid emergence of use cases from both “traditional economy” and “Web economy” organizations. For example, VoltDB is finding ground in “traditional” capital markets applications like trade order processing, real-time trade compliance and trade cost analysis. In the “Web economy”, we’re seeing significant traction in digital advertising, online gaming, network DDoS detection/mitigation and real-time website analytics.

What do these applications have in common? They all need to ingest data at very high rates (up to millions of database writes per second), they need to scale inexpensively and smoothly as their apps gain user momentum, and they can’t operate in a model of eventual consistency (i.e., they need ACID transactions). The fact that NewSQL products support SQL as their native data language also allows organizations to immediately leverage existing skills and infrastructures.

It’s important to consider, however, that needs may exist which will be best served by complementary database technologies. For example, many users are pairing VoltDB with an analytic datastore such as Vertica or a massively parallel framework like Hadoop. Doing so allows them to use the best tools for each job – VoltDB for the high Velocity aspects of their Big Data applications and a complementary analytic solution for their high Volume needs. We anticipate seeing more of these interesting combinations as users gain experience and success with them in the field.
