Cloud platforms enable high performance computing without the need to purchase the required infrastructure. Cloud services are billed on a ‘pay per use’ basis, which is usually more economical than buying the hardware outright. This article takes a look at cloud platforms like Neptune, BigML, Deep Cognition and Google Colaboratory, all of which can be used for high performance applications.

Software applications, smart devices and gadgets face many performance challenges, including load balancing, turnaround time, delay, congestion, Big Data processing and parallel computation. Handling these traditionally consumes enormous computational resources, and low-configuration computers cannot cope with high performance tasks. The laptops and desktops available in the market are designed for personal use, so they run into numerous performance issues when tasked with high performance jobs.

For example, a desktop computer or laptop with a 3GHz processor runs about 3 billion clock cycles per second, which corresponds to roughly 3 billion simple computations per second. High performance computing (HPC), however, is focused on solving complex problems that involve trillions or quadrillions of computations, at high speed and with maximum accuracy.
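To put these numbers in perspective, the short calculation below estimates how long a single 3GHz machine would need for workloads of that size. It is only a back-of-the-envelope sketch: it assumes one computation per clock cycle and ignores multiple cores, memory access and I/O, and the function name `seconds_needed` is purely illustrative.

```python
# Rough estimate only: assumes one computation per clock cycle on a single core.
CLOCK_HZ = 3e9  # 3 GHz


def seconds_needed(computations, rate_per_second=CLOCK_HZ):
    """Return the time in seconds to finish the given number of computations."""
    return computations / rate_per_second


for label, count in [("one trillion", 1e12), ("one quadrillion", 1e15)]:
    secs = seconds_needed(count)
    print(f"{label}: {secs:,.0f} s (about {secs / 3600:,.1f} hours)")
```

Under these assumptions, a trillion computations take about five and a half minutes, while a quadrillion take nearly four days, which is why such workloads are spread across many processors.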

Application domains and use cases

High performance computing applications are used in domains that demand much higher speed and accuracy than traditional scenarios, and where the cost of the required infrastructure is also very high.

The following are the use cases where high performance implementations are required:

- Nuclear power plants

- Space research organisations

- Oil and gas exploration

- Artificial intelligence and knowledge discovery

- Machine learning and deep learning

- Financial services and digital forensics

- Geographical and satellite data analytics

- Bio-informatics and molecular sciences

Working with cloud platforms for high performance applications

There are a number of cloud platforms on which high performance computing applications can be launched without users needing physical access to a supercomputer. These cloud services are billed on a usage basis, which costs less than purchasing the infrastructure required to run high performance computing applications.

The following are a few of the prominent cloud based platforms that can be used for advanced implementations including data science, data exploration, machine learning, deep learning, artificial intelligence, etc.

Neptune

URL: https://neptune.ml/

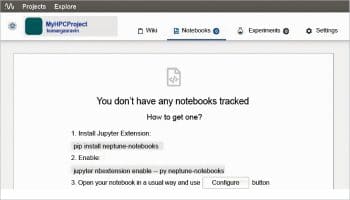

Neptune is a lightweight cloud based service for high performance applications including data science, machine learning, predictive knowledge discovery, deep learning, modelling training curves and many others. Neptune can be integrated with Jupyter notebooks so that Python programs can be easily executed for multiple applications.

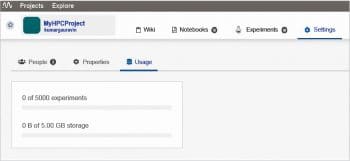

The Neptune dashboard, on which multiple experiments can be performed, is available at https://ui.neptune.ml/. Neptune works as a machine learning lab in which assorted algorithms can be programmed and their outcomes visualised. The platform is available as Software as a Service (SaaS), so deployment can be done on the cloud. Deployments can also be done on the users’ own hardware and mapped to the Neptune cloud.

In addition to having a pre-built cloud based platform, Neptune can be integrated with Python and R programming so that high performance applications can be programmed. Python and R are prominent programming environments for data science, machine learning, deep learning, Big Data and many other applications.

For Python programming, Neptune provides neptune-client, which handles communication with the Neptune server so that data analytics can be run and tracked on its cloud.

For integration of Neptune with R, the ‘reticulate’ library, which lets R code call Python, makes it possible to use neptune-client from R.

The detailed documentation for the integration of R and Python with Neptune is available at https://docs.neptune.ml/python-api.html and https://docs.neptune.ml/r-support.html.

Integration with MLflow and TensorBoard is also available. MLflow is an open source platform for managing the machine learning life cycle, including reproducibility, experimentation and deployment. It has three key components: tracking, projects and models. These can be programmed and controlled using the Neptune-MLflow integration.

TensorFlow can be associated with Neptune using Neptune-TensorBoard. TensorFlow is one of the most powerful frameworks for deep learning and advanced knowledge discovery.

With these integrations, the Neptune cloud can be used for high performance, research based implementations.

BigML

URL: https://bigml.com/

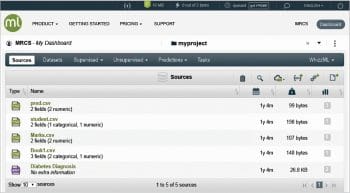

BigML is a cloud based platform for the implementation of advanced algorithms with assorted data sets. This cloud based platform has a panel for implementing multiple machine learning algorithms with ease.

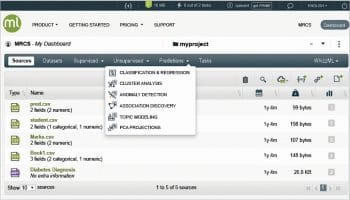

The BigML dashboard provides access to different data sets and algorithms, grouped under supervised and unsupervised taxonomy, as shown in Figure 4. The researcher can choose an algorithm from the menu according to the requirements of the research domain.

A number of tools, libraries and repositories are integrated with BigML so that the programming, collaboration and reporting can be done with a higher degree of performance and minimum error levels.

Algorithms and techniques can be attached to specific data sets for evaluation and deep analytics, as shown in Figure 5. Using this methodology, the researcher can work with both the code and the data set on a single, easier-to-use platform.

The following are the tools and libraries associated with BigML for multiple applications of high performance computing:

- Node-Red for flow diagrams

- GitHub repos

- BigMLer as the command line tool

- Alexa Voice Service

- Zapier for machine learning workflows

- Google Sheets

- Amazon EC2 Image PredictServer

- BigMLX app for MacOS

Google Colaboratory

URL: https://colab.research.google.com

Google Colaboratory is one of the cloud platforms for the implementation of high performance computing tasks including artificial intelligence, machine learning, deep learning and many others. It is a cloud based service which integrates Jupyter Notebook so that Python code can be executed as per the application domain.

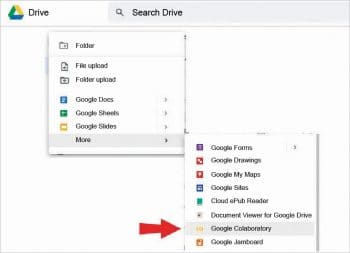

Google Colaboratory is available as a Google app in Google Cloud Services. It can be invoked from Google Drive as depicted in Figure 6 or directly at https://colab.research.google.com.

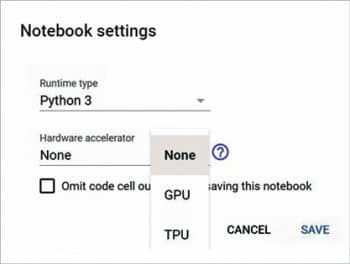

The Jupyter notebook in Google Colaboratory runs on the CPU by default. If a hardware accelerator like the tensor processing unit (TPU) or the graphics processing unit (GPU) is required, it can be activated from Notebook Settings, as shown in Figure 7.
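After changing the runtime type, it can be useful to confirm from inside the notebook which accelerator is actually attached. The snippet below is a heuristic sketch using only the Python standard library. It assumes that TPU runtimes expose a `COLAB_TPU_ADDR` environment variable (as older Colab TPU runtimes have done) and that GPU runtimes ship the `nvidia-smi` tool; neither is guaranteed, and the function name `detect_accelerator` is purely illustrative.

```python
import os
import shutil


def detect_accelerator(env=None, which=shutil.which):
    """Guess the attached accelerator: 'TPU', 'GPU' or 'CPU'.

    Heuristic only: the environment variable and the tool name checked
    here are assumptions about how the runtime is set up.
    """
    env = os.environ if env is None else env
    if env.get("COLAB_TPU_ADDR"):      # assumed marker of a TPU runtime
        return "TPU"
    if which("nvidia-smi"):            # NVIDIA driver tool found on PATH
        return "GPU"
    return "CPU"


print("Detected accelerator:", detect_accelerator())
```

Deep learning frameworks offer their own, more reliable device queries; this check is only meant as a quick sanity test before starting a long run.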

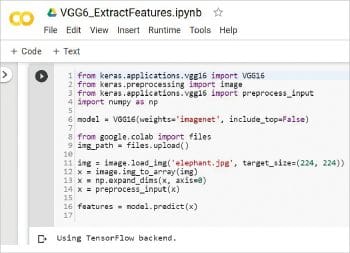

Figure 8 presents a view of Python code that is imported in the Jupyter Notebook. The data set can be placed in Google Drive and mapped to the code, so that the script can directly perform the programmed operations on it. The outputs and logs are displayed in the Jupyter Notebook within Google Colaboratory.
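One common way to map a data set stored in Google Drive to notebook code is to mount the drive with the `google.colab` helper module. The sketch below assumes the file sits at the top level of the drive, under the `/content/drive/MyDrive` folder; the file name `dataset.csv` and the function name `resolve_dataset` are hypothetical. Outside Colab, where `google.colab` is not installed, it falls back to a local directory.

```python
from pathlib import Path


def resolve_dataset(filename, local_dir="."):
    """Return the path to a data set, mounting Google Drive when in Colab."""
    try:
        from google.colab import drive  # only importable inside Colab
    except ImportError:
        # Not running in Colab: look for the file in a local directory.
        return Path(local_dir) / filename
    drive.mount("/content/drive")  # prompts for authorisation on first use
    # Assumed location: top level of the user's drive.
    return Path("/content/drive/MyDrive") / filename


data_file = resolve_dataset("dataset.csv")  # hypothetical file name
print("Data set path:", data_file)
```

Once the path is resolved, the file can be read like any local file, for example with the `csv` module or a data analysis library.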

Deep Cognition

URL: https://deepcognition.ai/

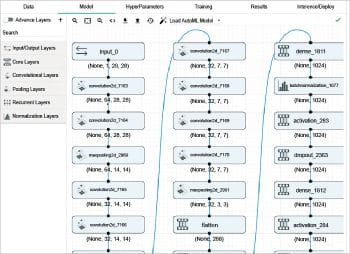

Deep Cognition provides a platform to implement advanced neural networks and deep learning models. AutoML with Deep Cognition provides an integrated development environment (IDE) in which the coding, testing and debugging of advanced models can be done.

It has a visual editor in which multiple layers of different types can be combined into a model. The layers that can be added include core layers, hidden layers, convolutional layers, recurrent layers, pooling layers and many others.

The platform supports the MXNet and TensorFlow frameworks for scientific computations and deep neural networks.

Scope for research and development

Research scholars, academicians and practitioners can work on advanced algorithms and their implementations using cloud based platforms dedicated to high performance computing. With this type of implementation, there is no need to purchase specific infrastructure or devices; instead, the supercomputing environment can be rented on the cloud.