Containers have changed the way organisations create, ship and maintain applications. Container orchestration automates the deployment, maintenance, scaling and networking of containers. For enterprises that must deploy and manage containers across many hosts, container orchestration is often the only dependable option.

Container platforms, led by the ubiquitous Docker, are now used to package applications so that they can access a specific set of resources on a physical or virtual host's operating system. In microservice architectures, applications are further broken up into discrete services, each packaged in a separate container. For organisations, a key advantage of containers is that they fit naturally with continuous integration and continuous delivery (CI/CD) practices, and they scale easily: ephemeral instances of applications or services, hosted in containers, come and go as needed. However, that scalability can itself become an operational challenge. Managing the deployment of ten containers running four or five applications is not complex, but if the number of containers grows to, say, 1,000 and the number of applications to 400 or 500, management becomes much harder. Container orchestration automates deployment, management, scaling and even the 24×7 availability of containers.

What exactly is container orchestration?

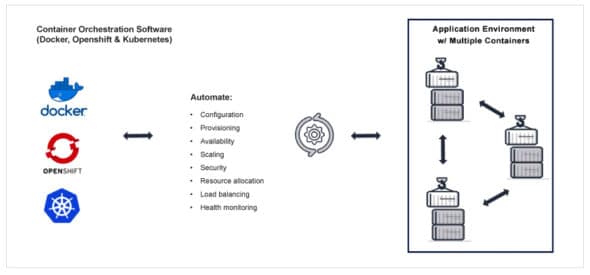

Container orchestration is used across varied enterprise environments to manage the life cycle of containers. It controls and automates the following tasks:

- Resource allocation

- Redundancy and 24×7 availability

- Deployment and provisioning

- Health monitoring of all containers and attached hosts

- Real-time addition and removal of containers across the entire infrastructure

- Application configuration
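To illustrate the health-monitoring task, the following Python sketch counts how many restarts a simple health-check loop would trigger for a sequence of probe results. It is loosely modelled on the Kubernetes liveness probe, whose `failureThreshold` setting (default 3) is the number of consecutive failures tolerated before the container is restarted. The function name and probe data are hypothetical, and probe timing, back-off and restart policies are deliberately left out.

```python
def count_restarts(probe_results, failure_threshold=3):
    """Simulate a health-check loop and return how many restarts it triggers.

    probe_results: sequence of booleans, one per periodic probe (True = healthy).
    A container is restarted after `failure_threshold` consecutive failures;
    any successful probe resets the failure counter.
    """
    restarts = 0
    consecutive_failures = 0
    for healthy in probe_results:
        if healthy:
            consecutive_failures = 0
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts += 1             # the orchestrator replaces the container
            consecutive_failures = 0  # the new container starts with a clean count
    return restarts


if __name__ == "__main__":
    probes = [True, False, False, True, False, False, False, False]
    print(count_restarts(probes))  # prints 1
```

A single successful probe resets the counter, so brief hiccups do not cause restarts; only a sustained run of failures does.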

How container orchestration works

The container orchestrator plays a central role in container management and deployment. It manages many containers with different versions, network configurations and relationships, and schedules them from its master node.

When you use a container orchestration tool, such as Kubernetes or Docker Swarm, you typically describe the configuration of the application in a YAML or JSON file, depending on the tool. These configuration files (e.g., docker-compose.yml) tell the orchestration tool where to pull container images from (e.g., Docker Hub), how to establish networking between the containers, how to mount storage volumes, and where to store logs for each container.
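A minimal sketch of such a definition is shown below, expressed as a Python dictionary that mirrors the structure of a Compose file: a `services` section whose entries set `image`, `ports`, `networks`, `volumes` and `logging`, plus top-level `networks` and `volumes` declarations. The service name, image tag, network and volume names are hypothetical. The helper checks one kind of mistake a tool would typically flag, namely a service joining a network that was never declared.

```python
import json

# Hypothetical service definition mirroring the keys of a docker-compose.yml file.
compose_config = {
    "services": {
        "web": {
            "image": "nginx:1.25",      # pulled from Docker Hub by default
            "ports": ["8080:80"],       # host port : container port
            "networks": ["frontend"],   # networking between containers
            "volumes": ["web-data:/usr/share/nginx/html"],  # named storage volume
            "logging": {                # where and how logs are kept
                "driver": "json-file",
                "options": {"max-size": "10m"},
            },
        },
    },
    "networks": {"frontend": {}},
    "volumes": {"web-data": {}},
}


def referenced_networks_defined(config: dict) -> bool:
    """Return True if every network a service joins is declared at the top level."""
    declared = set(config.get("networks", {}))
    return all(
        set(service.get("networks", [])) <= declared
        for service in config["services"].values()
    )


if __name__ == "__main__":
    print(json.dumps(compose_config, indent=2))
    print("networks consistent:", referenced_networks_defined(compose_config))
```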

Containers are deployed on hosts, usually in replicated groups. When it’s time to deploy a new container into a cluster, the container orchestration tool schedules the deployment and looks for the most appropriate host to place the container, based on predefined constraints. Once the container is running on the host, the orchestration tool manages its life cycle according to the specifications mentioned in the container’s definition file.
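The filter-then-choose placement step can be sketched in Python as follows. The label constraints, the CPU and memory figures and the "most free memory wins" rule are assumptions chosen for illustration, not the placement logic of any particular tool; Kubernetes, Swarm and Mesos each apply their own, richer policies. Host names and labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    cpu_free: float                 # unallocated CPU cores
    mem_free: int                   # unallocated memory in MiB
    labels: dict = field(default_factory=dict)


def eligible(host: Host, constraints: dict, cpu: float, mem: int) -> bool:
    """Filter step: the host must carry every required label and have enough room."""
    labels_ok = all(host.labels.get(key) == value for key, value in constraints.items())
    return labels_ok and host.cpu_free >= cpu and host.mem_free >= mem


def place(hosts: list, constraints: dict, cpu: float, mem: int):
    """Choose the eligible host with the most free memory (then CPU); None if none fit."""
    candidates = [h for h in hosts if eligible(h, constraints, cpu, mem)]
    if not candidates:
        return None  # the container stays pending until capacity appears
    best = max(candidates, key=lambda h: (h.mem_free, h.cpu_free))
    best.cpu_free -= cpu  # reserve resources on the chosen host
    best.mem_free -= mem
    return best.name


if __name__ == "__main__":
    hosts = [
        Host("node-a", 2.0, 2048, {"disk": "ssd"}),
        Host("node-b", 8.0, 16384, {"disk": "hdd"}),
        Host("node-c", 4.0, 8192, {"disk": "ssd"}),
    ]
    print(place(hosts, {"disk": "ssd"}, cpu=1.0, mem=1024))  # prints node-c
```

Here node-b has the most free memory overall but is filtered out by the `disk` constraint, so the choice falls to node-c.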

There are many options for container orchestration, such as Docker's native Swarm mode, Amazon Elastic Container Service (ECS) and Apache Mesos, a strong open source framework. Among these options, Apache Mesos stands out because it can schedule both containerised and non-containerised workloads (for example, Hadoop or Spark jobs) on the same cluster.

The advantages of container orchestration tools are:

- Increased portability: Containerised applications run the same way across environments, and individual services can be scaled with a single command without affecting the entire application.

- Simple and fast deployment: New containerised application instances can be created quickly to handle increasing traffic load.

- Enhanced productivity: Simplified installation process and decreased dependency errors.

- Improved security: Application isolation improves Web application security by separating each application’s process into different containers.

Figure 1 illustrates how container orchestration works.

How to select a container management and orchestration tool

Some of the factors to consider when selecting a tool are listed below, but the final choice will depend on the user's requirements.

- CNI networking: A good tool should provide simple network connectivity between services (for example, through Container Network Interface, or CNI, plugins), so that developers don't spend time writing special-purpose code to locate dependencies.

- Simplicity: The tool should be easy to understand, and its interface should keep all the options needed for real-time container operations close at hand.

- Active development: The tool should be under active development, so that bugs are fixed and new features are added at regular intervals.

- Cloud vendor neutrality: The tool chosen should not be tied to any single cloud provider.

A deep dive into Kubernetes

Kubernetes has established itself as the de facto standard for container orchestration. Originally developed at Google, it became the flagship project of the Cloud Native Computing Foundation (CNCF), which is backed by key players such as Google, Amazon Web Services (AWS), Microsoft, IBM, Intel, Cisco and Red Hat. Kubernetes continues to gain popularity with DevOps practitioners because it lets them deliver a Platform-as-a-Service (PaaS) that abstracts the hardware layer away from development teams. Today it runs on AWS, Microsoft Azure and the Google Cloud Platform (GCP), as well as in on-premise installations.

Kubernetes is an open source platform that enables developers to deploy containerised applications and services, and to scale, schedule and monitor those containers. It is also highly extensible and portable, which means it can run in a wide range of environments and be used alongside other technologies, such as service meshes.

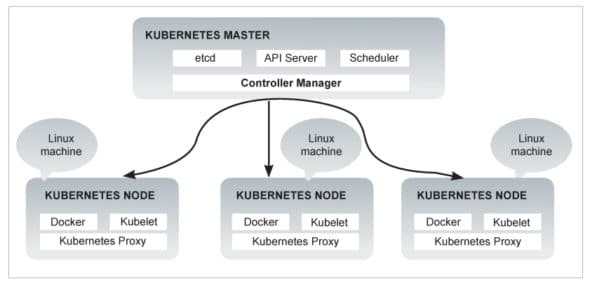

Figure 2 highlights the Kubernetes architecture.

Kubernetes follows a client-server architecture. The master (control plane) is installed on one machine, and the worker nodes run on separate Linux machines.

Kubernetes: Key components of master

- etcd: This stores the configuration information and state used by every node in the cluster. It is a highly available, distributed key-value store that can be replicated across multiple nodes; in Kubernetes, the API server is the component that reads from and writes to it.

- API server: The API server exposes all operations on the cluster through the Kubernetes API. Because it implements a standard interface, different tools and libraries can readily communicate with it. Kubeconfig is a configuration file, used by client-side tools such as kubectl, that holds the server address and credentials needed to communicate with the API server.

- Controller manager: This component runs most of the controllers that regulate the state of the cluster and perform routine tasks. Each controller watches the shared state of the cluster and makes changes to move the current state towards the desired state.

- Scheduler: This is one of the key components of the Kubernetes master and is responsible for distributing the workload. It tracks resource utilisation on the cluster nodes and assigns each new workload to a node that has enough available resources.
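The controller manager's job of moving the current state towards the desired state can be sketched as a reconciliation function. In this Python sketch, "state" is reduced to replica counts per workload, and the function and workload names are hypothetical. Kubernetes controllers, such as the ReplicaSet controller, follow the same compare-and-correct pattern, but against objects stored through the API server, and they rerun it whenever a watched object changes.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Compare desired and observed replica counts and return corrective actions.

    Both arguments map a workload name to a replica count.
    """
    actions = []
    for name in sorted(set(desired) | set(actual)):
        want = desired.get(name, 0)
        have = actual.get(name, 0)
        if have < want:
            actions.append(("create", name, want - have))
        elif have > want:
            actions.append(("delete", name, have - want))
    return actions


def apply_actions(actions: list, actual: dict) -> None:
    """Stand-in for real work: update observed state as if every action succeeded."""
    for verb, name, count in actions:
        actual[name] = actual.get(name, 0) + (count if verb == "create" else -count)
        if actual[name] == 0:
            del actual[name]


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    actual = {"web": 1, "worker": 2, "legacy": 1}
    actions = reconcile(desired, actual)
    print(actions)  # prints [('delete', 'legacy', 1), ('create', 'web', 2)]
    apply_actions(actions, actual)
    print(reconcile(desired, actual))  # prints []
```

Because the function compares states rather than replaying events, running it again after a partial failure simply produces the remaining corrections.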

Kubernetes: Key components of node (client)

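- Docker: This is the container runtime that runs the encapsulated application containers in a lightweight operating environment. Other runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O, can fill the same role.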

- Kubelet service: This is a small service on each node that relays information to and from the control plane. It communicates with the API server, rather than with the etcd store directly, to read configuration details and write status values, and it receives commands and work from the master components.

- Kubernetes proxy service: This proxy service runs on each node and helps make services available outside the host. It forwards requests to the correct containers and can perform primitive load balancing.
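The proxy's "primitive load balancing" can be illustrated with a round-robin sketch. This is not how kube-proxy is implemented: in its iptables mode it programs kernel rules that pick a backend at random, and its IPVS mode defaults to round-robin. The Python below only models the mapping from one stable service address to a changing set of container endpoints; the class name and IP addresses are hypothetical.

```python
import itertools


class ServiceProxy:
    """Toy proxy mapping a stable service address to a set of backend endpoints."""

    def __init__(self) -> None:
        self._rotations = {}  # service address -> itertools.cycle over its endpoints

    def set_endpoints(self, service: str, endpoints: list) -> None:
        """Replace a service's backends, e.g. after containers are added or removed."""
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        self._rotations[service] = itertools.cycle(list(endpoints))

    def route(self, service: str) -> str:
        """Return the backend that should receive the next request (round-robin)."""
        return next(self._rotations[service])  # raises KeyError for unknown services


if __name__ == "__main__":
    proxy = ServiceProxy()
    proxy.set_endpoints("10.96.0.20:80", ["10.244.1.5:8080", "10.244.2.7:8080"])
    for _ in range(3):
        print(proxy.route("10.96.0.20:80"))
```

Clients keep using the same service address while the set of backends behind it changes, which is what lets containers come and go without reconfiguring callers.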

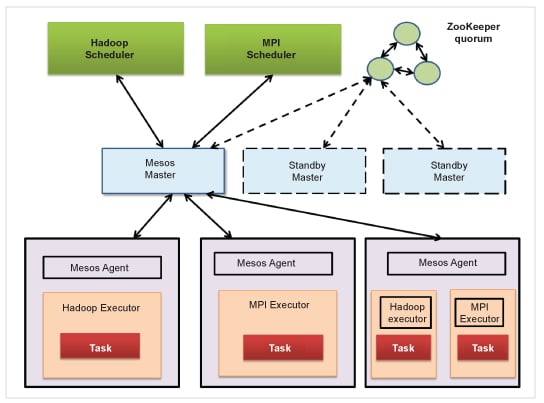

Deep dive into Apache Mesos

Apache Mesos is an open source software project originally developed at the University of California, Berkeley, and since widely adopted by organisations such as Twitter, Uber and PayPal. Mesos' lightweight interface lets it scale easily to 10,000 nodes or more. Its APIs support popular languages such as Java, C++ and Python, and it supports high availability out of the box. Unlike Swarm or Kubernetes, however, Mesos only manages the cluster, so a number of frameworks have been built on top of it, including Marathon, a 'production-grade' container orchestration platform.

Mesos is a cluster resource manager that targets the principal requirements of:

- Efficient isolation and resource sharing across distributed applications

- Optimal utilisation of resources in the cluster

- Support for a wide variety of frameworks

- Hardware heterogeneity

- High scalability, to clusters of tens of thousands of nodes

- Fault tolerance

The architectural components of Apache Mesos are listed below.

- Mesos master: This is a mediator between an agent’s resources and the frameworks, and undertakes the task of fine-grained resource sharing among frameworks.

- Mesos agent: This primarily performs resource management on physical nodes and also runs executors of frameworks.

- Mesos framework: This has two parts: a scheduler and an executor. The scheduler registers with the master and decides which offered resources to accept, and the executor runs on agents and carries out the framework's tasks.
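The split between the master, which offers resources, and a framework's scheduler, which decides what to accept, can be sketched in Python as below. It models only the framework side: each offer describes spare CPU and memory on one agent, and the scheduler packs pending tasks into offers that fit and declines the rest. The class and method names are hypothetical (the method name loosely echoes the `resourceOffers` callback of the Mesos scheduler API), and real frameworks must also handle offer filters and task status updates.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent: str
    cpus: float
    mem: int  # MiB


@dataclass
class Task:
    name: str
    cpus: float
    mem: int  # MiB


class PackingScheduler:
    """Hypothetical framework scheduler that packs pending tasks into offers."""

    def __init__(self, tasks):
        self.pending = list(tasks)
        self.launched = []  # (task name, agent) pairs

    def resource_offers(self, offers):
        """Launch tasks into offers that fit; return agents whose offers were declined."""
        declined = []
        for offer in offers:
            cpus, mem = offer.cpus, offer.mem
            accepted = False
            for task in list(self.pending):  # iterate over a copy while removing
                if task.cpus <= cpus and task.mem <= mem:
                    cpus -= task.cpus
                    mem -= task.mem
                    self.pending.remove(task)
                    self.launched.append((task.name, offer.agent))
                    accepted = True
            if not accepted:
                declined.append(offer.agent)
        return declined


if __name__ == "__main__":
    scheduler = PackingScheduler([Task("web-1", 1.0, 512), Task("web-2", 1.0, 512),
                                  Task("batch-1", 4.0, 4096)])
    # Offers as the master might forward them from two agents.
    print(scheduler.resource_offers([Offer("agent-1", 2.0, 2048), Offer("agent-2", 0.5, 256)]))
    print(scheduler.launched)
```

This two-level design keeps the master simple: it only decides which framework sees which resources, while each framework applies its own placement logic.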

Some of the frameworks that run on Mesos are:

- Chronos: Fault-tolerant job scheduler for a Mesos cluster.

- Marathon: Container orchestration platform for long-running applications that keeps them running through hardware or software failures.

- Aurora: Service scheduler for running long-running services that take advantage of Mesos' scalability, fault tolerance and resource isolation.

- Hadoop: Data processing.

- Spark: Data processing.

- Jenkins: Allows workers to be launched dynamically on a Mesos cluster depending on the workload.

When to containerise a cloud-native application

Containers, part of a more general software approach called cloud-native, are small software packages that, ideally, perform small, well-defined tasks. Container images include all the software, including settings, libraries and other dependencies, needed to run them. Containers are better suited to frequent changes, whether for technical or business reasons, and this agility aligns them well with cloud architectures.

Cloud-native refers to a set of characteristics and an underlying development methodology for applications and services that are scalable, reliable and have a high level of performance. Containers help accelerate the development and deployment processes, make workloads portable (and even mobile) between different servers and clouds, and are the ideal material from which to build software-defined infrastructure. Critically, most of the software infrastructure for containers is open source, developed by a broad community and backed by many large companies.

In some respects, the close link between containers and the cloud may seem surprising, because containers don't run only in the cloud. You could run containers on a local, on-premise server if you wanted. Still, there are plenty of good reasons to pair containers with the cloud:

- You can deploy the same container in any cloud. You can also typically use the same open source tools to manage containers in any cloud. This means that containers maximise mobility between clouds.

- Containers allow you to deploy applications in the cloud without having to worry about the nuances of a particular cloud provider’s virtual servers or compute instances.

- Cloud vendors can use containers to build other types of services, such as serverless computing.

- Containers offer security benefits for applications running in the cloud. They add another layer of isolation between your application and the host environment, without requiring you to run a full virtual server.

So, while it's certainly true that you don't need a cloud to use containers, containers can make cloud-based applications easier to deploy. Containers and the cloud go hand in hand in our cloud-native world.