Here’s a quick look at how microservices and containerisation work, with a few best practices thrown in.

Microservices can be developed in any programming language. Each microservice focuses on completing one task, and there is no fixed rule for how small it should be. Because each service can be scaled on its own, the parts of an application that need more capacity can be scaled up without scaling up the entire application. The major characteristics of microservices are:

Service independence: As microservices are autonomous, each microservice can change independently of other services.

Self-contained: Microservices are easily replaceable and upgradeable and promote single ownership of the service.

Decentralised: Because of their architectural style, microservices decentralise patterns, languages and standards, as well as responsibility and governance for components and data.

Resiliency: If one microservice goes down, it need not bring the whole application down, provided the services that depend on it handle the failure gracefully.

Polyglot architecture: Microservices architecture supports multiple technologies.

Single responsibility: A service is aligned to a single business activity and is responsible for that. It delivers the complete business logic necessary to fulfill that business activity.

Scalability: Microservices can be deployed and scaled independently of each other.

Decoupled: Microservices implement a single business function. This helps in maintaining minimum dependency on other services.

Implementation agnostic: Microservices support multiple development platforms and technologies, and can be deployed in containers whatever technology they are built with.

Blackbox: Microservices act as black boxes for the systems that use them; no internal details are exposed outside the service boundary.

Bounded context: This is one of the most important characteristics of microservices. Each microservice owns a clearly bounded part of the business domain, so other services do not need to know anything about its internal architecture.

Innovation friendly: New versions of small independent services can be deployed rapidly, making it easier to experiment and innovate.

Quality: Composability and responsibility, more maintainable code, better scaling and optimisation are all characteristics of microservices.

End-to-end ownership: Microservices bring about a cultural shift for the project team. The development team maintains each service it builds, as opposed to the usual practice of handing it over to a separate support and maintenance team.

Independent governance: A single team has complete ownership of the microservice lifecycle and owns all aspects of the microservice, including governance. Governance is therefore decentralised and autonomous.

Manageability: The smaller code bases of microservices are easier for developers to understand, which makes changes and deployments easier.
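The resiliency characteristic depends on callers tolerating a failed dependency. The article does not name a mechanism; one common pattern is a circuit breaker, shown below as a minimal Python sketch. After a configurable number of consecutive failures the breaker "opens" and returns a fallback immediately until a cooldown has passed, so one broken service does not tie up its callers. The `fetch_recommendations` dependency and all parameter values are hypothetical.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stop calling a failing dependency for a while and serve a fallback instead."""

    def __init__(self, max_failures: int = 3, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock            # injectable so the logic can be tested
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            # Open: skip the remote call until the cooldown has elapsed.
            if self.clock() - self.opened_at < self.cooldown_s:
                return fallback()
            self.opened_at = None     # cooldown over: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            return fallback()
        self.failures = 0             # any success closes the breaker again
        return result


def fetch_recommendations() -> list:
    # Hypothetical call to another microservice that is currently down.
    raise ConnectionError("recommendation service unavailable")


if __name__ == "__main__":
    breaker = CircuitBreaker(max_failures=2, cooldown_s=10.0)
    for _ in range(3):
        # The caller keeps working, just without recommendations.
        print(breaker.call(fetch_recommendations, fallback=lambda: []))
```

Production systems usually get this behaviour from a library or a service mesh rather than hand-written code; the sketch only shows the idea.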

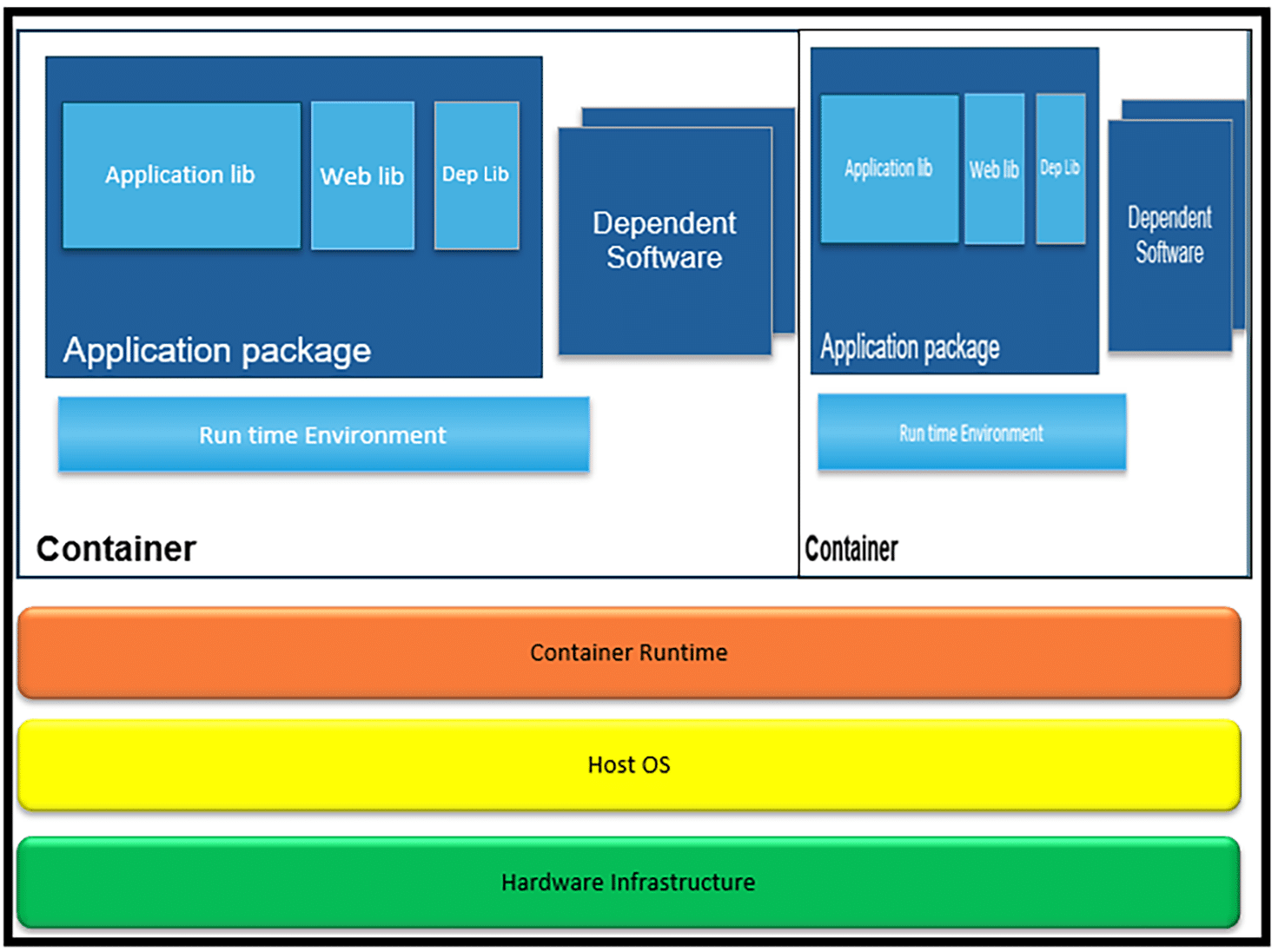

Containers

Containers are packages of software that include everything that they need to run. They encapsulate microservices code, dependencies, libraries, binaries, and more, providing a high degree of isolation, as well as fast initialisation and execution.

Containerisation ensures consistency across development, testing and production environments, as a container behaves the same way regardless of where it is deployed. Orchestrators are used to manage containerised, microservices-based applications at scale. Docker is the most popular tool for building and running containers, and Kubernetes is the most popular framework for orchestrating multiple containers in an enterprise environment.

Containers provide a minimal footprint, allowing for faster startup times and efficient resource utilisation. This is essential for rapid scaling and maintaining a low mean time to recovery (MTTR) during incidents.

Once built, container images are stored in container registries (such as Docker Hub or private registries). These registries act as a source of truth for deploying the most current versions of the microservices.

Container features include:

- Ability to reduce the memory footprint to the bare minimum.

- With a container image, we can bundle the application along with its runtime and dependencies. This makes the application very portable.

- Containers are very lightweight. They use less memory and CPU than VMs running similar workloads.

- They can be built locally, deployed and run anywhere, either in the cloud or on-premises.

- Containerised services can be started very quickly, often in well under a second once the image is available on the host. They can be scaled up or down with ease.

The need for container orchestration

Orchestration is about automating deployment and operational efforts when managing containerised microservices. Key considerations are:

- Deploying for scalability and availability.

- Implementing automated health checks and monitoring.

- Setting resource limits.

- Applying the critical security updates and patches released by the platform provider to the orchestration platform.

- Automating backups so that the environment can be restored or replicated quickly.

- Implementing business continuity planning.
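Two of these considerations, automated health checks and resource limits, are declared per container in Kubernetes. The sketch below builds one container specification as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. It would sit inside the `containers` list of a pod template. The image name, port, `/healthz` and `/ready` paths and the resource figures are placeholders, not recommendations.

```python
import json


def container_spec(name: str, image: str, port: int) -> dict:
    """Container spec with liveness/readiness probes and resource requests/limits."""
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Liveness: the kubelet restarts the container if this check keeps failing.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "initialDelaySeconds": 10,
            "periodSeconds": 15,
        },
        # Readiness: the pod only receives Service traffic while this check passes.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": port},
            "periodSeconds": 5,
        },
        # Requests guide scheduling; limits cap what the container may consume.
        "resources": {
            "requests": {"cpu": "100m", "memory": "128Mi"},
            "limits": {"cpu": "500m", "memory": "256Mi"},
        },
    }


if __name__ == "__main__":
    spec = container_spec("orders", "registry.example.com/orders:1.4.2", 8080)
    print(json.dumps(spec, indent=2))
```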

Kubernetes, Docker Swarm and Apache Mesos are popular open source container orchestration solutions.

Kubernetes

Kubernetes is an orchestration framework for deploying, scaling, and managing containers. It automates the deployment of containerised microservices.

Key Kubernetes components include:

Pods: A pod is the smallest deployable unit and can house one or more containers. Pods are ephemeral and are not expected to live long. Persistent volumes can be assigned to pods and, depending on the volume's access mode, shared between pods on the same node or across nodes.

Deployments: These define the desired state (e.g., the number of pod replicas) and manage rolling updates to ensure availability.

Services: These provide stable network endpoints and load balancing, enabling reliable service discovery for inter-service communication.

Infrastructure features: Kubernetes offers built-in capabilities like auto-scaling, self-healing (rescheduling failed pods), and configuration management (using ConfigMaps and Secrets), which are crucial for running microservices at scale reliably.
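Pods, Deployments and Services fit together through labels: a Deployment declares how many replicas of a pod template to run, and a Service selects those pods by label to give them one stable endpoint. The sketch below, for a hypothetical `orders` service and image, builds both objects as Python dictionaries and prints them as a JSON `List` that could be passed to `kubectl apply -f`. The replica count and rolling-update settings are illustrative assumptions.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # desired state: pod replica count
            "selector": {"matchLabels": labels},  # which pods this Deployment manages
            # Rolling update: add one new pod at a time, never go below the desired count.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service(name: str, port: int, target_port: int) -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},  # load-balances across pods with this label
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            deployment("orders", "registry.example.com/orders:1.4.2", 3, 8080),
            service("orders", 80, 8080),
        ],
    }
    print(json.dumps(manifest, indent=2))
```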

Its features are:

- Quick deployment of applications.

- Portable — can be used with public, private, hybrid and multi-cloud.

- Extensible — can be modular, pluggable, hookable and composable.

- Automated deployment and replication of containers.

- Scaling applications in or out on the fly, without downtime.

- Load balancing over a group of containers.

- Rolling updates of application containers.

- Resilience due to automated rescheduling of failed containers.

- Controlled exposure of network ports to systems outside the cluster.

- Limits hardware usage to required resources only.

- Self-healing, with auto-placement, auto-restart, auto-replication and auto-scaling.
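Auto-scaling of pods is typically handled by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a simplified sketch of that rule only. The real controller also accounts for missing metrics, pods that are not yet ready and stabilisation windows, all omitted here, and the min/max bounds shown are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale the replica count in proportion to metric / target."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6 pods.
print(desired_replicas(4, 90, 60))  # 6
# 63% against a 60% target is within the 10% tolerance -> stays at 4.
print(desired_replicas(4, 63, 60))  # 4
```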

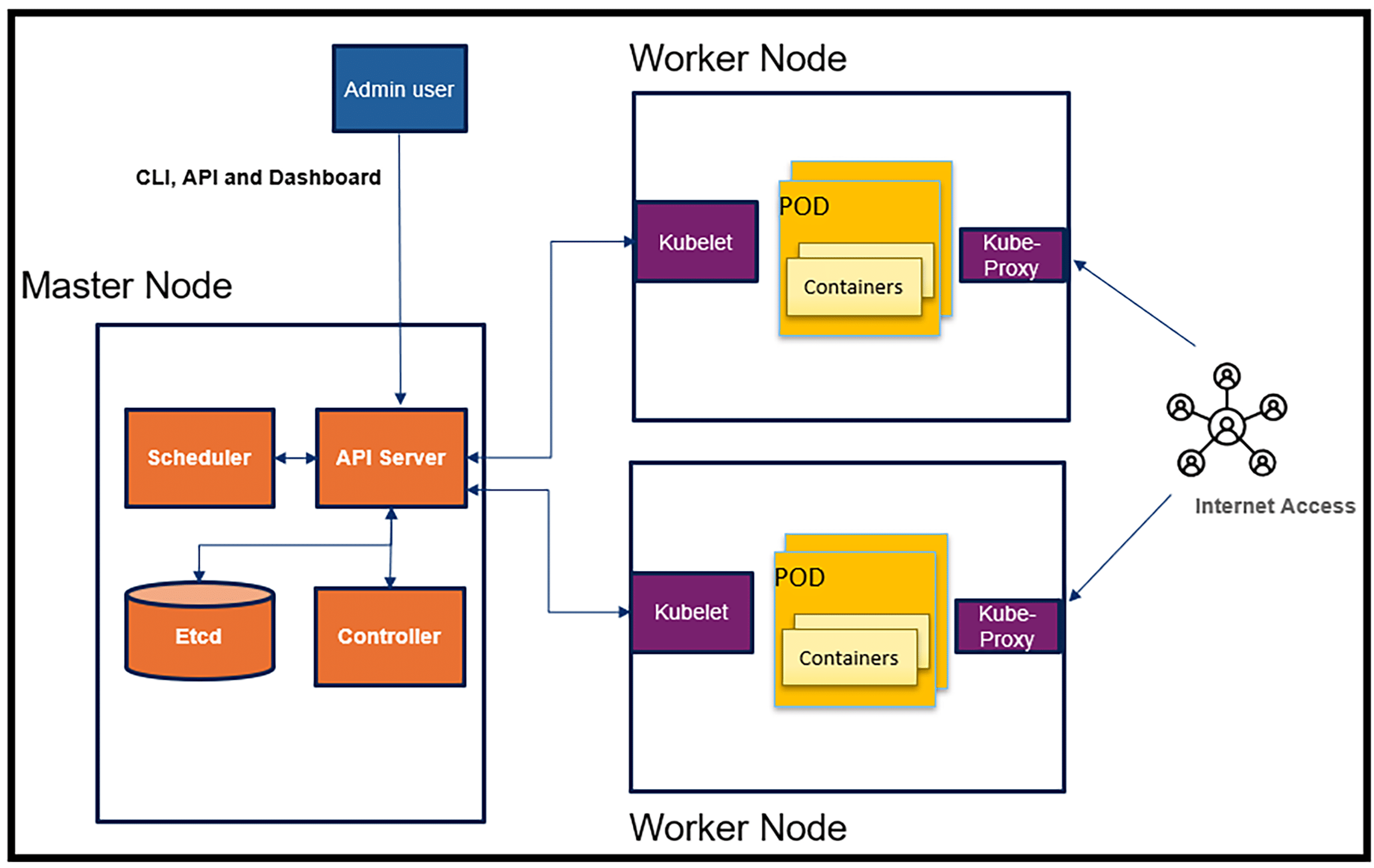

Here’s a quick overview of Kubernetes architecture (Figure 2):

- Kubernetes users communicate with the API server and apply the desired state.

- The master node is responsible for managing the Kubernetes cluster. Master nodes actively enforce the desired state on worker nodes.

- Worker nodes run the application pods and provide the networking that lets containers communicate.

- The pod is the unit of scheduling.

- The kubelet is the agent on each node that receives pod definitions from the master and makes sure the described containers are running.

- Kube-proxy is the network proxy on each node; it watches the API server for Service changes and maintains the network rules that forward traffic to the right pods.

- Etcd is the distributed key-value store for cluster state and configuration. It can run on the master nodes or on separate machines outside them.

- A cluster can have one or more master nodes, worker nodes and etcd instances.
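The way the master "actively enforces the desired state" is usually described as a reconciliation loop: compare the desired state with the observed state and act on the difference. The toy Python simulation below illustrates only that idea. The `Cluster` class and its pod names are hypothetical and bear no relation to real controller code, which runs continuously and reacts to change notifications from the API server rather than being called by hand.

```python
import itertools
from typing import List


class Cluster:
    """Toy stand-in for observed state: the names of running pods."""

    def __init__(self) -> None:
        self.running = set()
        self._ids = itertools.count(1)

    def start_pod(self, app: str) -> None:
        self.running.add(f"{app}-{next(self._ids)}")

    def stop_pod(self, pod: str) -> None:
        self.running.discard(pod)


def reconcile(cluster: Cluster, app: str, desired: int) -> List[str]:
    """One pass of a control loop: move observed replicas towards the desired count."""
    pods = sorted(p for p in cluster.running if p.startswith(f"{app}-"))
    actions = []
    for _ in range(desired - len(pods)):  # too few: start replacements
        cluster.start_pod(app)
        actions.append("start")
    for pod in pods[desired:]:            # too many: stop the surplus
        cluster.stop_pod(pod)
        actions.append(f"stop {pod}")
    return actions


if __name__ == "__main__":
    cluster = Cluster()
    print(reconcile(cluster, "orders", 3))  # ['start', 'start', 'start']
    cluster.stop_pod("orders-2")            # simulate a crashed pod
    print(reconcile(cluster, "orders", 3))  # ['start']: self-healing
    print(reconcile(cluster, "orders", 3))  # []: already converged
```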

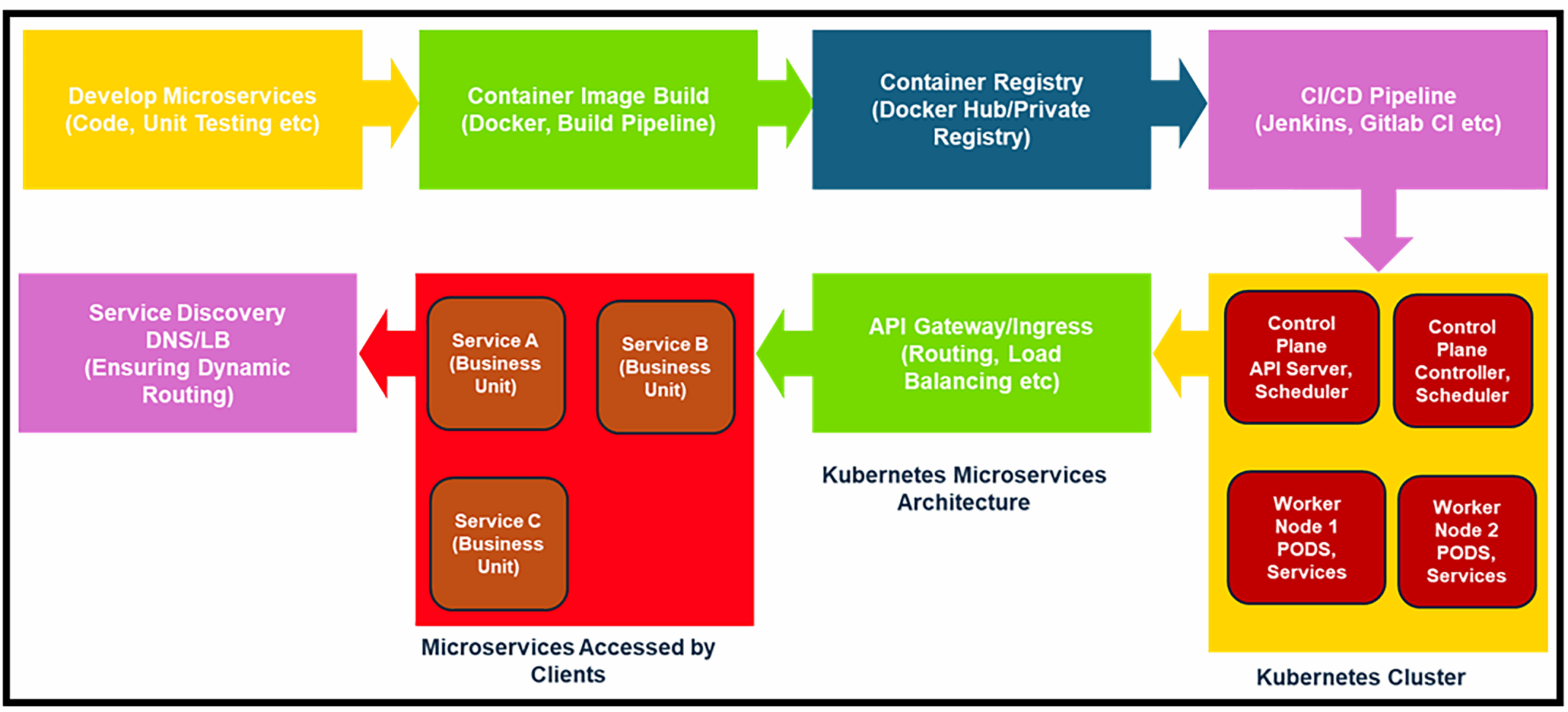

End-to-end flow of microservices deployment

In microservices architecture, each service is independently developed, packaged and deployed. Containerisation (using tools like Docker) ensures that each microservice runs in the same environment from development to production, while Kubernetes takes care of orchestration: handling the deployment, scaling and management of these containerised workloads.

Here’s how microservices deployment flows from development through containerisation to deployment in a Kubernetes cluster (Figure 3):

Develop microservices: Developers write code for discrete business functions (each microservice).

Container image build: Using tools like Docker, the source code is packaged into an image together with all its dependencies.

Container registry: Image artifacts are saved in registries (e.g., Docker Hub) for storage and distribution.

CI/CD pipeline: Automated processes build, test, and trigger deployments.

Kubernetes cluster: The CI/CD pipeline deploys containerised applications into a Kubernetes cluster.

Control plane and worker nodes: The cluster’s control plane (with the API server, scheduler, etc.) handles orchestration, while worker nodes host the actual application pods.

API gateway/Ingress controller: Incoming client requests are managed through an API gateway or ingress controller that looks up the appropriate service endpoints.

Microservices (Service A/B/C): Independent microservices, each aligned with a business capability, are deployed in pods across the nodes.

Service discovery: Kubernetes provides internal DNS and load balancing so that service endpoints are dynamically discovered and routed.
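Kubernetes' internal DNS gives every Service a name of the form `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is `cluster.local` by default. A minimal sketch of how one microservice might build the address of another from that convention follows; the `orders` service, `shop` namespace, port and path are hypothetical. Pods in the same namespace can also use the short name (`orders`) thanks to DNS search paths.

```python
def service_url(service: str, namespace: str = "default", port: int = 80,
                path: str = "/", cluster_domain: str = "cluster.local") -> str:
    """Build an in-cluster URL from the Kubernetes Service DNS naming convention."""
    host = f"{service}.{namespace}.svc.{cluster_domain}"
    return f"http://{host}:{port}{path}"


print(service_url("orders", namespace="shop", port=8080, path="/orders/42"))
# http://orders.shop.svc.cluster.local:8080/orders/42
```

The name stays stable while individual pods come and go, which is what lets services find each other without hard-coded pod addresses.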

Best practices for implementing microservices

Business capability-based design: Microservices should be designed around business capabilities, which typically calls for teams that specialise in particular capabilities with different requirements, such as order approval, shipping, delivery and returns management, all of which are part of order fulfillment.

Product mindset: Enterprises should aim to focus not on more projects, but on products. Services should be developed as independent products that are well documented, with each responsible for a single business capability.

Data store for each microservice: The same data store should not be used for all microservices. Each microservices team should select the database that is best suited to its service.
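A minimal sketch of the database-per-service idea, using Python's built-in `sqlite3` with in-memory databases as stand-ins for each team's chosen data store. The hypothetical `InventoryService` and `OrderService` each own a private connection; the order service never queries the inventory tables directly and goes through the inventory service's public method instead, which in a real deployment would be an API call over the network.

```python
import sqlite3
from typing import Optional


class InventoryService:
    """Owns the inventory data; other services must use its interface."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")  # private to this service
        self._db.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
        self._db.execute("INSERT INTO stock VALUES ('book', 5)")

    def reserve(self, sku: str, qty: int) -> bool:
        # Decrement only if enough stock is available.
        cur = self._db.execute(
            "UPDATE stock SET qty = qty - ? WHERE sku = ? AND qty >= ?",
            (qty, sku, qty),
        )
        self._db.commit()
        return cur.rowcount == 1


class OrderService:
    """Owns the order data and talks to inventory only through its interface."""

    def __init__(self, inventory: InventoryService) -> None:
        self._db = sqlite3.connect(":memory:")  # a separate, private store
        self._db.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, sku TEXT, qty INTEGER)")
        self._inventory = inventory

    def place_order(self, sku: str, qty: int) -> Optional[int]:
        if not self._inventory.reserve(sku, qty):  # stands in for a remote call
            return None
        cur = self._db.execute(
            "INSERT INTO orders (sku, qty) VALUES (?, ?)", (sku, qty))
        self._db.commit()
        return cur.lastrowid


if __name__ == "__main__":
    orders = OrderService(InventoryService())
    print(orders.place_order("book", 2))  # 1
    print(orders.place_order("book", 4))  # None: only 3 left in stock
```

Because the order service depends only on `reserve`, the inventory team could swap SQLite for a different database without the order service noticing.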

Code maturity: All code in a microservice should have a similar degree of maturity and stability. To add new code to, or rewrite code in, a microservice that already works well, the best approach is usually to create a new microservice for the new or altered code and leave the existing microservice in place. This allows teams to release and test the new code iteratively until it is stable and performs well.
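Running a new microservice alongside the existing one implies some way of sending it a controlled share of traffic while it is tested. The article does not prescribe a mechanism; the sketch below shows one common option, weighted (canary) routing, with hypothetical `orders-v1` and `orders-v2` names. Hashing a request or user ID keeps each caller on the same version, and the percentage can be raised step by step as confidence in the new service grows.

```python
import hashlib


def choose_backend(request_id: str, canary_percent: int,
                   stable: str = "orders-v1", canary: str = "orders-v2") -> str:
    """Route a fixed share of callers to the new service version."""
    # Map the ID deterministically to a bucket 0..99 (same ID -> same bucket).
    digest = hashlib.sha256(request_id.encode()).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return canary if bucket < canary_percent else stable


if __name__ == "__main__":
    ids = [f"user-{i}" for i in range(1000)]
    share = sum(choose_backend(i, 10) == "orders-v2" for i in ids) / len(ids)
    print(f"canary share: {share:.1%}")  # roughly 10%
```

In a Kubernetes cluster this split is more often configured in an ingress controller or service mesh than in application code.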

Containerisation: There should be only one microservice per container. Minimal base images should be used to avoid pulling in unintended packages and libraries. The container image should be kept small by removing redundant dependencies, and each image should carry its own version. Signing images and referencing them by digest enhances trust and reliability.
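Referencing an image by digest pins its exact content rather than a tag that can later be moved. In a registry, the digest is the SHA-256 hash of the image manifest, written as `sha256:<hex>`. The sketch below mimics that with Python's `hashlib` on hypothetical manifest bytes to show why a digest reference detects any change; the registry and repository names are placeholders, and this is not a substitute for actual image signing tools.

```python
import hashlib


def content_digest(manifest: bytes) -> str:
    """Digest string in the registry style: algorithm prefix plus hex SHA-256."""
    return "sha256:" + hashlib.sha256(manifest).hexdigest()


def pinned_reference(repository: str, manifest: bytes) -> str:
    # e.g. registry.example.com/orders@sha256:<64 hex digits>
    return f"{repository}@{content_digest(manifest)}"


def verify(reference: str, manifest: bytes) -> bool:
    """True only if the fetched manifest matches the digest in the reference."""
    _, _, expected = reference.partition("@")
    return expected == content_digest(manifest)


if __name__ == "__main__":
    manifest = b'{"schemaVersion": 2, "layers": []}'  # hypothetical manifest
    ref = pinned_reference("registry.example.com/orders", manifest)
    print(ref)
    print(verify(ref, manifest))         # True
    print(verify(ref, manifest + b" "))  # False: content changed
```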

Monitoring: Microservices consist of many moving parts, so it is all the more important to measure everything efficiently and simply, with mechanisms such as response time notifications, service error notifications and dashboards. Use log management tools such as Splunk and monitoring tools such as AppDynamics.
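Response-time and error notifications usually come down to comparing a few aggregates against thresholds. The sketch below computes a 95th-percentile latency (nearest-rank method) and a server-error rate over a window of hypothetical request records and returns alert messages. The thresholds are illustrative assumptions; in practice, monitoring tools evaluate rules like these for you.

```python
import math
from typing import List, Tuple


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def check_alerts(requests: List[Tuple[float, int]], p95_ms: float = 300.0,
                 max_error_rate: float = 0.01) -> List[str]:
    """requests: (latency in ms, HTTP status) pairs for one time window."""
    alerts = []
    p95 = percentile([ms for ms, _ in requests], 95)
    if p95 > p95_ms:
        alerts.append(f"p95 latency {p95:.0f} ms exceeds {p95_ms:.0f} ms")
    errors = sum(1 for _, status in requests if status >= 500)
    rate = errors / len(requests)
    if rate > max_error_rate:
        alerts.append(f"error rate {rate:.1%} exceeds {max_error_rate:.1%}")
    return alerts


if __name__ == "__main__":
    window = [(120.0, 200)] * 95 + [(950.0, 503)] * 5  # hypothetical window
    for alert in check_alerts(window):
        print("ALERT:", alert)
```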

Leverage built-in Kubernetes tools: Use commands like ‘kubectl logs’, ‘kubectl describe’ and ‘kubectl get events’ to troubleshoot misbehaving pods. These commands provide critical insights into container logs, pod state and recent cluster events, helping to pinpoint issues quickly.
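These three commands can be scripted so that the same evidence is collected every time a pod misbehaves. The Python helper below only assembles standard `kubectl` invocations: `logs --previous` shows output from the last terminated container, and events are filtered to the pod with a field selector and sorted by creation time. Actually running them requires `kubectl` on the PATH and access to the cluster; the pod and namespace names are hypothetical.

```python
import shutil
import subprocess
from typing import List


def triage_commands(pod: str, namespace: str = "default") -> List[List[str]]:
    """Standard kubectl invocations for a first look at a misbehaving pod."""
    ns = ["-n", namespace]
    return [
        ["kubectl", "logs", pod, *ns],                # current container logs
        ["kubectl", "logs", pod, *ns, "--previous"],  # logs from before the last restart
        ["kubectl", "describe", "pod", pod, *ns],     # status, probes, recent events
        ["kubectl", "get", "events", *ns,
         "--field-selector", f"involvedObject.name={pod}",
         "--sort-by=.metadata.creationTimestamp"],    # this pod's events, oldest first
    ]


def run_triage(pod: str, namespace: str = "default") -> None:
    """Run each command and print its output (needs kubectl and cluster access)."""
    if shutil.which("kubectl") is None:
        raise RuntimeError("kubectl not found on PATH")
    for cmd in triage_commands(pod, namespace):
        print("$", " ".join(cmd))
        # check=False: carry on even if one command fails (e.g. no previous container).
        result = subprocess.run(cmd, capture_output=True, text=True, check=False)
        print(result.stdout or result.stderr)


if __name__ == "__main__":
    # Print the commands only; call run_triage(...) against a real cluster.
    for cmd in triage_commands("orders-7d9f8c6b5-x2k4q", namespace="shop"):
        print(" ".join(cmd))
```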

Centralised logging and monitoring: Implement a centralised logging system such as Elasticsearch/Kibana, Fluentd, or Loki to aggregate logs across the cluster. Similarly, monitoring tools like Prometheus, Grafana, or Kubernetes-native solutions can help visualise performance metrics, resource usage, and alert you to anomalous behaviour in real time.
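Log aggregators such as these work best when each service writes one structured record per line to standard output, which the container runtime captures and a collector ships to the central store. A minimal sketch using only Python's standard `logging` module follows; the field names (including `service`) are assumptions, not a schema required by any of the tools named above.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def __init__(self, service: str) -> None:
        super().__init__()
        self.service = service

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "service": self.service,  # lets the aggregator filter by microservice
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(service: str) -> logging.Logger:
    logger = logging.getLogger(service)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler(sys.stdout)  # containers log to stdout
        handler.setFormatter(JsonFormatter(service))
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = make_logger("orders")
    log.info("order %s placed", 42)
```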

Distributed tracing and debugging: Use distributed tracing to follow individual requests as they pass through multiple services, and employ debugging and observability tools designed specifically for Kubernetes to gain insights into service mesh layers, ingress controllers and cluster networking patterns.
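Distributed tracing relies on every service passing a trace context along with each outgoing request. A widely used format is the W3C Trace Context `traceparent` header, `version-traceid-parentid-flags`, for example `00-<32 hex digits>-<16 hex digits>-01`. The sketch below generates and propagates such a header by hand to show the mechanics; in practice an instrumentation library such as OpenTelemetry does this. The function names are hypothetical, and only version `00` of the header is handled.

```python
import re
import secrets
from typing import Optional

# Only version 00 of the traceparent header is handled in this sketch.
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace, e.g. at the API gateway for an incoming client request."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex digits
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex digits
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def child_traceparent(incoming: Optional[str]) -> str:
    """Header for an outgoing call: same trace ID, new span ID, same flags."""
    match = TRACEPARENT.match(incoming or "")
    if (match is None or set(match.group(1)) == {"0"}
            or set(match.group(2)) == {"0"}):
        return new_traceparent()  # missing or invalid context: start a new trace
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


if __name__ == "__main__":
    at_gateway = new_traceparent()
    to_orders = child_traceparent(at_gateway)
    to_inventory = child_traceparent(to_orders)
    # All three share the trace ID, so a tracing backend can join them into one request.
    for header in (at_gateway, to_orders, to_inventory):
        print(header)
```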

Developers must decide early whether a microservices or monolithic architecture aligns best with their application’s long-term scalability and usability goals. Choosing microservices from the outset can be more advantageous when significant expansion and frequent updates are anticipated.

Containers, Kubernetes, and microservices embody a collaborative evolution. The agility of microservices pairs with the efficient delivery of containers. Kubernetes provides robust orchestration and can scale individual microservices independently based on demand.

Containerisation allows for consistent behaviour of microservices from local development to production, reducing environment-specific issues. Lightweight containers and Kubernetes’ self-healing capabilities lead to lower MTTR, as failing services can be quickly restarted or replaced. The independent deployment model minimises risks during updates. One service can be updated without the need to redeploy the entire application, reducing downtime and complexity.

Developers should be familiar with new tools such as centralised logging, distributed tracing, and container monitoring to handle the increased complexity.

Disclaimer: The views expressed in this article are those of the author. Tricon Solutions LLC does not subscribe to the substance, veracity or truthfulness of this opinion.