Data governance plays a critical role in ensuring effective data management in an organisation. Businesses that invest in it gain a clear advantage over those that don’t.

The data that businesses deal with today is diverse. Managing it efficiently is critical for making informed decisions, delivering better customer experiences and driving innovation. Enterprises therefore need modern data governance strategies that tackle current data challenges and turn data into a key asset that increases business efficiency.

Data governance is a key component of data management. It is a holistic approach to managing, organising, monitoring, improving and leveraging data to create business value. Data governance establishes the overall strategy for data management including the process of acquiring, organising, storing and utilising data throughout the data life cycle within an enterprise. It involves a set of practices, processes, procedures, policies and technologies to ensure that the data is accurate, consistent, available and secure. It also includes business process management and risk management.

The basic objectives of data governance are:

- Enable faster, better-informed business decisions

- Facilitate seamless knowledge sharing across the enterprise

- Eliminate ambiguity and foster trust in data assets

- Create value through collaboration within existing operational workflows

- Streamline adherence to security and privacy regulations

According to IDC, global data creation is expected to reach 175 zettabytes by 2025. A Gartner report predicts that 60% of organisations will fail to realise the value of their AI initiatives due to weak data governance frameworks. In a 2024 Gartner survey of chief data and analytics officers (CDAOs), 89% of respondents agreed that effective data and analytics governance is essential for business and technology innovation.

Challenges in data governance

Enterprises today face a range of data governance challenges that hinder effective data utilisation and decision-making. One of the most significant issues is data being stored in silos—scattered across platforms, tools, channels, and business units—which makes access difficult and leads to inefficiencies and inconsistencies. Ensuring data accuracy, completeness, and timeliness remains a persistent struggle. Additionally, the lack of oversight in monitoring both incoming data quality and its usage across the organisation results in degraded data value. Converting offline data into usable digital formats poses another hurdle. Enterprises also grapple with managing regulatory compliance while trying to maintain data security and privacy.

A poorly defined data management strategy can lead to overwhelming volumes of disorganised and unmanageable data. This, in turn, increases the risk of sensitive business or customer information being leaked or misused. Furthermore, the use of unsecured data from various sources opens the door to data breaches. The complexity is compounded by the adoption of modern digital applications that rely on polyglot storage architectures—such as relational databases, columnar databases, key-value stores, and graph databases—which vary depending on application functionality, making unified governance even more challenging.

Next-gen data management capabilities

To manage the sheer volume and variety of data being generated today, businesses need to upgrade their data management capabilities.

Data processing techniques: Processing unstructured data involves techniques such as entity extraction, concept extraction and sentiment analysis, which draw on natural language processing (NLP) and ontologies. Machine learning must be used to automate this extraction at scale.
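
As a rough illustration of entity extraction, the sketch below pulls a few entity types out of free text with regular expressions. It is a rule-based baseline, not the machine learning approach described above; the `PATTERNS` table and `extract_entities` function are hypothetical names, and the patterns are deliberately simplistic.

```python
import re

# Hypothetical, deliberately simple patterns; trained ML extractors would replace these.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "date": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "amount": re.compile(r"\b(?:USD|EUR|INR) ?\d+(?:\.\d{2})?"),
}


def extract_entities(text: str) -> dict[str, list[str]]:
    """Return all matches for each entity type found in free text."""
    return {label: pattern.findall(text) for label, pattern in PATTERNS.items()}


note = "Refund of USD 120.50 requested by jane.doe@example.com on 2024-03-15."
print(extract_entities(note))
# → {'email': ['jane.doe@example.com'], 'date': ['2024-03-15'], 'amount': ['USD 120.50']}
```

Rules like these are brittle against real-world text, which is exactly why the paragraph above calls for machine learning; the sketch only shows what "extraction" produces.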

Agile data delivery: Adopting an agile development methodology, which delivers data in small, frequent increments, can reduce the overall cost of data delivery.

Digital 360 view of the customer and products: Customers interact with enterprises through many channels, including social media. The ability to create a single view of each customer and of the company’s products, combining internal and external data, is critical in the digital world.

On-demand data services: Businesses should provide virtualised access to data through on-demand data services, for both online and offline use.

Automated metadata extraction: Data flows into enterprise systems faster than businesses (especially SMEs) can manually inspect data structures and extract metadata. Automated, ontology-based metadata extraction is therefore critical.
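
A minimal sketch of automated metadata extraction, assuming records arrive as Python dictionaries: `infer_schema` (a hypothetical helper) scans a batch and records, for each field, the observed value types and how often the field is null or missing. It captures only structural metadata; the ontology-based extraction mentioned above would additionally map fields to business concepts.

```python
from typing import Any


def infer_schema(records: list[dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Infer simple structural metadata for every field seen in a batch of records."""
    fields = sorted({key for record in records for key in record})
    schema = {}
    for name in fields:
        values = [record.get(name) for record in records]
        present = [v for v in values if v is not None]
        schema[name] = {
            # Python type names observed for non-null values
            "types": sorted({type(v).__name__ for v in present}),
            # Absent keys and explicit None values both count as nulls
            "null_count": len(values) - len(present),
            "nullable": len(present) < len(values),
        }
    return schema


rows = [
    {"id": 1, "email": "a@example.com", "score": 0.9},
    {"id": 2, "email": None, "score": 7},
    {"id": 3, "email": "c@example.com"},
]
for field_name, meta in infer_schema(rows).items():
    print(field_name, meta)
```

Here `score` is reported with both `float` and `int` types, the kind of inconsistency a data steward would want flagged automatically rather than discovered later.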

Real-time analytics: Businesses need multi-channel applications and decision management systems that capture and analyse interactions across digital processes as they happen.
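
One building block of real-time analytics is a sliding-window aggregate. The hypothetical `SlidingWindowCounter` below counts interactions per channel over the last N seconds, assuming events arrive in timestamp order; stream processing engines apply the same idea at scale, with fault tolerance and handling for late or out-of-order events.

```python
from collections import Counter, deque


class SlidingWindowCounter:
    """Count events per channel over the most recent `window_seconds` (hypothetical helper)."""

    def __init__(self, window_seconds: float) -> None:
        self.window = window_seconds
        self.events = deque()    # (timestamp, channel) pairs, oldest first
        self.counts = Counter()  # live count per channel inside the window

    def add(self, timestamp: float, channel: str) -> None:
        self.events.append((timestamp, channel))
        self.counts[channel] += 1
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        # Assumes in-order timestamps, so expired events are always at the left end.
        while self.events and self.events[0][0] <= now - self.window:
            _, channel = self.events.popleft()
            self.counts[channel] -= 1
            if self.counts[channel] == 0:
                del self.counts[channel]

    def snapshot(self, now: float) -> dict[str, int]:
        """Return current per-channel counts for the window ending at `now`."""
        self._evict(now)
        return dict(self.counts)


counter = SlidingWindowCounter(window_seconds=60)
for ts, channel in [(0, "web"), (10, "mobile"), (30, "web")]:
    counter.add(ts, channel)
print(counter.snapshot(now=65))  # → {'web': 1, 'mobile': 1}
```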

Data archival: Compliance and performance requirements drive the need for archival of both structured and unstructured data.
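
Archival usually starts with a retention rule that decides which records leave primary storage. The sketch below assumes each record carries a hypothetical `last_accessed` date and that the retention period is a fixed number of days; actually moving the selected records to cheaper storage is left out.

```python
from datetime import date, timedelta


def split_for_archival(
    records: list[dict], today: date, retention_days: int
) -> tuple[list[dict], list[dict]]:
    """Partition records into (active, to_archive) using a hypothetical `last_accessed` field."""
    cutoff = today - timedelta(days=retention_days)
    active, to_archive = [], []
    for record in records:
        # Records not accessed since the cutoff become candidates for archival storage.
        if record["last_accessed"] < cutoff:
            to_archive.append(record)
        else:
            active.append(record)
    return active, to_archive


records = [
    {"id": 1, "last_accessed": date(2023, 6, 1)},
    {"id": 2, "last_accessed": date(2024, 12, 1)},
]
active, to_archive = split_for_archival(records, today=date(2025, 1, 1), retention_days=365)
print([r["id"] for r in active], [r["id"] for r in to_archive])  # → [2] [1]
```

In practice the retention period would come from the compliance requirements the paragraph mentions, and may differ per data category.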

Principles of data governance

Data governance principles are a structured set of ideas that collectively define and guide the development of a solution architecture, from values through to design and implementation, harmonising decision-making across an organisation.

These include:

- Data is an asset: Data is an asset that has a specific and measurable value for an enterprise.

- Data is shared: Users have access to the data necessary to perform their tasks; therefore, data is shared across enterprise functions and organisations.

- Data trustee: Each data element has a trustee accountable for data quality.

- Common vocabulary and data definitions: Data definitions are consistent throughout the enterprise, and they are understandable and available to all users.

- Data security: Data is protected from unauthorised use and disclosure.

- Data privacy: Privacy and data protection are built in throughout the life cycle of the data. All data sharing conforms to relevant regulatory and business requirements.

- Data integrity: Each enterprise must be aware of and abide by its responsibilities for providing source data and for establishing and maintaining adequate controls over the use of personal or other sensitive data.

- Data transparency: Data governance processes must be transparent. How, when, and why each data-related decision was introduced must be explained clearly to all personnel.

- Fit for purpose: A next-generation information ecosystem needs fit-for-purpose tools, because no single technology can meet the needs of all workloads and processing techniques. These workloads include text processing, data discovery, dynamic data services, high-performance analysis, streaming analytics, etc.
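
Two of these principles, a named trustee for every data element and a common vocabulary, lend themselves to automated checks. The sketch below models a business glossary with a hypothetical `GlossaryTerm` structure and flags terms that lack a trustee or a definition, or that are defined differently in two places.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GlossaryTerm:
    """One entry in a shared business glossary (hypothetical structure)."""
    name: str
    definition: str
    trustee: Optional[str]  # person or team accountable for the element's quality


def governance_gaps(glossary: list[GlossaryTerm]) -> list[str]:
    """List violations of the 'data trustee' and 'common vocabulary' principles."""
    issues = []
    first_definition: dict[str, str] = {}
    for term in glossary:
        key = term.name.strip().lower()  # treat "Active customer" and "active customer" as one term
        if not term.trustee:
            issues.append(f"{term.name}: no trustee assigned")
        if not term.definition.strip():
            issues.append(f"{term.name}: missing definition")
        if key in first_definition and first_definition[key] != term.definition:
            issues.append(f"{term.name}: conflicting definitions")
        first_definition.setdefault(key, term.definition)
    return issues


glossary = [
    GlossaryTerm("Active customer", "Purchased in the last 12 months", "Sales Ops"),
    GlossaryTerm("active customer", "Logged in within the last 30 days", "Marketing"),
    GlossaryTerm("Churn rate", "Share of customers lost per quarter", None),
]
for issue in governance_gaps(glossary):
    print(issue)
```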

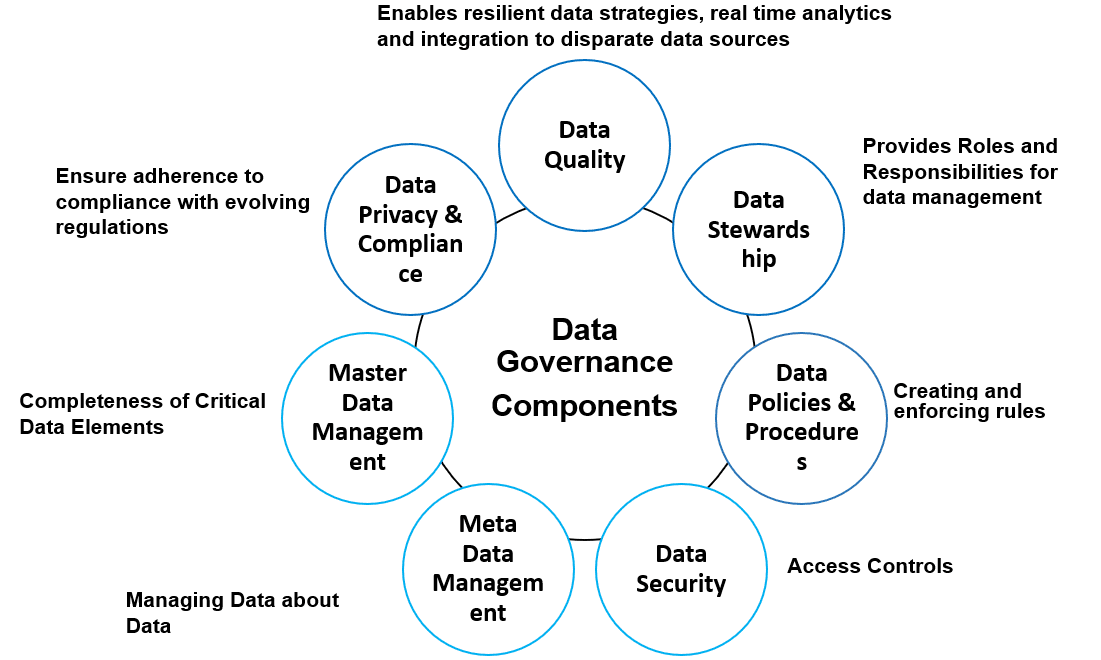

Key components of data governance

Data governance ensures data quality, accuracy, and compliance with regulatory requirements, building trust in the data. It must be aligned with enterprise architecture governance to realise the objectives of the business.

Open source data governance tools include Amundsen, DataHub, Apache Atlas, Magda, OpenMetadata, Egeria, and TrueDat. These tools offer features such as metadata management, data cataloguing and collaboration.

Data quality: This ensures the accuracy, completeness, and consistency of data. Data quality management involves identifying and correcting errors, standardising formats, and maintaining a high level of data integrity.

Popular open source data quality tools include Cucumber, Deequ, dbt Core, MobyDQ, Great Expectations, and Soda Core. These tools help automate data validation, cleaning and monitoring.
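
Tools such as Great Expectations and Soda Core let teams declare checks like the ones below; this sketch does not use their APIs. It is plain Python, assuming records are dictionaries, and shows three common check types: completeness of required fields, format validity (a deliberately loose email pattern) and uniqueness of `id`, after a standardisation step that trims whitespace and lower-cases emails.

```python
import re
from typing import Any

# Deliberately loose pattern for illustration; real email validation is more involved.
EMAIL_RE = re.compile(r"^[\w.+-]+@[\w-]+(?:\.[\w-]+)+$")


def standardise(row: dict[str, Any]) -> dict[str, Any]:
    """Correct common formatting problems: stray whitespace and mixed-case emails."""
    clean = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
    if isinstance(clean.get("email"), str):
        clean["email"] = clean["email"].lower()
    return clean


def validate(rows: list[dict[str, Any]], required: list[str]) -> dict[str, Any]:
    """Run completeness, validity and uniqueness checks on standardised rows."""
    rows = [standardise(r) for r in rows]
    issues = []
    seen_ids = set()
    complete = 0
    for i, row in enumerate(rows):
        missing = [f for f in required if row.get(f) in (None, "")]
        if missing:
            issues.append((i, "missing: " + ", ".join(missing)))
        else:
            complete += 1
        email = row.get("email")
        if email and not EMAIL_RE.match(email):
            issues.append((i, "invalid email"))
        if row.get("id") in seen_ids:
            issues.append((i, "duplicate id"))
        seen_ids.add(row.get("id"))
    return {"completeness": complete / len(rows) if rows else 1.0, "issues": issues}


batch = [
    {"id": 1, "email": " Ann@Example.com ", "country": "IN"},
    {"id": 2, "email": "not-an-email", "country": ""},
    {"id": 1, "email": "bob@example.com", "country": "UK"},
]
report = validate(batch, required=["id", "email", "country"])
print(round(report["completeness"], 2), report["issues"])
# → 0.67 [(1, 'missing: country'), (1, 'invalid email'), (2, 'duplicate id')]
```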

Data stewardship: This involves assigning roles and responsibilities for data management. Data stewards are designated individuals or teams entrusted with overseeing the appropriate use, integrity, and secure storage of enterprise data. They serve as a vital bridge between IT and business units, ensuring that data conforms to the enterprise’s established quality and consistency standards. Key responsibilities include defining and standardising data elements, monitoring data quality, and collaborating with IT to resolve any technical challenges.

Data policies and procedures: These define how data is collected, stored, shared, and used, and how those rules are enforced. An enterprise’s central data management policies prescribe permitted and prohibited practices at every stage of the data life cycle. They ensure compliance with internal standards and external regulations.
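
Central policies can also be expressed as code, which makes permitted and prohibited practices testable. The following sketch assumes a hypothetical rule format of life-cycle stage, data class and target, where the first matching rule wins and anything not explicitly permitted is denied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    stage: str       # life-cycle stage: "collect", "store", "share", "use" or "*"
    data_class: str  # sensitivity label, e.g. "public" or "personal", or "*"
    target: str      # destination or consumer, e.g. "eu_region", "internal", or "*"
    allowed: bool


# Hypothetical central policy; rules are checked in order and the first match decides.
POLICY = [
    Rule("share", "personal", "third_party", False),  # explicit prohibition, checked first
    Rule("collect", "personal", "*", True),
    Rule("store", "personal", "eu_region", True),
    Rule("share", "personal", "*", True),             # other sharing of personal data
    Rule("use", "personal", "internal", True),
    Rule("*", "public", "*", True),
]


def _matches(pattern: str, value: str) -> bool:
    return pattern == "*" or pattern == value


def is_permitted(stage: str, data_class: str, target: str) -> bool:
    """Evaluate a proposed practice against the policy; unmatched practices are prohibited."""
    for rule in POLICY:
        if (_matches(rule.stage, stage)
                and _matches(rule.data_class, data_class)
                and _matches(rule.target, target)):
            return rule.allowed
    return False


print(is_permitted("store", "personal", "eu_region"))    # → True
print(is_permitted("share", "personal", "third_party"))  # → False
```

Deny-by-default keeps the policy safe when a new practice appears that nobody has written a rule for yet.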

Data security: Proper security protocols reduce the risk of data breaches and other threats, and safeguard sensitive information. Encryption, access controls, authentication, and intrusion detection systems help protect data across the life cycle.

Open source data security tools that are widely used include Metasploit, OSSEC, OpenVAS, Snort, KeePass, and ClamAV. These tools can be integrated into various security strategies to protect against a wide range of cyber threats.
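
Of the controls listed above, access control is the easiest to illustrate in a few lines. Below is a minimal role-based access control (RBAC) sketch that also records every decision in an audit trail; the roles, users and datasets are hypothetical, and a real deployment would back this with an identity provider and enforce it inside the data platform.

```python
# Hypothetical roles, users and datasets; "*" is a wildcard.
ROLE_PERMISSIONS = {
    "analyst": {("sales", "read")},
    "steward": {("sales", "read"), ("sales", "write"), ("customers", "read")},
    "admin": {("*", "*")},
}
USER_ROLES = {"asha": {"analyst"}, "ravi": {"steward"}, "ops": {"admin"}}

audit_log: list[tuple[str, str, str, str]] = []


def can_access(user: str, dataset: str, action: str) -> bool:
    """True if any of the user's roles grants `action` on `dataset`."""
    for role in USER_ROLES.get(user, set()):
        for allowed_dataset, allowed_action in ROLE_PERMISSIONS.get(role, set()):
            if allowed_dataset in ("*", dataset) and allowed_action in ("*", action):
                return True
    return False  # unknown users and ungranted actions are denied


def authorise(user: str, dataset: str, action: str) -> bool:
    """Check access and record the decision so repeated denials can be reviewed."""
    allowed = can_access(user, dataset, action)
    audit_log.append((user, dataset, action, "allow" if allowed else "deny"))
    return allowed


print(authorise("asha", "sales", "read"))      # → True
print(authorise("asha", "customers", "read"))  # → False
```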

Metadata management: This keeps track of data definitions, relationships, and structures. It’s essentially data about data. Metadata functions as the contextual glue that transforms isolated data points into coherent, actionable assets. It captures essential attributes covering:

- Creation timestamp

- Authorship and ownership of data

- Source provenance

- Relationships with other data elements

Leading open source metadata management tools are Apache Atlas, Amundsen, LinkedIn DataHub, Metacat Data Catalogue, OpenMetadata, and Marquez.
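
The attributes listed above map naturally onto a small record type. The sketch below is independent of the catalogue tools just named: a hypothetical `MetadataRecord` stores creation time, owner, source and upstream relationships, and `lineage` walks those relationships to list every element a dataset is derived from.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class MetadataRecord:
    """Hypothetical catalogue entry covering the attributes listed above."""
    name: str
    owner: str                                         # authorship and ownership
    source: str                                        # source provenance
    upstream: list[str] = field(default_factory=list)  # relationships to other elements
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def lineage(catalog: dict[str, MetadataRecord], name: str) -> list[str]:
    """Return every upstream element that `name` is derived from, directly or indirectly."""
    seen: list[str] = []
    stack = list(catalog[name].upstream)
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.append(current)
        if current in catalog:  # elements outside the catalogue are listed but not expanded
            stack.extend(catalog[current].upstream)
    return seen


catalog = {
    r.name: r
    for r in [
        MetadataRecord("raw_orders", owner="erp-team", source="ERP export"),
        MetadataRecord("raw_customers", owner="crm-team", source="CRM API"),
        MetadataRecord("orders_clean", owner="data-eng", source="pipeline",
                       upstream=["raw_orders"]),
        MetadataRecord("revenue_report", owner="finance", source="BI tool",
                       upstream=["orders_clean", "raw_customers"]),
    ]
}
print(lineage(catalog, "revenue_report"))  # → ['raw_customers', 'orders_clean', 'raw_orders']
```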

Master data management: Master data management (MDM) is a process for ensuring the accuracy, consistency, and completeness of critical data elements, such as customer data and product data. Master data is standardised, matched, merged, enriched, and validated according to governance rules.

Key open source players in the MDM area are Talend Open Studio for MDM, AtroCore, and Pimcore.
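
The standardise, match and merge steps can be sketched for customer data as follows. Assumptions: records are matched on a normalised email address, and a simple survivorship rule keeps the most recently updated non-empty value for each field. Real MDM tools use richer (often fuzzy) matching, and the enrichment and validation steps are omitted here.

```python
from collections import defaultdict
from datetime import date
from typing import Any


def standardise_customer(record: dict[str, Any]) -> dict[str, Any]:
    """Normalise the fields used for matching and display."""
    return {
        **record,
        "email": record["email"].strip().lower(),
        "name": " ".join(record["name"].split()).title(),
    }


def golden_records(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Match records on normalised email, then merge each group into one golden record."""
    groups: dict[str, list[dict[str, Any]]] = defaultdict(list)
    for record in map(standardise_customer, records):
        groups[record["email"]].append(record)

    merged = []
    for group in groups.values():
        group.sort(key=lambda r: r["updated"])  # oldest first
        golden: dict[str, Any] = {}
        for record in group:
            for key, value in record.items():
                if value not in (None, ""):
                    golden[key] = value  # newer non-empty values overwrite older ones
        golden.pop("id", None)
        golden["source_ids"] = [r["id"] for r in group]  # trace back to source systems
        merged.append(golden)
    return merged


customers = [
    {"id": "crm-1", "name": "  asha   rao", "email": "Asha.Rao@Example.com ",
     "phone": "", "updated": date(2024, 1, 5)},
    {"id": "web-7", "name": "Asha Rao", "email": "asha.rao@example.com",
     "phone": "555-0100", "updated": date(2024, 6, 1)},
    {"id": "crm-2", "name": "ben ng", "email": "ben@example.com",
     "phone": None, "updated": date(2023, 3, 3)},
]
for record in golden_records(customers):
    print(record)
```

Keeping `source_ids` on the golden record preserves traceability, so stewards can see which source rows contributed to each merged customer.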

Data storage: This determines where and how data is stored in enterprise data repositories. It covers both structured and unstructured data, held in stores such as databases, data warehouses and data lakes.

Popular open source tools in the data storage area are Hadoop, lakeFS, Cassandra, and Neo4j. These tools provide scalability, robustness and performance in managing and analysing large datasets in various applications.

Data privacy and compliance: This ensures adherence to regulations and ethical standards. Privacy controls prevent unauthorised access to, and misuse of, personal data.

Regulatory frameworks such as the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) impose stringent requirements on how businesses collect, process, and safeguard personal data.
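
One technical control that supports these regulations is pseudonymisation. The sketch below replaces direct identifiers with keyed HMAC-SHA256 tokens and masks email addresses; the field names and the hard-coded key are placeholders (a real key belongs in a secrets manager). Under the GDPR, pseudonymised data is still personal data, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac

# Placeholder only: in practice, load the key from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymise(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed, deterministic token (HMAC-SHA256, hex encoded)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the domain for analytics but hide most of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def protect(record: dict, identifiers: set[str], masked: set[str]) -> dict:
    """Return a copy of `record` with identifier fields tokenised and email fields masked."""
    out = dict(record)
    for name in identifiers.intersection(out):
        out[name] = pseudonymise(str(out[name]))
    for name in masked.intersection(out):
        out[name] = mask_email(out[name])
    return out


record = {"customer_id": "C-1001", "email": "jane@example.com", "country": "IN"}
print(protect(record, identifiers={"customer_id"}, masked={"email"}))
```

Because the token is deterministic for a given key, the same customer still joins across datasets, while anyone without the key cannot recompute it from a guessed identifier.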

Data metrics management: Defining and implementing robust business metrics and key performance indicators (KPIs) to quantify the enterprise-wide impact of data governance is critical to its success. These measures should be clearly articulated, inherently quantifiable, tracked longitudinally, and applied consistently each year to ensure comparability, accountability, and continuous improvement. Some of the metrics monitoring activities are:

- Aligning KPIs to strategic goals (e.g., data-quality gains, reduced time-to-insight, compliance rates, cost savings)

- Leveraging real-time dashboards for ongoing visibility

- Conducting annual KPI reviews to recalibrate targets and processes as the organisation evolves
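
The KPIs above can be computed from periodic snapshots and compared year on year. The `Snapshot` fields in this sketch are hypothetical stand-ins for a data-quality rate, compliance findings and time-to-insight, and the figures in the usage example are invented for illustration; an organisation would substitute its own measures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Hypothetical yearly measurements feeding the governance KPIs."""
    year: int
    records_checked: int
    records_passing: int      # records that passed all data-quality checks
    compliance_findings: int  # audit findings raised during the year
    hours_to_insight: float   # average time from data request to usable answer


def kpis(s: Snapshot) -> dict[str, float]:
    return {
        "data_quality_rate": s.records_passing / s.records_checked,
        "compliance_findings": float(s.compliance_findings),
        "hours_to_insight": s.hours_to_insight,
    }


def year_over_year(previous: Snapshot, current: Snapshot) -> dict[str, float]:
    """Change in each KPI between two years, computed the same way every year."""
    before, after = kpis(previous), kpis(current)
    return {name: after[name] - before[name] for name in before}


change = year_over_year(
    Snapshot(2023, records_checked=10_000, records_passing=9_200,
             compliance_findings=12, hours_to_insight=48.0),
    Snapshot(2024, records_checked=12_000, records_passing=11_400,
             compliance_findings=7, hours_to_insight=30.0),
)
for name, delta in change.items():
    print(f"{name}: {delta:+.2f}")
```

Computing every KPI through the same function each year is what keeps the figures comparable, as the paragraph above requires.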

Data governance ensures consistent, accurate, and trustworthy data across the enterprise, enabling smarter and more informed decisions. It enhances data integrity, reduces duplication, and improves productivity by streamlining data management. Centralised control helps lower data management costs, reduce the risk of data breaches, and optimise data storage. Governance also builds trust in data quality, promotes reuse, and ensures compliance with regulations and standards such as GDPR, CCPA, HIPAA, and PCI DSS.

Data governance is not a one-time activity but an ongoing journey, and it is a necessity rather than an option. Effective data governance combines processes, people, policies, standards, and metrics to ensure the effective and efficient use of data in helping an enterprise achieve its goals. By implementing data governance best practices, enterprises can maximise the value of their data.