This real-world implementation of a full-stack, microservices-based e-commerce platform uses open source technologies. The project demonstrates how open source tools can power scalable, reactive applications with production-grade architecture and provides insights into containerised deployments, observability, and service communication patterns.

In the modern digital ecosystem, building scalable and maintainable applications demands more than just writing code—it requires smart architecture, efficient communication between services, and seamless deployment pipelines. Microservices architecture has emerged as the go-to solution for developing complex, modular systems that can evolve rapidly without breaking the whole application.

This article dives into the development of a full-stack e-commerce platform built using open source technologies. From backend services written in Node.js and Express, to Kafka-powered asynchronous communication, to a containerised deployment on Kubernetes using Minikube—every layer is designed to reflect a real-world, production-grade microservices environment.

The frontend, built using Next.js, is tightly integrated with Clerk.dev for authentication and interacts with backend services through REST APIs and WebSockets for real-time responsiveness. This project serves as a hands-on blueprint for developers looking to build distributed, event-driven systems using open source tools—offering practical insights into architecture, deployment, and scalability.

System architecture

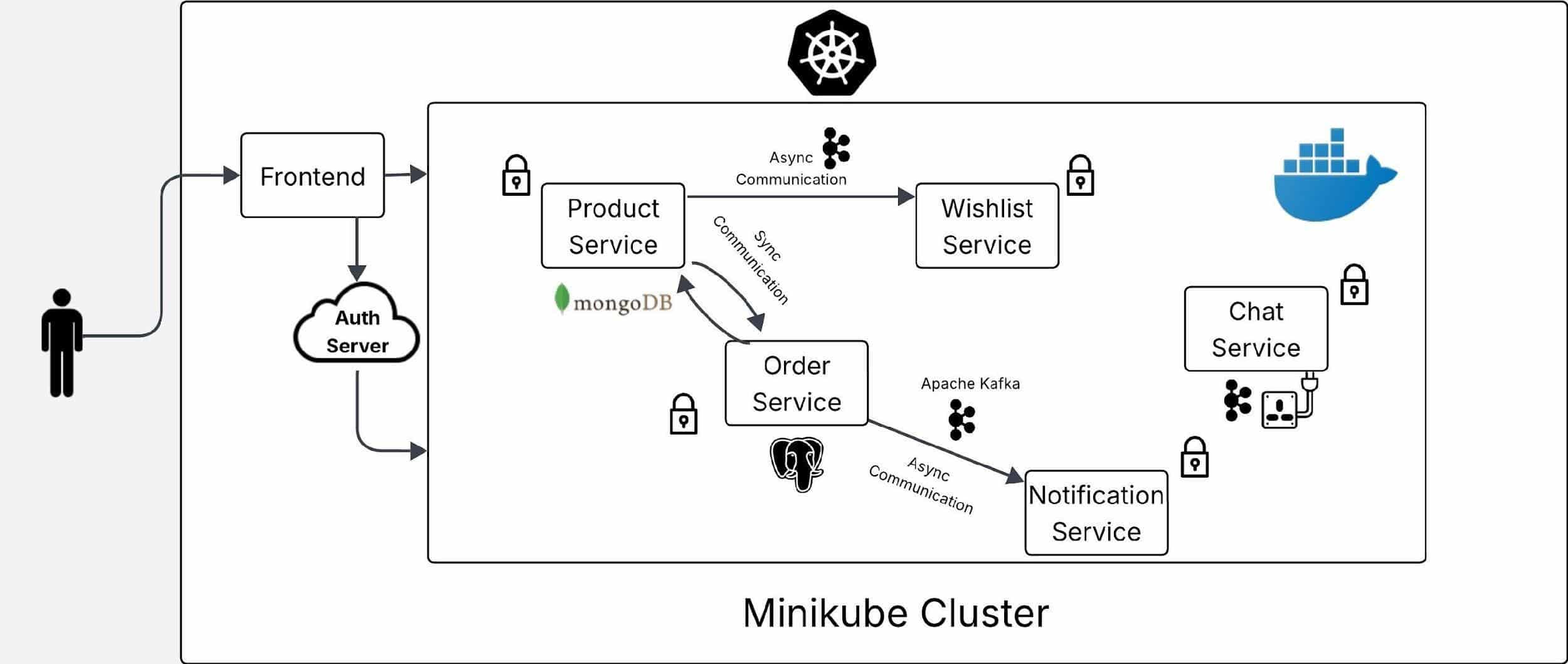

The architecture of the platform is based on a modular microservices approach, where each functional unit is isolated into an independent service. Figure 1 shows the system architecture of the e-commerce platform. This design promotes scalability, ease of maintenance, and rapid deployment.

Each microservice (Product, Order, Wishlist, Chat, and Notification) is responsible for a single domain and communicates with the other services through a hybrid model:

- Synchronous communication using REST APIs for immediate, request-response interactions (e.g., Wishlist fetching product details).

- Asynchronous communication using Apache Kafka, allowing services to publish and consume events decoupled from one another (e.g., Order service publishing to a notification topic).

All backend services are Dockerized and orchestrated via Kubernetes (Minikube), enabling features such as independent deployment of services, service discovery and internal routing via ClusterIP, autoscaling using Horizontal Pod Autoscaler (HPA) and environment-specific configuration through ConfigMaps and Secrets.

The platform uses a polyglot persistence strategy: MongoDB for semi-structured or dynamic data (Product, Chat, Wishlist) and PostgreSQL for transactional integrity (Order).

The frontend, built using Next.js, interacts with backend services over REST APIs and WebSocket connections, enabling real-time experiences such as live chat. Clerk.dev handles user authentication and access control using secure token-based sessions.

This architecture simulates real-world, production-level deployments and demonstrates how open source technologies can power scalable, reactive applications.

Technology stack

This e-commerce platform is built using a suite of modern, open source technologies, each selected for its robustness, flexibility, and community support. The stack spans backend APIs, asynchronous messaging, containerisation, orchestration, and frontend development.

Backend

Node.js: A non-blocking, event-driven runtime well suited to I/O-heavy workloads.

Express.js: A minimalist framework used to define RESTful API routes across services.

Apache Kafka: Powers asynchronous, event-driven communication between services using topics such as product-events, order-events, and chat-events.

kafkajs: Lightweight Kafka client for Node.js, used to implement producers and consumers.
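As a minimal sketch of what a producer sends, the helper below builds an object in the shape that a kafkajs producer's `send()` method accepts (`{ topic, messages: [{ key, value }] }`). The envelope fields (`eventId`, `type`, `occurredAt`, `data`) and the helper name `buildEventRecord` are hypothetical conventions for illustration, not the project's actual schema. Using the entity ID as the message key means Kafka routes all events for the same order to the same partition, which preserves their relative order.

```javascript
const { randomUUID } = require("node:crypto");

// Topic names as mentioned in this article.
const TOPICS = Object.freeze({
  PRODUCT: "product-events",
  ORDER: "order-events",
  CHAT: "chat-events",
});

// Hypothetical helper: builds a record in the shape kafkajs's producer.send() expects.
// The envelope fields are an assumed convention, not a Kafka requirement.
function buildEventRecord(topic, type, entityId, data) {
  if (!Object.values(TOPICS).includes(topic)) {
    throw new Error(`Unknown topic: ${topic}`);
  }
  const envelope = {
    eventId: randomUUID(), // lets consumers detect duplicate deliveries
    type, // e.g. "ORDER_PLACED"
    occurredAt: new Date().toISOString(),
    data,
  };
  return {
    topic,
    messages: [
      {
        key: String(entityId), // same key -> same partition -> ordered per entity
        value: JSON.stringify(envelope), // Kafka stores bytes; JSON is a common encoding
      },
    ],
  };
}

// With a connected kafkajs producer this would be sent as:
//   await producer.send(buildEventRecord(TOPICS.ORDER, "ORDER_PLACED", order.id, order));
const record = buildEventRecord(TOPICS.ORDER, "ORDER_PLACED", 42, { total: 499 });
console.log(record.topic, record.messages[0].key); // prints "order-events 42"
```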

Databases

MongoDB: Used in Product, Wishlist, and Chat services for handling semi-structured data with schema flexibility.

PostgreSQL: Used in the Order service where transactional integrity and relational data modelling are essential.

Prisma ORM: Used with PostgreSQL for schema validation and type-safe database operations.

Frontend

Next.js: React-based framework enabling server-side rendering, static site generation, and dynamic routing.

WebSocket: Used for real-time features such as chat and notifications.

Axios/Fetch API: For interacting with backend REST endpoints.

Clerk.dev: Provides secure user authentication, role-based access control, and JWT-based session handling.

Infrastructure and deployment

Docker: Each microservice is containerised to ensure consistent environments across development and deployment.

Kubernetes (Minikube): Orchestrates all services, managing pods, scaling, and service discovery.

Horizontal Pod Autoscaler (HPA): Automatically adjusts service replicas based on CPU utilisation.

This tech stack creates a solid foundation for building distributed, scalable applications while keeping development efficient and modular. Each tool plays a crucial role in transforming this project into a near-production-grade system.

Microservices overview and communication flow

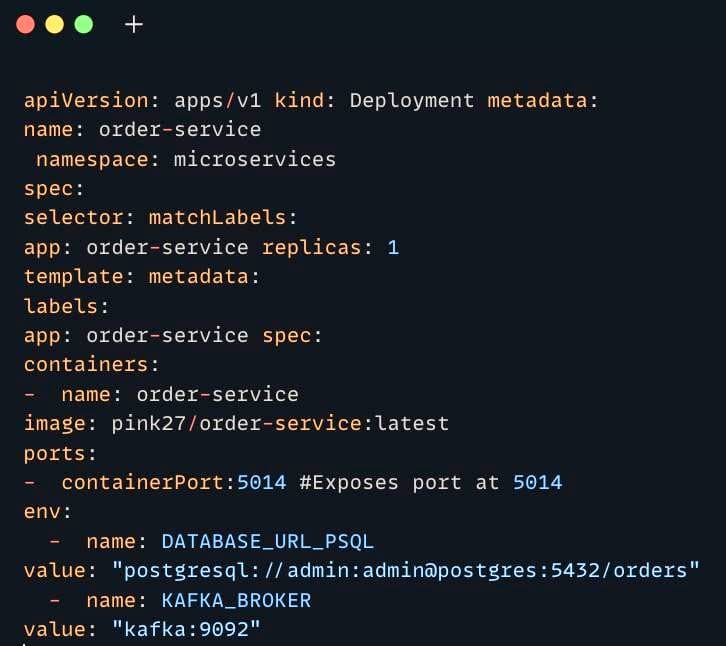

The platform’s backend is divided into five core microservices, each encapsulating a specific domain and operating independently. This modularity ensures better scalability, team ownership, and fault isolation. Figure 2 shows code snippets from the microservices, along with their respective databases and responsibilities. The complete source code for this project is available in our GitHub repository at https://github.com/pink-27/ThriftStore-For-College-Students. Interested readers are encouraged to clone the repository and explore the application.

Core microservices

Product service: Handles product listings, categories, and seller-specific data. Database: MongoDB

Order service: Manages order placement, payment status, and tracking. Database: PostgreSQL

Wishlist service: Allows users to save and manage products of interest. Database: MongoDB

Chat service: Enables real-time buyer-seller communication. Tech: WebSocket + MongoDB + Kafka

Notification service: Sends system-generated alerts triggered by Kafka events. Tech: Kafka + WebSocket (for frontend push)

Inter-service communication

A hybrid communication model is implemented for optimal responsiveness and scalability.

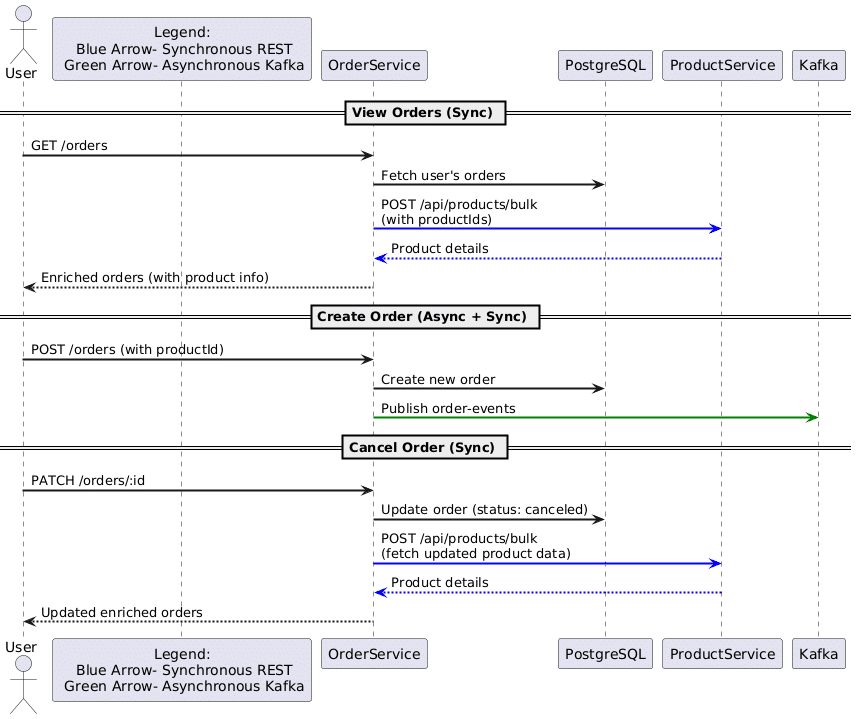

Synchronous (REST APIs): Used for direct data access and CRUD operations.

Example: Wishlist calls Product service to fetch product details.

Asynchronous (Apache Kafka): Enables decoupled, real-time workflows.

Example: When an order is placed, the Order service publishes an event to the order-events topic, and the Notification and Chat services consume it.
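The synchronous Wishlist-to-Product call can be sketched as follows, using the global `fetch` built into Node.js 18+. The base URL `http://product-service:5000`, the `/api/products/:id` route, the `PRODUCT_SERVICE_URL` variable, and the choice to skip deleted products are all hypothetical; the `fetchImpl` parameter exists only so the function can be exercised without a running cluster.

```javascript
// Hypothetical in-cluster address of the Product service (ClusterIP DNS name and port).
const PRODUCT_SERVICE_URL =
  process.env.PRODUCT_SERVICE_URL ?? "http://product-service:5000";

// Resolve wishlist product IDs into full product records over REST.
// fetchImpl defaults to Node's global fetch (Node 18+) and can be stubbed in tests.
async function getWishlistDetails(productIds, fetchImpl = globalThis.fetch) {
  const results = await Promise.all(
    productIds.map(async (id) => {
      const res = await fetchImpl(
        `${PRODUCT_SERVICE_URL}/api/products/${encodeURIComponent(id)}`,
        { signal: AbortSignal.timeout(2000) } // fail fast instead of stalling the caller
      );
      if (res.status === 404) return null; // product was removed; drop it from the list
      if (!res.ok) throw new Error(`Product service returned ${res.status}`);
      return res.json();
    })
  );
  return results.filter((product) => product !== null);
}
```

Because the user is waiting on this request, the timeout keeps a slow Product service from stalling the whole wishlist page; the asynchronous Kafka path has no such constraint.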

Each service subscribes only to the topics relevant to its business logic (e.g., order-events, chat-events, notification-events), which keeps responsibilities cleanly separated and lets each consumer scale independently.

Figure 3 depicts the sequence of operations for order management in this microservices architecture. It shows how the OrderService calls the ProductService synchronously over REST and publishes events asynchronously to Kafka when orders are viewed, created, and cancelled. The flow covers retrieving product details, creating orders, publishing order events, and updating order status, with coordinated communication between users, services, and databases.
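The fan-out described above can be modelled with a toy, in-memory stand-in for Kafka: every consumer group subscribed to a topic receives each event once, so Notification and Chat both see the same order-events message, while instances inside one group share the work. This is a teaching sketch with a hypothetical `ToyBroker` class and group names; it does not reproduce Kafka's partitions, offsets, or delivery guarantees, which the real services get from kafkajs consumers.

```javascript
// A toy, in-memory model of topic fan-out across consumer groups; not a Kafka client.
class ToyBroker {
  constructor() {
    this.topics = new Map(); // topic -> Map(groupId -> { handlers, next })
  }

  // Register one consumer instance (e.g. one pod) in a consumer group.
  subscribe(topic, groupId, handler) {
    if (!this.topics.has(topic)) this.topics.set(topic, new Map());
    const groups = this.topics.get(topic);
    if (!groups.has(groupId)) groups.set(groupId, { handlers: [], next: 0 });
    groups.get(groupId).handlers.push(handler);
  }

  // Every group gets the event once; instances inside a group take turns.
  // Simplification: real Kafka assigns partitions, not single messages, to group members.
  publish(topic, event) {
    const groups = this.topics.get(topic);
    if (!groups) return 0;
    for (const group of groups.values()) {
      const handler = group.handlers[group.next % group.handlers.length];
      group.next += 1;
      handler(event);
    }
    return groups.size; // number of groups that received the event
  }
}

const broker = new ToyBroker();
const received = [];
broker.subscribe("order-events", "notification-service", (e) => received.push(["notification", e.orderId]));
broker.subscribe("order-events", "chat-service", (e) => received.push(["chat", e.orderId]));
// Hypothetical subscription, included only to show topic isolation.
broker.subscribe("product-events", "wishlist-service", (e) => received.push(["wishlist", e.productId]));

broker.publish("order-events", { type: "ORDER_PLACED", orderId: "o-1" });
console.log(received); // the notification and chat handlers each ran once; wishlist did not
```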

This setup not only enhances performance under load but also simplifies debugging and horizontal scaling. REST handles immediate tasks, while Kafka ensures reactive behaviour and eventual consistency.

Deployment architecture

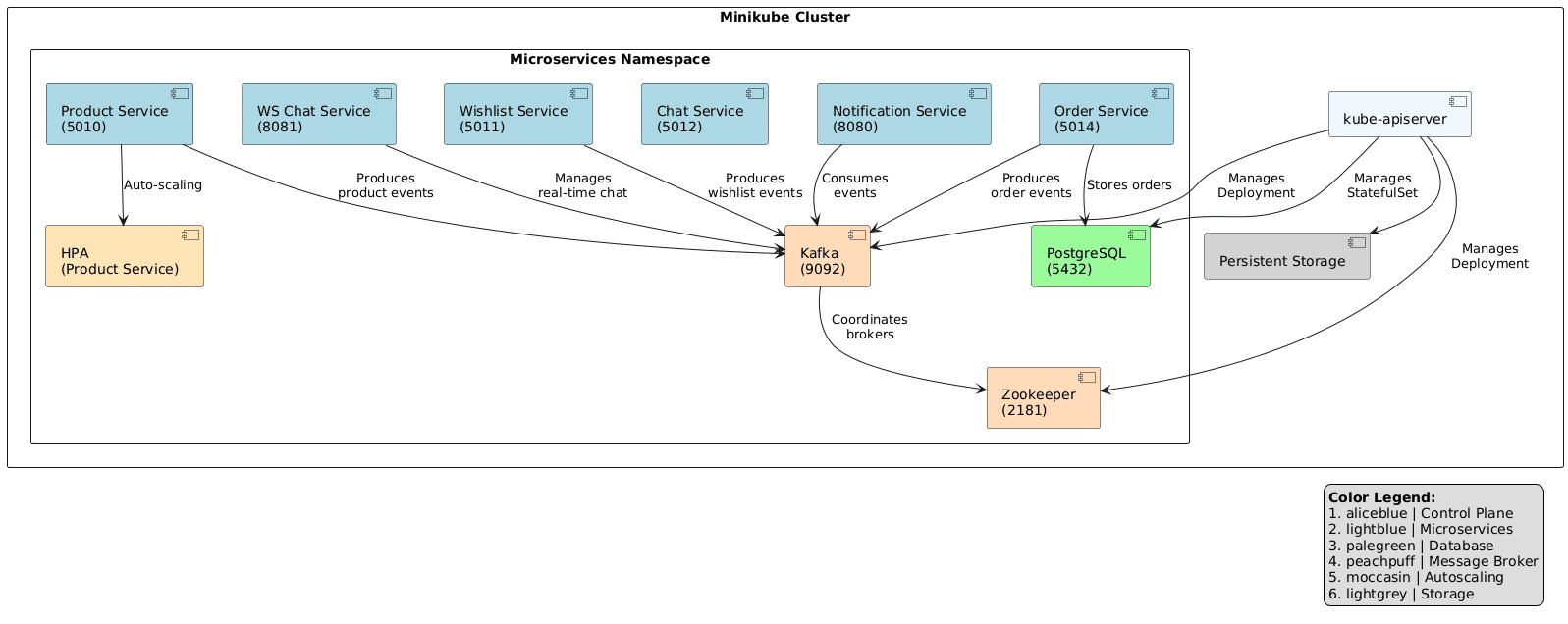

The entire application is containerised using Docker and orchestrated on a local Kubernetes cluster via Minikube. This setup closely mirrors production environments, offering service resilience, scalability, and ease of management.

Each microservice runs in its own Docker container, exposing only the necessary ports and packaged with minimal dependencies. These containers are managed via Kubernetes Deployments, which ensure desired pod states and handle restart policies, scaling, and updates.

Key Kubernetes components are:

Pods and Deployments: Each service has its own deployment definition and can be scaled independently. Example: The Product service runs with 2 to 4 replicas based on CPU load.

ClusterIP services: Provide internal, DNS-based service discovery, allowing one microservice to reach another by name; e.g., http://order-service:5020/api/orders.

ConfigMaps and Secrets: Used to inject environment-specific variables into each container. Non-sensitive settings such as Kafka broker addresses typically go into ConfigMaps, while credentials such as database URIs and Clerk keys belong in Secrets.

Horizontal Pod Autoscaler (HPA): Automatically adjusts the number of running pods based on resource usage metrics. For example, Product service scales based on CPU utilisation under high traffic.
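Inside a container, values from ConfigMaps and Secrets usually arrive as environment variables (for example through `envFrom` in the Deployment's container spec). The sketch below shows one way a service might read them at startup and fail fast when a required value is missing. Every variable name (`KAFKA_BROKERS`, `MONGO_URI`, `CLERK_SECRET_KEY`, `ORDER_SERVICE_URL`, `PORT`) is a hypothetical choice, and the `order-service:5020` default mirrors the ClusterIP example above.

```javascript
// Read configuration injected from ConfigMaps/Secrets as environment variables.
// Variable names are hypothetical; they must match the Kubernetes manifests.
function loadConfig(env = process.env) {
  const required = (name) => {
    const value = env[name];
    if (value === undefined || value === "") {
      throw new Error(`Missing required environment variable ${name}`);
    }
    return value;
  };

  return Object.freeze({
    // From a ConfigMap: comma-separated broker list, e.g. "kafka:9092"
    kafkaBrokers: required("KAFKA_BROKERS").split(",").map((b) => b.trim()),
    // From Secrets: never log these values
    mongoUri: required("MONGO_URI"),
    clerkSecretKey: required("CLERK_SECRET_KEY"),
    // Optional: the ClusterIP DNS name resolves inside the cluster
    orderServiceUrl: env.ORDER_SERVICE_URL ?? "http://order-service:5020",
    port: Number.parseInt(env.PORT ?? "3000", 10),
  });
}

// At service startup: const config = loadConfig();
```

Failing at startup means a pod with a missing Secret crashes immediately and shows up in `kubectl get pods`, rather than failing later on the first database or Kafka call.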

Figure 4 shows the Kubernetes deployment of the microservices-based architecture within a Minikube cluster. It includes the Product, Wishlist, Chat, Notification, and Order services, all communicating through Kafka as the message broker. PostgreSQL handles persistent storage, while Zookeeper coordinates the Kafka brokers. The kube-apiserver exposes the API through which deployments, stateful sets, and persistent storage are managed. The Horizontal Pod Autoscaler (HPA) dynamically scales the Product service based on usage metrics.

The benefits of this deployment setup are:

- Simulates real-world DevOps pipelines using open source tools.

- Supports modular redeployment, with no need to restart the whole system.

- Simplifies scaling and fault recovery.

- Maintains clear separation between development, configuration, and runtime.

This architecture allows developers to iterate quickly, roll out features safely, and experiment with load balancing, and it provides a foundation for adding observability tools such as Prometheus and Grafana.

Frontend integration and authentication

The frontend of the platform is built using Next.js, a powerful React framework that supports both server-side rendering (SSR) and static site generation (SSG). It provides fast page loads, improved SEO, and dynamic routing—key for building interactive e-commerce experiences. Figure 5 illustrates the architecture of a full-stack microservices ecosystem, where a frontend web browser communicates via an API gateway supporting both REST and WebSocket protocols.

Users can browse products, manage wishlists, place orders, and chat with sellers, all through an intuitive and responsive interface.

Frontend features

Next.js routing: Dynamic pages for products, cart, orders, chats, and login.

API integration: Uses axios and native fetch() to call backend REST APIs hosted on each microservice.

WebSocket connections: Enables real-time chat and live notifications from the backend via persistent sockets.

Session management: Local storage and Clerk’s SDK manage secure token handling across sessions.
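For the API calls listed above, a small wrapper can attach the session token to every request. In a Clerk-based Next.js app the token would typically come from the `getToken()` function returned by Clerk's `useAuth()` hook; here it is passed in as a parameter so the sketch stays framework-agnostic. The helper name `apiFetch` and its error handling are assumptions, and the code relies on the `fetch` and `Headers` globals available in browsers and Node.js 18+.

```javascript
// Hypothetical helper: attach a bearer token to every backend API call.
// getToken is injected; in a Clerk app it could be the getToken from useAuth().
async function apiFetch(url, { getToken, fetchImpl = globalThis.fetch, ...init } = {}) {
  const token = getToken ? await getToken() : null;
  const headers = new Headers(init.headers);
  if (token) headers.set("Authorization", `Bearer ${token}`);
  if (init.body && !headers.has("Content-Type")) {
    headers.set("Content-Type", "application/json");
  }
  const res = await fetchImpl(url, { ...init, headers });
  if (!res.ok) throw new Error(`Request to ${url} failed with ${res.status}`);
  // 204 No Content has no body to parse
  return res.status === 204 ? null : res.json();
}
```

A component would then call something like `apiFetch("/api/wishlist", { getToken })` instead of calling `fetch` directly, so no request can silently go out without credentials.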

Authentication with Clerk.dev

Authentication and authorisation are handled using Clerk.dev, a plug-and-play identity platform that offers:

- Prebuilt sign-up and sign-in UIs

- Secure JWT-based session management

- Role-based access control (e.g., buyer, seller)

- Integration with the frontend through Clerk’s React SDK

Once users log in, Clerk provides JWTs that are attached to every frontend API request. Backend services validate these tokens to ensure only authenticated users can access protected routes.
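To make the validation step concrete, the sketch below checks an RS256-signed JWT using only Node's built-in `crypto` module (recent Node.js versions, for `base64url` support): it verifies the signature against a public key and rejects expired tokens. In the real services Clerk's backend SDK would normally perform this, including retrieving the key. The `verifyJwt` and `requireAuth` names, the `req.auth` property, and the hand-rolled parsing are illustrative assumptions, and a production verifier should also check claims such as the issuer.

```javascript
const crypto = require("node:crypto");

// Verify an RS256 JWT with Node's crypto module. Illustrative only; Clerk's SDK
// normally handles this, including fetching the public key.
function verifyJwt(token, publicKey, nowSeconds = Math.floor(Date.now() / 1000)) {
  const parts = token.split(".");
  if (parts.length !== 3) throw new Error("Malformed token");
  const [headerB64, payloadB64, signatureB64] = parts;

  const header = JSON.parse(Buffer.from(headerB64, "base64url").toString("utf8"));
  if (header.alg !== "RS256") throw new Error("Unexpected algorithm"); // never accept "none"

  // RS256 = RSASSA-PKCS1-v1_5 with SHA-256, Node's default padding for RSA keys
  const valid = crypto.verify(
    "sha256",
    Buffer.from(`${headerB64}.${payloadB64}`),
    publicKey,
    Buffer.from(signatureB64, "base64url")
  );
  if (!valid) throw new Error("Invalid signature");

  const payload = JSON.parse(Buffer.from(payloadB64, "base64url").toString("utf8"));
  if (typeof payload.exp === "number" && payload.exp <= nowSeconds) {
    throw new Error("Token expired");
  }
  return payload;
}

// Express-style middleware: reject requests without a valid bearer token.
function requireAuth(publicKey) {
  return (req, res, next) => {
    const match = /^Bearer (.+)$/.exec(req.headers.authorization ?? "");
    if (!match) return res.status(401).json({ error: "Missing token" });
    try {
      req.auth = verifyJwt(match[1], publicKey); // e.g. req.auth.sub is the user ID
      return next();
    } catch (err) {
      return res.status(401).json({ error: err.message });
    }
  };
}
```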

This offloads complex authentication logic from the application codebase while keeping identity management secure and scalable.

Experimental challenges and learnings

Building this microservices-based e-commerce platform was not without its hurdles. From inter-service communication bugs to deployment quirks in Kubernetes, every step offered valuable hands-on experience. Given below are some of the key challenges faced and what we learned from resolving them.

Kafka setup and debugging

Challenge: Setting up Kafka on Minikube and ensuring services would consistently produce/consume events.

Learning: Network policies in Kubernetes can block inter-pod communication if not configured properly. Exposing Kafka with correct listeners and using kafkajs with proper retry logic helped stabilise message flow.
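kafkajs has its own `retry` options on the client, but the same idea helps around any startup step that races with Kafka becoming reachable inside Minikube. Below is a minimal, generic sketch of retry with exponential backoff and full jitter; the `withRetry` name and its default values are assumptions, not the project's code.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: retry an async operation with exponential backoff and full jitter.
// Useful while Kafka pods are still starting and connections are refused.
async function withRetry(operation, { retries = 5, baseDelayMs = 200, maxDelayMs = 5000, onRetry } = {}) {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await operation(attempt);
    } catch (err) {
      if (attempt >= retries) throw err; // give up after the last allowed retry
      const cap = Math.min(maxDelayMs, baseDelayMs * 2 ** attempt);
      const delay = Math.floor(Math.random() * cap); // jitter spreads out retries from many pods
      if (onRetry) onRetry(err, attempt + 1, delay);
      await sleep(delay);
    }
  }
}

// Example (hypothetical producer): await withRetry(() => producer.connect());
```

The jitter matters in Kubernetes: when several replicas restart together, randomised delays keep them from hammering the broker in lockstep.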

WebSocket handling

Challenge: Maintaining persistent WebSocket connections across multiple services and pods.

Learning: Centralising WebSocket logic in dedicated services (Chat, Notification) and using topics to forward events from Kafka to the frontend greatly simplified the architecture and improved stability.
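The Kafka-to-browser bridge in the Chat or Notification service can be sketched as a registry that maps user IDs to their open sockets, with `deliver()` called from the Kafka consumer's message handler. The `ConnectionRegistry` class, the `userId` field on events, and the socket objects (anything with `send()` and a `readyState`, as in the browser WebSocket API or the `ws` package) are assumptions for illustration.

```javascript
const OPEN = 1; // WebSocket.OPEN in both the browser API and the ws package

// Track open sockets per user so Kafka events can be pushed to the right browsers.
class ConnectionRegistry {
  constructor() {
    this.byUser = new Map(); // userId -> Set of sockets (one per tab or device)
  }

  add(userId, socket) {
    if (!this.byUser.has(userId)) this.byUser.set(userId, new Set());
    this.byUser.get(userId).add(socket);
  }

  remove(userId, socket) {
    const sockets = this.byUser.get(userId);
    if (!sockets) return;
    sockets.delete(socket);
    if (sockets.size === 0) this.byUser.delete(userId); // avoid leaking empty entries
  }

  // Called from the Kafka consumer's message handler; returns sockets reached.
  deliver(event) {
    const sockets = this.byUser.get(event.userId);
    if (!sockets) return 0; // user is not connected to this pod
    const frame = JSON.stringify(event);
    let sent = 0;
    for (const socket of sockets) {
      if (socket.readyState === OPEN) {
        socket.send(frame);
        sent += 1;
      }
    }
    return sent;
  }
}
```

With several replicas, a user's socket is open on only one pod. Giving each pod its own consumer group (so every pod sees every event and delivers only to its local sockets) is one possible way to route events; the article does not say which approach the project uses.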

Autoscaling with HPA

Challenge: CPU thresholds were too sensitive, leading to unnecessary scaling.

Learning: Fine-tuning targetCPUUtilizationPercentage and using custom metrics made autoscaling more predictable.
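Why the threshold matters is easier to see from the scaling rule documented for the Kubernetes HPA: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. A lower target or a tighter tolerance lets brief CPU spikes cross the threshold more often. The function below reproduces only that core formula for a single CPU metric, ignoring pod readiness, missing metrics, and stabilisation windows; the 60% target is illustrative, and the 2 to 4 replica bounds echo the Product service example earlier in the article.

```javascript
// Core HPA replica calculation for a single utilisation metric (simplified).
function desiredReplicas({ current, currentUtilization, targetUtilization, min, max, tolerance = 0.1 }) {
  const ratio = currentUtilization / targetUtilization;
  // Within tolerance: the HPA leaves the replica count unchanged.
  if (Math.abs(ratio - 1) <= tolerance) return Math.min(max, Math.max(min, current));
  const raw = Math.ceil(current * ratio);
  return Math.min(max, Math.max(min, raw)); // respect minReplicas/maxReplicas
}

// Product service with 2 to 4 replicas and an illustrative 60% CPU target.
const bounds = { min: 2, max: 4, targetUtilization: 60 };
console.log(desiredReplicas({ ...bounds, current: 2, currentUtilization: 90 })); // 3
console.log(desiredReplicas({ ...bounds, current: 2, currentUtilization: 64 })); // 2 (within 10% tolerance)
console.log(desiredReplicas({ ...bounds, current: 3, currentUtilization: 150 })); // 4 (capped at max)
```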

Auth token propagation

Challenge: Passing JWTs across services and ensuring backend verification.

Learning: Each service validates tokens individually via middleware. Clerk’s SDK made token decoding easier, and caching Clerk’s public keys reduced verification latency.
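Caching the public keys can be as simple as a time-based cache in front of whatever fetches the key set (for Clerk, a JWKS endpoint). In the sketch below, `createKeyCache`, the 10-minute TTL, and the injected `fetchKeys` and `now` functions are assumptions. Concurrent callers share one in-flight request, so a burst of requests after expiry triggers a single fetch. A rotated key is only picked up once the TTL expires; a production version might also refresh, with rate limiting, when it sees an unknown key ID.

```javascript
// Hypothetical time-based cache for signing keys, looked up by "kid" (key ID in the JWT header).
function createKeyCache({ fetchKeys, ttlMs = 10 * 60 * 1000, now = Date.now }) {
  let keys = new Map();
  let fetchedAt = -Infinity; // forces a fetch on first use
  let inFlight = null; // shared promise so concurrent misses trigger one fetch

  function refresh() {
    if (!inFlight) {
      inFlight = fetchKeys()
        .then((list) => {
          keys = new Map(list.map((k) => [k.kid, k]));
          fetchedAt = now();
        })
        .finally(() => {
          inFlight = null;
        });
    }
    return inFlight;
  }

  return async function getKey(kid) {
    if (now() - fetchedAt > ttlMs) await refresh(); // stale: fetch the key set again
    const key = keys.get(kid);
    if (!key) throw new Error(`No signing key found for kid ${kid}`);
    return key;
  };
}
```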

MongoDB vs PostgreSQL use

Challenge: Choosing when to use NoSQL or relational databases.

Learning: Use MongoDB for flexible and fast reads (e.g., product listings), and PostgreSQL when data consistency and transactions are critical (e.g., orders, payments).

Overall, these challenges transformed theoretical understanding into real DevOps and backend engineering skills—highlighting the power (and complexity) of distributed systems in real-world scenarios.

This project was a comprehensive exercise in building a real-world, production-style application using a microservices architecture powered entirely by open source technologies. By combining tools like Node.js, Kafka, Docker, Kubernetes, MongoDB, and Next.js, we were able to create a scalable, modular, and reactive e-commerce platform that mirrors modern cloud-native applications.

Beyond technical implementation, the project provided deep insights into the challenges of distributed system design, inter-service communication, data consistency, authentication, and observability.

Future enhancements

Ingress controller: To enable external access and URL routing with TLS support.

CI/CD pipeline: Automating deployment with tools like GitHub Actions or Jenkins.

Observability tools: Integrate Prometheus and Grafana for real-time system monitoring.

Advanced caching: Use Redis to optimise product listings and reduce DB load.

Cloud deployment: Port the Minikube setup to a managed Kubernetes service like GKE or EKS.

With modular design, open source tooling, and hands-on implementation, this project not only served as a strong learning experience but also demonstrated the feasibility of building robust systems without relying on closed source or proprietary platforms.