As organisations transform their infrastructure to host AI-based solutions, Docker and Kubernetes are proving to be powerhouses for building secure, scalable software and delivering it quickly.

In October 2023, Docker announced Docker AI, a new addition to its developer toolkit that brings context-aware assistance directly into the software development process. Designed to raise productivity and security when building applications, including AI/ML applications, Docker AI goes well beyond code generation. Tools such as GitHub Copilot and Tabnine help write source code, which typically makes up only 10–15% of an application. Docker AI instead targets the larger and more nuanced remaining 85–90%: the infrastructure around that code, including Dockerfiles, language runtimes, databases, message queues, and configuration files. This matters because real-world applications are not only about logic and algorithms but about the entire system they run in.

Docker AI gives developers context-aware, automated guidance while they write Dockerfiles, create Compose files, or debug builds. It draws on the experience of millions of developers across the Docker ecosystem and on best practices shared through Docker Hub, GitHub, and other community repositories. Docker CEO Scott Johnston has said that Docker AI speeds up the whole development “inner loop”, helping developers fix issues and iterate faster and with more confidence. Because its recommendations are grounded in practices tested by the broader Docker community, they are intended to be not only optimised but also secure and production-ready. According to Katie Norton of IDC, this type of generative tooling improves productivity and developer happiness while reducing operational workloads.

Inside a containerised AI workflow: From code to cloud

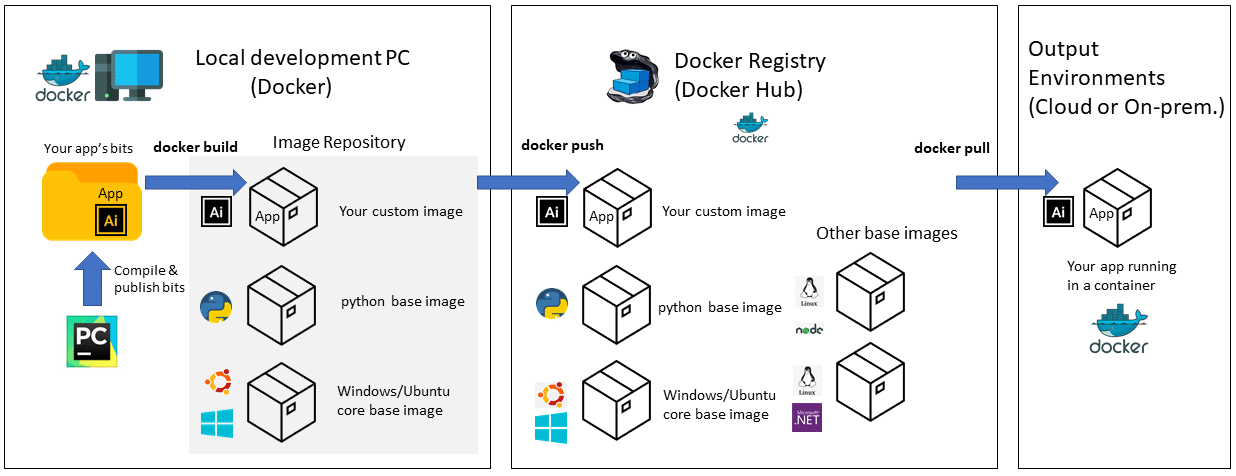

In a typical containerised AI pipeline, development starts locally: an ML engineer or data scientist builds the application on top of a base image, such as Python on Ubuntu or another Linux distribution. The development environment, including all dependencies, libraries (such as TensorFlow and PyTorch), and configuration files, is packaged in a Docker container. Once the environment is ready, the developer runs a Docker build to create a custom image of the application.

The image is then pushed to a registry such as Docker Hub or a private cloud registry. This makes the image available to remote environments and teams, and supports collaborative development, CI/CD pipelines, and version control of both models and code.
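The build-and-push step is easy to script. The sketch below assumes Python 3.8+ and the standard Docker CLI commands `docker build -t` and `docker push`, and that `docker login` has already been run against the target registry. The registry `registry.example.com/team` and repository `sentiment-api` are hypothetical placeholders; for Docker Hub, the registry part is simply your namespace (for example `myuser/app:1.0`). By default the script only prints the commands.

```python
import shlex
import subprocess
from typing import List


def image_ref(registry: str, repository: str, tag: str) -> str:
    """Fully qualified reference such as registry.example.com/team/app:1.0.0."""
    return f"{registry.rstrip('/')}/{repository}:{tag}"


def build_and_push_commands(registry: str, repository: str, tag: str,
                            context: str = ".") -> List[List[str]]:
    """Return the docker CLI invocations for building and pushing one image."""
    ref = image_ref(registry, repository, tag)
    return [
        ["docker", "build", "-t", ref, context],  # build and tag from the Dockerfile in `context`
        ["docker", "push", ref],                  # upload the tagged image to the registry
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))  # show the exact command line
        if not dry_run:
            # check=True raises on failure, so a broken build is never pushed
            subprocess.run(cmd, check=True)
```

Calling `run(cmds, dry_run=False)` executes the commands in order; because each step must succeed before the next runs, the push only happens after a successful build.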

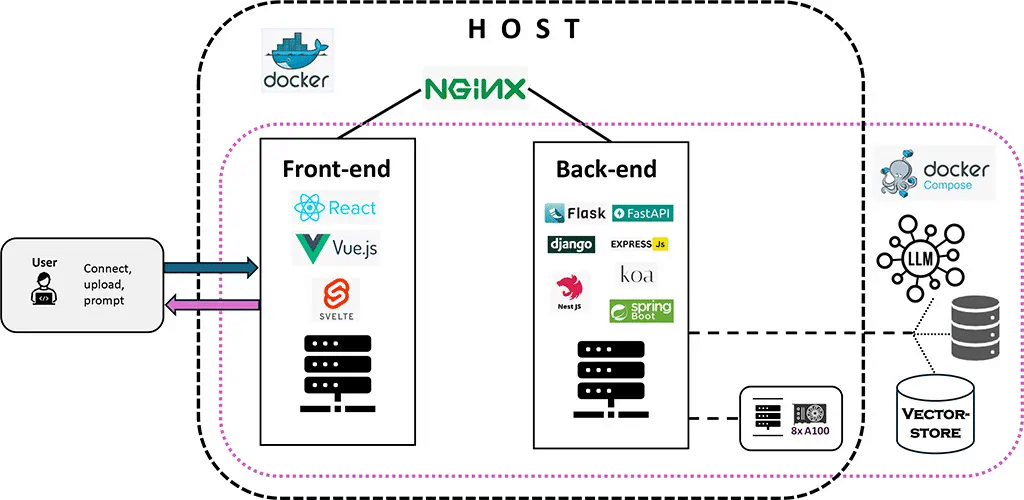

Figure 2 illustrates a typical AI/ML system architecture running inside Docker containers. It shows a modular frontend (built with React, Vue.js, or Svelte) and a flexible backend (using frameworks such as Flask, FastAPI, or Django), both served behind an NGINX reverse proxy. The entire stack runs on Docker and is orchestrated with Docker Compose. The backend connects to high-performance compute (e.g., NVIDIA A100 GPUs) and integrates with LLMs and vector stores for intelligent processing.
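To make the backend tier concrete, here is a minimal sketch of an inference service written with only Python’s standard library. It stands in for the Flask, FastAPI, or Django backend in Figure 2; the `/predict` and `/health` routes, port 8000, and the summing `predict()` function are hypothetical placeholders for a real model call. Binding to `0.0.0.0` lets an NGINX container on the same Docker network forward requests to it.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def predict(features: dict) -> dict:
    # Placeholder "model": replace with a real TensorFlow/PyTorch inference call.
    return {"score": sum(float(v) for v in features.values())}


class InferenceHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        # Health endpoint that a reverse proxy or orchestrator can probe.
        if self.path == "/health":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send_json(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length) or b"{}")
            self._send_json(200, predict(features))
        except (ValueError, TypeError, AttributeError) as exc:
            # Malformed JSON, non-object payloads, or non-numeric values
            self._send_json(400, {"error": str(exc)})

    def log_message(self, format, *args) -> None:
        pass  # silence per-request logging in this sketch


def make_server(host: str = "0.0.0.0", port: int = 8000) -> ThreadingHTTPServer:
    # 0.0.0.0 inside the container makes the service reachable from NGINX.
    return ThreadingHTTPServer((host, port), InferenceHandler)
```

In a container, the entry point would call `make_server().serve_forever()`, and NGINX would proxy to an address such as `http://backend:8000`, where `backend` is a hypothetical Compose service name.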

Finally, at deployment, the image is pulled from the registry into a production environment, whether a cloud service such as AWS, GCP, or Azure or an on-premises server. The AI app runs from the same image, in the same environment in which it was developed and tested. This keeps the runtime consistent, eliminates dependency mismatches between environments, and makes scaling and maintenance much easier.
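Running the same image in production is easiest to ensure when deployments reference images by digest rather than by tag: a tag such as `:latest` or `:1.0` can be moved to a different image after testing, whereas a digest (`name@sha256:...`) identifies exact content. The digest of a pushed image appears in the `RepoDigests` field of `docker image inspect`. The check below is a hypothetical pre-deployment gate, a minimal sketch that only inspects reference strings and does not contact a registry.

```python
import re
from typing import Iterable

# A digest-pinned reference ends in "@sha256:" plus 64 lowercase hex characters.
_DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned(image_ref: str) -> bool:
    """True if the reference names an exact image digest, not just a mutable tag."""
    return _DIGEST.search(image_ref) is not None


def check_release(image_refs: Iterable[str]) -> None:
    """Hypothetical deployment gate: reject references that rely on tags alone."""
    unpinned = [ref for ref in image_refs if not is_pinned(ref)]
    if unpinned:
        raise ValueError(f"unpinned image references: {unpinned}")
```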

Dockerizing intelligence: Real-world use cases and edge deployments

As AI applications move from centralised processing environments to distributed, real-time systems, containerisation becomes even more critical. Docker’s lightweight footprint and platform independence make it a key enabler of edge AI deployment, providing portability, consistency, and operational simplicity across hardware ecosystems.

Containerised AI lets developers and engineers package models, runtime environments, and inference logic into isolated units that deploy easily on edge devices, from industrial sensors and autonomous drones to hospital diagnostic equipment. This approach removes much of the historical friction between development and deployment, and aligns with current requirements for privacy, low latency, and real-time decision-making.

For production-ready deployments or edge scenarios involving caching and persistent storage, Docker Compose can orchestrate a lightweight AI stack as shown below:

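```yaml
version: '3.8'   # optional: recent Docker Compose releases treat this field as obsolete

services:
  ai-model:
    build: .              # build the model-serving image from the local Dockerfile
    ports:
      - "5000:5000"       # expose the inference API on the host
    depends_on:           # controls start order only, not readiness
      - redis
      - mongodb

  redis:
    image: redis:alpine   # in-memory cache
    ports:
      - "6379:6379"       # host mapping is optional; other services reach it at redis:6379

  mongodb:
    image: mongo
    ports:
      - "27017:27017"
    volumes:
      - mongo-data:/data/db   # named volume persists database files across container restarts

volumes:
  mongo-data:
```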
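Inside this stack, the `ai-model` service reaches the other containers by their Compose service names, which Docker’s built-in DNS resolves on the project network. The sketch below shows one way the service might read those addresses and cache inference results using the cache-aside pattern. The environment variable names (`REDIS_HOST`, `REDIS_PORT`, `MONGO_URI`) are hypothetical, and `InMemoryCache` is a stand-in so the example runs without a server; a `redis.Redis(host=..., port=...)` client from the redis-py package offers compatible `get`/`set` methods but returns bytes by default, which the code decodes.

```python
import hashlib
import json
import os
from dataclasses import dataclass
from typing import Any, Callable, Dict, Mapping, Optional


@dataclass(frozen=True)
class Settings:
    # Defaults match the Compose service names; the variable names read in
    # from_env() are hypothetical, not defined by Docker or Compose.
    redis_host: str = "redis"
    redis_port: int = 6379
    mongo_uri: str = "mongodb://mongodb:27017"

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None) -> "Settings":
        env = os.environ if env is None else env
        return cls(
            redis_host=env.get("REDIS_HOST", cls.redis_host),
            redis_port=int(env.get("REDIS_PORT", cls.redis_port)),
            mongo_uri=env.get("MONGO_URI", cls.mongo_uri),
        )


class InMemoryCache:
    """Stand-in for a Redis client: same get/set shape, no server needed."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


def cache_key(features: Mapping[str, Any]) -> str:
    # Canonical JSON (sorted keys) so equal inputs always map to the same key.
    payload = json.dumps(features, sort_keys=True).encode("utf-8")
    return "pred:" + hashlib.sha256(payload).hexdigest()


def cached_predict(features: Mapping[str, Any],
                   model: Callable[[Mapping[str, Any]], float], cache) -> float:
    """Cache-aside: return a cached prediction if present, else compute and store it."""
    key = cache_key(features)
    hit = cache.get(key)
    if hit is not None:
        if isinstance(hit, bytes):  # redis-py returns bytes unless decode_responses=True
            hit = hit.decode("utf-8")
        return float(hit)
    result = float(model(features))
    cache.set(key, repr(result))
    return result
```

Repeated requests with identical inputs then skip the model entirely, which matters most on edge hardware where each inference is expensive.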

Strategic advantages of Docker in edge AI

Portability across architectures: Docker containers abstract away differences in the underlying infrastructure. Developers can build on a development workstation and deploy across x86 or ARM-based systems, such as NVIDIA Jetson, Raspberry Pi, or cloud edge nodes, without environment-specific tuning, provided the image is built for each target CPU architecture (for example, as a multi-platform image).

Isolation and conflict resolution: Running multiple ML services side by side on an edge platform often leads to dependency conflicts. Docker isolates each service with its own packages and runtime, ruling out such conflicts and making the system more reliable.

Maximised resource utilisation: Unlike virtual machines, Docker containers share the host kernel and consume far fewer resources, which is critical on memory-constrained or battery-powered edge devices.

OTA (over-the-air) updates and rollbacks: With Docker, AI models and business logic can be updated remotely through Docker registries or locked-down pipelines, and rolled back by redeploying a previously published image. This simplifies MLOps and improves the maintainability of distributed AI systems.

Security and compliance: Containers provide process-level isolation and support rapid patching of vulnerabilities. Docker images can also be signed and scanned to maintain integrity, which is particularly important in regulated environments such as finance and healthcare.
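The portability advantage assumes an image exists for each target CPU architecture. One common approach is a multi-platform build with Docker Buildx, which publishes a single tag whose manifest list points to per-architecture variants, so each device pulls the one matching its CPU. The helper below is a sketch: the mapping covers only common `platform.machine()` values, the image reference is hypothetical, and cross-building typically requires QEMU emulation or native builder nodes to be configured for Buildx.

```python
import platform
from typing import List, Optional

# Common platform.machine() values mapped to Docker/OCI platform strings.
_ARCH_TO_PLATFORM = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",    # Windows reports "AMD64"
    "aarch64": "linux/arm64",  # e.g. NVIDIA Jetson, 64-bit Raspberry Pi OS
    "arm64": "linux/arm64",    # macOS on Apple silicon
    "armv7l": "linux/arm/v7",  # 32-bit Raspberry Pi OS
}


def docker_platform(machine: Optional[str] = None) -> str:
    """Return the Docker platform string for this (or the given) CPU architecture."""
    arch = (machine or platform.machine()).lower()
    try:
        return _ARCH_TO_PLATFORM[arch]
    except KeyError:
        raise ValueError(f"unsupported architecture: {arch}") from None


def buildx_command(image_ref: str, platforms: List[str], context: str = ".") -> List[str]:
    """Build one multi-platform image and push it, so each device pulls its own variant."""
    return [
        "docker", "buildx", "build",
        "--platform", ",".join(platforms),
        "-t", image_ref,
        "--push",
        context,
    ]
```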

By encapsulating complex AI processes in standardised containers, Docker makes it possible for developers to deploy AI at scale from the cloud to the edge. From autonomous vehicles and intelligent factories to remote diagnosis systems, Docker makes AI systems modular, scalable, and ready for production.

Security, scale and speed: Making AI production-ready with Docker

Security, scalability, and performance are critical in today’s AI-driven world, and Docker supports all three. From a security perspective, containerisation provides efficient process isolation, reducing the attack surface and keeping models running in isolated environments. Containers can be hardened, and their images scanned for vulnerabilities and cryptographically signed, to help meet compliance requirements in high-risk industries such as healthcare and finance.
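As an illustration of runtime hardening, the sketch below assembles a `docker run` command from standard flags: a read-only root filesystem with a writable `/tmp` tmpfs, all Linux capabilities dropped, `no-new-privileges`, a non-root user, and memory, CPU, and process limits that also suit constrained edge hardware. The UID:GID, limit values, port, and image names are hypothetical; `--gpus` requires the NVIDIA Container Toolkit on the host. Image scanning and signing happen earlier in the pipeline and are not shown.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RunLimits:
    user: str = "10001:10001"   # hypothetical non-root UID:GID
    memory: str = "512m"        # hard memory cap
    cpus: str = "1.0"           # CPU quota
    pids: int = 256             # cap on processes/threads inside the container
    gpus: Optional[str] = None  # e.g. "all"; requires the NVIDIA Container Toolkit


def hardened_run_command(image_ref: str, publish: str = "5000:5000",
                         limits: Optional[RunLimits] = None) -> List[str]:
    """Assemble a `docker run` invocation with common hardening and resource flags."""
    limits = limits or RunLimits()
    cmd = [
        "docker", "run", "--detach",
        "--read-only",                          # immutable root filesystem
        "--tmpfs", "/tmp",                      # writable scratch space in memory
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation (e.g. setuid)
        "--user", limits.user,
        "--memory", limits.memory,
        "--cpus", limits.cpus,
        "--pids-limit", str(limits.pids),
        "--publish", publish,
    ]
    if limits.gpus:
        cmd += ["--gpus", limits.gpus]
    cmd.append(image_ref)  # the image must follow all options
    return cmd
```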

On the scalability front, Docker works with orchestration tools such as Kubernetes and Amazon ECS/EKS, so AI workloads can be scaled horizontally across distributed environments. This keeps models available and services reliable even under high demand, such as during model retraining, batch inference, or live stream analytics. Moreover, Docker’s low resource overhead makes it possible to parallelise inference pipelines and fine-tuning jobs across cloud nodes.
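For a feel of how horizontal scaling decisions are made, the Kubernetes Horizontal Pod Autoscaler computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)` and skips scaling when the ratio is within a tolerance (0.1 by default). The function below is a simplified sketch of that rule with min/max clamping; the real controller also applies stabilisation windows and handles missing metrics and unready pods.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule, clamped to [min_replicas, max_replicas]."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("current_replicas must be >= 1 and target_metric > 0")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 inference replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the sketch returns 6.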

In terms of speed, Docker shortens startup times and improves execution efficiency by packaging only what a specific task requires, eliminating bloat and dependency conflicts. AI models can be hosted as microservices inside containers, shortening time-to-market while keeping deployments reproducible. GPU-accelerated containers and edge-ready deployment models help organisations deliver high-performance AI services.

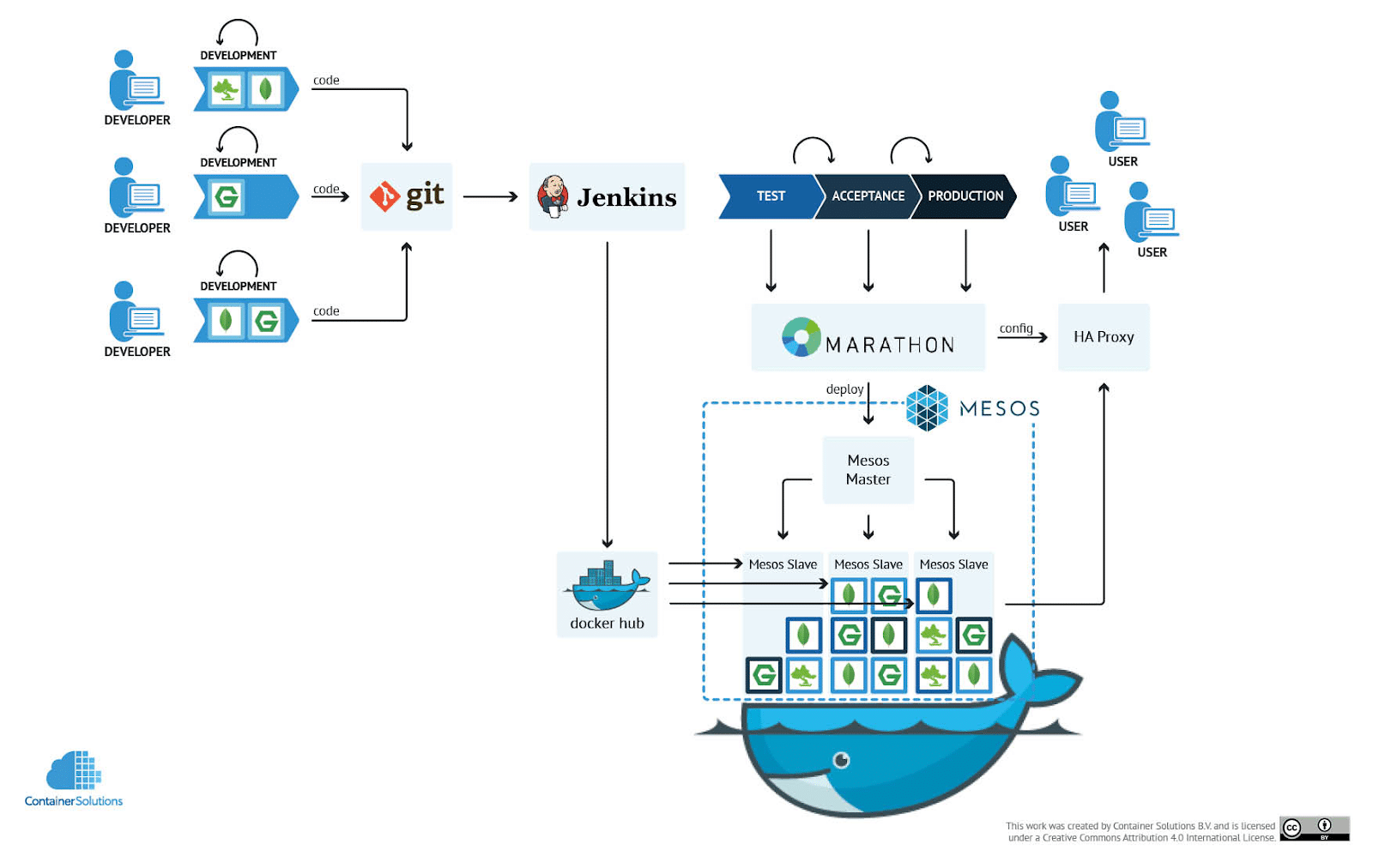

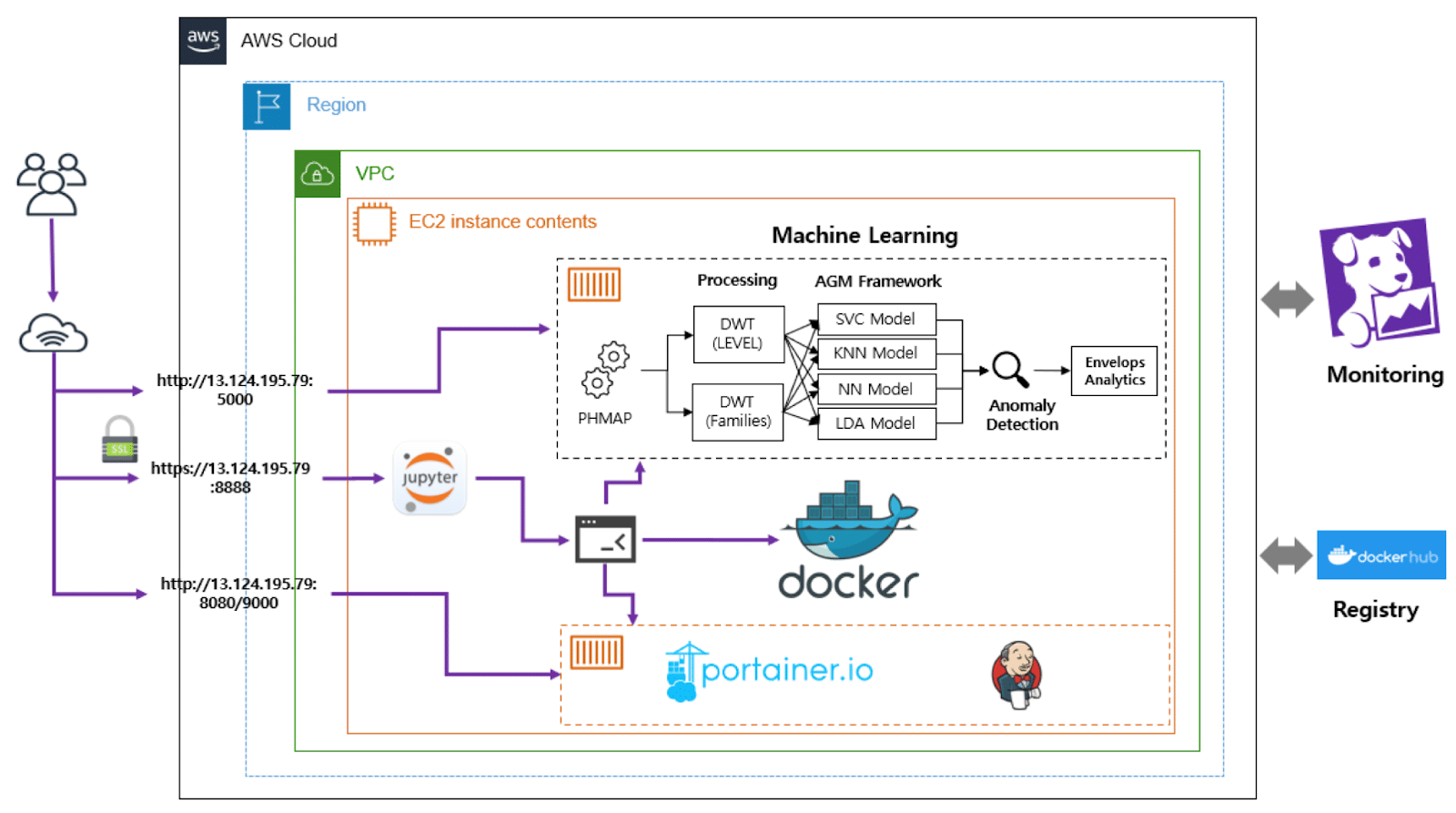

To show how these principles work together in a real production deployment, Figure 4 summarises a standard AI production pipeline built with Docker containers on AWS infrastructure. It emphasises secure access control, DevOps automation, monitoring, and lifecycle management of AI models.

Table 1: Use cases of Dockerized AI in edge deployments

| Application | Docker role | Deployment context |
| --- | --- | --- |
| Respiratory disease detection via AI from audio data | Containerises inference models for low-resource edge devices | Rural clinics, portable devices |
| Predictive maintenance for industrial machinery | Hosts data pipelines and ML models for anomaly detection | On-premises edge gateways |
| Customer footfall and behaviour analytics | Deploys AI vision models with local privacy-preserving inference | Smart shelves, CCTV analytics |
| Crop monitoring and pest detection | Supports containerised image classifiers on mobile field devices | Drones, IoT sensors in farmlands |
| Object detection in autonomous vehicles | Enables real-time model inference on GPU-accelerated edge nodes | Drones, self-driving units |

The road ahead

Looking ahead, the intersection of containerisation and AI will only deepen. AI systems are becoming increasingly complex, distributed, and context-aware, so they need modular, resilient, and adaptable architectures. Docker and Kubernetes are at the centre of this shift, enabling not only containerisation but also the orchestration of advanced multi-model systems across cloud, hybrid, and edge infrastructures.

Serverless AI containers, automated MLOps pipelines, and federated learning deployments are current trends, all of which take advantage of Docker’s scalability and reproducibility. Docker AI is one of the tools aiming to reduce infrastructure complexity so that developers can spend more time on AI logic and less on deployment challenges. We are also seeing the emergence of domain-specific containers for real-time video processing, large language model inference, and privacy-preserving AI using secure enclaves, fuelled by container runtimes optimised for GPUs and hardware acceleration.

Docker will remain a foundational technology, evolving from a packaging tool into a full-fledged enabler of AI-as-a-service models, decentralised computing, and real-time edge intelligence.

Career opportunities

For professionals and students entering IT and AI, Docker and Kubernetes skills are no longer optional but essential. As companies race to scale AI solutions and modernise their infrastructure, they are actively seeking engineers who specialise in containerised environments, CI/CD pipelines, and cloud-native architectures.

Mastery of Docker opens doors to roles in DevOps, MLOps, cloud engineering, data science, and AI product development. Strong Kubernetes knowledge adds significant value, particularly if you are responsible for running large-scale AI deployments on AWS, Azure, or GCP. Self-paced certifications, familiarity with open source tools, and a portfolio of Docker-based projects can set you apart in an increasingly competitive pool.

Docker and Kubernetes, in many ways, form the foundation of today’s AI software stack. Learning these skills therefore not only future-proofs one’s career but also positions one to drive innovation.