The integration of generative AI in the AWS Database Migration Service enhances schema conversion, making data migrations more accurate, faster, and more efficient.

In today’s rapidly shifting digital landscape, organisations are compelled to modernise their data infrastructure to stay competitive and agile. Database migration is an essential part of this transformation, yet it presents a range of challenges—complex schemas, diverse technologies, and the risk of downtime. Amazon Web Services (AWS) offers the Database Migration Service (DMS) to streamline this process, and recent advancements in generative AI are further revolutionising how migrations are carried out.

Homogeneous vs heterogeneous database migration

Homogeneous database migration refers to the movement of data between two databases that share the same engine or technology, for instance from MySQL to MySQL or from Oracle to Oracle. These migrations typically involve fewer compatibility issues because the source and target databases speak the same ‘language’, making schema conversion and data transfer relatively straightforward.

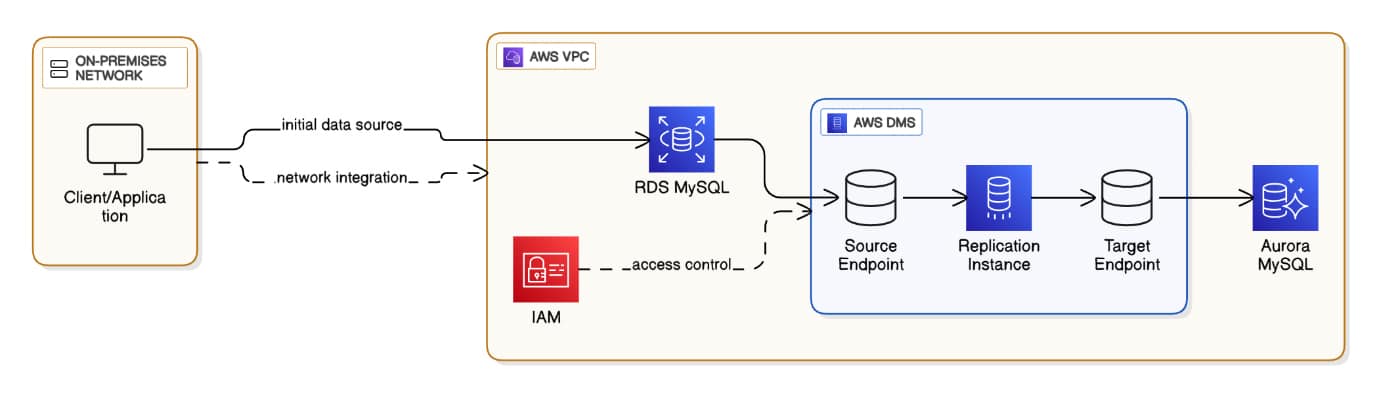

Consider the scenario of an organisation running its production workload on Amazon RDS MySQL. To improve performance and scalability, the business decides to migrate to Amazon Aurora MySQL, a high-availability, cloud-native database service.

AWS DMS facilitates this process by connecting to the source RDS MySQL instance, extracting and transforming the data as needed, and loading it into Aurora MySQL. Since both databases share the same engine, schema conversion is usually direct, and the risk of data loss or incompatibility is minimal.

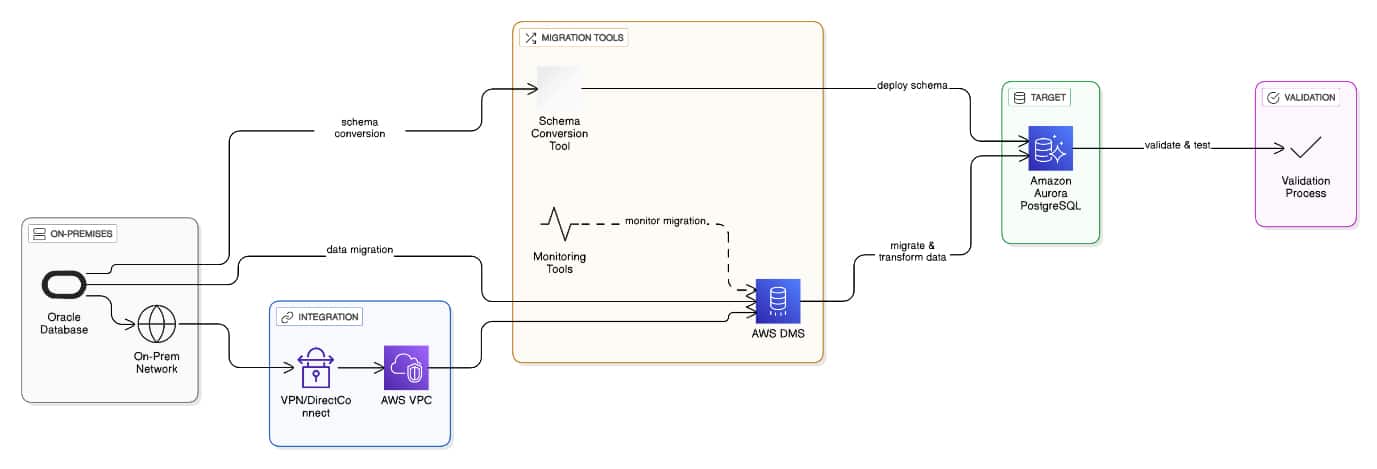

Heterogeneous database migration involves moving data between databases that use different engines or technologies. Examples include migrating from Oracle to PostgreSQL, or SQL Server to MySQL. These migrations are inherently more complex, as differences in data types, functions, and procedural code must be reconciled to ensure seamless compatibility.

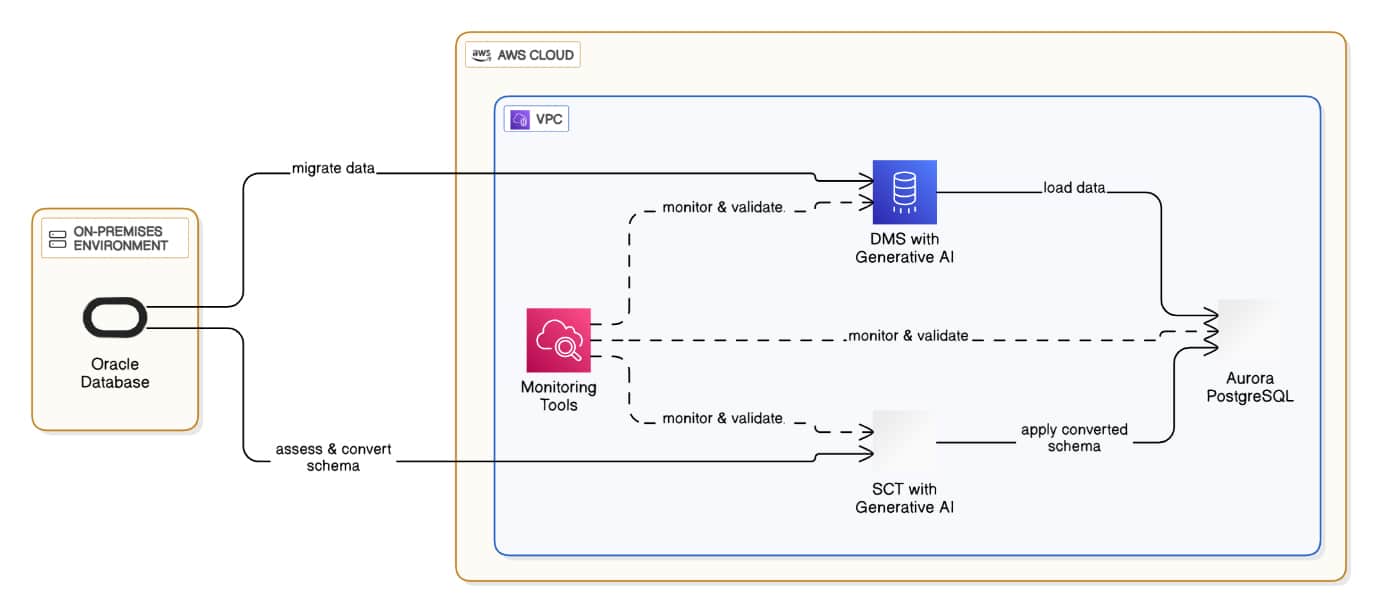

Imagine a financial institution operating a legacy Oracle database on-premises, seeking to modernise by migrating to Amazon Aurora PostgreSQL on AWS. This transition is not straightforward as Oracle and PostgreSQL have distinct ways of handling data, constraints, and stored procedures. Therefore, AWS DMS works in tandem with the AWS Schema Conversion Tool (SCT), which analyses the source Oracle schema and generates conversion scripts for Aurora PostgreSQL.

This process requires careful mapping of data types and business logic to ensure that the target database functions as intended.
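The data type mapping step can be pictured with a minimal Python sketch. The mapping table below lists commonly used Oracle to PostgreSQL equivalents; it is an illustrative assumption, not the exact output of AWS SCT, and the function name `map_type` is hypothetical.

```python
import re

# Illustrative defaults only; a real conversion tool applies many more rules.
ORACLE_TO_POSTGRES = {
    "VARCHAR2": "VARCHAR",
    "NVARCHAR2": "VARCHAR",
    "NUMBER": "NUMERIC",
    "DATE": "TIMESTAMP(0)",  # Oracle DATE also stores a time of day
    "CLOB": "TEXT",
    "BLOB": "BYTEA",
    "RAW": "BYTEA",
}


def map_type(oracle_type):
    """Return a PostgreSQL type for an Oracle type, or None if unmapped."""
    match = re.fullmatch(r"\s*(\w+)\s*(?:\(([^)]*)\))?\s*", oracle_type.upper())
    if match is None or match.group(1) not in ORACLE_TO_POSTGRES:
        return None  # unmapped types are left for manual review
    target = ORACLE_TO_POSTGRES[match.group(1)]
    args = match.group(2)
    # Carry length or precision over only where the target type accepts it.
    if args and target in ("VARCHAR", "NUMERIC"):
        args = re.sub(r"\s*(CHAR|BYTE)\s*$", "", args).strip()
        target = f"{target}({args})"
    return target


columns = {"customer_name": "VARCHAR2(50 CHAR)", "balance": "NUMBER(10,2)",
           "opened_on": "DATE", "profile": "XMLTYPE"}
for column, oracle_type in columns.items():
    print(column, oracle_type, "->", map_type(oracle_type))
```

Returning `None` for an unknown type, rather than guessing, keeps the awkward cases visible so that an engineer can decide how to handle them.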

Pain areas in AWS database migration

While cloud adoption is a strategic imperative, the actual mechanism of moving data, particularly in heterogeneous migrations, remains one of the most daunting tasks for IT leaders. Complex schemas and downtime are the obvious concerns, but the pain points run significantly deeper when analysed through a technical lens.

The primary bottleneck in database migration is arguably the schema conversion ‘impedance mismatch’. When migrating from legacy commercial engines (like Oracle or SQL Server) to open-source, cloud-native engines (like Amazon Aurora PostgreSQL), the challenge is rarely the data itself; it is the application logic residing in the database. Proprietary procedural code (PL/SQL or T-SQL) often contains decades of business logic, bespoke functions, and proprietary extensions that have no direct equivalent in the target database. Traditional rule-based tools, such as the standard AWS Schema Conversion Tool (SCT), are excellent at converting about 80% of this code automatically. However, the remaining 20%, often referred to as the ‘last mile’, requires manual rewriting. This manual intervention is error-prone, requires niche expertise, and often delays project timelines by months.

Secondly, performance degradation risks pose a significant psychological and operational barrier. A database tuned for twenty years on-premises has execution plans optimised for specific hardware and storage subsystems. Moving this to a cloud architecture can initially result in query regression, where queries that ran in milliseconds suddenly take seconds. Identifying these regressions before the cutover is difficult because simulating full production loads in a staging environment is resource-intensive and complex.
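One way to surface such regressions before cutover is to replay a sample of queries against both systems and compare timings. The sketch below assumes the latencies have already been collected; the query identifiers, sample values, and the `max_ratio` threshold are all hypothetical.

```python
from statistics import median


def find_regressions(baseline_ms, candidate_ms, max_ratio=2.0):
    """Return {query_id: slowdown_ratio} for queries that slowed past max_ratio."""
    regressions = {}
    for query_id, before in baseline_ms.items():
        after = candidate_ms.get(query_id)
        if not before or not after:
            continue  # no samples on one side, so nothing to compare
        # Medians are less sensitive to one-off spikes than means.
        ratio = median(after) / median(before)
        if ratio > max_ratio:
            regressions[query_id] = round(ratio, 2)
    return regressions


on_premises = {"monthly_statement": [4, 5, 6], "balance_lookup": [10, 11, 12]}
cloud_target = {"monthly_statement": [900, 1000, 1100], "balance_lookup": [10, 12, 13]}
print(find_regressions(on_premises, cloud_target))
```

A sampled replay of this kind does not replace a full production load test, but it can catch the largest regressions cheaply.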

Finally, data integrity and validation at scale is a massive pain point. In multi-terabyte migrations, verifying that every row, constraint, and index has been replicated accurately is computationally expensive. Traditional checksum validations can slow down the migration process itself. The fear of ‘silent data corruption’—where data types are coerced incorrectly during transit without throwing an error—keeps database administrators up at night. This anxiety often leads to extended ‘parallel run’ periods where both old and new systems are kept active, doubling costs and operational overhead.
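A common way to contain the cost of validation is to compare digests of row chunks rather than individual rows, so that only mismatched chunks need a row-level check. The following is a minimal sketch under simplifying assumptions: rows are in-memory tuples keyed by their first element, and the helper names are hypothetical. It is not how AWS DMS data validation is implemented.

```python
import hashlib
from itertools import zip_longest


def chunk_digests(rows, chunk_size):
    """Hash consecutive chunks of rows; repr() keeps value types visible."""
    digests = []
    for start in range(0, len(rows), chunk_size):
        digest = hashlib.sha256()
        for row in rows[start:start + chunk_size]:
            digest.update(repr(row).encode("utf-8"))
        digests.append(digest.hexdigest())
    return digests


def mismatched_chunks(source_rows, target_rows, chunk_size=2):
    """Return the indexes of chunks whose digests differ between the two sides."""
    source = chunk_digests(sorted(source_rows), chunk_size)
    target = chunk_digests(sorted(target_rows), chunk_size)
    return [index for index, (left, right) in enumerate(zip_longest(source, target))
            if left != right]


source = [(1, "10.50"), (2, "7.25"), (3, "3.00"), (4, "9.99")]
target = [(1, "10.50"), (2, "7.25"), (3, "3"), (4, "9.99")]  # value silently coerced
print(mismatched_chunks(source, target))
```

Because the digest is built from `repr(row)`, a value that changes type or formatting in transit alters the hash even when no error was raised, which is exactly the silent coercion described above.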

Using generative AI in database migration

The integration of generative AI into the database migration lifecycle marks a shift from ‘deterministic translation’ to ‘semantic understanding’. Historically, migration tools operated like a dictionary: if they saw Word A in the source, they replaced it with Word B in the target. If Word A had no direct translation, the tool failed. Generative AI, specifically large language models (LLMs) integrated into services like AWS DMS Schema Conversion, changes this paradigm by analysing the ‘intent’ of the code.

Using generative AI in this context involves a multi-step workflow that mimics a senior database engineer’s reasoning. When the migration service encounters a complex stored procedure or a proprietary function that cannot be automatically converted by standard rules, the AI model analyses the code structure, comments, and variable dependencies. It does not just look for syntax equivalents; it attempts to understand the business logic. For example, if an Oracle DECODE function is nested deeply within a complex WHERE clause, a rule-based tool might flag it as an error. A generative AI model, however, can deconstruct the logic and rewrite it as a clean, optimised PostgreSQL CASE expression or, if necessary, as a Python-based AWS Lambda function.
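To make the DECODE example concrete, here is a minimal Python sketch of the rewrite for the simple, non-nested case. It is a hand-written illustration, not the logic used by AWS DMS or by any model, and `decode_to_case` is a hypothetical helper that takes the DECODE arguments as SQL fragments that have already been split apart.

```python
def decode_to_case(expr, *args):
    """Render DECODE(expr, search1, result1, ..., [default]) as a CASE expression."""
    if len(args) < 2:
        raise ValueError("DECODE needs at least one search/result pair")
    pairs = list(zip(args[0::2], args[1::2]))
    default = args[-1] if len(args) % 2 == 1 else None
    lines = ["CASE"]
    for search, result in pairs:
        # Oracle DECODE treats NULL as equal to NULL; "expr = NULL" never matches
        # in PostgreSQL, so a NULL search value needs an explicit IS NULL test.
        if search.strip().upper() == "NULL":
            condition = f"{expr} IS NULL"
        else:
            condition = f"{expr} = {search}"
        lines.append(f"  WHEN {condition} THEN {result}")
    if default is not None:
        lines.append(f"  ELSE {default}")
    lines.append("END")
    return "\n".join(lines)


print(decode_to_case("status", "'A'", "'Active'", "NULL", "'Missing'", "'Unknown'"))
```

The NULL branch shows why a purely syntactic substitution can change behaviour: the converted code has to preserve the semantics of the original, not just its shape.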

Furthermore, generative AI assists in code optimisation and modernisation. It doesn’t just port legacy technical debt to the cloud; it can suggest more cloud-native ways to write the query. For instance, it might suggest replacing a cursor-based iteration (which is slow) with a set-based operation that performs better in Amazon Aurora.
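The difference between cursor-style iteration and a set-based operation can be shown with a small, self-contained sketch. SQLite (from the Python standard library) stands in for Amazon Aurora here, and the `accounts` table and tier thresholds are invented for illustration.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL, tier TEXT)")
    conn.executemany("INSERT INTO accounts (id, balance) VALUES (?, ?)",
                     [(1, 50.0), (2, 1500.0), (3, 20000.0)])
    return conn


def tier_row_by_row(conn):
    """Cursor-style: fetch every row, decide in application code, update one by one."""
    rows = conn.execute("SELECT id, balance FROM accounts").fetchall()
    for account_id, balance in rows:
        tier = "gold" if balance >= 10000 else "silver" if balance >= 1000 else "basic"
        conn.execute("UPDATE accounts SET tier = ? WHERE id = ?", (tier, account_id))


def tier_set_based(conn):
    """Set-based: one statement lets the engine process all rows together."""
    conn.execute("""
        UPDATE accounts
        SET tier = CASE
            WHEN balance >= 10000 THEN 'gold'
            WHEN balance >= 1000 THEN 'silver'
            ELSE 'basic'
        END
    """)


def tiers(conn):
    return [row[0] for row in conn.execute("SELECT tier FROM accounts ORDER BY id")]


looped, batched = make_db(), make_db()
tier_row_by_row(looped)
tier_set_based(batched)
print(tiers(looped) == tiers(batched))
```

Both versions produce the same result, but the set-based form issues a single statement instead of one per row, which is where the performance gain on large tables typically comes from.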

However, the implementation of genAI in this field is designed to be ‘human-in-the-loop’. The AI generates the conversion script and highlights the confidence level of its suggestion. This empowers database engineers to act as reviewers rather than writers. Instead of writing thousands of lines of boilerplate code, they spend their time validating the AI’s output, significantly accelerating the velocity of the migration project while maintaining high standards of code quality.
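A review workflow of this kind might route converted objects by confidence, as in the sketch below. The `ConvertedObject` structure, the numeric score, and both thresholds are hypothetical; the article does not specify how the service reports confidence.

```python
from dataclasses import dataclass


@dataclass
class ConvertedObject:
    name: str
    confidence: float  # hypothetical score between 0 and 1


def triage(objects, spot_check_at=0.9, full_review_at=0.5):
    """Sort converted objects into review queues; nothing bypasses a human."""
    queues = {"spot_check": [], "full_review": [], "manual_rewrite": []}
    for obj in objects:
        if obj.confidence >= spot_check_at:
            queues["spot_check"].append(obj.name)
        elif obj.confidence >= full_review_at:
            queues["full_review"].append(obj.name)
        else:
            queues["manual_rewrite"].append(obj.name)
    return queues


converted = [
    ConvertedObject("calc_interest", 0.95),
    ConvertedObject("audit_trigger", 0.70),
    ConvertedObject("legacy_batch_pkg", 0.30),
]
print(triage(converted))
```

Even the highest-confidence objects land in a spot-check queue rather than being accepted automatically, which keeps the engineer in the reviewer role described above.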

How AWS DMS facilitates database migration

AWS DMS is designed to minimise disruption and simplify database migration. It supports both one-time migrations and ongoing replication, allowing for a seamless cutover with minimal downtime. The service connects to the source and target databases, extracts data, applies necessary transformations, and loads the data into the target database. Key features include:

- Support for a wide range of database engines, both on-premises and in the cloud

- Continuous data replication for near-zero downtime migrations

- Automatic handling of schema and data type mapping

- Integration with AWS SCT for complex schema conversions in heterogeneous migrations

By leveraging these features, organisations can migrate large and complex databases with confidence, reducing the risk of data loss and service interruption.
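As an illustration of how a task combining a one-time load with ongoing replication is described, the sketch below assembles the request parameters for a DMS replication task. It only builds the dictionary and does not call AWS; the ARNs, task name, and schema name are placeholders, and `build_task_request` is a hypothetical helper.

```python
import json


def build_task_request(task_id, source_arn, target_arn, instance_arn,
                       schema, ongoing=True):
    """Assemble parameters for a DMS replication task covering one schema."""
    table_mappings = {
        "rules": [{
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-schema",
            "object-locator": {"schema-name": schema, "table-name": "%"},
            "rule-action": "include",
        }]
    }
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        # Full load followed by change data capture, or full load only.
        "MigrationType": "full-load-and-cdc" if ongoing else "full-load",
        "TableMappings": json.dumps(table_mappings),
    }


request = build_task_request(
    "sales-migration",
    "arn:aws:dms:region:account:endpoint:SOURCE-PLACEHOLDER",
    "arn:aws:dms:region:account:endpoint:TARGET-PLACEHOLDER",
    "arn:aws:dms:region:account:rep:INSTANCE-PLACEHOLDER",
    schema="sales",
)
print(json.dumps(request, indent=2))
```

Assuming boto3 is installed and credentials are configured, a dictionary like this could be passed to `boto3.client("dms").create_replication_task(**request)`; the endpoints and replication instance would need to exist first.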

Generative AI for schema conversion in DMS

Generative AI introduces a new paradigm in database migration by automating and intelligently enhancing the schema conversion process. Traditionally, schema conversion—especially in heterogeneous migrations—has been a manual, time-consuming task. Generative AI leverages machine learning models trained on vast amounts of database schemas and migration patterns to analyse, interpret, and generate optimal schema mappings between source and target databases.

During a heterogeneous migration, such as Oracle to Aurora PostgreSQL, generative AI embedded within AWS DMS or SCT evaluates the source schema, understands complex relationships and procedural logic, and automatically suggests or applies conversion scripts. Oracle procedural code (PL/SQL), for instance, may be converted into equivalent PostgreSQL functions, with AI handling nuances such as exception handling or data type differences. This not only accelerates the migration process but also reduces errors that manual conversion might introduce.

Advantages of generative AI features in DMS

Improved accuracy

AI models reduce human error by automatically identifying and converting compatible schema elements, ensuring data integrity throughout the migration.

Faster migration

Automated schema conversion and mapping drastically cut down the time required for migration, allowing organisations to modernise their infrastructure swiftly.

Reduced manual effort

Generative AI takes on the heavy lifting of complex conversions, freeing database engineers to focus on validation and optimisation rather than repetitive tasks.

Enhanced compatibility

AI-driven tools can learn from previous migrations, continuously improving conversion techniques and ensuring seamless compatibility between diverse database technologies.

These advantages combine to make generative AI an indispensable ally in large-scale database migrations, especially for organisations with limited resources or tight timelines.

AI infusion in one-time data migration and real-time data synchronisation in AWS DMS

The application of AI in AWS DMS differs significantly depending on whether the task is a one-time ‘full load’ or continuous ‘change data capture’ (CDC). Understanding this distinction is crucial for architects designing resilient data pipelines.

In one-time data migration (full load), the role of AI is primarily preparatory and structural. Before a single byte of data moves, AI-driven assessment tools analyse the source database to map data types to the target environment. This is where the ‘schema conversion’ capabilities shine. The AI evaluates the data distribution to recommend optimal partitioning keys for the target database. For example, if migrating to a distributed data store, the AI can analyse query patterns and data cardinality to suggest a shard key that prevents ‘hot partitions’—a common issue that usually requires weeks of manual analysis to identify. Additionally, during the bulk load, AI algorithms can dynamically adjust the detailed settings of the migration task (such as commit rates and batch sizes) based on the observed throughput and resource utilisation of the source and target, ensuring the migration completes as fast as possible without crashing the production source database.
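The shard key analysis can be approximated with a simple skew measure: hash each candidate key value into a partition and check how much of the data lands in the busiest one. This is a minimal sketch with invented sample data and a hypothetical helper, `partition_skew`; it considers only data distribution, not query patterns.

```python
import hashlib
from collections import Counter


def partition_skew(values, partitions=4):
    """Return the share of rows that falls into the most loaded partition."""
    def bucket(value):
        digest = hashlib.md5(str(value).encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % partitions

    counts = Counter(bucket(value) for value in values)
    return max(counts.values()) / len(values)


# Hypothetical candidate keys for a 1,000-row table.
country_codes = ["IN"] * 900 + ["US"] * 60 + ["UK"] * 40
customer_ids = list(range(1000))

print("country_code skew:", partition_skew(country_codes))
print("customer_id skew:", partition_skew(customer_ids))
```

With four partitions, a perfectly even key gives a skew of 0.25. A low-cardinality key such as the country code above pushes at least 90% of rows into one partition, which is the ‘hot partition’ pattern to avoid.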

In real-time data synchronisation (CDC), once the initial load is complete, the focus shifts to keeping the databases in sync. Here, AI infusion moves into the realm of operational intelligence and anomaly detection. In a standard replication scenario, network glitches or transaction log spikes can cause ‘replication lag’. AI models trained on historical metric data can predict these lags before they impact the business. For instance, if the AI detects a specific pattern of write-heavy transactions on the source that historically causes delays, it can pre-emptively auto-scale the DMS replication instance to handle the incoming surge.
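A very simple stand-in for such a predictive model is a linear trend fitted to recent latency samples. AWS DMS publishes CDC latency metrics such as `CDCLatencySource` and `CDCLatencyTarget` to Amazon CloudWatch; the sample values, the threshold, and the scaling decision in this sketch are assumptions made for illustration.

```python
def predict_lag(samples, steps_ahead):
    """Extrapolate latency with a least-squares line through equally spaced samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples)) / denominator
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)


def should_scale_up(samples, steps_ahead=2, threshold_seconds=45):
    """Flag the replication instance for scaling if projected lag crosses the threshold."""
    return predict_lag(samples, steps_ahead) > threshold_seconds


latency_seconds = [10, 20, 30, 40]  # hypothetical readings, one per interval
print(predict_lag(latency_seconds, 2), should_scale_up(latency_seconds))
```

A production system would use a richer model and account for seasonality, but the principle is the same: act on the projected lag rather than waiting for the threshold to be breached.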

As cloud adoption accelerates across India and globally, the need for efficient, reliable database migration continues to grow. AWS DMS, empowered by generative AI, offers a comprehensive solution for both homogeneous and heterogeneous migrations, addressing the challenges of schema conversion with intelligence and speed. The integration of generative AI not only improves accuracy and reduces manual effort but also lays the foundation for future innovations in automated database management. Cloud professionals, IT managers, and database engineers can look forward to a future where database migrations are smarter, faster, and more secure than ever before. Ultimately, the integration of generative AI into AWS DMS does more than just move data; it acts as a catalyst for the AI-driven enterprise.