MIT CSAIL’s open-source Recursive Language Models let LLMs reason over more than 10 million tokens without retraining, tackling long-context AI through systems-level design rather than bigger proprietary models.

MIT CSAIL has introduced Recursive Language Models (RLMs), an open-source inference framework that lets large language models process more than 10 million tokens without retraining and without context rot, the drop in output quality that sets in as inputs grow longer. The approach moves long-context progress away from scaling proprietary models and toward open systems design, and it works with both frontier and open-source models.

Instead of forcing long prompts into a fixed context window, RLMs treat the prompt as an external environment. Models programmatically inspect, decompose and recursively reason over massive text corpora, reframing long-context reasoning as a systems problem rather than a model architecture problem.

Designed as a wrapper around existing LLMs, RLMs function as a drop-in replacement for standard API calls, making them immediately usable across enterprise applications without retraining. Target use cases include codebase analysis, legal and compliance review, multi-step reasoning and retrieval over massive documents.

Technically, the long input is stored as a variable in a Python REPL (read-eval-print loop) environment. The LLM writes code to explore the data and pulls only the relevant snippets into its active context. The design draws on classical out-of-core algorithms, which process datasets larger than main memory by loading data on demand.
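A minimal sketch of that idea in plain Python, assuming a simple in-process REPL. The class and method names (`PromptREPL`, `run`) and the variable name `context` are hypothetical and not taken from the RLM codebase. The long text sits in a namespace dictionary, model-written code runs against it with `exec`, and only a truncated copy of what that code prints is returned to the model. The model's context window plays the role that RAM plays in out-of-core processing.

```python
import contextlib
import io


class PromptREPL:
    """Hypothetical sketch: keep a long prompt outside the model's context
    window as a Python variable and run model-written code against it."""

    def __init__(self, long_text: str, max_output_chars: int = 2000) -> None:
        # The full text lives in the REPL namespace, not in the model's context.
        self.namespace: dict = {"context": long_text}
        # Cap on how much output flows back to the model per step.
        self.max_output_chars = max_output_chars

    def run(self, code: str) -> str:
        # Execute code the model wrote; variables persist across calls.
        # NOTE: a real deployment would sandbox this; exec is unsafe on untrusted code.
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(code, self.namespace)
        # Only this truncated printout is loaded into the model's active context.
        return buffer.getvalue()[: self.max_output_chars]


# Example: the model probes a large corpus without ever reading all of it.
corpus = "\n".join(f"record {i}: routine entry" for i in range(200_000))
corpus += "\nrecord 200000: contract clause 7.2 waives liability"
repl = PromptREPL(corpus)
print(repl.run("print(len(context.splitlines()))"))
print(repl.run("hits = [l for l in context.splitlines() if 'clause' in l]\nprint(hits)"))
```

Each `run` call corresponds to one step in which the model issues code and reads back a small result, so the corpus itself never has to fit in the prompt.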

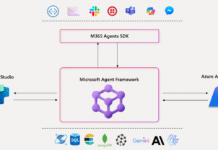

RLMs use a two-agent architecture: a root language model plans and orchestrates the work, while a cheaper sub-model is called recursively to process the snippets the root extracts. On the BrowseComp-Plus benchmark, RLMs reached 91.33% accuracy at multi-million-token scale, where the base models failed entirely.
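A rough sketch of the root/sub-model split, under loud assumptions: both models are stubbed as plain Python functions with a hypothetical `(question, text) -> str` signature, and the decomposition is a fixed recursive map-and-condense loop. In an actual RLM the root model decides how to decompose the input by writing code in the REPL; here that choice is hard-coded to keep the example short. The names `split_lines`, `condense`, `rlm_answer`, `toy_sub_lm` and `toy_root_lm` are illustrative only.

```python
from typing import Callable, List

# Hypothetical signature for both models: (question, text) -> answer text.
LM = Callable[[str, str], str]


def split_lines(text: str, max_chars: int) -> List[str]:
    """Group whole lines into chunks of roughly max_chars characters."""
    chunks: List[str] = []
    current: List[str] = []
    size = 0
    for line in text.splitlines():
        if current and size + len(line) + 1 > max_chars:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(line)
        size += len(line) + 1
    if current:
        chunks.append("\n".join(current))
    return chunks


def condense(question: str, text: str, sub_lm: LM, chunk_chars: int,
             depth: int = 0, max_depth: int = 3) -> str:
    """Recursively shrink text with the cheap sub-model until it fits."""
    if len(text) <= chunk_chars or depth >= max_depth:
        return text
    partials = [sub_lm(question, chunk) for chunk in split_lines(text, chunk_chars)]
    notes = "\n".join(p for p in partials if p)
    if len(notes) >= len(text):  # sub-model made no progress; stop recursing
        return notes
    return condense(question, notes, sub_lm, chunk_chars, depth + 1, max_depth)


def rlm_answer(question: str, text: str, root_lm: LM, sub_lm: LM,
               chunk_chars: int = 1000) -> str:
    """Root model answers from the condensed notes, never the raw text."""
    return root_lm(question, condense(question, text, sub_lm, chunk_chars))


# Toy stand-ins so the sketch runs without any API access.
def toy_sub_lm(question: str, chunk: str) -> str:
    # Pretend "cheap model": keep only lines that mention revenue.
    return "\n".join(line for line in chunk.splitlines() if "revenue" in line)


def toy_root_lm(question: str, notes: str) -> str:
    # Pretend "root model": summarize what survived condensation.
    return f"{len(notes.splitlines())} relevant lines"


doc = "\n".join(
    f"row {i}: revenue up" if i % 500 == 0 else f"row {i}: noise"
    for i in range(10_000)
)
print(rlm_answer("Where is revenue mentioned?", doc, toy_root_lm, toy_sub_lm))
```

The `max_depth` cap and the no-progress check keep the recursion from looping when the sub-model fails to shrink its input. Swapping the toy functions for real model calls would leave the control flow unchanged, with the expensive root model seeing only the condensed notes.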

The framework is available on GitHub, positioning RLMs as an open experimentation layer that complements retrieval-based methods like RAG rather than replacing them.