Cloud-scale AI has found its way into the tiniest chips, but the real challenge lies not in training models but in running them on devices that cannot lean on the cloud. Here is how an open-source shift led by Meta, Arm, and Alif Semiconductor is redefining what is possible at the edge.

AI is going small, very small. What once required racks of GPUs (graphics processing units) now fits inside a smartwatch or an industrial sensor. But as AI moves from the cloud to the edge, a fundamental problem emerges: frameworks like PyTorch, built for powerful data centres, struggle to operate within the tight power, memory, and latency limits of embedded systems.

That is where ExecuTorch, an open-source runtime developed by Meta and now adopted by Arm and Alif Semiconductor, steps in. As Alif’s Henrik Flodell explained in conversation with Akanksha Sondhi Gaur from Electronics For You, “The real challenge is not training models, it is running them where there is no room, power, or cloud connection to spare.”

Edge devices such as wearables, sensors, and IoT nodes cannot afford constant cloud communication. Sending raw data to remote servers drains batteries, adds latency, and raises both privacy risks and storage costs. When a branch moves in front of your security camera, you do not need to upload that clip. You just want the system to recognise what matters and act locally.

Until recently, microcontrollers simply could not handle AI workloads of that complexity. PyTorch models were too large, and too difficult to quantise, for the small, integer-based processors typical of embedded hardware.

ExecuTorch changes this. It converts PyTorch-trained models into lightweight formats that can run directly on Arm Ethos-U55 and U85 NPUs (neural processing units), the hardware accelerators integrated into Alif’s Ensemble MCUs. This means convolutional, recurrent, and even transformer networks can now execute locally with minimal loss in accuracy.

In Flodell’s words, “It is now straightforward to take a model built for a GPU server and deploy it on a constrained system without rewriting the architecture or sacrificing precision.”
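To make the quantisation step mentioned above more concrete, here is a minimal sketch of generic 8-bit affine quantisation in plain Python. It is not ExecuTorch's or Arm's actual quantiser; the function names and the sample weights are invented for illustration. It only shows why mapping floating-point weights onto int8 values costs very little accuracy: the round-trip error stays within half a quantisation step.

```python
def quantise_int8(values):
    """Map floats to int8 with an affine (scale + zero-point) scheme."""
    # Always include 0.0 in the range so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are 0
    zero_point = round(-128 - lo / scale)
    quantised = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantised, scale, zero_point


def dequantise(quantised, scale, zero_point):
    """Recover approximate floats from int8 values."""
    return [(q - zero_point) * scale for q in quantised]


# Hypothetical weights, chosen only for illustration.
weights = [-0.82, -0.11, 0.0, 0.37, 1.25]
q, scale, zero_point = quantise_int8(weights)
restored = dequantise(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("worst-case round-trip error:", max_error)
```

Each weight now occupies one byte instead of four, and integer-only hardware can operate on it directly; production toolchains typically add calibration data and per-channel scales on top of this basic idea.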

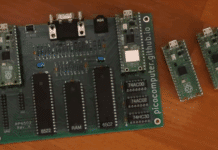

Alif’s split-silicon architecture divides workloads between two domains: a low-power side that continuously collects sensor data, and a high-performance side that wakes up only to process critical information. The result: near-real-time performance at ultra-low power. This enables always-on intelligence in devices like health trackers or smart sensors that must process data continuously without overheating or draining batteries.
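The division of labour between the two domains can be sketched as a simple gating loop. The code below is a toy simulation, not Alif firmware: the threshold, the `classify` stand-in, and every power figure are hypothetical numbers picked for illustration, and it assumes each sample occupies an equal time slot with the high-performance side awake for one full slot per wake-up.

```python
from dataclasses import dataclass


@dataclass
class PowerProfile:
    low_power_mw: float  # always-on domain draw (hypothetical figure)
    high_perf_mw: float  # extra draw while the fast domain is awake (hypothetical)


def run_always_on(samples, wake_threshold, classify):
    """Low-power loop: wake the fast domain only for samples that look interesting."""
    wakeups = 0
    events = []
    for index, sample in enumerate(samples):
        if abs(sample) < wake_threshold:
            continue  # nothing of interest, stay in the low-power domain
        wakeups += 1  # hand the sample to the high-performance domain
        label = classify(sample)
        if label is not None:
            events.append((index, label))
    return wakeups, events


def average_power_mw(profile, wakeups, total_samples):
    """Average draw = baseline + (fraction of time awake) * extra draw."""
    duty_cycle = wakeups / total_samples
    return profile.low_power_mw + duty_cycle * profile.high_perf_mw


def classify(sample):
    """Stand-in for an NPU-accelerated model; returns a label or None."""
    return "person" if sample > 0.8 else None


samples = [0.02, 0.05, 0.6, 0.03, 0.9, 0.01, 0.04, 0.95, 0.02, 0.03]
wakeups, events = run_always_on(samples, wake_threshold=0.5, classify=classify)
profile = PowerProfile(low_power_mw=1.0, high_perf_mw=50.0)
avg = average_power_mw(profile, wakeups, len(samples))

print("wake-ups:", wakeups, "events:", events)
print("average power (mW):", round(avg, 2))
```

With these made-up figures the fast domain is awake for three of ten slots, so the average draw sits well below what an always-awake high-performance core would need; the rarer the interesting events, the closer the average falls to the low-power baseline.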

Perhaps most striking is Alif’s claim to be the first to offer full hardware acceleration for transformer networks, the same neural architecture driving today’s generative AI. This allows microcontrollers to handle workloads previously reserved for servers: speech-to-text transcription, context-aware assistants, and even story generation from visual input. The company demonstrated two examples:

- A speech-to-text system using a Conformer network running fully on a microcontroller.

- A camera-based storytelling demo using a miniaturised Llama 2 model that generated short narratives about what the camera ‘saw’.

Open-source collaboration is the only way to keep up with AI’s relentless pace. Proprietary runtimes cannot evolve fast enough. By using open frameworks like PyTorch and ExecuTorch, you get a living ecosystem, updated weekly by thousands of contributors. This partnership between Meta, Arm, and Alif marks a broader shift: AI is no longer a cloud privilege; it is becoming an embedded standard. Flodell predicts that “AI acceleration will soon be as standard in MCUs as sensor integration is today.”

The frameworks are ready. The chips are ready. But is the embedded ecosystem ready to catch up? Stay tuned for the full interview!