As systems grow in complexity and data sizes become unmanageable, it is AI-powered edge intelligence that will help IoT devices perform efficiently. And this technology, not surprisingly, is evolving with the help of a range of open source tools.

By 2030, the global edge AI market is projected to exceed US$ 200 billion, driven by one fundamental constraint: latency. When a manufacturing robot needs to detect a defect, an autonomous vehicle must avoid a collision, or a medical device requires real-time patient monitoring, sending data to the cloud and waiting for a response isn’t just inefficient—it’s dangerous.

Yet, despite this explosive growth, most organisations struggle with a critical question: How do we build production-ready, AI-powered IoT systems that are both cost-effective and secure?

The answer lies in open source. Let’s explore proven architectural patterns, open source tools, and real-world implementation strategies that bridge the gap between proof-of-concept and production deployment.

The cloud bottleneck

Traditional IoT architectures relied heavily on sending raw sensor data to centralised cloud infrastructure for processing and decision-making. This model performed adequately when handling periodic updates and non-time-critical applications. However, as IoT deployments scale and use cases demand real-time responsiveness, the limitations of cloud-first approaches become significant barriers to innovation and efficiency, as Table 1 shows.

| Dimension | Cloud-centric architecture | Edge-native architecture |
| --- | --- | --- |
| Response latency | 100-500ms round-trip time; unsuitable for real-time applications requiring sub-10ms response | 1-10ms response times; enables real-time decision-making at the source |
| Bandwidth economics | Continuous streaming of raw data can cost $40+ per device monthly for high-resolution sensors; costs scale linearly with device count | 90% bandwidth reduction through local processing and selective data transmission; costs remain relatively flat as fleet grows |
| Data privacy and compliance | Sensitive data must traverse public networks and reside in third-party infrastructure; complex compliance requirements | Data processed and retained locally; sensitive information never leaves premises; simplified compliance posture |
| Operational resilience | Complete dependency on network connectivity and cloud availability; single point of failure | Autonomous operation during network outages; graceful degradation; continues functioning independently |
| Scalability economics | Infrastructure costs grow proportionally with data volume and processing needs; potential for runaway costs | Processing is distributed across edge devices; cloud costs grow sub-linearly; predictable cost structure |
| Decision making | Centralised analysis enables global optimisation but requires complete data visibility | Local autonomy with federated intelligence; can implement hierarchical decision-making |
| Initial complexity | Lower barrier to entry; well-understood patterns and abundant expertise | Higher initial architectural complexity requires distributed systems expertise |
| Maintenance | Centralised updates and monitoring; simpler operational model | Distributed device management requires robust OTA update mechanisms |

Table 1: Cloud vs edge: A comparative analysis

The comparison reveals that edge intelligence isn’t simply ‘better’ than cloud computing—rather, it addresses a fundamentally different set of requirements. The optimal architecture often employs a hybrid approach, leveraging edge computing for real-time, latency-sensitive operations while utilising cloud resources for long-term analytics, model training, and enterprise-wide insights. Understanding when and where to apply each paradigm becomes the critical architectural decision that separates successful deployments from failed experiments.

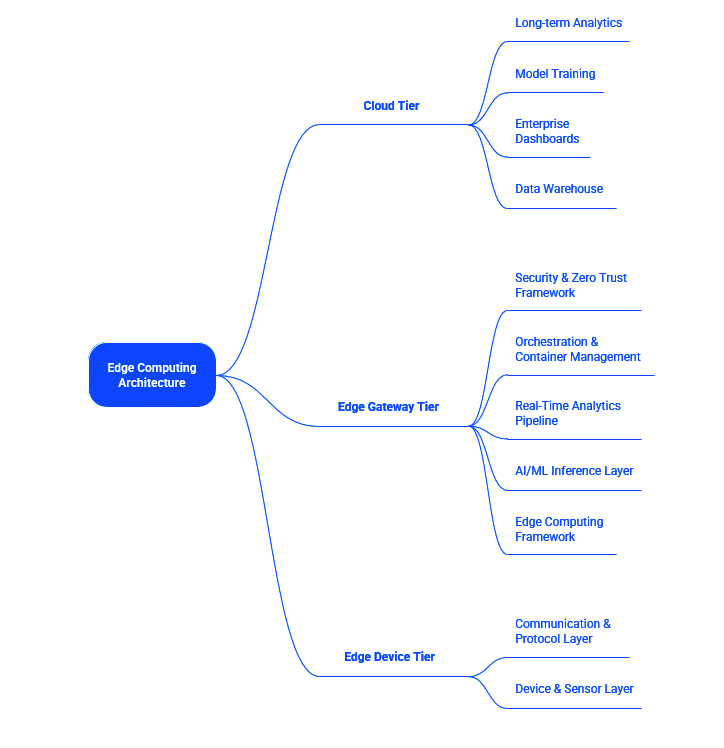

The architecture blueprint

Designing a production-grade edge AI system requires careful orchestration of multiple technology layers, each serving distinct functions while maintaining seamless integration. The architecture must balance competing concerns: computational efficiency on resource-constrained devices, robust communication in unreliable network conditions, security against evolving threats, and operational manageability across potentially thousands of distributed nodes. The following architectural framework represents battle-tested patterns that have proven successful in enterprise deployments across various industries, including manufacturing, retail, healthcare, and smart city applications.

Layer 1: Device and sensor layer – the physical foundation

The device and sensor layer is the backbone of an edge AI system, integrating digital intelligence with the physical world. It includes sensors to capture environmental data, edge computing hardware for processing, and the operating system for runtime. Hardware choices have a significant impact on power consumption, processing capabilities, security, and operational costs. Common platforms include the Raspberry Pi 4 for general computing, the NVIDIA Jetson Nano for GPU-accelerated applications, the ESP32 for low-power wireless use, and the Arduino for basic sensor tasks.

Layer 2: Communication and protocol layer – the nervous system

This layer functions as the nervous system of edge AI, enabling data flow between devices, gateways, and the cloud. MQTT is the de facto standard for IoT messaging, with Eclipse Mosquitto as a robust broker. Its publish-subscribe model decouples senders from receivers, which simplifies connections, while its quality-of-service levels provide reliable message delivery. For resource-constrained devices, CoAP provides a lightweight, UDP-based alternative.
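To make the publish-subscribe idea concrete, here is a minimal sketch of how an MQTT-style broker decides which subscribers receive a message. It is an in-memory illustration only, not Mosquitto's implementation or a real client API; the class name `InMemoryBroker` and the topic names are hypothetical. It follows the MQTT wildcard convention, where `+` matches exactly one topic level and `#` matches all remaining levels, and it deliberately ignores QoS, retained messages and `$`-prefixed system topics.

```python
from typing import Callable, List, Tuple


def topic_matches(pattern: str, topic: str) -> bool:
    """Return True if an MQTT-style subscription pattern matches a topic."""
    p_levels = pattern.split("/")
    t_levels = topic.split("/")
    for i, p in enumerate(p_levels):
        if p == "#":
            # '#' is only valid as the last level and matches everything below.
            return i == len(p_levels) - 1
        if i >= len(t_levels):
            return False
        if p != "+" and p != t_levels[i]:
            return False
    return len(p_levels) == len(t_levels)


class InMemoryBroker:
    """Toy broker: publishers and subscribers only share topic names."""

    def __init__(self) -> None:
        self._subscriptions: List[Tuple[str, Callable[[str, str], None]]] = []

    def subscribe(self, pattern: str, callback: Callable[[str, str], None]) -> None:
        self._subscriptions.append((pattern, callback))

    def publish(self, topic: str, payload: str) -> int:
        """Deliver the payload to every matching subscriber; return the count."""
        delivered = 0
        for pattern, callback in self._subscriptions:
            if topic_matches(pattern, topic):
                callback(topic, payload)
                delivered += 1
        return delivered


# Hypothetical topic hierarchy: store/<store-id>/<subsystem>/...
broker = InMemoryBroker()
cold_chain_events: List[Tuple[str, str]] = []
broker.subscribe("store/+/coldchain/#",
                 lambda topic, payload: cold_chain_events.append((topic, payload)))

broker.publish("store/042/coldchain/freezer-3/temp", "-12.5")  # delivered
broker.publish("store/042/shelf/a1/weight", "3.2")             # no subscriber
print(cold_chain_events)
```

The publisher never needs to know who is listening, which is what lets new consumers (a dashboard, an alerting service) be added without touching device firmware.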

Layer 3: Edge computing framework – the coordination layer

EdgeX Foundry addresses the complexity of IoT by providing a microservices framework that offers vendor-neutral abstractions for connectivity, data normalisation, and data export. Its architecture allows components to be swapped without redesigning the entire system. Device services abstract diverse protocols, while the core data service ingests telemetry for other services.

Layer 4: AI/ML inference layer – the intelligence core

The AI inference layer evolves edge devices from simple data collectors into intelligent decision-makers. TensorFlow Lite is the leading framework for edge AI, optimising model sizes and accelerating inference through techniques like quantization and pruning. TensorFlow Lite for Microcontrollers enables complex neural networks on devices with under 50 kilobytes of memory, making it ideal for battery-powered sensors and wearables.
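The core idea behind quantization can be sketched in a few lines. The example below is a simplified, per-tensor symmetric int8 scheme in plain Python, applied to a small hypothetical weight list. It is not TensorFlow Lite's converter, which handles many more details, but it shows why storing weights as 8-bit integers takes a quarter of the space of 32-bit floats at the cost of a small, bounded rounding error.

```python
from array import array
from typing import List, Tuple


def quantize_symmetric_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale.

    Assumes a non-empty list of weights.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.82, -1.27, 0.003, 0.41, -0.66, 1.05]
quantized, scale = quantize_symmetric_int8(weights)
restored = dequantize(quantized, scale)

float32_bytes = len(array("f", weights).tobytes())  # C float, typically 4 bytes each
int8_bytes = len(array("b", quantized).tobytes())   # signed char, 1 byte each
worst_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"{float32_bytes} bytes as float32, {int8_bytes} bytes as int8")
print(f"worst rounding error: {worst_error:.5f} (scale = {scale:.5f})")
```

The rounding error per weight is at most half the scale, so tensors with a narrow value range lose very little precision, while a single large outlier stretches the scale and hurts every other weight.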

Layer 5: Real-time analytics pipeline – the intelligence amplifier

The analytics pipeline aggregates insights across edge devices, revealing patterns not visible in isolated nodes. Apache Kafka acts as a distributed commit log, while Spark Streaming processes events in micro-batches, enabling fleet-wide anomaly detection and adaptive control strategies. Alternative architectures, such as InfluxDB and Grafana, focus on time-series data, optimising storage and queries for metrics-driven applications.

Layer 6: Orchestration and container management – the operational foundation

K3s simplifies Kubernetes for edge environments, offering a lightweight footprint that enables the management of containerised workloads on resource-constrained devices. It ensures consistent deployments across diverse infrastructures, supports reliable rollouts and rollbacks, and manages the complexities of distributed systems. Service mesh technologies, such as Linkerd, enhance security and observability. Container orchestration allows sophisticated deployment strategies, ensuring systems run reliably over time with minimal intervention.

Layer 7: Security framework – the trust foundation

Edge deployments face unique security challenges due to physical accessibility and hostile network environments. Zero trust architecture and robust security measures are essential to protect these systems effectively. Network segmentation isolates device networks from enterprise networks and the public internet, utilising secure tunnelling protocols like WireGuard to provide encrypted communication channels with minimal overhead.

This layered architecture enables teams to work on different layers independently while maintaining well-defined interfaces. Open source components at every layer eliminate vendor lock-in while providing production-grade capabilities developed and validated by global communities.

However, this sophistication comes with complexity. The learning curve spans multiple domains, including embedded systems and networking, as well as distributed systems and machine learning. Integration challenges arise at layer boundaries, requiring careful attention to data formats and error handling.

Real-world application: Intelligent retail supply chain

The retail industry faces relentless pressure to optimise inventory, reduce waste, and deliver seamless customer experiences while operating on razor-thin margins. Traditional supply chain management relies on batch processing, periodic inventory counts, and reactive responses to stockouts or overstocking situations. Edge AI transforms this reactive model into a proactive, continuously optimising system that anticipates problems before they impact operations and customers.

The challenge: Complexity at scale

Modern retail supply chains operate with extraordinary complexity. Distribution centres process thousands of SKUs daily, each with unique handling requirements, shelf-life constraints, and demand patterns. Temperature-sensitive products, such as fresh produce, dairy, and frozen foods, require constant monitoring to prevent spoilage. Transportation networks move products across varying climate conditions, with refrigerated trucks facing compressor failures, door seal degradation, and inadequate airflow that create hot spots and cold spots within individual trailers.

Store-level operations present their own challenges. Inventory accuracy has a direct impact on both customer satisfaction and capital efficiency; however, physical inventory counts are labour-intensive and error-prone. Stockouts drive customers to competitors while overstocking ties up capital and leads to markdowns or waste. Theft and shrinkage drain profitability, with traditional loss prevention focused on detection rather than prevention. Customer behaviour analytics remain shallow, based on point-of-sale data that reveals what people bought but not what they considered, what they couldn’t find, or how they navigated the store.

Cloud-centric approaches to these challenges face fundamental limitations. Sending every RFID read event, every computer vision frame, and every sensor measurement to the cloud creates untenable bandwidth costs and latency that prevent real-time intervention. A single distribution centre with a thousand cameras generating standard-definition video at 15 frames per second produces over 200 gigabytes per hour of raw data. Multiply across hundreds of locations and the costs become prohibitive.
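A quick back-of-the-envelope calculation shows where a figure of this size comes from. No bitrate or resolution is stated above, so the numbers below are assumptions: 0.5 Mbit/s per camera for a compressed standard-definition stream, and 640x480 pixels at 3 bytes per pixel for uncompressed frames.

```python
def fleet_gb_per_hour(cameras: int, bytes_per_second_each: float) -> float:
    """Total data volume in gigabytes (10^9 bytes) per hour for a camera fleet."""
    return cameras * bytes_per_second_each * 3600 / 1e9


CAMERAS = 1000  # from the text
FPS = 15        # from the text

# Assumption: compressed SD stream at 0.5 Mbit/s per camera.
compressed_bytes_per_s = 0.5 * 1_000_000 / 8
# Assumption: uncompressed 640x480 frames at 3 bytes per pixel.
uncompressed_bytes_per_s = 640 * 480 * 3 * FPS

compressed_total = fleet_gb_per_hour(CAMERAS, compressed_bytes_per_s)
uncompressed_total = fleet_gb_per_hour(CAMERAS, uncompressed_bytes_per_s)

print(f"compressed:   {compressed_total:,.0f} GB/hour")
print(f"uncompressed: {uncompressed_total:,.0f} GB/hour")
```

Under these assumptions the compressed case comes to 225 GB per hour, in line with the figure above, while truly uncompressed frames would be close to 50 TB per hour. Either way, the volume scales linearly with the number of cameras and sites.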

Edge AI architecture

The intelligent retail supply chain deploys edge computing infrastructure at every level. Distribution centres receive powerful edge gateway servers running K3s clusters, hosting computer vision models for visual inspection, TensorFlow Lite inference engines for quality prediction, and EdgeX Foundry for device integration. Refrigerated transport vehicles carry ruggedized edge computers with cellular connectivity, running anomaly detection models that predict equipment failures hours before breakdown. Individual store locations deploy edge gateways coordinating networks of smart shelves, computer vision cameras, and environmental sensors.

At the device level, smart shelves equipped with weight sensors and RFID readers continuously track inventory levels and product movement patterns. Computer vision cameras monitor produce quality, detecting indicators of bruising, discolouration, or spoilage. Environmental sensors throughout the cold chain track not just temperature and humidity but also vibration, light exposure, and gas composition, building a rich context for predictive quality models. Each device runs lightweight AI models that perform local inference, publishing only actionable insights and anomalies, rather than raw sensor streams.
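The "publish only what matters" pattern can be illustrated with a very simple detector. The sketch below stands in for the lightweight on-device model with a rolling z-score check; a real deployment would more likely use a trained model, and the class name, window size, threshold and temperature values are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, List


class EdgeAnomalyFilter:
    """Flags readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 20, threshold: float = 3.0,
                 min_samples: int = 5) -> None:
        self.baseline: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def should_publish(self, value: float) -> bool:
        """Return True if `value` is anomalous and worth sending upstream."""
        anomalous = False
        if len(self.baseline) >= self.min_samples:
            mu = mean(self.baseline)
            sigma = pstdev(self.baseline)
            if sigma > 0:
                anomalous = abs(value - mu) > self.threshold * sigma
            else:
                anomalous = value != mu
        if not anomalous:
            # Only normal readings update the baseline, so one spike does not
            # widen the threshold. A lasting shift would need extra handling.
            self.baseline.append(value)
        return anomalous


# Hypothetical freezer temperatures in degrees Celsius.
readings = [-18.0, -18.1, -17.9, -18.0, -18.1, -18.0, -11.5, -18.0]
sensor = EdgeAnomalyFilter()
published: List[float] = [r for r in readings if sensor.should_publish(r)]
print(published)
```

Here only the single excursion to -11.5 leaves the device; the seven routine readings stay local, which is the mechanism behind the bandwidth savings discussed earlier.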

The communication layer implements MQTT for reliable, bandwidth-efficient telemetry with quality of service guarantees, ensuring that critical alerts are never lost. Devices publish to hierarchical topics, enabling flexible subscription patterns. Store-level gateways aggregate local data and forward summary statistics and exceptions to regional and enterprise tiers. The protocol layer implements store-and-forward logic, buffering data during network outages and intelligently prioritising transmission when connectivity is restored, ensuring that high-priority alerts always reach their destination, even under adverse conditions.
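The store-and-forward behaviour described above can be sketched as a bounded priority buffer. This is an illustration of the logic only, not part of any MQTT client library; the class name, the priority numbering (lower means more urgent) and the eviction policy are assumptions.

```python
import heapq
import itertools
from typing import Callable, List, Tuple


class StoreAndForwardBuffer:
    """Buffers messages while offline and sends the most urgent ones first."""

    def __init__(self, capacity: int = 1000) -> None:
        self._heap: List[Tuple[int, int, str]] = []  # (priority, sequence, message)
        self._sequence = itertools.count()
        self.capacity = capacity

    def __len__(self) -> int:
        return len(self._heap)

    def enqueue(self, message: str, priority: int) -> None:
        """Store a message; lower priority numbers are more urgent."""
        heapq.heappush(self._heap, (priority, next(self._sequence), message))
        if len(self._heap) > self.capacity:
            # Assumed policy: evict the least urgent, most recently added entry.
            self._heap.remove(max(self._heap))
            heapq.heapify(self._heap)

    def flush(self, send: Callable[[str], bool]) -> int:
        """Send buffered messages in priority order until `send` fails."""
        sent = 0
        while self._heap:
            message = self._heap[0][2]
            if not send(message):
                break  # link dropped again; keep the rest for later
            heapq.heappop(self._heap)
            sent += 1
        return sent


# Hypothetical messages queued during an outage.
buffer = StoreAndForwardBuffer(capacity=3)
buffer.enqueue("shelf weight summary", priority=5)
buffer.enqueue("freezer temperature excursion", priority=0)
buffer.enqueue("foot traffic count", priority=5)
buffer.enqueue("cold room door left open", priority=1)  # evicts a priority-5 entry

delivered: List[str] = []


def send(message: str) -> bool:
    delivered.append(message)
    return True  # a real sender would return False when the link is down


buffer.flush(send)
print(delivered)
```

A message is removed from the buffer only after `send` reports success, so a connection that drops mid-flush loses nothing that was still queued.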

EdgeX Foundry at the gateway tier integrates diverse devices through protocol-specific device services, normalising data into consistent formats and providing unified access for higher-level services. The rules engine implements local business logic, automatically triggering replenishment orders when inventory falls below safety stock levels, alerting staff when temperature excursions threaten product quality, and coordinating responses across multiple systems. Application services filter and transform data for export to enterprise systems, implementing rate limiting, privacy filtering, and protocol translation.
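The kind of local business logic described here can be expressed as a handful of threshold rules. The sketch below is plain Python and does not use EdgeX Foundry's rules engine or its API; the data classes, field names, thresholds and action strings are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ShelfReading:
    sku: str
    units_on_hand: int
    temperature_c: float


@dataclass
class SkuPolicy:
    safety_stock: int         # reorder when stock falls below this level
    target_stock: int         # level to replenish up to
    max_temperature_c: float  # alert when the reading exceeds this


def evaluate(reading: ShelfReading, policy: SkuPolicy) -> List[str]:
    """Return the actions a gateway would trigger locally for one reading."""
    actions: List[str] = []
    if reading.units_on_hand < policy.safety_stock:
        quantity = policy.target_stock - reading.units_on_hand
        actions.append(f"REPLENISH {reading.sku} qty={quantity}")
    if reading.temperature_c > policy.max_temperature_c:
        actions.append(f"ALERT {reading.sku} temperature={reading.temperature_c:.1f}C")
    return actions


# Hypothetical chilled product with a 5 degree Celsius limit.
policy = SkuPolicy(safety_stock=10, target_stock=40, max_temperature_c=5.0)
actions = evaluate(ShelfReading("milk-1l", units_on_hand=4, temperature_c=6.5), policy)
print(actions)
```

Because the rules run on the gateway, both actions fire even when the link to the enterprise tier is down; only the resulting order or alert needs to be forwarded.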

The AI inference layer runs sophisticated models trained on historical data and continuously refined through federated learning. Quality prediction models analyse images of produce, predicting remaining shelf life and optimal markdown timing. Demand forecasting models combine historical sales patterns, local events, weather forecasts, and real-time foot traffic to optimise inventory positioning. Anomaly detection models identify unusual patterns suggesting equipment problems, security concerns, or process breakdowns.

The analytics pipeline aggregates insights across locations, identifying patterns invisible at individual sites. Apache Spark Streaming processes event streams, correlating inventory levels across distribution networks to optimise allocation. Time-series analysis identifies systematic problems with suppliers, transportation providers, or specific SKUs.

The intelligent retail supply chain delivers transformative improvements across multiple dimensions. Inventory accuracy improves from typical levels of 85% to 98%. This directly translates to reduced stockouts, with availability improving from 92% to 98%. This captures sales previously lost to out-of-stock conditions while simultaneously reducing safety stock requirements and capital tied up in inventory.

Waste reduction is equally dramatic, particularly for perishable products. Predictive quality models enable dynamic markdown timing, selling products while they are still fresh but approaching the end of their shelf life, rather than holding them for full price until spoilage forces disposal. Smart cold chain monitoring detects equipment problems and temperature excursions immediately, rather than discovering spoilage during routine inspections.

Operational efficiency gains are evident in reduced labour requirements for inventory management, faster replenishment cycles, and improved space utilisation. Staff members receive automated task lists that prioritise activities by impact and urgency, rather than following rigid schedules that may not align with actual needs. Computer vision enables contactless checkout, reducing labour costs. Predictive maintenance prevents equipment failures during peak periods.

Implementation realities and the lessons learned

Starting small with focused pilot projects in controlled environments enables teams to learn and refine their approaches before scaling them across entire operations. Initial projects often focus on single pain points, such as cold chain monitoring or inventory accuracy, for specific high-value or high-risk categories, demonstrating clear ROI and building organisational confidence before tackling more complex applications.

Data quality and model accuracy require sustained attention, as model performance degrades over time when conditions drift from those in the training data. Successful organisations implement continuous monitoring of model predictions against ground truth, automated retraining pipelines that incorporate new data, and clear escalation paths when model confidence falls below acceptable thresholds. The goal isn’t perfect prediction but rather measurably better performance than manual processes while handling uncertainty.
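A minimal sketch of these two safeguards, rolling accuracy tracking against ground truth and confidence-based escalation, might look as follows. The class and function names, window sizes, thresholds and labels are hypothetical; a production pipeline would feed the retraining signal into whatever MLOps tooling the organisation already uses.

```python
from collections import deque
from typing import Deque, Optional


class PredictionMonitor:
    """Tracks rolling accuracy of a deployed model against ground truth."""

    def __init__(self, window: int = 200, min_accuracy: float = 0.9,
                 min_samples: int = 50) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)
        self.min_accuracy = min_accuracy
        self.min_samples = min_samples

    def record(self, predicted: str, actual: str) -> None:
        """Store whether one prediction matched the verified outcome."""
        self.outcomes.append(predicted == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """True once enough samples exist and accuracy is below the floor."""
        if len(self.outcomes) < self.min_samples:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.min_accuracy


def route(label: str, confidence: float, min_confidence: float = 0.8) -> str:
    """Act automatically on confident predictions; escalate the rest."""
    if confidence >= min_confidence:
        return f"auto:{label}"
    return f"review:{label}"


monitor = PredictionMonitor(window=10, min_accuracy=0.8, min_samples=5)
for _ in range(6):
    monitor.record("fresh", "fresh")
healthy = monitor.needs_retraining()  # 6 of 6 correct so far

for _ in range(4):
    monitor.record("fresh", "spoiled")
drifted = monitor.needs_retraining()  # 6 of 10 correct in the window

print(healthy, drifted, route("spoiled", confidence=0.55))
```

The minimum sample count prevents a handful of early mistakes from triggering retraining, and the confidence gate keeps staff in the loop for exactly the cases where the model is least sure.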

Change management often presents greater challenges than technology implementation. Store associates and logistics staff require training not just on system operation but on why edge AI helps them perform their jobs more effectively. Transparency about what systems measure and how data gets used builds trust essential for adoption. Efficiency gains don’t simply mean reduced hours for frontline workers but rather an opportunity to focus on higher-value activities that machines cannot perform.

Open source tools: The foundation of edge AI innovation

The rapid maturation of edge AI owes much to thriving open source communities that have collectively invested millions of developer hours in creating production-grade tools accessible to organisations of all sizes. Unlike proprietary platforms that create vendor lock-in and charge premium prices for essential capabilities, open source tools provide transparency, flexibility, and freedom to innovate without constraints.

Table 2 catalogues the most impactful open source tools for edge AI deployments, organised by architectural function and annotated with practical guidance on selection criteria.

| Category | Tool | Best for | Learning curve | Community strength | Enterprise adoption |
| --- | --- | --- | --- | --- | --- |
| Edge framework | EdgeX Foundry | Enterprise-scale deployments requiring vendor-neutral device integration and microservices architecture | Medium: requires understanding of microservices, containers, and IoT protocols | Strong: Linux Foundation project with contributions from Intel, Dell, Samsung, and 3000+ developers | High: production deployments across manufacturing, energy, and smart cities |
|  | Home Assistant | Smart building automation, residential IoT, and rapid prototyping with extensive device support | Low: visual configuration, large pre-built integration library, active community support | Very strong: one of the largest open source IoT communities with 2000+ integrations | Medium: primarily small to medium deployments, growing enterprise interest |
|  | Open Horizon | Autonomous edge computing with self-managing nodes and model distribution at scale | Medium: IBM architecture requires a learning curve, but documentation is extensive | Medium: IBM-led with growing external contributions | Medium: primarily IBM customers and partners |
| Communication | Eclipse Mosquitto | MQTT messaging at any deployment scale, from single devices to million-message-per-second brokers | Low: simple installation and configuration, excellent documentation | Very strong: Eclipse Foundation project, de facto standard for MQTT | Very high: production standard across all industries |
|  | ChirpStack | Private LoRaWAN networks for long-range, low-power connectivity | Medium: requires understanding of LoRaWAN protocol and RF considerations | Strong: active development, comprehensive documentation, commercial support available | Growing: primarily industrial IoT and smart city deployments |
|  | CoAP libraries | Ultra-lightweight UDP-based communication for battery-powered devices | Medium: less mature tooling than MQTT, requires deeper networking knowledge | Medium: standardised but less tooling than MQTT | Medium: specific to constrained device scenarios |
| AI/ML | TensorFlow Lite | Edge inference for any device class from smartphones to embedded Linux systems | Medium: requires ML background and model optimisation knowledge | Very strong: Google-backed with massive community | Very high: industry standard for edge AI |
|  | TensorFlow Lite Micro | AI on microcontrollers with <50KB memory footprint | High: requires embedded systems expertise and C++ proficiency | Strong: growing community focused on TinyML applications | Growing: emerging standard for MCU-class AI |
|  | PyTorch Mobile | Alternative to TensorFlow Lite with different optimisation trade-offs | Medium: similar to TensorFlow Lite with PyTorch-specific patterns | Very strong: Facebook-backed with academic and industry adoption | High: particularly in research and computer vision |
|  | Edge Impulse | End-to-end platform for developing and deploying TinyML applications | Low: visual development environment, extensive tutorials | Strong: commercial with active community | Growing: particularly in prototyping and small-scale deployments |
| Analytics | Apache Kafka | Distributed streaming platform for high-throughput event processing | High: complex distributed system requiring expertise | Very strong: Apache project with commercial support from Confluent | Very high: enterprise standard for event streaming |
|  | Apache Spark | Large-scale data processing and machine learning on streaming data | High: requires distributed computing knowledge and JVM expertise | Very strong: Apache project with commercial support from Databricks | Very high: standard for Big Data analytics |
|  | InfluxDB | Purpose-built time-series database optimised for IoT metrics | Low: straightforward installation and query language | Strong: InfluxData-backed open source with active community | High: standard for time-series workloads |
|  | Grafana | Visualisation and alerting for operational metrics and time-series data | Low: visual dashboard builder, extensive pre-built dashboards | Very strong: Grafana Labs-backed with massive adoption | Very high: de facto standard for observability |
| Orchestration | K3s | Lightweight Kubernetes for edge computing with minimal resource footprint | Medium: requires Kubernetes knowledge but simpler than full K8s | Strong: SUSE/Rancher-backed, growing rapidly | High: becoming standard for edge orchestration |
|  | Docker Compose | Simpler container orchestration for single-node deployments | Low: straightforward YAML configuration | Very strong: part of Docker ecosystem | High: ubiquitous for development and simple deployments |
|  | Nomad | Flexible orchestrator supporting containers, VMs, and standalone applications | Medium: HashiCorp architecture with learning curve | Medium: HashiCorp-backed with commercial support | Medium: growing in edge scenarios |
| Workflow | Node-RED | Visual programming for IoT workflows and rapid prototyping | Low: drag-and-drop interface, minimal coding required | Very strong: IBM-originated, now OpenJS Foundation with 3000+ community nodes | High: particularly for prototyping and operational workflows |
|  | Apache NiFi | Enterprise data integration and routing with visual design | Medium: requires understanding data flow patterns | Strong: Apache project with commercial support | High: standard for data integration pipelines |
| Security | WireGuard | Modern, lightweight VPN for secure edge-to-cloud connectivity | Low: simple configuration, built into Linux kernel | Strong: rapidly growing adoption | Growing: becoming preferred over older VPN technologies |
|  | Suricata | Network intrusion detection and prevention system | High: requires security expertise and rule development | Strong: OISF-maintained with commercial support | High: standard for network security monitoring |
|  | CryptoAuthLib | Hardware-based cryptographic operations for secure device identity | High: requires embedded systems and cryptography expertise | Medium: Microchip-backed | Growing: increasingly important for device security |
| Digital twin | Eclipse Ditto | Digital twin framework with state synchronisation and API gateway | Medium: requires understanding digital twin concepts | Medium: Eclipse Foundation project with growing adoption | Growing: emerging standard for digital twin implementations |
|  | Azure Digital Twins OSS tools | Open source tooling for digital twin development and integration | Medium: Microsoft ecosystem familiarity helpful | Medium: Microsoft-backed with community contributions | Medium: primarily Azure customers but growing |

Table 2: The best open source tools for edge AI deployments

Open source tools provide more than just cost savings through the elimination of licensing fees. The transparency inherent in open source enables security auditing impossible with proprietary black boxes, allowing organisations to verify exactly what code executes on their infrastructure and identify potential vulnerabilities before deployment. Customisation becomes practical when source code provides the starting point rather than opaque APIs with limited extension points. Organisations can modify behaviour, add integration points, and optimise performance for specific requirements without waiting for vendors to prioritise their unique needs.

Vendor lock-in elimination provides strategic flexibility as technology and requirements evolve. Organisations can migrate between tools, replace components, or adopt new technologies without renegotiating contracts. The ability to fork projects if development direction diverges from organisational needs prevents abandonment or adverse licensing changes.

Transforming edge AI concepts into production reality requires methodical progression through clearly defined phases, each building capabilities and confidence for subsequent stages.

The trajectory towards edge-native architectures isn’t a matter of ‘if’ but ‘when’. Organisations that recognise this trend and build capabilities early will capture advantages in operational efficiency, customer experience, and innovation velocity. The edge AI revolution is here and accessible to anyone with vision and commitment. The only question is whether you will build the future or be disrupted by it. The technology is ready. The tools are available. The opportunity is yours to seize.

Disclaimer: The opinions expressed in this article are the author’s and do not reflect the views of the organisation he works in.