Docker is an open source platform that lets development and operations teams build, ship and run distributed applications efficiently. It packages an application together with its dependencies in a container that can run on any Linux server, providing an additional layer of abstraction and automation.

The idea behind containers is to isolate services from the physical host, limit the resources they can use, and give each one a private view of the operating system with its own file system structure, process ID space and network interfaces. Multiple containers can share a single kernel, but each container can be restricted to a defined amount of I/O, CPU and memory.
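As a minimal sketch of such limits, assuming a working Docker installation, the docker run flags --memory and --cpus cap what a container may consume. The alpine image, the limit values and the DOCKER variable (a hypothetical convenience for previewing the command with DOCKER=echo) are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch only: image name and limit values are assumptions for illustration.
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo to preview the command
IMAGE="alpine"
MEM_LIMIT="256m"             # at most 256 MB of RAM
CPU_LIMIT="0.5"              # at most half of one CPU

# Run a command in a throwaway container with memory and CPU caps.
run_limited() {
    "$DOCKER" run --rm --memory "$MEM_LIMIT" --cpus "$CPU_LIMIT" "$IMAGE" "$@"
}

if command -v "$DOCKER" >/dev/null 2>&1; then
    run_limited echo "hello from a limited container" \
        || echo "docker run failed (is the daemon running?)" >&2
else
    echo "docker not found; nothing was run" >&2
fi
```

Running the script with DOCKER=echo prints the docker arguments instead of starting a container, which is a safe way to check the flags first.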

This makes applications more portable and flexible: they can run in private and public clouds as well as on bare metal. Docker consists of:

- Docker Engine: This is a portable, lightweight application runtime and packaging tool.

- Docker Hub: This is a cloud service for application sharing and workflow automation.

Docker uses resource isolation features of the kernel such as kernel namespaces and cgroups to allow independent containers to run within a single instance, thereby avoiding the overhead of starting virtual machines.

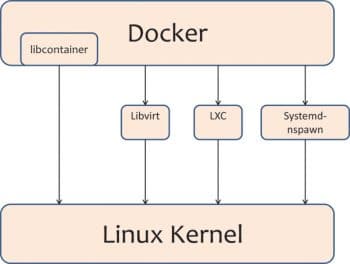

The Linux kernel's namespaces isolate an application's view of the operating environment, which includes the network, process trees, mounted file systems and user IDs. The kernel's cgroups limit the resources an application can use, including network, memory, CPU and block I/O. In some versions of Docker, the libcontainer library directly uses the virtualisation facilities provided by the kernel. Docker can also use abstracted virtualisation interfaces via LXC, systemd-nspawn and libvirt.
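Both kernel features can be seen on any Linux system, even without Docker. The following sketch assumes a Linux host with /proc mounted and lists the namespaces and cgroups of the current shell; the function names are hypothetical.

```shell
#!/bin/sh
# Linux only: /proc exposes the namespaces and cgroups of every process.

# Print the namespace types (net, pid, mnt, user, ...) of a process.
# Defaults to the current shell when no PID is given.
list_namespaces() {
    ls "/proc/${1:-$$}/ns" 2>/dev/null
}

# Print the cgroup membership of a process.
show_cgroups() {
    cat "/proc/${1:-$$}/cgroup" 2>/dev/null
}

echo "Namespaces of this shell (PID $$):"
list_namespaces || echo "  /proc/$$/ns is not available on this system"
echo "Cgroups of this shell:"
show_cgroups || echo "  /proc/$$/cgroup is not available on this system"
```

Passing the PID of a process that runs inside a container (root privileges are usually needed) should show namespace entries that differ from those of the host shell.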

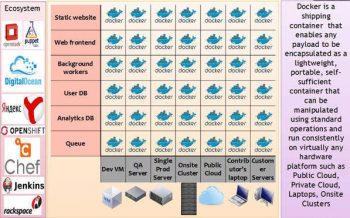

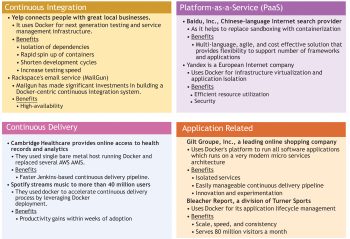

Docker enables applications to be assembled from components and eliminates the friction between development, QA and production environments. With Docker, we can create and manage containers, and build highly distributed systems in which multiple applications, worker tasks and other processes run side by side. Essentially, it provides a Platform as a Service (PaaS) style of deployment and, hence, enables IT to ship faster and run the same application unchanged in the data centre, on VMs, in the cloud and on laptops.

Docker can be integrated with various infrastructure automation or configuration management tools (such as Chef, CFEngine, Puppet and Salt), with continuous integration tools, with cloud service providers such as Amazon Web Services, with private cloud products like OpenStack Nova, and so on.

Docker installation

Docker can be installed on various operating systems and platforms such as Microsoft Windows, CentOS, Fedora, SUSE, Amazon EC2, Ubuntu, and so on. We will cover installation on three of them: Microsoft Windows, CentOS and Amazon EC2.

On Windows

Since the Docker engine relies on Linux kernel features, you need a virtual machine to run it on Windows. A Windows Docker client then controls the virtualised Docker engine to build, run and manage Docker containers.

Docker has been tested on Windows 7.1 and 8, and may also run on other versions. The processor needs to support hardware virtualisation. To make the process easier, an application called Boot2Docker has been designed that installs the virtual machine and runs Docker.

1. First, download the latest version of Docker for the Windows operating system.

2. Follow the steps to complete the installation, which will result in the installation of Boot2Docker, Boot2Docker Linux ISO, VirtualBox, Boot2Docker management tool and MSYS-git.

3. After this, run the Boot2Docker Start shell script, or launch it from Program Files > Boot2Docker. The Start script will ask you to enter an SSH key passphrase; you can also simply press [Enter] to leave it empty. If required, the script will initialise a new virtual machine and start it, and then connect to a shell session in that virtual machine.

To upgrade, download the latest version of Docker for the Windows operating system, and run the installer to update the Boot2Docker management tool. To upgrade the existing virtual machine, open a terminal and run the following commands:

    boot2docker stop
    boot2docker download
    boot2docker start

On CentOS

Docker is available by default in the CentOS-Extras repository on CentOS 7. Hence, to install it, run sudo yum install docker. On CentOS 6.5, the Docker package is part of the Extra Packages for Enterprise Linux (EPEL) repository.
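The CentOS 7 installation can be sketched as a small script. It assumes a user with sudo rights; starting the daemon with systemctl and checking it with docker version are additions not covered above, and the RUN variable is a hypothetical dry-run switch, so by default the commands are only printed.

```shell
#!/bin/sh
# Dry run by default: each command is printed, not executed.
# Set RUN="" in the environment to execute for real (needs sudo rights).
RUN="${RUN-echo}"

install_docker_centos7() {
    $RUN sudo yum install -y docker     # package from CentOS-Extras
    $RUN sudo systemctl start docker    # assumption: systemd manages the daemon
    $RUN sudo docker version            # confirm client and daemon respond
}

install_docker_centos7
```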

On Amazon EC2

Create an AWS account. To install Docker on AWS EC2, use either Amazon Linux, which includes the Docker packages in its repository, or a standard Ubuntu installation.

Choose an Amazon-provided AMI and launch the Create Instance Wizard from the AWS Console. In the Quick Start menu, select the Amazon-provided AMI, use the default t2.micro instance, and go through Configure Instance Details, keeping the default values where they are suitable. Set up the Security Group to allow SSH. Once the Amazon Linux instance is running, connect to it over SSH: ssh -i <path to your private key> ec2-user@<your public IP address>. After connecting to the instance, type sudo yum install -y docker; sudo service docker start to install and start Docker.
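The SSH and installation steps can also be combined into one remote command. In this sketch the key path and IP address are hypothetical placeholders, RUN is a hypothetical dry-run switch (commands are printed by default), and && is used instead of ; so that the service is only started if the installation succeeds.

```shell
#!/bin/sh
# Dry run by default; set RUN="" in the environment to execute for real.
RUN="${RUN-echo}"
# Hypothetical placeholders: replace with your own key file and address.
KEY_PATH="${KEY_PATH:-/path/to/private-key.pem}"
PUBLIC_IP="${PUBLIC_IP:-203.0.113.10}"   # documentation-only example address

# Log in as ec2-user, then install and start Docker on the instance.
install_docker_ec2() {
    $RUN ssh -i "$KEY_PATH" "ec2-user@$PUBLIC_IP" \
        "sudo yum install -y docker && sudo service docker start"
}

install_docker_ec2
```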

Why Docker is considered hot in IT

Docker is widely used by developer teams, systems administrators and QA teams in different environments such as development, testing, pre-production and production, for various reasons. Some of these are:

- Docker can run on most hosts regardless of the host's kernel version, as long as the kernel provides the features Docker relies on.

- It can run on the host or in a container.

- Each container has its own process space and network interface, and can run processes as root.

- Containers share the kernel with the host.

- Docker containers, and their workflow, help developers, sysadmins, QA teams and release engineers to work together to get code into production.

- It is easy to create new containers, which enables rapid iteration of applications and increases the visibility of changes.

- Containers are lightweight and fast; they can launch in under a second, which shortens the development, testing and production phases.

- It can run almost everywhere: on desktops, virtual machines, physical servers and data centres, and in cloud deployment models such as a private or public cloud.

- A container can be moved easily from one application environment to another.

- As containers are light in weight, scaling is very easy, i.e., as per needs, they can be launched anytime and can be shut down when not in use.

- Docker containers do not require a hypervisor, so we can get more value out of every server and can potentially reduce the expenditure on equipment and licences.

- As Docker's speed is high, we can make small changes as and when required; moreover, small changes reduce risks and enable more uptime.
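The point about launching containers on demand and shutting them down when idle can be illustrated with a short sketch. It assumes a working Docker installation; the container name, the alpine image and the DOCKER variable (set DOCKER=echo to preview the commands) are hypothetical choices.

```shell
#!/bin/sh
# Sketch only: container name and image are assumptions for illustration.
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo to preview the commands
NAME="demo-worker"
IMAGE="alpine"

start_worker() {
    # -d runs the container in the background; sleep keeps it alive briefly.
    "$DOCKER" run -d --name "$NAME" "$IMAGE" sleep 300
}

stop_worker() {
    # Force-remove the container: it is killed and deleted in one step.
    "$DOCKER" rm -f "$NAME"
}

if command -v "$DOCKER" >/dev/null 2>&1; then
    start_worker && "$DOCKER" ps --filter "name=$NAME" && stop_worker \
        || echo "docker command failed (is the daemon running?)" >&2
else
    echo "docker not found; nothing was run" >&2
fi
```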

Comparison between Docker and virtual machines (VMs)

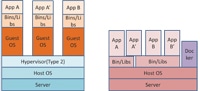

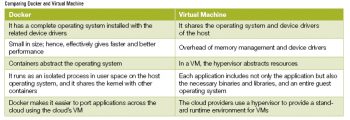

The significant difference between containers such as Docker and VMs is that a hypervisor abstracts an entire device, while containers abstract only the operating system kernel. One thing hypervisors can do that containers can't is run different operating systems or kernels. So, for example, you can use Amazon's public cloud or the VMware private cloud to run instances of both Windows Server 2012 and SUSE Linux Enterprise Server in parallel. With Docker, all containers must use the same operating system and kernel as the host. On the other hand, if all you want to do is get the most server application instances running on the least amount of hardware, you don't need to worry about running VMs with multiple operating systems. If many copies of the same application are what you want, then it's better to consider containers.

Containers are smaller than VMs, start much faster and perform better. However, this comes at the expense of less isolation and greater compatibility requirements, because containers share the host's kernel. Virtual machines have a full OS with their own device drivers, memory management, etc. Containers share the host's OS and are therefore lighter in weight.
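Kernel sharing is easy to observe. The sketch below assumes a Linux host with Docker installed and compares the kernel release reported by the host with the one reported inside a container; the alpine image and the DOCKER variable (set DOCKER=echo to preview the command) are hypothetical choices.

```shell
#!/bin/sh
# Sketch only: the image name is an assumption for illustration.
DOCKER="${DOCKER:-docker}"   # set DOCKER=echo to preview the command
IMAGE="alpine"

# Kernel release as seen by the host.
host_kernel() {
    uname -r
}

# Kernel release as seen from inside a throwaway container.
container_kernel() {
    "$DOCKER" run --rm "$IMAGE" uname -r
}

echo "host:      $(host_kernel)"
if command -v "$DOCKER" >/dev/null 2>&1; then
    echo "container: $(container_kernel 2>/dev/null || echo unavailable)"
else
    echo "docker not found; container check skipped" >&2
fi
```

On a Linux host both lines should show the same release, because the container has no kernel of its own. Where Docker runs inside a virtual machine, as with Boot2Docker on Windows, the container reports the kernel of that virtual machine instead.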
