In this article we’ll set up a ready-to-deploy, WordPress-compatible Varnish service as our HTTP reverse caching proxy, and have it cache the desktop and mobile themes separately.

If you remember, our original WordPress recipe had a place for Varnish in the mix. But we had to skip it for the time being when we initially deployed the website on a LEMP (Linux, Nginx, MySQL, PHP) stack, because we couldn’t iron out two issues back then:

- have Varnish maintain a separate cache for WPTouch (our mobile theme), and

- have an automated way for Varnish to purge Web pages from its cache whenever we edited or updated posts.

Since then, we have deployed Varnish 3.0.2 on our CentOS 6.2 server (which has been live for over a month now). [Yes, I know I said stuff about how I dislike the Red Hat way, but then, I’m fickle ;-) ]

Our final production Varnish config had to have a security check to lock down certain WordPress directories from random public access over the Web. We rolled that out last weekend; I’ll share the recipe for this small tweak in a later post (hopefully by the end of the week).

Alert: This is by no means a guide, nor a definitive recipe for a WordPress-compatible Varnish config file. Just like the two previous posts, it simply documents how you too can deploy the Varnish HTTP accelerator.

Needless to say, it’s been fun to play with Varnish… although if you ask me about the finer details of how it works, I won’t be the right person for the job. I just google, stitch together a few publicly available recipes, test how the final output performs and voilà, I’m happy.

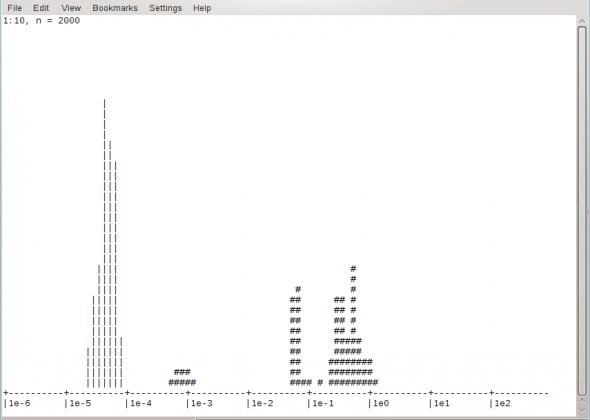

Figure 1 gives a bird’s-eye view of how well (or badly) Varnish is serving us in production.

A pipe (|) marks a request served from the Varnish cache, while a hash (#) marks one fetched from the backend (the LEMP stack in our case). On the horizontal axis, the closer a pipe or hash is to the left, the less time the request took to serve.

What you talkin’ about, Willis?

This is for the uninitiated; even I was one a few months back. Back then, my idea of caching was using WordPress plugins. It was while troubleshooting an awkward issue with W3 Total Cache that I chanced upon mentions of Varnish.

The thing with WP caching plugins like W3 Total Cache or WP Super Cache is that the request still hits the Web server; these plugins just save on database (MySQL) queries and PHP processing while serving posts. That’s good enough under most circumstances. But what if I want a cached post to be served without troubling the Web server at all? This is where Varnish comes in. They like to call it an HTTP accelerator that stores cache either in memory (

The business of caching

Implementing caching on a dynamic website is an odd idea. Here, by dynamic I mean poor man’s dynamic: a website that gets updated every now and then, with a commenting facility for posts.

So, before implementing a cache, make sure you have a few things figured out:

- Do you want to purge (expire) the cache every time a new comment comes in? If not, a super hit (or controversial) post might attract loads of comments, yet people can’t hold a conversation because they can’t read each other’s comments (the same stale copy of the post is served from cache to everyone).

- How will you handle updates to the home page?

In our case, at least, comments are handled by outsourcing the facility to a third party. We use Livefyre; a lot of folks out there use Disqus. So that part is solved, as comments are fetched by calling Livefyre (or Disqus) externally, rather than from the default WordPress comment system internally.

The home page can’t be outsourced to a third party ;-) So, every time new content is posted, at least the home page has to be purged so that the next request doesn’t get a stale copy. Then again, a super hit post won’t drive traffic to your home page but to the specific post, so under the circumstances you’re not losing out on anything. Yet your regular visitors who land on the home page would probably be the last ones to reach the super hit post, because a straightforward way to navigate to it from there is simply missing.

Again, when a post is edited with updated information, its cached page must be purged so that the updates are immediately available. Ideally, there should be an automated way to purge the specific post and the home page whenever content is edited or updated.

Of course, in WordPress the same content is also linked from other sections: categories, tags, archives, etc. Ideally, all of these need to be purged too, but it’s not a deal-breaker if they aren’t purged automatically and immediately. Anyway, this automation is outside the scope of Varnish itself. A Varnish configuration can honour any URL purge request as long as it comes from an authorised source. The plugin or script that connects WordPress’s Publish/Update button to Varnish needs to be smart enough to send Varnish a purge request for every affected URL.
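To make that job concrete, here’s a minimal shell sketch of what such a purge script might do, assuming pretty permalinks where category and tag archives live at /category/<slug>/ and /tag/<slug>/. The names affected_urls and purge_all are hypothetical, not part of any plugin, and the PURGE requests only succeed when sent from an IP address listed in the Varnish purge ACL.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of WordPress or any purge plugin.

# Print every URL affected by an edited post: the home page, the post itself,
# and any archive pages passed as extra arguments (e.g. category/linux).
# Assumes pretty permalinks; adjust the paths to your own URL structure.
affected_urls() {
    local site="${1%/}" post_path="$2" term
    shift 2
    printf '%s/\n' "$site"                  # home page
    printf '%s%s\n' "$site" "$post_path"    # the post itself
    for term in "$@"; do                    # category/tag/archive pages
        printf '%s/%s/\n' "$site" "$term"
    done
}

# Read URLs on stdin, send Varnish a PURGE for each one and print the
# HTTP status code it answered with next to the URL.
purge_all() {
    local url code
    while IFS= read -r url; do
        code=$(curl -s -o /dev/null -w '%{http_code}' -X PURGE "$url")
        printf '%s %s\n' "$code" "$url"
    done
}
```

Usage: affected_urls http://www.example.com /2012/03/some-post/ category/linux tag/varnish | purge_all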

Fetch the latest version

Most popular server distros have Varnish in their repositories. However, as of this writing, most of them still carry the older 2.1 version. Thankfully, the Varnish project maintains its own repositories/packages for CentOS/Red Hat, Ubuntu, Debian and FreeBSD.

So, on our CentOS server, as the documentation states, we run:

```shell
rpm --nosignature -i http://repo.varnish-cache.org/redhat/varnish-3.0/el5/noarch/varnish-release-3.0-1.noarch.rpm
```

…and then:

```shell
yum install varnish
```

If you’re on Debian/Ubuntu/FreeBSD, check out the documentation and you’ll be all set.

Varnish configuration starters

There are two plain-text files that drive the whole logic:

- /etc/sysconfig/varnish (on Red Hat/CentOS/Fedora systems) or /etc/default/varnish (under Debian and Ubuntu); and

- /etc/varnish/default.vcl

Note: Both files are nicely commented to get you started. However, before making any changes, I highly recommend saving a backup, just in case you want to start all over again.
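A minimal sketch of that backup step, assuming you’re happy with a pristine copy named <file>.orig next to each file (both the name and the backup_once helper are just a convention chosen here, not something Varnish expects):

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep a pristine copy of a config file as <file>.orig.
# The copy is made only once, so re-running it never overwrites the original
# with an already-edited version.
backup_once() {
    local file="$1"
    if [ ! -e "$file.orig" ]; then
        # -p keeps mode and timestamps (and ownership, when run as root)
        cp -p -- "$file" "$file.orig"
    fi
}
```

Usage (as root): backup_once /etc/sysconfig/varnish; backup_once /etc/varnish/default.vcl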

Let’s start with the first file, which passes parameters to the varnishd daemon when it starts:

```shell
# Configuration file for varnish
#
# /etc/init.d/varnish expects the variable $DAEMON_OPTS to be set from this
# shell script fragment.
#

# Maximum number of open files (for ulimit -n)
NFILES=131072

# Locked shared memory (for ulimit -l)
# Default log size is 82MB + header
MEMLOCK=82000

# Maximum size of corefile (for ulimit -c). Default in Fedora is 0
# DAEMON_COREFILE_LIMIT="unlimited"

# Set this to 1 to make init script try reload to switch vcl without restart.
# To make this work, you need to set the following variables
# explicit: VARNISH_VCL_CONF, VARNISH_ADMIN_LISTEN_ADDRESS,
# VARNISH_ADMIN_LISTEN_PORT, VARNISH_SECRET_FILE, or in short,
# use Alternative 3, Advanced configuration, below
RELOAD_VCL=1

# This file contains 4 alternatives, please use only one.

## Alternative 1, Minimal configuration, no VCL
#
# Listen on port 6081, administration on localhost:6082, and forward to
# content server on localhost:8080. Use a fixed-size cache file.
#
#DAEMON_OPTS="-a :6081 \
#             -T localhost:6082 \
#             -b localhost:8080 \
#             -u varnish -g varnish \
#             -s file,/var/lib/varnish/varnish_storage.bin,1G"

## Alternative 2, Configuration with VCL
#
# Listen on port 6081, administration on localhost:6082, and forward to
# one content server selected by the vcl file, based on the request. Use a
# fixed-size cache file.
#
#DAEMON_OPTS="-a :6081 \
#             -T localhost:6082 \
#             -f /etc/varnish/default.vcl \
#             -u varnish -g varnish \
#             -S /etc/varnish/secret \
#             -s file,/var/lib/varnish/varnish_storage.bin,1G"

## Alternative 3, Advanced configuration
#
# See varnishd(1) for more information.
#
# # Main configuration file. You probably want to change it :)
VARNISH_VCL_CONF=/etc/varnish/default.vcl
#
# # Default address and port to bind to
# # Blank address means all IPv4 and IPv6 interfaces, otherwise specify
# # a host name, an IPv4 dotted quad, or an IPv6 address in brackets.
VARNISH_LISTEN_ADDRESS=192.168.2.5
VARNISH_LISTEN_PORT=80
#
# # Telnet admin interface listen address and port
VARNISH_ADMIN_LISTEN_ADDRESS=127.0.0.1
VARNISH_ADMIN_LISTEN_PORT=6082
#
# # Shared secret file for admin interface
VARNISH_SECRET_FILE=/etc/varnish/secret
#
# # The minimum number of worker threads to start
VARNISH_MIN_THREADS=1
#
# # The Maximum number of worker threads to start
VARNISH_MAX_THREADS=1000
#
# # Idle timeout for worker threads
VARNISH_THREAD_TIMEOUT=120
#
# # Cache file location
#VARNISH_STORAGE_FILE=/var/lib/varnish/varnish_storage.bin
#
# # Cache file size: in bytes, optionally using k / M / G / T suffix,
# # or in percentage of available disk space using the % suffix.
VARNISH_STORAGE_SIZE=1G
#
# # Backend storage specification
VARNISH_STORAGE="malloc,${VARNISH_STORAGE_SIZE}"
#
# # Default TTL used when the backend does not specify one
VARNISH_TTL=120
#
# # DAEMON_OPTS is used by the init script. If you add or remove options, make
# # sure you update this section, too.
DAEMON_OPTS="-a ${VARNISH_LISTEN_ADDRESS}:${VARNISH_LISTEN_PORT} \
             -f ${VARNISH_VCL_CONF} \
             -T ${VARNISH_ADMIN_LISTEN_ADDRESS}:${VARNISH_ADMIN_LISTEN_PORT} \
             -t ${VARNISH_TTL} \
             -w ${VARNISH_MIN_THREADS},${VARNISH_MAX_THREADS},${VARNISH_THREAD_TIMEOUT} \
             -u varnish -g varnish \
             -S ${VARNISH_SECRET_FILE} \
             -s ${VARNISH_STORAGE}"
#

## Alternative 4, Do It Yourself. See varnishd(1) for more information.
#
# DAEMON_OPTS=""
```

A few lines in that file deserve a closer look if you want to get started quickly. Of special note are the following:

- VARNISH_LISTEN_ADDRESS: In our file this has an internal IP address because our Web server is behind a NAT. Generally, with off-the-shelf VPS and cloud offerings, you should put the public IP of the server here.

- VARNISH_LISTEN_PORT: I’m making it listen on port 80 because that’s where HTTP Web traffic hits by default. Unless you want your users to hit a different port, make this 80. You might, however, want to test it out on a different port before binding the daemon to 80. And when you do bind it to 80, make sure you move your Web server to some other port number.

- VARNISH_STORAGE_FILE: This one defines the location of the file on disk where Varnish should store its cache. Since I’d rather store the cache in memory (memory reads are faster than disk reads, remember?), I commented the line out. However, if your server doesn’t have sufficient free RAM to spare, you can use this directive.

- VARNISH_STORAGE_SIZE: I chose to give it 1GB. Your mileage may vary. If it’s a personal blog, you can get away with 128M nicely enough.

- VARNISH_STORAGE: By default, this line looks like:

  VARNISH_STORAGE="file,${VARNISH_STORAGE_FILE},${VARNISH_STORAGE_SIZE}"

  Leave it like that if you want to store your cache on disk. Otherwise, change it as I have (malloc,${VARNISH_STORAGE_SIZE}) if you want the cache in memory.

- Finally, DAEMON_OPTS is what the varnishd daemon picks up when you start the Varnish service; it is built from the variables we defined in the earlier lines.

Of course, there are many more possibilities in this file itself, but they aren’t required just to get started.
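If you want to double-check what those variables expand to before (re)starting the service, you can source the file in a subshell and print DAEMON_OPTS, much as the init script sources it. The show_daemon_opts helper below is a hypothetical sketch, not part of the Varnish package:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the options a sysconfig-style file hands to varnishd.
# The file is sourced in a subshell, so its variables don't leak into your shell,
# and the runs of whitespace left by the backslash-continued lines are squeezed.
show_daemon_opts() {
    ( . "$1"; printf '%s\n' "$DAEMON_OPTS" ) | tr -s ' \t\n' ' ' | sed 's/ $//'
}
```

For the file above, show_daemon_opts /etc/sysconfig/varnish prints -a 192.168.2.5:80 -f /etc/varnish/default.vcl -T 127.0.0.1:6082 -t 120 -w 1,1000,120 -u varnish -g varnish -S /etc/varnish/secret -s malloc,1G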

The real deal

The next, and most important, piece is the /etc/varnish/default.vcl file. This is where we define the logic: what Varnish should do when it receives a request, how it should query its cache or the Web server, and finally how to send the response back to the user.

Here’s our example file. Feel free to deploy it straight away if you are running WordPress (as always, save a copy of the original file):

```vcl
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

acl purge {
    "127.0.0.1";
    "192.168.2.5";
    "192.168.2.1";
}

sub vcl_recv {
    # First call our identify_device subroutine to detect the device
    call identify_device;

    if (req.request == "PURGE") {
        if (!client.ip ~ purge) {
            error 405 "Not allowed.";
        }
        return(lookup);
    }

    if (req.http.Accept-Encoding) {
        #revisit this list
        if (req.url ~ "\.(gif|jpg|jpeg|swf|flv|mp3|mp4|pdf|ico|png|gz|tgz|bz2)(\?.*|)$") {
            remove req.http.Accept-Encoding;
        } elsif (req.http.Accept-Encoding ~ "gzip") {
            set req.http.Accept-Encoding = "gzip";
        } elsif (req.http.Accept-Encoding ~ "deflate") {
            set req.http.Accept-Encoding = "deflate";
        } else {
            remove req.http.Accept-Encoding;
        }
    }

    if (req.url ~ "\.(gif|jpg|jpeg|swf|css|js|flv|mp3|mp4|pdf|ico|png)(\?.*|)$") {
        unset req.http.cookie;
        set req.url = regsub(req.url, "\?.*$", "");
    }

    if (req.url ~ "\?(utm_(campaign|medium|source|term)|adParams|client|cx|eid|fbid|feed|ref(id|src)?|v(er|iew))=") {
        set req.url = regsub(req.url, "\?.*$", "");
    }

    if (req.http.cookie) {
        if (req.http.cookie ~ "(wordpress_|wp-settings-)") {
            return(pass);
        } else {
            unset req.http.cookie;
        }
    }
}

sub identify_device {
    # Default to thinking it's a PC
    set req.http.X-Device = "pc";

    if (req.http.User-Agent ~ "iPad" ) {
        # It says its a iPad - so let's give them the tablet-site
        set req.http.X-Device = "mobile-tablet";
    }
    elsif (req.http.User-Agent ~ "iP(hone|od)" || req.http.User-Agent ~ "Android" ) {
        # It says its a iPhone, iPod or Android - so let's give them the touch-site..
        set req.http.X-Device = "mobile-smart";
    }
    elsif (req.http.User-Agent ~ "SymbianOS" || req.http.User-Agent ~ "^BlackBerry" || req.http.User-Agent ~ "^SonyEricsson" || req.http.User-Agent ~ "^Nokia" || req.http.User-Agent ~ "^SAMSUNG" || req.http.User-Agent ~ "^LG") {
        # Some other sort of mobile
        set req.http.X-Device = "mobile-other";
    }
}

sub vcl_hash {
    # Your existing hash-routine here..
    # And then add the device to the hash (if its a mobile device)
    if (req.http.X-Device ~ "^mobile") {
        hash_data(req.http.X-Device);
    }
}

sub vcl_fetch {
    if (req.url ~ "wp-(login|admin)" || req.url ~ "preview=true" || req.url ~ "xmlrpc.php") {
        return (hit_for_pass);
    }

    if ( (!(req.url ~ "(wp-(login|admin)|login)")) || (req.request == "GET") ) {
        unset beresp.http.set-cookie;
        set beresp.ttl = 1h;
    }

    if (req.url ~ "\.(gif|jpg|jpeg|swf|css|js|flv|mp3|mp4|pdf|ico|png)(\?.*|)$") {
        set beresp.ttl = 365d;
    }
}

sub vcl_deliver {
    # multi-server webfarm? set a variable here so you can check
    # the headers to see which frontend served the request
    # set resp.http.X-Server = "server-01";
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT";
    } else {
        set resp.http.X-Cache = "MISS";
    }
}

sub vcl_hit {
    if (req.request == "PURGE") {
        set obj.ttl = 0s;
        error 200 "OK";
    }
}

sub vcl_miss {
    if (req.request == "PURGE") {
        error 404 "Not cached";
    }
}
```

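Notice the device-detection bits in the above configuration: the call identify_device at the top of vcl_recv, the identify_device subroutine itself and the vcl_hash section? You are free to chuck these out if you don’t have a separate mobile theme like WPtouch. We do, so that’s our logic to have Varnish maintain a separate cache for all mobile requests.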

In fact, our whole logic is a straight copy-paste job from two resources available online. The Varnish project hosts a VCL configuration example for setups where a separate WordPress plugin takes care of purging content. We’re using Varnish HTTP Purge. You’re good to go with this alone if you don’t need a separate mobile theme.

If you do, add the relevant directives from Mobile Detection with Varnish and Drupal, an excellent guide with neat explanations written with Varnish 2.1 in mind, as we have in the above example. I just needed to change one line in vcl_hash (the hash_data() call) to make it Varnish 3.0 compatible.
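To see which bucket a given User-Agent string lands in before you deploy, the identify_device regexes can be mirrored in a few lines of Bash. This is a test aid only: classify_ua is a hypothetical name, and Bash’s extended regexes merely behave the same as Varnish’s PCRE for these particular simple patterns.

```shell
#!/usr/bin/env bash
# Hypothetical test helper: mirrors the identify_device subroutine's regexes
# (same order, same anchors, case-sensitive) and prints the X-Device value.
classify_ua() {
    local ua="$1"
    local tablet='iPad'
    local smart='iP(hone|od)|Android'
    local other='SymbianOS|^BlackBerry|^SonyEricsson|^Nokia|^SAMSUNG|^LG'
    if [[ $ua =~ $tablet ]]; then
        echo "mobile-tablet"
    elif [[ $ua =~ $smart ]]; then
        echo "mobile-smart"
    elif [[ $ua =~ $other ]]; then
        echo "mobile-other"
    else
        echo "pc"    # the default, as in the VCL
    fi
}
```

For example, classify_ua 'Mozilla/5.0 (BlackBerry; U; BlackBerry 9800; en)' prints pc: the ^BlackBerry anchor only matches at the very start of the string, so a BlackBerry browser whose User-Agent begins with Mozilla/5.0 falls through to the desktop bucket.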

The important directives (or sections) in a VCL file are backend, acl, vcl_recv, vcl_hash, vcl_fetch, vcl_deliver, vcl_hit and vcl_miss. Let’s quickly go through them…

Note: Varnish comes with built-in default VCL logic. If you open the default.vcl file you backed up earlier, you’ll see that logic commented out there. This default logic is, in fact, appended to our custom logic once we (re)start Varnish: whenever one of our subroutines finishes without a return statement, the built-in version of that subroutine runs next.

- backend: Here we define the Web server’s IP address and port number. Since our Web server is on the same machine, we’ve simply put the localhost IP. If yours is on a different server, define its IP and port here.

- acl purge: In this ACL (Access Control List) section we list the IP addresses that are authorised to send PURGE requests. Purge requests from any other IP address get an error back; the logic for that lives in the next section.

- vcl_recv: This is the first section any request received by Varnish passes through. The sequence of logic is very important here (and, in fact, throughout the VCL file), as Varnish reads it from top to bottom: once a block ends in a return() or an error, the rest of the section is skipped and Varnish moves on to the next stage.
  - First, call identify_device runs our custom logic to gauge whether the request has come from a PC or a phone. The request jumps to the identify_device subroutine, which sets the X-Device header, and then comes back here.
  - Next, it checks for a PURGE request and matches the client IP against the purge ACL. If the IP matches, Varnish looks the object up in its cache (return(lookup)) so that vcl_hit or vcl_miss can handle the purge; otherwise it returns a 405 error.
  - If it’s not a PURGE request, it continues down the path that defines logic for different file types and URIs: normalising the Accept-Encoding header, stripping cookies and query strings from static file requests, and dropping tracking query strings such as utm_campaign.
  - Finally comes the cookie logic. By default, Varnish doesn’t save anything in cache if it sees cookies flying in and out of it. So, in this VCL config, cookies are unset for all page requests besides authenticated pages (where we can’t do without them). Besides, we only need caching on the WP frontend, not the backend. When a request carries a cookie, it’s matched against the WordPress login and settings cookies (wordpress_ and wp-settings-); if they match, the request is passed to the backend Web server with the return(pass) directive. Cookies of any other form are stripped off with the unset req.http.cookie directive. Likewise, cookies are stripped from all static file requests.

- identify_device: This is where we’ve defined the user agent logic. Although we’re currently serving only two themes, standard and WPtouch, it leaves room if you’d like to serve themes optimised for other kinds of user agents. Maybe Onswipe for tablets?

- vcl_hash: This section makes sure that when the same URL is requested from different kinds of devices, Varnish caches a separate copy for each and serves the right copy to the right device. Without it, if I open a non-cached URL on my Android phone and you open the same URL next on your desktop, you’ll be served the WPtouch theme even on your desktop. Yikes!

- vcl_fetch: Now that Varnish has received a request it couldn’t serve from its cache, it’s time to fetch it from the backend. The logic goes like this: if the requested URL is for wp-admin, wp-login.php, xmlrpc.php or a “preview post” (from the WP post editor), the response is passed straight through without being cached (hit_for_pass). For other page requests, the Set-Cookie header is unset and the page is saved in cache for 1 hour. All static files are saved for a year.

- vcl_deliver: This is delivery time. If the requested object was served from cache (obj.hits > 0), an X-Cache: HIT header is added to the response; otherwise, X-Cache: MISS.

- vcl_hit and vcl_miss: These sections are specifically defined for PURGE requests. If the object is in cache, it’s purged (its TTL is set to 0s) and a 200 OK is returned; otherwise, a 404 Not cached is returned (404, because there was nothing in cache to purge).
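The walkthrough above boils down to a small decision table. Here’s a simplified Bash model of it, assuming a plain GET request and ignoring the Accept-Encoding and tracking-parameter rules. cache_decision is a hypothetical name, and the VCL’s (\?.*|)$ suffix is written as the equivalent (\?.*)?$ because empty alternatives aren’t portable in POSIX regexes.

```shell
#!/usr/bin/env bash
# Hypothetical model of how the VCL above treats a GET request.
# Arguments: the request URL and its Cookie header (may be empty).
cache_decision() {
    local url="$1" cookie="$2"
    local static='\.(gif|jpg|jpeg|swf|css|js|flv|mp3|mp4|pdf|ico|png)(\?.*)?$'
    local wp_cookie='(wordpress_|wp-settings-)'
    local no_cache='wp-(login|admin)|preview=true|xmlrpc\.php'

    # vcl_recv: static files lose their cookies and query strings.
    if [[ $url =~ $static ]]; then
        cookie=""
        url="${url%%\?*}"
    fi
    # vcl_recv: logged-in or WP settings cookies go straight to the backend.
    if [[ -n $cookie && $cookie =~ $wp_cookie ]]; then
        echo "pass"; return
    fi
    # vcl_fetch: admin, login, preview and XML-RPC responses are never cached.
    if [[ $url =~ $no_cache ]]; then
        echo "hit_for_pass"; return
    fi
    # vcl_fetch: static files are kept for a year, everything else for an hour.
    if [[ $url =~ $static ]]; then
        echo "cache 365d"
    else
        echo "cache 1h"
    fi
}
```

Note the wp-admin stylesheet case in the checks: it is a static file, but vcl_fetch tests the wp-(login|admin) pattern first, so it is still not cached.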

Yes, I know I explained it like a layman… because that’s how I understand the file myself. If you ask me to write a VCL file from scratch, I’ll probably retire :-)

Anyway, time to start the Varnish service and enable it at boot:

```shell
# /etc/init.d/varnish start
# chkconfig varnish on
```

If you get the shell prompt back without any error message, the VCL file compiled correctly and is loaded in memory. If there are typos or silly errors in the file, Varnish usually throws an error detailing the line and column number of the error, and most of the time also hints at how to fix it.

Finally, if your Web server is still listening on port 80 and Varnish on a different port (say, 6081), you can check whether it’s working by opening http://www.example.com:6081/ (for this test, the backend section has to point at port 80, where the Web server still is). Hopefully, your browser fetches the page. If the browser just sits there waiting, Varnish is somehow failing to connect to the backend Web server. Time to check that the backend section is in order.
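Besides eyeballing the page, the X-Cache header our vcl_deliver adds tells you whether a response came from cache. Here’s a small sketch (x_cache_status is a hypothetical helper) that pulls it out of raw response headers:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read raw HTTP response headers on stdin and print the
# value of the X-Cache header set in vcl_deliver (HIT or MISS), or "none".
x_cache_status() {
    tr -d '\r' | awk -F': *' '
        tolower($1) == "x-cache" { print $2; found = 1 }
        END { if (!found) print "none" }'
}
```

Usage: curl -s -D - -o /dev/null http://www.example.com/ | x_cache_status (add your test port to the URL if Varnish isn’t on 80 yet). Run it twice: the first request for an uncached page should report MISS and, within the page’s TTL, the second one HIT.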

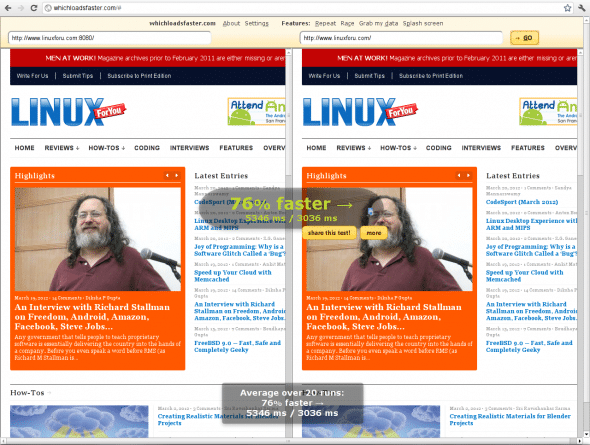

Otherwise, use a service like whichloadsfaster.com to test your results. I just ran a test for www.opensourceforu.com by temporarily allowing port 8080 (the Nginx Web server port) through the firewall.

Neat performance improvement, eh?

So, if you’re satisfied with your results too, why not move your Web server to some other port (and point the backend section at it), switch Varnish to 80, and you’re done.

I hope you’ve already installed the Varnish HTTP Purge plugin. It sends a purge request for the home page and the post itself whenever you publish or update a post. If you’d like to refresh any other category, tag or archive page immediately, you can do it manually by running the following command from a machine whose IP address is in the purge ACL:

```shell
curl -X PURGE http://www.example.com/category/something/
```

You should see something like this:

```html
<?xml version="1.0" encoding="utf-8"?>
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html>
  <head>
    <title>200 OK</title>
  </head>
  <body>
    <h1>Error 200 OK</h1>
    <p>OK</p>
    <h3>Guru Meditation:</h3>
    <p>XID: 420474792</p>
    <hr>
    <p>Varnish cache server</p>
  </body>
</html>
```

However, you’ll see the following if the page is not available in cache:

```html
<?xml version="1.0" encoding="utf-8"?>
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Strict//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd">
<html>
  <head>
    <title>404 Not cached</title>
  </head>
  <body>
    <h1>Error 404 Not cached</h1>
    <p>Not cached</p>
    <h3>Guru Meditation:</h3>
    <p>XID: 420474818</p>
    <hr>
    <p>Varnish cache server</p>
  </body>
</html>
```
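Those two pages are synthetic responses from our vcl_hit and vcl_miss sections, and a refused request gets the 405 from vcl_recv. If you purge URLs often, a small wrapper that reports just the status code is handier than reading HTML. purge_url and describe_purge below are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Hypothetical helpers around: curl -X PURGE <url>
# Map the status codes our VCL sends back to a one-line explanation.
describe_purge() {
    local code="$1" url="$2"
    case "$code" in
        200) echo "purged: $url" ;;                                   # vcl_hit
        404) echo "not cached, nothing to purge: $url" ;;             # vcl_miss
        405) echo "refused, client IP not in the purge ACL: $url" ;;  # vcl_recv
        *)   echo "unexpected status $code: $url" ;;  # e.g. 000 if curl can't connect
    esac
}

# Send the PURGE (from an IP listed in the purge ACL) and describe the result.
purge_url() {
    local url="$1" code
    code=$(curl -s -o /dev/null -w '%{http_code}' -X PURGE "$url")
    describe_purge "$code" "$url"
}
```

Usage: purge_url http://www.example.com/category/something/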

Hey, what about logs, and all?

Ah, yeah. Well, your Web server access logs will be rendered useless once Varnish is the frontend: all requests will appear to come from localhost, and cache hits never reach the Web server at all. So configuring Varnish logging is very important at this stage.

If you’re on CentOS/Red Hat, it’s as simple as starting the varnishncsa service and enabling it at boot:

```shell
# service varnishncsa start
# chkconfig varnishncsa on
```

On Debian, it’s not quite as easy, but it’s not difficult either. Run the following command:

```shell
# varnishncsa -a -w /var/log/varnish/varnishncsa.log -D -P /var/run/varnishncsa.pid
```

Don’t forget to add the line to your rc.local file so that it starts automatically after a server reboot.
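One catch worth knowing: Debian’s stock /etc/rc.local ends with exit 0, so a line appended after it never runs. Here’s a sketch of a helper (add_to_rc_local is a hypothetical name) that inserts the command before that final exit 0, and only once:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add a command to an rc.local-style file exactly once,
# placing it before the last "exit 0" line (or at the end if there is none).
add_to_rc_local() {
    local rc_file="$1" cmd="$2"
    # Already there? Then there's nothing to do.
    if grep -qxF -- "$cmd" "$rc_file"; then
        return 0
    fi
    if grep -qx 'exit 0' "$rc_file"; then
        # Pass the command through the environment so awk doesn't mangle backslashes.
        CMD="$cmd" awk '
            { line[NR] = $0; if ($0 == "exit 0") last = NR }
            END { for (i = 1; i <= NR; i++) { if (i == last) print ENVIRON["CMD"]; print line[i] } }
        ' "$rc_file" > "$rc_file.tmp"
        # Write back with cat so the file keeps its executable bit.
        cat "$rc_file.tmp" > "$rc_file" && rm -f "$rc_file.tmp"
    else
        printf '%s\n' "$cmd" >> "$rc_file"
    fi
}
```

Usage (as root): add_to_rc_local /etc/rc.local "varnishncsa -a -w /var/log/varnish/varnishncsa.log -D -P /var/run/varnishncsa.pid"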

By the way, at least on CentOS, we need to tweak the logrotate script a bit; otherwise, depending on your traffic, you might end up with a 100MB log file within a couple of days. Edit /etc/logrotate.d/varnish to look like this:

```
/var/log/varnish/*.log {
    daily
    missingok
    rotate 52
    notifempty
    sharedscripts
    compress
    delaycompress
    postrotate
        /bin/kill -HUP `cat /var/run/varnishlog.pid 2>/dev/null` 2> /dev/null || true
        /bin/kill -HUP `cat /var/run/varnishncsa.pid 2>/dev/null` 2> /dev/null || true
    endscript
}
```

Change the parameters as per your requirements, of course.

Finally, there are a few commands you can use to monitor Varnish. The screenshot I used in Figure 1 was varnishhist, which presents a real-time ASCII graph. Another handy command to monitor real-time stats is varnishstat; the important parameters here are cache_hit and cache_miss. Also, to see which pages are hitting the backend, run varnishtop -i txurl.
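If you’d rather have a single number than watch counters tick, the hit ratio is just cache_hit / (cache_hit + cache_miss). A small sketch that computes it from varnishstat -1, which prints each counter once per line, assuming the usual layout of name first and value second; hit_ratio is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the cache hit ratio from `varnishstat -1` output
# on stdin (assumes column 1 is the counter name and column 2 its value).
hit_ratio() {
    awk '
        $1 == "cache_hit"  { hit = $2 }
        $1 == "cache_miss" { miss = $2 }
        END {
            total = hit + miss
            if (total == 0) { print "n/a"; exit }
            printf "%.1f%%\n", 100 * hit / total
        }'
}
```

Usage: varnishstat -1 | hit_ratio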

So, kinda like a done deal here, finally!

Of course, all that I’ve covered, including the config files, is just the basics. You can configure Varnish for ESI, load balancing, grace periods, and what not.

References

- Mobile Detection with Varnish and Drupal

- WordPress VCL when you have a plugin that purges content

- Links to useful VCL examples

- Varnish 3.0 Documentation

Feature image courtesy: desertspotter. Reused under the terms of CC-BY-NC-ND 2.0 License.

odio linux

Thanks for this useful blog post!

Sadly, I’ve not been able to get this to work yet, despite MUCH reading – probably many of the sources you used to put this together! Although I see the hash output in varnishlog for mobile devices, the cached and ultimately served version depends on which device gets there first – if a mobile device is first, then regular computers will see the mobile version of a page.

Output from varnishlog:

```
15 VCL_call c recv lookup
15 VCL_call c hash
15 Hash c /wp-content/plugins/buddystream/extentions/default/colorbox/images/lightbox/loading.gif
15 Hash c my.domain.name
15 Hash c mobile-smart
15 VCL_return c hash
```

As you can see, it does appear to be hashing the mobile-smart X-Device.

I may be wrong, but I don’t think that the vcl_hash will work as it is in your example either? Here is what I have, combining your article with others:

```vcl
sub vcl_hash {
    hash_data(req.url);
    if (req.http.host) {
        hash_data(req.http.host);
    } else {
        hash_data(server.ip);
    }
    # And then add the device to the hash (if its a mobile device)
    if (req.http.X-Device ~ "^mobile") {
        hash_data(req.http.X-Device);
    }
    return (hash);
}
```

Did you experience any such difficulties? I’m rapidly losing hair over this particular problem! :)

Thanks!

@CraigLuke I’m assuming you’re using the latest WP and WPTouch.

Let me know what happens, when you try this:

```vcl
sub vcl_hash {
    # And then add the device to the hash (if its a mobile device)
    if (req.http.X-Device ~ "^mobile") {
        hash_data(req.http.X-Device);
    }
    hash_data(req.url);
    if (req.http.host) {
        hash_data(req.http.host);
    } else {
        hash_data(server.ip);
    }
    return (hash);
}
```

In fact, the following routine will be appended to your vcl’s vcl_hash when you load it in memory:

```vcl
hash_data(req.url);
if (req.http.host) {
    hash_data(req.http.host);
} else {
    hash_data(server.ip);
}
return (hash);
```

Any particular reason why you want it before the hash mobile statements?

[…] in case you’re running Varnish as a reverse proxy in front of your Web server, like we are, then adding these rules in your Web server’s config files won’t make any difference. […]

Atanu Datta, nice post. I have a beginner’s question: how do I configure Varnish properly with W3 Total Cache?

I see that when I run it, more than 60% of the server’s memory is used.

Have you had problems with backends on ports other than 80 and 8080?