This step-by-step guide provides a good understanding of the Dalai Library and how to get started with the LLaMA and Alpaca language models on your own machine. Once you have done that, the applications are limited only by your imagination.

Have you ever thought about running cutting-edge language models like ChatGPT on your own computer? You’re not alone! In this adventure, we’ll explore how to make it happen with the LLaMA and Alpaca models, using the Dalai Library. Get ready for a detailed, step-by-step, and entertaining journey into the world of local AI! By the end of it you should, hopefully, have a good understanding of how generative AI can live on your own machine.

The local AI dilemma: Our own private sanctuary

Let’s face it, we’re all excited about the possibilities generative AI models bring. But with great power comes great responsibility (thanks, Uncle Ben). One of the biggest concerns we have is data privacy. Centralised models like those offered by OpenAI and Microsoft are fantastic, but do we really want to hand over our data on a silver platter?

Imagine if Batman had to share his Batcave location with everyone. Not cool, right? That’s where running an AI model on your local machine comes into play. It’s like having your very own Batcave (minus the cool gadgets and bat-themed vehicles, of course).

LLaMA: The compact powerhouse created by Meta AI

LLaMA is a foundational language model that has managed to achieve something incredible. Its 13B-parameter version, despite being roughly 13x smaller than the colossal GPT-3 (175B parameters), outperforms the latter on most benchmarks! This compact powerhouse is capable of running on local machines. One daring individual even managed to get it working on a Raspberry Pi!

Now, thanks to some unforeseen circumstances, LLaMA is available for non-commercial use. LLaMA, developed by the talented team at Meta AI, and its fine-tuned sibling Alpaca, from researchers at Stanford, are making local AI usage more accessible than ever before. So, without further ado, let’s get started on this great ride!

Installing and running LLaMA

Clone the repo and install the necessary prerequisites from https://github.com/cocktailpeanut/dalai.

To kick things off, run the command:

npx dalai llama install 7B

Before you proceed, though, be aware that LLaMA-7B needs around 31GB of storage. So make sure there’s enough space on your computer for this small yet mighty guest. I struggled with this a lot!!
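Since running out of space halfway through the download is no fun, here is a minimal sketch of a pre-flight check you could run with Node before installing. The 31GB figure is the one quoted above; the helper names are hypothetical, and checking the home directory is an assumption about where the models end up, so point it at whichever drive you actually install to.

```javascript
// Sketch: check free disk space before running `npx dalai llama install 7B`.
// REQUIRED_GB comes from the article; the helper names and the choice of the
// home directory as the install location are assumptions.
const fs = require("fs");
const os = require("os");

const BYTES_PER_GB = 1024 ** 3;
const REQUIRED_GB = 31;

// Pure helper: true when freeBytes covers requiredGB gigabytes.
function hasEnoughSpace(freeBytes, requiredGB) {
  return freeBytes >= requiredGB * BYTES_PER_GB;
}

// Free bytes on the filesystem holding `dir`, or null when it cannot be
// measured (fs.statfsSync only exists in newer Node.js releases).
function freeBytesAt(dir) {
  if (typeof fs.statfsSync !== "function") return null;
  try {
    const stats = fs.statfsSync(dir);
    return stats.bavail * stats.bsize;
  } catch (err) {
    return null;
  }
}

const free = freeBytesAt(os.homedir());
if (free === null) {
  console.log("Could not measure free space; please check it manually.");
} else if (hasEnoughSpace(free, REQUIRED_GB)) {
  console.log(`OK: ${(free / BYTES_PER_GB).toFixed(1)} GB free.`);
} else {
  console.log(
    `Only ${(free / BYTES_PER_GB).toFixed(1)} GB free; ` +
      `LLaMA-7B needs about ${REQUIRED_GB} GB.`
  );
}
```

Save it as something like check-space.js and run it with node check-space.js. If it cannot measure the disk on your Node version, it says so instead of guessing.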

To run LLaMA, simply type:

npx dalai serve

...and you’ve done it! You now have a large language model running locally. Give yourself a pat on the back! Why? Because I had to go through a lot of trouble to get this running, such as installing specific versions of Python and Node. You can find the details in the README file at https://github.com/cocktailpeanut/dalai.
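To save you some of that trouble, here is a rough sketch that reports which Node and Python versions you have. The thresholds in it (Node.js 18 or newer, Python 3.10 or older) are my reading of the Dalai README and should be treated as assumptions; please confirm them there. The function names and the python3 command name are also just illustrative.

```javascript
// Sketch: report local Node.js and Python versions before installing Dalai.
// ASSUMED thresholds (verify in the Dalai README): Node.js >= 18, Python <= 3.10.
const { execSync } = require("child_process");

// Pull "major.minor" out of strings such as "v18.15.0" or "Python 3.10.9".
function parseVersion(text) {
  const match = /(\d+)\.(\d+)/.exec(text);
  return match ? { major: Number(match[1]), minor: Number(match[2]) } : null;
}

function nodeLooksOk(version) {
  return version !== null && version.major >= 18;
}

function pythonLooksOk(version) {
  return version !== null && version.major === 3 && version.minor <= 10;
}

// Ask the system for its Python version; returns "" when python3 is missing.
// The command may be `python` rather than `python3` on some systems.
function pythonVersionText() {
  try {
    return execSync("python3 --version", {
      encoding: "utf8",
      stdio: ["ignore", "pipe", "ignore"],
    });
  } catch (err) {
    return "";
  }
}

const nodeVersion = parseVersion(process.version);
const pythonVersion = parseVersion(pythonVersionText());

console.log(
  `Node.js ${process.version}: ${nodeLooksOk(nodeVersion) ? "looks fine" : "check the README"}`
);
console.log(
  pythonVersion === null
    ? "Python: not found via python3"
    : `Python ${pythonVersion.major}.${pythonVersion.minor}: ` +
        `${pythonLooksOk(pythonVersion) ? "looks fine" : "check the README"}`
);
```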

However, when I ran this, the model answered a simple prompt, “I feel like having some snacks because . .”, with a stream of meaningless, garbled characters. Upon further research, it looks like this is a well-known issue. Someone in a discussion channel suggested: “Check Alpaca model,” so I did that.

Alpaca: The instruction-following marvel

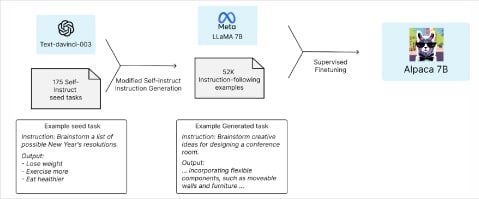

Alpaca is a fine-tuned version of LLaMA that’s been designed to follow instructions, much like ChatGPT. The jaw-dropping part is that the entire fine-tuning process cost less than US$600! When you compare that to GPT-3’s staggering estimated US$5,000,000 price tag, it’s an absolute steal!

So, how did they do it? Well, OpenAI’s text-davinci-003 model unwittingly lent a helping hand by expanding 175 self-instruct seed tasks into a whopping 52,000 instruction-following examples for supervised fine-tuning. Talk about a clever workaround! The brains behind this amazing model are Rohan Taori et al. at Stanford. It’s so creative: they basically used text-davinci-003 as a teacher for LLaMA to produce Alpaca! You can find the full write-up at https://crfm.stanford.edu/2023/03/13/alpaca.html.
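To make "instruction-following examples" a bit more concrete, here is a small sketch of how one such example can be turned into a training prompt. The instruction/input/output fields and the template wording follow the format published in the Stanford Alpaca repository as I understand it; the buildPrompt helper and the snack example are made up for illustration.

```javascript
// Sketch: turn one Alpaca-style example into a prompt string.
// Template text follows the Stanford Alpaca repository format as I understand
// it; buildPrompt and the sample record are hypothetical.
const INTRO_WITH_INPUT =
  "Below is an instruction that describes a task, paired with an input that " +
  "provides further context. Write a response that appropriately completes " +
  "the request.";
const INTRO_NO_INPUT =
  "Below is an instruction that describes a task. Write a response that " +
  "appropriately completes the request.";

// Builds the prompt; the "### Input:" section appears only when input is non-empty.
function buildPrompt(example) {
  const hasInput =
    typeof example.input === "string" && example.input.trim() !== "";
  if (hasInput) {
    return (
      `${INTRO_WITH_INPUT}\n\n### Instruction:\n${example.instruction}` +
      `\n\n### Input:\n${example.input}\n\n### Response:`
    );
  }
  return `${INTRO_NO_INPUT}\n\n### Instruction:\n${example.instruction}\n\n### Response:`;
}

// One made-up record in the instruction/input/output shape.
const sample = {
  instruction: "Suggest a snack for a rainy afternoon.",
  input: "",
  output: "Hot tea with freshly fried pakoras is hard to beat.",
};

console.log(buildPrompt(sample));
```

During fine-tuning, the model sees the prompt and learns to produce the output field as its response, which is why Alpaca answers instructions where plain LLaMA tends to ramble.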

Installing and running Alpaca

To install Alpaca, all you have to do is run:

npx dalai alpaca install 7B

Alpaca is a lightweight champ, requiring only 4GB of storage, so it won’t take up much space on your computer.

To run Alpaca, just repeat the command:

npx dalai serve

...and you’ve got it: your very own ChatGPT-like model, ready to serve!

The versatile Dalai API

The fun doesn’t end here – the Dalai Library also offers an API that enables you to integrate both LLaMA and Alpaca into your own applications. This opens up a world of possibilities for innovative projects and experiments on your local machine.
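To give a flavour of what that integration might look like, here is a hedged sketch. It assumes the library exposes a request(options, callback) method that streams generated tokens to the callback, and that models are named like "alpaca.7B"; both are my reading of the Dalai README, so double-check them against the version you installed. The ask helper and the stub client are hypothetical and only exist so that the snippet runs without any model on disk.

```javascript
// Sketch: send a prompt to a Dalai-like client and stream the reply.
// ASSUMPTIONS: request(options, callback) streams tokens to the callback, and
// "alpaca.7B" is a valid model name. Verify both in the Dalai README.

// Forwards each generated token for `prompt` to onToken.
function ask(client, model, prompt, onToken) {
  return client.request({ model, prompt }, onToken);
}

// Stand-in client so the sketch runs with no model installed.
const stubClient = {
  request(req, callback) {
    for (const token of ["(stub reply to: ", req.prompt, ")\n"]) {
      callback(token);
    }
  },
};

ask(stubClient, "alpaca.7B", "I feel like having some snacks because", (token) =>
  process.stdout.write(token)
);

// With the real library installed, the call would look roughly like this
// (assumed usage, not verified here):
//   const Dalai = require("dalai");
//   ask(new Dalai(), "alpaca.7B", "Your prompt", (t) => process.stdout.write(t));
```

Keeping the client as a parameter means you can develop and test the rest of your app against the stub, then swap in the real thing once the model is downloaded.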

Think about creating your own AI-powered chatbot, building a smart writing assistant, or even developing an AI tutor for your favourite subject! Now that you are not limited to 32k tokens, think about feeding in all the classics written by your favourite author (who is no longer around to give us more magical creations), and creating the books you always craved! The only limit is your imagination, and I would love to hear about the creative ways you’re using LLaMA and Alpaca in your projects.

So, go ahead and explore the potential of these powerful yet accessible AI models. Just remember, both LLaMA and Alpaca are intended for non-commercial use only. Happy experimenting, and don’t forget to share your groundbreaking ideas with the community!