By understanding the inner workings of connection pooling and building your own pools, you will be able to build more reliable and high-performing applications, especially at scale. This first part of a two-part series demystifies the magic of connection pooling.

If you’re a software engineer, you’ve likely encountered the need to manage database connections efficiently. Connection pools are a critical part of that: they allow applications to efficiently manage connections to a database or any other system. If your application requires constant access to a system, establishing a new connection for every request can quickly become resource-intensive, causing the application to slow down or even crash. This is where connection pools come in.

As engineers, we often don’t spend a lot of time thinking about connections. A single connection is typically inexpensive. However, as things scale up, the costs of creating and maintaining these connections increase accordingly. This is why I believe understanding the world of connection pooling is important. It will enable us to build more performant and reliable applications, especially at scale.

Typical connections

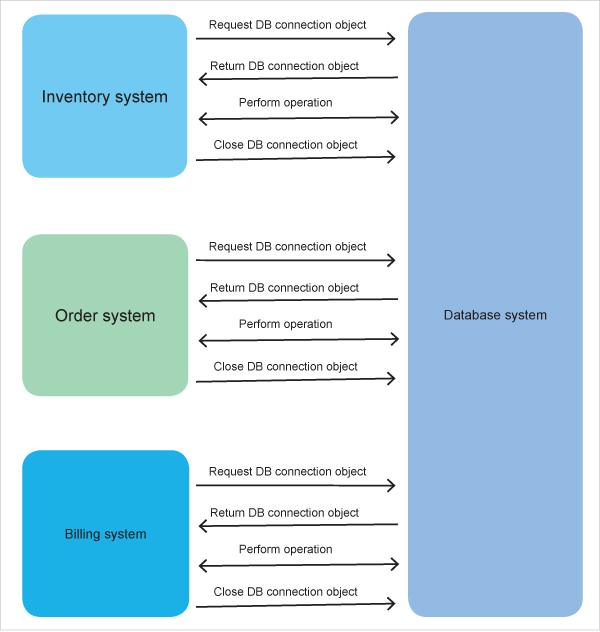

Before jumping to connection pooling, let us understand how an application typically connects to a system to perform any operation:

1. The application attempts to open a connection.

2. A network socket is opened to connect the application to the system.

3. Authentication is performed.

4. The operation is performed.

5. The connection is closed.

6. The socket is closed.

As you can see, opening and closing the connection and the network socket is a multi-step process that consumes compute resources. However, not closing the connection, or keeping it idle, also consumes resources. This is why we need connection pooling. While you will mostly see connection pooling being used in database systems, the concept can be extended to any application that communicates with a remote system over a network.
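To make the cost of this lifecycle concrete, here is a minimal sketch of an application that opens a fresh connection for every operation. It assumes a throwaway local TCP echo server standing in for the remote system, and it skips authentication; `startEchoServer` and `request` are hypothetical helpers written for this illustration only.

```go
package main

import (
	"bufio"
	"fmt"
	"net"
)

// startEchoServer (hypothetical helper) starts a throwaway TCP server on a
// random local port. It echoes one line back per connection and stands in
// for the remote system.
func startEchoServer() (net.Listener, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return nil, err
	}
	go func() {
		for {
			conn, err := ln.Accept()
			if err != nil {
				return // listener was closed
			}
			go func(c net.Conn) {
				defer c.Close()
				line, err := bufio.NewReader(c).ReadString('\n')
				if err == nil {
					fmt.Fprint(c, line)
				}
			}(conn)
		}
	}()
	return ln, nil
}

// request (hypothetical helper) opens a new connection, performs a single
// operation and closes the connection again.
func request(addr, msg string) (string, error) {
	conn, err := net.Dial("tcp", addr) // open the connection and its socket
	if err != nil {
		return "", err
	}
	defer conn.Close() // close the connection and its socket

	if _, err := fmt.Fprintln(conn, msg); err != nil { // the operation itself
		return "", err
	}
	reply, err := bufio.NewReader(conn).ReadString('\n')
	if err != nil {
		return "", err
	}
	return reply[:len(reply)-1], nil // strip the trailing newline
}

func main() {
	ln, err := startEchoServer()
	if err != nil {
		panic(err)
	}
	defer ln.Close()

	// Each iteration pays the full connect and close cost again.
	for i := 0; i < 3; i++ {
		reply, err := request(ln.Addr().String(), fmt.Sprintf("ping %d", i))
		if err != nil {
			panic(err)
		}
		fmt.Println(reply)
	}
}
```

Three operations mean three dials and three closes. A pool would aim to pay the dial cost once and reuse the connection.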

What are connection pools?

Connection pools are typically a cache of connections that can be reused by an application. Instead of creating a new connection each time an application needs to interact with the system, a connection is borrowed from the pool. When it’s no longer needed, it is returned to the pool to be reused later. This approach ensures that the application always has access to a ready-to-use connection without the need to create new connections continuously.

Connection pooling reduces the cost of opening and closing connections by maintaining a ‘pool’ of open connections that can be passed from one operation to another as needed. This way, we are spared the expense of having to open and close a brand new connection for each operation the system is asked to perform.

Here, I’ll demystify connection pools, explain how they work and how to implement them, and explore some of the common issues associated with them. I’ll also discuss connection pooling in the cloud and why it’s important for modern-day applications. By the end of this post, you should have a good understanding of connection pools and how they can help you build more efficient and robust applications.

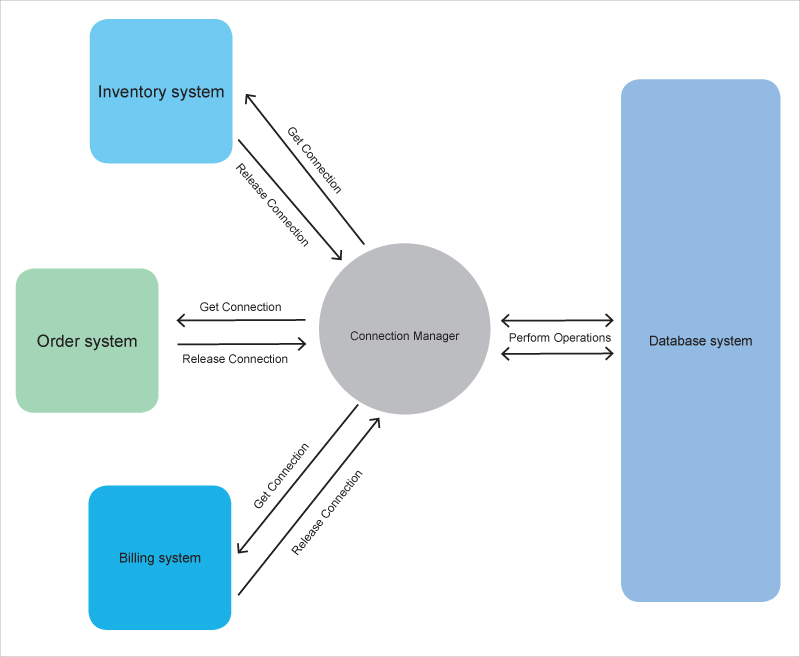

How connection pools work

The basic principle of connection pooling is to maintain a pool of connections that are ready for use, rather than creating and destroying connections as required. When a client requests a connection from the pool, the connection pool manager checks if there are any available connections in the pool. If one exists, the connection pool manager hands it to the client. Otherwise, provided the pool has not reached its maximum size, the connection pool manager creates a new connection, adds it to the pool, and hands the new connection to the client.
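The reuse-or-create decision described above can be sketched in a few lines. This is only an illustration under simplifying assumptions: `Conn`, `Pool`, `Acquire`, `Release` and `ErrExhausted` are hypothetical names, the connection is a stand-in struct, and the sketch is not safe for concurrent use.

```go
package main

import (
	"errors"
	"fmt"
)

// Conn is a hypothetical stand-in for a real connection.
type Conn struct{ ID int }

// ErrExhausted is returned when no idle connection exists and the pool has
// already created its maximum number of connections.
var ErrExhausted = errors.New("pool exhausted")

// Pool hands out idle connections and creates new ones on demand.
type Pool struct {
	idle    chan *Conn // connections ready for reuse
	created int        // total connections created so far
	max     int        // upper bound on created connections
}

func NewPool(max int) *Pool {
	return &Pool{idle: make(chan *Conn, max), max: max}
}

// Acquire returns an idle connection if one exists; otherwise it creates a
// new one, as long as the pool is below its maximum size.
// The created counter is unguarded to keep the sketch short.
func (p *Pool) Acquire() (*Conn, error) {
	select {
	case c := <-p.idle:
		return c, nil // reuse
	default:
	}
	if p.created >= p.max {
		return nil, ErrExhausted
	}
	p.created++
	return &Conn{ID: p.created}, nil // create
}

// Release puts a connection back so that a later Acquire can reuse it.
func (p *Pool) Release(c *Conn) {
	select {
	case p.idle <- c:
	default: // pool already full; a real pool would close the connection here
	}
}

func main() {
	p := NewPool(2)
	a, _ := p.Acquire() // nothing idle yet, so a connection is created
	p.Release(a)
	b, _ := p.Acquire()            // the idle connection is reused
	fmt.Println(a == b, p.created) // prints "true 1"
}
```

The second `Acquire` returns the very same connection, so only one connection is ever created.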

Connection pooling algorithms are used to manage the pool of connections. These algorithms determine when to create new connections and when to reuse existing connections. The most common algorithms used for connection pooling are LRU (least recently used), and round-robin or FIFO (first in, first out).

In LRU, the connection pool manager keeps track of when each connection was last used. When a connection is requested, the connection pool manager selects the least recently used connection from the pool and returns it to the user.

In FIFO, the connection pool manager manages connections in the order they were added to the pool. When a connection is requested, the connection pool manager selects the connection that has been in the pool the longest and returns it to the user.

Connection pooling configurations are used to set the parameters for the connection pool. These configurations include settings such as the minimum and maximum number of connections in the pool, the maximum time a connection can be idle before it is closed, and the maximum time a connection can be used before it is returned to the pool.

Overall, the basic principles of connection pooling involve creating a pool of database connections, managing the pool using algorithms and configurations, and reusing the connections as required to reduce overhead and improve performance.

Implementing our own connection pool

To implement connection pooling in a specific programming language or framework, developers typically use connection pool libraries or built-in connection pool features. Code snippets and examples for implementing connection pools are often available in library documentation or online resources.

However, simply integrating an existing library into a dummy application won’t teach us much. As software engineers, we can gain a wealth of knowledge by implementing our own connection pool. First, it can significantly improve the performance of our application by reducing the overhead associated with establishing new connections. It can also help to prevent connection leaks and other issues that can arise from improperly managed connections.

Moreover, it provides us with fine-grained control over connection creation, usage, and destruction, allowing us to optimise our application’s resource utilisation. By implementing our own connection pooling, we can gain a deeper understanding of how our application works and thereby improve its scalability and reliability.

Building blocks

For ease of demonstration, we can use an SQLite3 database and implement our own custom pooling for it. In this example, we’ll be using the Go programming language due to its simplicity. However, you can use any language of your choice.

To start with, our ConnectionPool struct will look something like this:

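    import (
        "database/sql"
        "errors"
        "fmt"
        "sync"
    )

    type ConnectionPool struct {
        queue       chan *sql.DB // idle connections ready to be borrowed
        maxSize     int          // maximum number of connections the pool may hold
        currentSize int          // number of connections currently in the pool
        lock        sync.Mutex   // guards currentSize and access to the queue
        isNotFull   *sync.Cond   // signalled when the pool has room for a connection
        isNotEmpty  *sync.Cond   // signalled when the pool holds at least one connection
    }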

Here, the ConnectionPool struct contains the queue, maxSize, currentSize, lock, isNotFull, and isNotEmpty fields. The queue field is a channel that holds pointers to sql.DB connections. The sql.DB type belongs to Go’s built-in database/sql package, which provides a generic interface around SQL or SQL-like databases. This interface is implemented by the github.com/mattn/go-sqlite3 package, which we will be using as an SQLite3 driver. Strictly speaking, a sql.DB is a database handle that manages its own set of underlying connections; for this demonstration, we treat each handle as a single pooled connection.

The maxSize field represents the maximum number of connections that the pool can have, and the currentSize field represents the current number of connections in the pool. The lock field is a mutex (https://en.wikipedia.org/wiki/Lock_(computer_science)) that ensures concurrent access to shared memory is synchronised. The isNotFull and isNotEmpty fields are condition variables that allow for efficient waiting and are used to signal when the pool is not full and not empty, respectively.

sync.Cond is a synchronisation primitive in Go that allows multiple goroutines to wait for a shared condition to be satisfied. It is used in conjunction with a mutex, which provides exclusive access to a shared resource (in this case the queue), to coordinate the execution of multiple goroutines. Channels can also be used for synchronisation, but they come with some overhead in terms of memory usage and complexity. In this case, sync.Cond provides a simpler and more lightweight alternative, as it allows waiting goroutines to be signalled efficiently.

With sync.Cond, goroutines waiting on a condition sleep until they are signalled and then recheck the condition in a loop before proceeding, rather than relying on a buffered channel that might hold stale data. Used carefully, this can improve overall performance and reduce the likelihood of race conditions or deadlocks.
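Before applying sync.Cond to the pool, it may help to see the wait-in-a-loop pattern in isolation. The sketch below is a tiny bounded buffer of integers guarded by one mutex and two condition variables; the `slots` type and its methods are hypothetical names used only for this illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// slots is a hypothetical bounded buffer guarded by one mutex and two
// condition variables that share it.
type slots struct {
	mu       sync.Mutex
	notEmpty *sync.Cond // signalled after an item is added
	notFull  *sync.Cond // signalled after an item is removed
	items    []int
	max      int
}

func newSlots(max int) *slots {
	s := &slots{max: max}
	s.notEmpty = sync.NewCond(&s.mu)
	s.notFull = sync.NewCond(&s.mu)
	return s
}

// put blocks while the buffer is full.
func (s *slots) put(v int) {
	s.mu.Lock()
	defer s.mu.Unlock()
	for len(s.items) == s.max { // recheck the condition after every wake-up
		s.notFull.Wait() // releases mu while waiting, reacquires it before returning
	}
	s.items = append(s.items, v)
	s.notEmpty.Signal()
}

// take blocks while the buffer is empty.
func (s *slots) take() int {
	s.mu.Lock()
	defer s.mu.Unlock()
	for len(s.items) == 0 {
		s.notEmpty.Wait()
	}
	v := s.items[0]
	s.items = s.items[1:]
	s.notFull.Signal()
	return v
}

func main() {
	s := newSlots(1)
	var wg sync.WaitGroup
	wg.Add(1)
	go func() {
		defer wg.Done()
		for i := 1; i <= 3; i++ {
			s.put(i) // blocks whenever the single slot is occupied
		}
	}()
	sum := 0
	for i := 0; i < 3; i++ {
		sum += s.take()
	}
	wg.Wait()
	fmt.Println(sum) // prints "6"
}
```

The `for` loop around each `Wait` matters: a goroutine cannot assume the condition holds when `Wait` returns, because the mutex is released while it sleeps and another goroutine may have changed the state in the meantime.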

Getting a connection object from the pool

Next, we will implement a Get method which will return a database object from an existing ConnectionPool:

    func (cp *ConnectionPool) Get() (*sql.DB, error) {
        cp.lock.Lock()
        defer cp.lock.Unlock()

        // If the pool is empty, wait until a connection is added back.
        for cp.currentSize == 0 {
            fmt.Println("Waiting for connection to be added back in the pool")
            cp.isNotEmpty.Wait()
        }

        fmt.Println("Got connection!! Releasing")
        db := <-cp.queue
        cp.currentSize--
        cp.isNotFull.Signal()

        // Verify that the connection is still alive before handing it out.
        if err := db.Ping(); err != nil {
            db.Close() // avoid leaking a connection that failed the check
            return nil, err
        }
        return db, nil
    }

This function, Get(), retrieves a connection from the pool. First, it acquires the lock to ensure exclusive access to the shared state of the connection pool. If the pool is currently empty, the function waits until a connection is added back to the pool.

Once a connection is available, the function dequeues it from the queue, decrements currentSize, and signals that the pool is not full. It then checks whether the connection is still valid by calling Ping(). If the connection is not valid, an error is returned, and the connection is not returned to the caller. If the connection is valid, it is returned to the caller.

Adding a connection object to the pool

Moving on, we introduce an Add method, which is responsible for adding the connection object back to the pool once it has been used:

    func (cp *ConnectionPool) Add(db *sql.DB) error {
        if db == nil {
            return errors.New("database not yet initiated. Please create a new connection pool")
        }

        cp.lock.Lock()
        defer cp.lock.Unlock()

        // If the pool is full, wait until a connection is taken out.
        for cp.currentSize == cp.maxSize {
            fmt.Println("Waiting for connection to be released")
            cp.isNotFull.Wait()
        }

        cp.queue <- db
        cp.currentSize++
        cp.isNotEmpty.Signal()
        return nil
    }

This function, Add(), adds a connection to the pool. It first checks whether the connection is ‘nil’ and returns an error if it is. Then, it acquires the lock to ensure exclusive access to the shared state of the connection pool. If the pool is currently full, the function waits until a connection is released from the pool.

Once there is space in the pool, the function enqueues the connection onto the queue, increments currentSize, and signals that the pool is not empty. The function returns ‘nil’ to indicate success.

Closing the connection pool

As the name suggests, the Close function is responsible for closing all database connections in the pool. It starts by acquiring the lock, then takes the connections out of the pool and closes them one by one. After closing each connection, it decrements the currentSize counter and signals any waiting goroutines that there is now space available in the pool. Finally, it closes the queue channel.

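    func (cp *ConnectionPool) Close() {
        cp.lock.Lock()
        defer cp.lock.Unlock()

        // Drain the pool, closing each idle connection in turn.
        for cp.currentSize > 0 {
            db := <-cp.queue
            db.Close()
            cp.currentSize--
            cp.isNotFull.Signal()
        }

        // Sending on a closed channel panics, so Add must not be called
        // once Close has returned.
        close(cp.queue)
    }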
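One thing to watch out for when closing a pool is what happens to connections that are handed back afterwards. A possible approach, sketched here with hypothetical names (`pool`, `resource`, `ErrClosed`) and a plain slice instead of a channel, is to track a closed flag so that a late Add returns an error instead of touching a pool that has already been shut down.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// ErrClosed is returned when a connection is handed back to a closed pool.
var ErrClosed = errors.New("pool is closed")

// resource is a hypothetical stand-in for a connection.
type resource struct{ closed bool }

func (r *resource) Close() { r.closed = true }

// pool is a hypothetical pool that remembers whether it has been closed.
type pool struct {
	mu     sync.Mutex
	idle   []*resource
	closed bool
}

// Add returns a resource to the pool, or closes it if the pool is closed.
func (p *pool) Add(r *resource) error {
	p.mu.Lock()
	defer p.mu.Unlock()
	if p.closed {
		r.Close() // nowhere to return it to, so release it immediately
		return ErrClosed
	}
	p.idle = append(p.idle, r)
	return nil
}

// Close closes every idle resource and marks the pool as closed.
// Calling it more than once is harmless.
func (p *pool) Close() {
	p.mu.Lock()
	defer p.mu.Unlock()
	if p.closed {
		return
	}
	p.closed = true
	for _, r := range p.idle {
		r.Close()
	}
	p.idle = nil
}

func main() {
	p := &pool{}
	a := &resource{}
	fmt.Println(p.Add(a)) // prints "<nil>"
	p.Close()
	b := &resource{}
	fmt.Println(p.Add(b), a.closed, b.closed) // prints "pool is closed true true"
}
```

Both resources end up closed: the first when the pool shuts down, the second when it is handed back too late.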

With the basic building blocks in place, can we use what we have so far to run our own connection pool? Not yet. We still need a way to initialise our connection pool. What’s missing, you ask? The answer to that, and more, will be covered in the next part of this series. Watch this space!