As AI adoption accelerates, enterprises are increasingly embracing multi-cloud strategies to leverage the unique strengths of different cloud providers. But managing these complex environments can be daunting. Open source tools offer a compelling solution, providing cost-effective, customisable, and vendor-neutral options for deployment, orchestration, monitoring, and governance. Let’s explore the advantages of multi-cloud for AI, the key open source tools, and best practices for success.

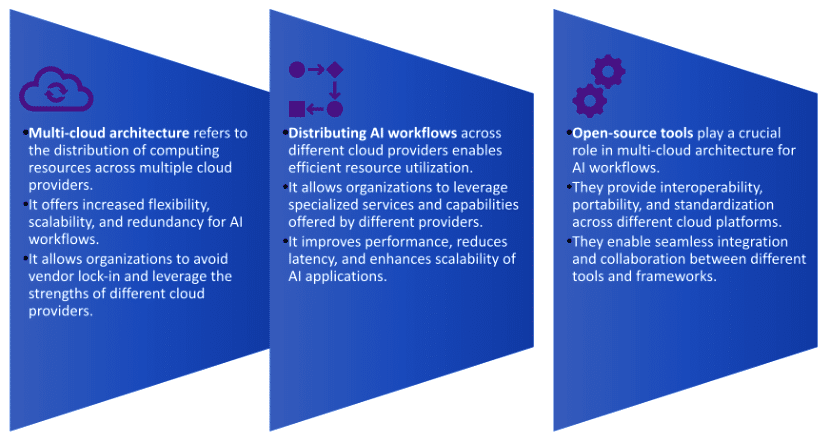

Multi-cloud architecture is the practice of distributing workloads and applications across multiple cloud providers. This approach lets organisations draw on the strengths of different cloud platforms, reduce the risk of vendor lock-in, and gain flexibility and scalability. The trade-off is complexity: spreading workloads across several providers makes the environment harder to manage, which exposes organisations to the risk of service disruptions. Most organisations still opt for it, because choosing the right product and platform for each workload improves performance across each provider’s geographically distributed infrastructure. It can also reduce costs, since each workload can be placed where resources are allocated most efficiently.

Navigating the multi-cloud landscape for AI

In the AI era, businesses are turning to multiple cloud providers to gain:

- Flexibility: Access a wider range of services and features tailored to specific AI tasks

- Scalability: Efficiently handle fluctuating workloads, from massive training jobs to real-time inferences

- Cost optimisation: Choose the most cost-effective solutions for different needs

- Availability: Minimise downtime and protect data by distributing workloads and data across regions and providers
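
The cost optimisation point above can be made concrete with a small placement helper. The following is a minimal sketch, not a pricing tool: the `Offer` type, the `cheapest_offer` function, the provider names, and every price are hypothetical placeholders chosen for illustration. It simply picks the lowest-cost offer that meets a job's GPU and region requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """One provider's offer for a GPU instance (all values are illustrative)."""
    provider: str
    region: str
    gpus: int
    hourly_usd: float


def cheapest_offer(offers, min_gpus, allowed_regions=None):
    """Return the lowest-cost offer that meets the GPU and region constraints.

    Returns None when no offer qualifies.
    """
    candidates = [
        o for o in offers
        if o.gpus >= min_gpus
        and (allowed_regions is None or o.region in allowed_regions)
    ]
    return min(candidates, key=lambda o: o.hourly_usd, default=None)


# Hypothetical catalogue: names and prices are placeholders, not real quotes.
OFFERS = [
    Offer("provider-a", "eu-west", gpus=8, hourly_usd=32.0),
    Offer("provider-b", "eu-west", gpus=8, hourly_usd=29.5),
    Offer("provider-c", "us-east", gpus=8, hourly_usd=27.0),
    Offer("provider-b", "eu-west", gpus=4, hourly_usd=15.0),
]

if __name__ == "__main__":
    # A training job that needs 8 GPUs and must stay in an EU region.
    print(cheapest_offer(OFFERS, min_gpus=8, allowed_regions={"eu-west"}))
```

In practice the catalogue would come from each provider's pricing data, and the selection would probably also weigh data transfer charges and data residency rules, which this sketch ignores.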

However, managing multi-cloud environments introduces new challenges.

- Complexity: Coordinating configurations, deployments, and operations across diverse platforms

- Vendor lock-in: Risk of over-reliance on a single provider, which limits agility and cost advantages

- Security: Ensuring consistent security policies and procedures across different cloud environments

- Governance: Maintaining visibility, control, and compliance across cloud deployments

Open source: The key to unlocking multi-cloud agility

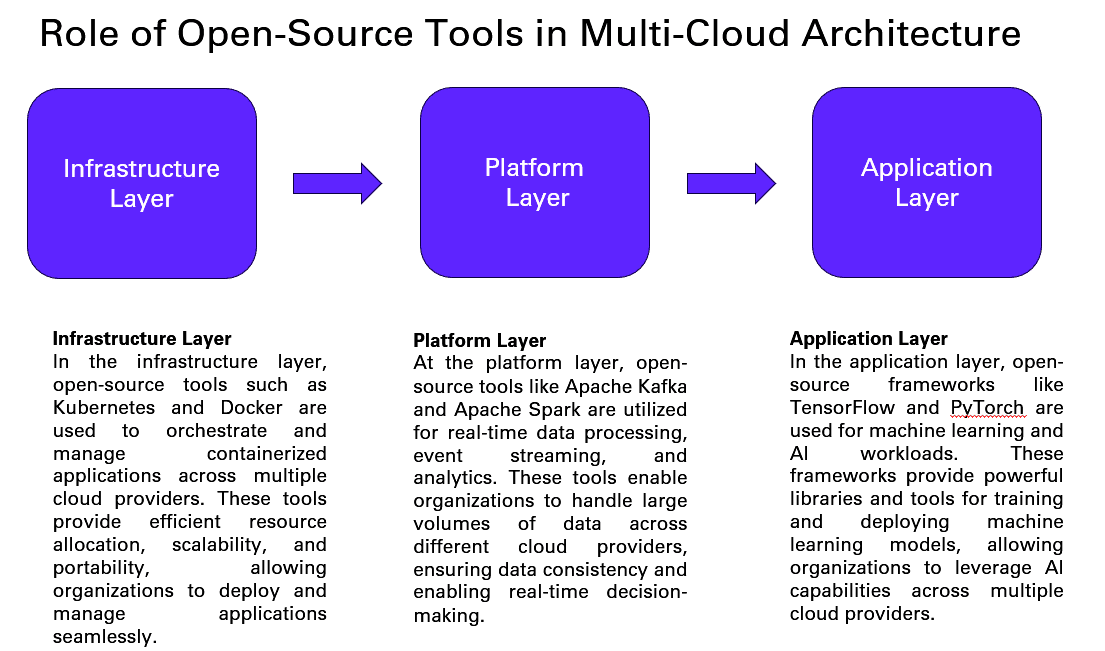

Open source tools play a crucial role in each layer of the multi-cloud architecture, providing flexibility, scalability, and cost-effectiveness. These tools enable organisations to leverage the benefits of multi-cloud environments while avoiding vendor lock-in and promoting interoperability.

Open source tools help organisations address the challenges listed earlier by offering:

- Cost-effectiveness: Freely available or with lower licensing costs, reducing software expenses

- Customisation: Adaptable to specific needs and workflows, promoting flexibility and innovation

- Vendor neutrality: Avoid lock-in, enabling switching between providers for optimal cost and performance

- Community-driven development: Continuous improvement, bug fixes, and security updates through active communities

Essential open source tools for your multi-cloud AI toolkit

- Infrastructure as Code (IaC): Terraform, Ansible, and Pulumi codify deployments for consistency, repeatability, and automation

- Container orchestration: Kubernetes manages containerised workloads across clusters, simplifying AI model deployments

- Monitoring and logging: Prometheus, Grafana, and Elasticsearch provide unified, real-time insights into multi-cloud resources and AI models

- Cost management: OpenStack CloudKitty tracks, analyses, and helps optimise cloud spending; commercial platforms such as CloudHealth (which is not open source) cover similar ground across providers

- Serverless deployment: OpenFaaS and Knative provide serverless functions for scalable, portable AI deployments

- CI/CD pipelines: Jenkins and GitLab automate AI development and deployment life cycles across clouds
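
To illustrate how container orchestration keeps deployments consistent across providers, here is a minimal sketch that builds the same Kubernetes `apps/v1` Deployment for two clusters. The context names in `CLUSTERS`, the application name, the image URL, and the helper functions are all hypothetical; only the manifest fields and the `kubectl --context ... apply -f -` invocation shown in the comment are standard Kubernetes usage.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a Kubernetes apps/v1 Deployment as a plain dict."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical kubeconfig context names mapped to replica counts, one per cloud.
CLUSTERS = {"cloud-a-prod": 3, "cloud-b-prod": 2}


def manifests_per_cluster(name, image, clusters):
    """Return one manifest per cluster context, differing only in replicas."""
    return {
        context: deployment_manifest(name, image, replicas)
        for context, replicas in clusters.items()
    }


if __name__ == "__main__":
    manifests = manifests_per_cluster(
        "sentiment-model",                           # hypothetical app name
        "registry.example.com/sentiment-model:1.0",  # hypothetical image
        CLUSTERS,
    )
    for context, manifest in manifests.items():
        # kubectl accepts JSON as well as YAML manifests.
        print(f"# apply with: kubectl --context {context} apply -f -")
        print(json.dumps(manifest, indent=2))
```

Because the manifest is identical apart from the replica count, the same model image runs on either provider's managed Kubernetes service without provider-specific deployment logic. An IaC tool or CI/CD pipeline would typically generate and apply such manifests rather than a hand-run script.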

Best practices for open source multi-cloud AI success

- Adopt a cloud-native approach: Design cloud-agnostic AI applications for portability and flexibility

- Standardise on open APIs: Facilitate communication and integration between AI models and services across clouds

- Choose tools based on maturity and adoption: Select well-established, actively maintained tools

- Build a strong community: Actively engage with open source communities for support and collaboration

- Prioritise security and governance: Implement robust security policies and compliance measures across clouds

- Plan for automation: Automate deployments, scaling, and operations for efficiency and reliability
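
The first practice, designing cloud-agnostic applications, usually comes down to coding against a provider-neutral interface. The sketch below is one possible shape for that idea, under stated assumptions: `ModelStore`, `InMemoryStore`, and `replicate` are hypothetical names, and the in-memory backend stands in for a real adapter that would wrap a provider's object-storage SDK.

```python
from abc import ABC, abstractmethod


class ModelStore(ABC):
    """Provider-neutral interface that application code depends on."""

    @abstractmethod
    def save(self, key: str, data: bytes) -> None:
        """Store an artefact under the given key."""

    @abstractmethod
    def load(self, key: str) -> bytes:
        """Return the artefact stored under the given key."""


class InMemoryStore(ModelStore):
    """Stand-in backend; a real one would wrap a provider's storage SDK."""

    def __init__(self, provider: str):
        self.provider = provider
        self._blobs = {}

    def save(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def load(self, key: str) -> bytes:
        return self._blobs[key]


def replicate(key: str, source: ModelStore, targets: list) -> None:
    """Copy one artefact from the source store to every target store."""
    data = source.load(key)
    for target in targets:
        target.save(key, data)


if __name__ == "__main__":
    cloud_a = InMemoryStore("cloud-a")  # hypothetical provider labels
    cloud_b = InMemoryStore("cloud-b")
    cloud_a.save("model-v1", b"weights")
    replicate("model-v1", cloud_a, [cloud_b])
    print(cloud_b.load("model-v1"))
```

The application only ever sees `ModelStore`, so moving a workload to another provider means writing one new adapter class rather than changing the training or inference code.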

In a nutshell, organisations adopt multi-cloud architecture with distributed workloads for several reasons, including reducing vendor lock-in. They do so because benefits such as improved reliability, scalability, and cost optimisation outweigh the added complexity. Distributing workloads, especially AI workloads, across cloud platforms also lets organisations use each cloud service provider’s native services where they are strongest, for optimised performance.

Figure 2 shows the positive effects of a well-designed multi-cloud architecture that distributes workloads across cloud service providers using open source tools. This helps unleash the true power of AI at a lower cost than proprietary platforms.

Managing multi-cloud deployments for AI unlocks tremendous potential. With the right open source tools and best practices, you can navigate the complexity, avoid vendor lock-in, and achieve true agility and cost-effectiveness. Embrace the multi-cloud multiverse with open source tools and empower your AI journey!