This article deals with setting up LVS (Linux Virtual Server) for clustering on SUSE. Also discover how it acts as a scalable and high-performance delivery platform — just like an ideal load balancer.

Load balancing is a frequently used term in today’s software industry. Any organisation that deals with Web services of any kind has to use load balancing to handle its Web traffic. Let me explain load balancing using the example of the biggest Web presence, Google. Let’s look at the most basic Google query.

Every second, across the globe, around a million queries are made to Google’s search engine. To process these queries in milliseconds, you need an architecture that seldom fails, and scales and balances load very proficiently. Google has around a million servers around the globe. In this complicated architecture, there lies a configuration of load balancers of different platforms, out of which Linux load balancers are an increasing percentage, as they are better performers than the others.

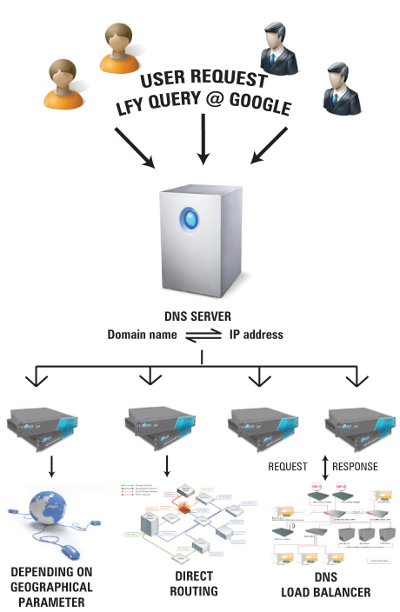

When you enter a Google query, such as “LFY”, your browser first performs a domain name system (DNS) lookup to map www.google.com to a particular IP address. Load balancing occurs over clusters, and a single cluster consists of a thousand machines. A DNS-based load-balancing system selects a cluster, by accounting for your geographic proximity to each physical cluster. The load-balancing system minimises the query processing time for your request, while also considering the available capacity at the various clusters.
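The selection step described above can be sketched in a few lines. This is only an illustration, not Google's actual algorithm: the `Cluster` class, the flat map coordinates and the capacity numbers are all hypothetical, and the rule used here (nearest cluster that still has spare capacity) is an assumption made for the example.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Cluster:
    name: str
    x: float        # hypothetical map coordinates, not real geography
    y: float
    capacity: int   # requests the cluster can still accept (made-up figure)


def pick_cluster(clusters, client_x, client_y):
    """Return the nearest cluster that still has spare capacity."""
    candidates = [c for c in clusters if c.capacity > 0]
    if not candidates:
        raise RuntimeError("no cluster has spare capacity")
    return min(candidates, key=lambda c: hypot(c.x - client_x, c.y - client_y))


clusters = [
    Cluster("europe", 0.0, 0.0, capacity=0),      # closest to the client, but full
    Cluster("asia", 5.0, 0.0, capacity=120),
    Cluster("america", -8.0, 0.0, capacity=300),
]
chosen = pick_cluster(clusters, client_x=1.0, client_y=0.0)
print(chosen.name)  # asia
```

The full cluster is skipped even though it is the closest one, which mirrors the trade-off between proximity and available capacity.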

A hardware-based load balancer in each cluster monitors the available set of Google Web servers, and performs local load balancing of requests across a set of them. After receiving a query, a Web server machine coordinates the query execution, and formats the results into an HTML response to your browser. This process is depicted in Figure 1.

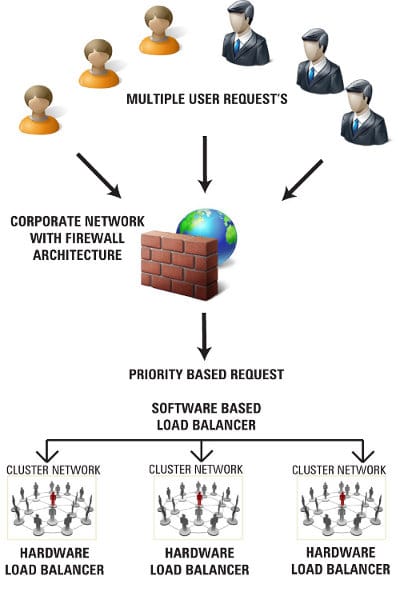

Load balancing can be categorised into three de-facto types:

- Load balancing through NAT: This can become a bottleneck with a large number of real servers, because both requests and responses have to be routed through the same load balancer.

- Load balancing through IP tunnelling: The load balancer just schedules requests to different real servers, and the real servers return replies directly to users. Thus, the load balancer can handle a huge number of requests without becoming a bottleneck in the system.

- Load balancing through direct routing: This is similar to IP tunnelling, in that real servers reply on their own, but the difference is that packets are routed directly and do not incur tunnelling overhead.
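To see why NAT is the mode most likely to become a bottleneck, it helps to count the bytes that cross the load balancer for a single request. The sketch below is a simplified model under stated assumptions: the function name `director_bytes` and the byte counts are invented for illustration, and the small encapsulation overhead of IP tunnelling is ignored.

```python
def director_bytes(mode, request_bytes, response_bytes):
    """Bytes that pass through the load balancer for one request/response pair."""
    if mode == "nat":
        # Requests and responses both travel through the load balancer.
        return request_bytes + response_bytes
    if mode in ("tunnel", "direct"):
        # Only the request passes through; the real server replies to the user itself.
        return request_bytes
    raise ValueError(f"unknown forwarding mode: {mode}")


# Hypothetical sizes: a small HTTP request and a much larger HTML response.
for mode in ("nat", "tunnel", "direct"):
    print(mode, director_bytes(mode, request_bytes=500, response_bytes=20_000))
```

Because Web responses are usually much larger than requests, taking the response path off the load balancer removes most of its traffic.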

There are numerous open source load balancers in the industry, and they keep getting better as contributors and the community maintain them. They stand out for their extra features, multi-platform support, low or no licence costs, and their integration with other open source-based load balancers. LVS (Linux Virtual Server) and Ultra Monkey, for example, exhibit these characteristics.

Some of the open source load-balancing solutions available in the market are given below (I have tried to prioritise the list):

- Linux Virtual Server Project

- Ultra Monkey

- Balance

- BalanceNG

- Red Hat Cluster Suite

- The High Availability Linux Project

Now, I will explain how to configure LVS for clustering on SUSE, and how LVS acts as a scalable and high-performance delivery platform.

LVS for clustering on SUSE

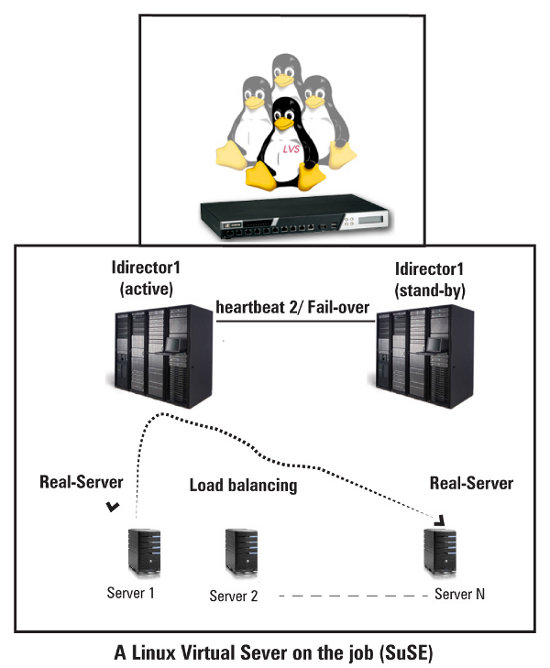

Figure 3 depicts the intended logical layout of our load-balancer setup.

LVS, like an ideal load balancer, acts as the gateway to the real servers. There are both hardware-based and software load-balancing configurations available for LVS. LVS load balances incoming requests, and distributes them across the real servers using one of several scheduling methods. It supports all three types of load balancing: NAT, IP tunnelling and direct routing.
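One such scheduling method is weighted least-connection, which the configuration later in this article selects with scheduler=wlc. The sketch below shows the idea only: it picks the server with the fewest active connections per unit of weight. It is a simplification (the kernel scheduler also takes inactive connections into account), and the function name and connection counts are assumptions made for the example.

```python
def pick_wlc(servers):
    """servers maps a name to (active_connections, weight); return the chosen name."""
    # A weight of 0 means the server must not receive new connections.
    eligible = {name: cw for name, cw in servers.items() if cw[1] > 0}
    if not eligible:
        raise RuntimeError("no real server with a positive weight")
    # Fewest connections per unit of weight wins.
    return min(eligible, key=lambda name: eligible[name][0] / eligible[name][1])


servers = {
    "192.168.0.110": (10, 1),   # 10 connections, weight 1 -> ratio 10.0
    "192.168.0.120": (15, 2),   # 15 connections, weight 2 -> ratio 7.5
}
print(pick_wlc(servers))  # 192.168.0.120
```

The second server wins despite having more connections, because its higher weight says it can carry twice the load.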

A load balancer is the single point of entry for all requests, so it is really important to have a backup or fail-over ready. In this example, Heartbeat2 is used as a fail-over monitor for the load balancers. Ldirectord monitors the health of the real servers by periodically requesting a known URL, and checking that the response contains an expected string. If a service fails on a server, then the server is taken out of the pool of real servers, and can be set aside for maintenance.

In this way, LVS maintains two important parameters during load balancing requests: availability and scalability. Heartbeat2 is a program that sits across load balancers, and makes sure that there is an active-standby configuration in a healthy state, so in case of a failure, the other load balancer can take over the work of the failed unit.

The ldirectord daemon takes care of scalability, by making sure that all cluster machines are active, and in a healthy state, ready for processing.

High availability behaviour

- Node-level monitoring: If one of the nodes (ldirector1/ldirector2) running cluster resources stops sending out heartbeat signals, declare it dead, reboot the node and fail over all resources to a different node.

- Service-level monitoring: If the VIP or ldirectord service fails, try to restart the service. If this fails, reboot the node, and fail over all resources to a different node.

- Service “stickiness”: If a dead or stand-by node becomes active again, keep the resources where they run now, and don’t fail back.
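The fail-over and stickiness rules above can be expressed as a small placement function. This is a sketch of the policy only, not of how Heartbeat2 is implemented; the function name `place_resources` and the dictionary of node states are hypothetical.

```python
def place_resources(current_owner, alive):
    """Return the node that should run the resources.

    current_owner: node running them now (or None)
    alive: dict mapping node name to True (healthy) or False (dead)
    """
    if current_owner is not None and alive.get(current_owner):
        # Stickiness: a healthy owner keeps the resources, even if another node returns.
        return current_owner
    for node, is_alive in alive.items():
        if is_alive:
            return node   # fail over to the first healthy node
    return None           # no node is available


owner = "ldirector1"
# ldirector1 stops sending heartbeats: resources move to ldirector2.
owner = place_resources(owner, {"ldirector1": False, "ldirector2": True})
print(owner)  # ldirector2
# ldirector1 comes back: resources stay where they are (no fail-back).
owner = place_resources(owner, {"ldirector1": True, "ldirector2": True})
print(owner)  # ldirector2
```

Avoiding the fail-back saves clients a second interruption when the repaired node rejoins the cluster.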

Heartbeat2

Heartbeat2 runs on both load-balancer machines, and also monitors the ldirectord daemon.

The main high-availability configuration file (/etc/ha.d/ha.cf) contains the following (in our scenario):

crm on

udpport 694

bcast eth0

node ldirector1 ldirector2

/etc/ha.d/authkeys contains…

auth 1

1 sha1 YourSecretKey

This file must not be readable or writeable by anyone other than the root user:

chmod 600 /etc/ha.d/authkeys
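Whether the permissions are strict enough can be checked with a short script. The sketch below only tests that group and others have no permission bits set; it does not check that root owns the file. The function name `is_private` is made up for this example, and the demonstration uses a temporary file rather than the real /etc/ha.d/authkeys.

```python
import os
import stat
import tempfile


def is_private(path):
    """True if only the file's owner has any permission bits set."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & 0o077 == 0   # no bits for group or others


# Demonstration on a throwaway file instead of the real authkeys file.
with tempfile.NamedTemporaryFile(delete=False) as handle:
    demo_path = handle.name
os.chmod(demo_path, 0o600)
print(is_private(demo_path))  # True
os.chmod(demo_path, 0o644)
print(is_private(demo_path))  # False
os.remove(demo_path)
```

On a director, the same function could be called with "/etc/ha.d/authkeys" after running the chmod command above.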

Name resolution

Add node names to /etc/hosts on both linux-directors:

192.168.0.10 ldirector1

192.168.0.20 ldirector2

To start the cluster, run the following command:

/etc/init.d/heartbeat start

Node: ldirector2 (f8f2ad4a-a05d-416a-92a9-66b759768fb9): online

Node: ldirector1 (5792135e-ed53-438b-8a71-85f0285464c2): online

On the linux-directors, set the password for the user hacluster:

/usr/bin/passwd hacluster

Configuration: linux-director (load balancer)

It is recommended that you disable SuSEfirewall2 for the configuration, to avoid networking issues.

rcSuSEfirewall2 stop

chkconfig SuSEfirewall2_init off

chkconfig SuSEfirewall2_setup off

The linux-directors must be able to route traffic to the real servers. This is achieved by enabling the kernel IPv4 packet forwarding system, as follows:

# /etc/sysctl.conf

net.ipv4.ip_forward = 1
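A setting in /etc/sysctl.conf takes effect at boot, or when the file is reloaded with sysctl -p. As a quick sanity check of the file itself, the sketch below looks up a key in sysctl.conf-style text. The function name `sysctl_value` is hypothetical, and the parser handles only plain key = value lines and comments.

```python
def sysctl_value(text, key):
    """Return the last value assigned to key in sysctl.conf-style text, or None."""
    value = None
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue   # skip blank lines and comments
        name, sep, raw = line.partition("=")
        if sep and name.strip() == key:
            value = raw.strip()   # later assignments override earlier ones
    return value


sample = """# /etc/sysctl.conf
net.ipv4.ip_forward = 1
"""
print(sysctl_value(sample, "net.ipv4.ip_forward"))  # 1
```

On a running Linux system, the active value can also be read from /proc/sys/net/ipv4/ip_forward.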

Ldirectord

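Create the file /etc/ha.d/ldirectord.cf and add the following statements. In the actual file, the lines that follow virtual= belong to that virtual service and must be indented: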

checktimeout=3

checkinterval=5

autoreload=yes

logfile="/var/log/ldirectord.log"

quiescent=yes

virtual=192.168.0.200:80

fallback=127.0.0.1:80

real=192.168.0.110:80 gate

real=192.168.0.120:80 gate

service=http

request="test.html"

receive="Still alive"

scheduler=wlc

protocol=tcp

checktype=negotiate

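ldirectord will connect to each real server every five seconds (checkinterval) and request the test page, for example 192.168.0.110:80/test.html. If the response does not contain the string "Still alive" within three seconds (checktimeout), the server is taken out of service; with quiescent=yes, it stays in the table but its weight is set to 0, so it receives no new connections. It will be added again, once the check succeeds.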
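The negotiate check can be pictured as follows. This is a sketch of the logic, not ldirectord's own code: the function names are invented, the weights are plain dictionary entries rather than kernel LVS table entries, and a fake fetch function stands in for real HTTP requests so that the example runs without a network.

```python
def check_real_server(fetch, url, expected):
    """Return True if the page fetched from url contains the expected string."""
    try:
        body = fetch(url)
    except OSError:
        # Connection refused, timeouts and similar errors count as a failed check.
        return False
    return expected in body


def update_pool(weights, server, healthy, normal_weight=1):
    """Mimic quiescent=yes: keep the server listed, but give it weight 0 when down."""
    weights[server] = normal_weight if healthy else 0
    return weights


def fake_fetch(url):
    # Stand-in for an HTTP client: only the first real server answers.
    pages = {"http://192.168.0.110:80/test.html": "Still alive"}
    if url not in pages:
        raise OSError("connection refused")
    return pages[url]


weights = {}
for server in ("192.168.0.110:80", "192.168.0.120:80"):
    ok = check_real_server(fake_fetch, f"http://{server}/test.html", "Still alive")
    update_pool(weights, server, ok)
print(weights)  # {'192.168.0.110:80': 1, '192.168.0.120:80': 0}
```

A real fetch function could wrap urllib.request.urlopen with a timeout equal to checktimeout; its connection errors are subclasses of OSError, so the same check would apply.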

Test

Start ldirectord (the real server table can then be checked with ipvsadm -L -n):

/etc/init.d/ldirectord start

Starting ldirectord... success

Disable the ldirectord service

Make sure ldirectord is not running, and won’t start on boot. Only Heartbeat2 will be allowed to start and stop the service.

/etc/init.d/ldirectord stop

/sbin/chkconfig ldirectord off

Although Linux Virtual Server and other Linux load balancers are feature-rich and already deliver scalability and availability, new features are being added all the time, and they can run on the same machine where load balancing is done. The idea behind adding these features is to provide a single solution for the Web issues that an organisation encounters, and to do so at minimal cost.

Some of the extra features that are, or can be, added to a Linux load balancer (or, say, an ideal load balancer) are:

- SSL accelerator: As we know, SSL traffic takes a considerable time to process, so an accelerator can be included.

- Asymmetric load balancing: This can be configured in case one server is to be assigned more load than the others.

- Authentication: Users can be authenticated through a variety of authentication sources, before allowing them to go through.