This article examines the rise of Network Functions Virtualisation (NFV), which applies cloud technologies to the service provider network. It provides an overview of the ETSI reference architectural framework for NFV and concludes with the role that open source projects play in this emerging area.

The service provider's dilemma

The demand for networks is increasing. Propelled by smartphones and social networking applications, people now produce and consume more pictures and videos every day, resulting in an exponentially growing volume of traffic. With increasing network penetration, more and more people and devices access the Internet every day. It is estimated that approximately 9 billion devices are already connected to the Internet. While this may seem huge, technologists predict an even more rapid increase in Internet-connected devices (to around 50 billion by 2020, according to some reports), a trend now called the Internet of Things (IoT).

While this appears to put network (Internet) service providers in a sweet spot, there is a problem. Revenues do not grow as fast as traffic, since more bandwidth and downloads must be provided at ever lower prices. Handling the huge increase in scale (number of devices, number of flows) and throughput requires large capacity additions: providers need to buy more networking equipment, wire it together, and manage and operate it. Today's network design does not make this easy.

This results in a dilemma. Not catering to the rising network demand would mean losing market share, customer mind share and a leadership position; but catering to it would require huge investments in capacity, particularly for peak loads, along with additional management and operational complexity and cost.

Networks today

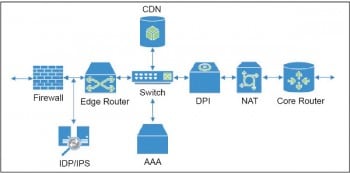

To the layman, the service provider network is an interconnection of routers and switches. In reality, a service provider network uses diverse networking equipment. In addition to routers and switches, there are traffic load balancers, CG-NAT devices, content caching/serving devices (CDN), VPN boxes, a variety of security equipment ranging from firewalls to intrusion detection and prevention devices to more complex threat management equipment, and many more. Figure 1 provides a conceptual illustration of the diversity of equipment and their interconnections.

The service provider needs to buy best-of-breed equipment from a variety of device vendors. Typically, each device is configured manually via a command line interface (CLI) by network experts trained for that particular category of device. The way the devices are interconnected is static, which limits the flexibility of the services that can be offered. Adding new devices or services into the mix is a daunting task.

Factors behind the rise of NFV

Server virtualisation: A little more than a decade ago, the server world underwent a transformation. It began when virtualisation technologies matured with support from CPU vendors. Instead of VMs (virtual machines) running on CPU emulators such as Bochs (http://bochs.sourceforge.net/), CPUs started to provide ways to run a VM's instructions natively. This brought the performance of applications running in VMs close to their performance when running natively, which enabled enterprises to consider server virtualisation as a serious option.

The migration from running applications on physical servers to running them within virtual machines enabled enterprises to make better use of their physical servers. By running services within VMs, orchestrating VM creation and deletion, and steering traffic to the new VM instances, enterprises gained elasticity, i.e., the ability to deal with fluctuating demand in an optimal manner. Services seeing higher loads were given more VMs, and services with low load were given fewer.
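The elasticity rule just described (more VMs for busier services, fewer for quiet ones) can be sketched as a simple sizing function. This is a minimal illustration, assuming each VM handles a fixed amount of load and each service keeps between a minimum and a maximum number of instances; the function name, capacity figure and loads are hypothetical. A production policy would typically add hysteresis or cooldown timers so that instances are not created and destroyed on every small fluctuation.

```python
import math


def vms_needed(load: float, capacity_per_vm: float, min_vms: int = 1, max_vms: int = 16) -> int:
    """Return how many VM instances a service should run for the given load.

    load and capacity_per_vm use the same (hypothetical) unit, e.g. requests/s.
    The result is clamped to [min_vms, max_vms].
    """
    wanted = math.ceil(load / capacity_per_vm)
    return max(min_vms, min(max_vms, wanted))


# Hypothetical per-service loads (requests/s) sampled at one point in time.
loads = {"web": 4200.0, "mail": 300.0, "batch": 0.0}
plan = {svc: vms_needed(load, capacity_per_vm=1000.0) for svc, load in loads.items()}
print(plan)  # {'web': 5, 'mail': 1, 'batch': 1}
```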

Service providers face a similar problem: the load on a network is not the same at all times. Hence, they began to seriously consider leveraging virtualisation technologies to solve their network problems. They realised that if network services such as NAT or IDS/IPS could be run in VMs on server platforms, they could save on hardware costs and reap the same benefits as enterprises.

I/O virtualisation: Once CPUs could run a VM's instructions at near-native speed, CPU vendors started adding the ability to virtualise I/O. Until then, I/O performance was constrained by the capacity of the hypervisor or the host OS, so virtualisation was suited only to compute-heavy workloads. Networking applications, however, are I/O heavy.

I/O virtualisation technologies such as SR-IOV, together with the ability to DMA (direct memory access) from physical devices directly into a virtual machine's address space, provide a way for VMs to access the physical Ethernet ports directly rather than via the hypervisor's device emulation. This enables VM-based applications to utilise the full bandwidth of the network ports.

Software-defined networking (SDN): SDN is an emerging trend to make networks programmable. SDN gives applications both visibility into and control over how traffic flows in the network. To that end, applications are provided with the required abstractions of the network, information about the traffic flowing through it, and APIs to instruct how traffic must flow.

To provide a network-level view, SDN separates the data plane from the control plane, moving the control plane out of the network equipment and centralising it. For interoperability and vendor independence, the data plane and control plane communicate via a standards-based protocol, the most popular being OpenFlow. OpenFlow abstracts the data plane as a set of match-action rules: an N-tuple of header fields from the Ethernet frame is looked up, and the action defined by the matching rule is performed.
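The match-action abstraction can be illustrated with a toy flow table. This is a minimal sketch, not the OpenFlow wire format: packets are plain dictionaries of header fields, a rule matches when every field it names has the required value (fields it omits are wildcards), and the highest-priority matching rule wins. The field names and action strings such as "output:2" are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FlowRule:
    priority: int
    match: dict  # header field -> required value; absent fields are wildcards
    action: str  # illustrative action string, e.g. "output:2" or "drop"


@dataclass
class FlowTable:
    rules: List[FlowRule] = field(default_factory=list)

    def add(self, rule: FlowRule) -> None:
        self.rules.append(rule)
        # Keep the highest-priority rules first so lookup returns the best match.
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: dict) -> Optional[str]:
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return None  # table miss: no rule matched


table = FlowTable()
table.add(FlowRule(priority=10, match={"ip_dst": "10.0.0.5", "tcp_dst": 80}, action="output:2"))
table.add(FlowRule(priority=1, match={}, action="output:controller"))  # catch-all rule

pkt = {"eth_type": 0x0800, "ip_src": "192.0.2.7", "ip_dst": "10.0.0.5", "tcp_dst": 80}
print(table.lookup(pkt))                      # output:2
print(table.lookup({**pkt, "tcp_dst": 443}))  # output:controller
```

The low-priority catch-all that sends unmatched packets to the controller mirrors a common SDN pattern: the controller sees the first packet of an unknown flow and can then install a more specific rule.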

In a traditional network, each device maintains its own view of the network and exchanges messages with its peers via routing protocols, so that all devices converge on the same view. Configuring the control plane protocols requires special knowledge and training. When new network services are to be introduced, the control plane configuration needs to be tweaked and tested to ensure that there is no unintended impact on the network. This requires planning, time and effort, and hence adds cost for the service provider.

In SDN, the controller takes the role of the control plane. The job of the SDN controller is to obtain information from the SDN data plane elements, build a topology, and translate the information from the network into an abstract form that can be provided to applications. Similarly, instructions received from the applications are translated into actions for each piece of equipment in the SDN network.
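The controller's job of turning a topology plus an application request into per-device actions can be sketched as follows. This assumes the controller has already discovered the topology (which switch port leads to which neighbour) and where each host attaches; the switch names, port numbers and rule format are hypothetical. The path is chosen by breadth-first search, i.e. fewest hops, which is only one of many possible path-selection policies.

```python
from collections import deque

# Hypothetical topology learned by the controller: switch -> {neighbour: local port}.
topology = {
    "s1": {"s2": 1, "s3": 2},
    "s2": {"s1": 1, "s4": 2},
    "s3": {"s1": 1, "s4": 2},
    "s4": {"s2": 1, "s3": 2},
}
# Hypothetical host attachment points: host -> (switch, port).
hosts = {"h1": ("s1", 3), "h2": ("s4", 3)}


def shortest_path(topo, src, dst):
    """Breadth-first search over switches; returns the switches on a fewest-hop path."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nbr in topo[node]:
            if nbr not in prev:
                prev[nbr] = node
                queue.append(nbr)
    if dst not in prev:
        return None
    path, node = [], dst
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]


def compile_rules(topo, hosts, src_host, dst_host):
    """Translate 'deliver traffic for dst_host' into a (match, output port) rule per switch."""
    src_sw, _ = hosts[src_host]
    dst_sw, dst_port = hosts[dst_host]
    path = shortest_path(topo, src_sw, dst_sw)
    if path is None:
        raise ValueError(f"no path from {src_host} to {dst_host}")
    rules = {}
    for i, sw in enumerate(path):
        # The last switch outputs to the host's port; others forward to the next hop.
        out_port = dst_port if sw == dst_sw else topo[sw][path[i + 1]]
        rules[sw] = {"match": {"dst": dst_host}, "output": out_port}
    return rules


for sw, rule in compile_rules(topology, hosts, "h1", "h2").items():
    print(sw, rule)
```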

In cloud deployments, elasticity (the ability to create or remove VMs based on load) is a key requirement for operational efficiency. This requires that traffic from the data centre gateway be steered to a new VM instance when a VM is created, or to other VM instances when a VM is removed. SDN enables rapid traffic steering by allowing the SDN controller to modify the actions that SDN data plane elements take for individual traffic flows.
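Per-flow steering during scale-out and scale-in can be sketched as a table that maps each flow to a VM instance. This is a minimal illustration with a hypothetical `FlowSteering` class standing in for the rules a controller would install at the gateway. It assumes that an existing flow should stay on its VM (because connection state lives there) when a VM is added, and that flows pinned to a removed VM are re-steered to a surviving instance.

```python
import zlib


class FlowSteering:
    """Hypothetical gateway steering table that an SDN controller keeps up to date."""

    def __init__(self, vms):
        self.vms = list(vms)
        self.flows = {}  # flow key (e.g. a 5-tuple) -> VM instance serving it

    def _pick(self, flow):
        if not self.vms:
            raise RuntimeError("no VM instances available")
        # crc32 is deterministic across runs, unlike Python's salted str hash.
        return self.vms[zlib.crc32(repr(flow).encode()) % len(self.vms)]

    def route(self, flow):
        """Return the VM for a flow, installing a new rule on its first packet."""
        if flow not in self.flows:
            self.flows[flow] = self._pick(flow)
        return self.flows[flow]

    def add_vm(self, vm):
        # Scale-out: existing flows stay pinned; only new flows can land on vm.
        self.vms.append(vm)

    def remove_vm(self, vm):
        # Scale-in: re-steer every flow that was pinned to the removed instance.
        self.vms.remove(vm)
        for flow, target in list(self.flows.items()):
            if target == vm:
                self.flows[flow] = self._pick(flow)


steer = FlowSteering(["vm1", "vm2"])
flow = ("192.0.2.7", "10.0.0.5", "tcp", 40000, 80)
first = steer.route(flow)
steer.add_vm("vm3")      # the flow keeps its original VM
steer.remove_vm(first)   # the flow is moved to a surviving VM
print(first, "->", steer.route(flow))
```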

The need for rich services

With the cost of bandwidth falling, service providers aim to improve revenues by offering a rich set of value-added services. In current networking equipment, services are typically handled in a separate service plane, housed in the same chassis as the data and control plane components. As line-card capacity increases, the service plane becomes more and more overwhelmed. To remedy this, services are provided via dedicated high-performance equipment, as shown in Figure 1. These service devices are chained together to provide a set of services, which is called service chaining. Today, service chaining is static in nature and depends on how the devices are physically connected.

Once services are moved out of the chassis, it also becomes possible to run the same services as virtual instances on server platforms. With SDN, service chains can be created dynamically, which gives service providers a great deal of flexibility and operational convenience. Service chaining is thus a primary driver for service providers to consider NFV, while retaining the investments they have already made in high-capacity routers and switches.
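A dynamic service chain can be pictured as an ordered list of VNFs that each packet passes through, where the list is data rather than cabling, so a controller can change it per subscriber or per service. The sketch below models each VNF as a Python function that returns the (possibly modified) packet or `None` to drop it; the firewall rule, NAT address and chain names are hypothetical.

```python
from typing import Callable, Dict, List, Optional

Packet = Dict[str, object]
VNF = Callable[[Packet], Optional[Packet]]  # a VNF returns None to drop the packet


def firewall(pkt: Packet) -> Optional[Packet]:
    # Hypothetical policy: block telnet (TCP port 23).
    return None if pkt.get("tcp_dst") == 23 else pkt


def nat(pkt: Packet) -> Optional[Packet]:
    # Hypothetical source NAT of private 10.x addresses to one public address.
    if str(pkt.get("ip_src", "")).startswith("10."):
        return {**pkt, "ip_src": "203.0.113.1"}
    return pkt


def run_chain(chain: List[VNF], pkt: Packet) -> Optional[Packet]:
    """Pass a packet through each VNF in order, stopping if one drops it."""
    for vnf in chain:
        result = vnf(pkt)
        if result is None:
            return None
        pkt = result
    return pkt


# The chain is just data: a controller can change it without rewiring anything.
chains: Dict[str, List[VNF]] = {
    "residential": [firewall, nat],
    "business": [nat],
}

pkt = {"ip_src": "10.1.2.3", "ip_dst": "198.51.100.9", "tcp_dst": 23}
print(run_chain(chains["residential"], pkt))  # None (dropped by the firewall)
print(run_chain(chains["business"], pkt))     # ip_src rewritten to 203.0.113.1
```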

Other factors

Service providers are looking for standards-based solutions so that they can choose best-of-breed equipment and avoid vendor lock-in. As the network becomes software-centric rather than hardware-centric, they are considering open source solutions as well.

The emergence of Ethernet, once considered a LAN technology, in the WAN arena also helps. Ethernet speeds have been increasing with constant innovation in the physical (optical) layers, and servers with 40G and even 100G ports are now available. This means that servers running virtualised network appliances no longer need other equipment to terminate protocols such as SONET and ATM. They can terminate network traffic directly and provide the service.

ETSI architecture for NFV

In 2012, the European Telecommunications Standards Institute (ETSI) created an industry standards group (ISG), supported by some service providers, to define requirements, use-cases, architectural references and specifications in the area of NFV.

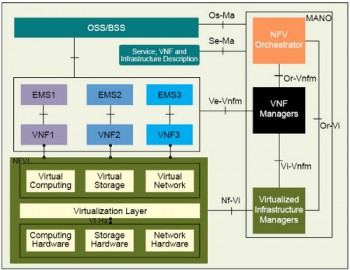

Since then, ETSI has published various documents, defining a reference architectural model, shown in Figure 2, and various recommendations to enable NFV adoption and interoperability.

The NFVI block shown at the bottom of Figure 2 comprises the hardware, i.e., physical servers, storage devices and the physical network (networking hardware and interconnects). Above the hardware lies the hypervisor (virtualisation) layer, which provides virtualised compute, storage and networks for running applications. Overlay technologies such as VXLAN and NVGRE, which tunnel Layer 2 frames across the physical IP network, help create a virtual network overlay on top of the physical network.
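As an example of how such an overlay works, VXLAN (RFC 7348) carries each tenant Ethernet frame inside a UDP datagram (destination port 4789) prefixed by an 8-byte VXLAN header. That header holds a flags byte with the "I" bit (0x08) set and a 24-bit VXLAN Network Identifier (VNI) that keeps different virtual networks apart. The sketch below builds and parses only that 8-byte header with Python's `struct` module; the outer Ethernet, IP and UDP headers are assumed to be added by the sending host's network stack and are not shown.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned destination port for VXLAN
FLAG_VNI_VALID = 0x08   # the "I" flag: the VNI field is valid


def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with an 8-byte VXLAN header."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits; word 2: VNI (24 bits) + 8 reserved bits.
    header = struct.pack("!II", FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes):
    """Return (vni, inner_frame) from a VXLAN UDP payload."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & FLAG_VNI_VALID:
        raise ValueError("I flag not set; not a valid VXLAN header")
    return vni_word >> 8, payload[8:]


frame = b"\xaa" * 14                  # placeholder inner Ethernet header
packet = vxlan_encap(5001, frame)
print(packet[:8].hex())               # 0800000000138900
print(vxlan_decap(packet)[0])         # 5001
```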

The virtual network functions (VNFs), managed by their respective element management systems (EMS), run above this virtualised infrastructure. The VNFs provide the functionality that is currently provided via physical network hardware.

The NFV management and orchestration layer (MANO) comprises the NFV orchestrator, the VNF manager and the virtualised infrastructure manager (VIM). The orchestrator works with the OSS/BSS systems to determine the load and the network services for which VNFs need to be created or deleted. It works with the VNF manager to create and remove VNFs, and with the virtualised infrastructure manager to assign compute, storage and network resources to the VNFs. It also steers traffic to the VNFs and creates service chains by connecting multiple service VNFs.
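The division of labour inside MANO can be sketched with three small classes. Everything here is hypothetical and greatly simplified: the real interfaces between the orchestrator, VNF manager and VIM are defined in ETSI specifications, not by these method names. The sketch only shows the flow of responsibility: the orchestrator asks the VIM for resources, asks the VNF manager to instantiate each VNF, records the resulting ordered list as the service chain, and rolls everything back if the infrastructure runs out of capacity.

```python
import itertools


class VIM:
    """Hypothetical virtualised infrastructure manager owning a pool of vCPUs."""

    def __init__(self, vcpus: int):
        self.free_vcpus = vcpus

    def allocate(self, vcpus: int) -> bool:
        if vcpus > self.free_vcpus:
            return False
        self.free_vcpus -= vcpus
        return True

    def release(self, vcpus: int) -> None:
        self.free_vcpus += vcpus


class VNFManager:
    """Hypothetical VNF manager tracking the lifecycle of VNF instances."""

    def __init__(self):
        self.instances = []
        self._ids = itertools.count(1)

    def instantiate(self, vnf_type: str) -> str:
        name = f"{vnf_type}-{next(self._ids)}"
        self.instances.append(name)
        return name

    def terminate(self, name: str) -> None:
        self.instances.remove(name)


class Orchestrator:
    """Coordinates the VIM (resources) and VNF manager (lifecycle) for a network service."""

    def __init__(self, vim: VIM, vnfm: VNFManager):
        self.vim, self.vnfm = vim, vnfm
        self.chains = {}  # service name -> ordered VNF instances (the service chain)

    def deploy_service(self, service: str, vnf_types: list, vcpus_each: int = 2) -> list:
        deployed = []
        for vnf_type in vnf_types:
            if not self.vim.allocate(vcpus_each):
                # Roll back the partial deployment so no resources leak.
                for name in deployed:
                    self.vnfm.terminate(name)
                    self.vim.release(vcpus_each)
                raise RuntimeError(f"insufficient capacity for {service}")
            deployed.append(self.vnfm.instantiate(vnf_type))
        self.chains[service] = deployed
        return deployed


vim = VIM(vcpus=6)
orch = Orchestrator(vim, VNFManager())
print(orch.deploy_service("residential", ["firewall", "nat"]))  # ['firewall-1', 'nat-2']
```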

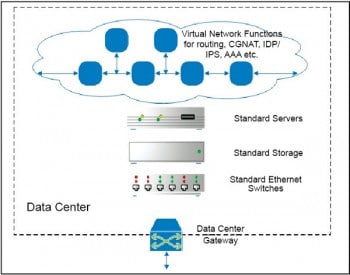

Figure 3 illustrates what a future service provider network based on cloud technologies would look like.

Open source projects in NFV

Open source projects are playing a key role in the evolution and adoption of cloud technologies in general, and NFV in particular. These projects are also well supported by the industry.

In this section, we look at some well-known open source projects relating to NFV. A brief description of each project is provided, along with where it fits in the ETSI NFV reference architecture.

There is a rich open source ecosystem covering various aspects of virtualisation, SDN, NFV and the cloud. Thus, this section is not and cannot be exhaustive. Nor is it intended to be an endorsement or otherwise of any specific project mentioned or omitted here.

QEMU/KVM (wiki.qemu.org, www.linux-kvm.org): KVM, together with QEMU, forms a basic hypervisor providing both processor and board virtualisation. KVM is integrated into the Linux kernel, so QEMU/KVM is widely available and increasingly used in many cloud deployments.

In the ETSI reference architecture, QEMU/KVM provides the virtual compute capability.

Data Plane Development Kit – DPDK (www.dpdk.org): The data plane deals with handling packets/frames and applying the required functionality. The performance of networking equipment depends on the performance of the data plane. To build a high performance data plane, network equipment vendors have been using custom ASICs or network processors (NPUs). The NPUs are specialised processors optimised for packet processing.

However, with advances in CPU architecture and the advent of multi-core x86 processors, it is possible to use DPDK to create reasonably high-performance data planes running on x86 processors. DPDK provides a set of libraries, drivers and template applications to help create a data plane application.

In the ETSI reference architecture, DPDK is a building block to create VNFs.

Open vSwitch (openvswitch.org): The Open vSwitch project provides an open source virtual Ethernet switch. A VNF can comprise multiple VMs interconnected by a private Ethernet network, as would be the case when a legacy chassis-based network application is virtualised.

Open vSwitch can also be used to connect multiple VNFs to a single virtual Ethernet switch that spans physical nodes, making it possible to place VNFs on different physical servers. In effect, it provides a virtual LAN overlay over the physical Ethernet network of a data centre.
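The basic forwarding behaviour that any Ethernet switch, physical or virtual, provides is MAC learning: remember which port each source address was seen on, forward to that port when the destination is known, and flood otherwise. The sketch below is not Open vSwitch code; it is a minimal model in which the hypothetical ports "vm1" and "vm2" stand for VMs' virtual interfaces and "vxlan0" for a tunnel port toward another physical node.

```python
class LearningSwitch:
    """Minimal MAC-learning Ethernet switch."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the set of ports the frame should be sent out of."""
        self.mac_table[src_mac] = in_port  # learn where the sender lives
        out = self.mac_table.get(dst_mac)
        if out is None:
            # Unknown destination (broadcast is never learned as a source): flood.
            return self.ports - {in_port}
        if out == in_port:
            return set()  # destination is behind the ingress port: filter
        return {out}


sw = LearningSwitch(["vm1", "vm2", "vxlan0"])
print(sw.receive("vm1", "aa:aa:aa:aa:aa:01", "bb:bb:bb:bb:bb:02"))  # floods to vm2 and vxlan0
print(sw.receive("vxlan0", "bb:bb:bb:bb:bb:02", "aa:aa:aa:aa:aa:01"))  # {'vm1'}
```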

In the ETSI reference architecture, Open vSwitch comes under the virtual network component. It can also be considered as a VNF providing switching functionality.

OpenDaylight (www.opendaylight.org): OpenDaylight is an open source SDN controller backed by some of the biggest names in the networking industry. It is also a Linux Foundation project. According to its website, OpenDaylight (ODL) is "a highly available, modular, extensible, scalable and multi-protocol controller infrastructure built for SDN deployments on modern heterogeneous multi-vendor networks." It provides a model-driven service abstraction platform that allows users to write apps that work across a wide variety of hardware and southbound protocols.

In the context of NFV, OpenDaylight can be used together with compatible Ethernet switches to create an SDN network for service chaining.

In the ETSI reference architecture, OpenDaylight primarily covers the virtualised infrastructure manager for the network functionality.

ONOS (onosproject.org): ONOS describes itself as the first open source SDN network operating system. ONOS is promoted by both service providers and networking vendors, and aims to provide a network operating system that caters to mission-critical needs such as high availability, scale and performance. On the southbound interface, it supports the OpenFlow protocol. On the northbound interface, it provides network abstractions and APIs through which applications can express their intent and leave ONOS to take care of the details, such as how to provision the various devices to implement that intent.

In the ETSI reference architecture, ONOS covers the virtualised infrastructure manager for network functionality.

OpenStack (www.openstack.org): Among the important open source projects in the area of NFV (and for cloud computing, in general) is OpenStack, which describes itself as a cloud operating system. OpenStack helps manage physical compute, storage and networking resources, and provides APIs to create, connect and manage virtual resources. It also provides an API to help create orchestration applications.

In the ETSI reference architecture, OpenStack provides the virtualised infrastructure manager along with basic MANO capabilities that can be used as building blocks to create cloud management applications.

OPNFV (www.opnfv.org): The Open Platform for NFV (OPNFV) is an initiative to create an integrated, carrier-grade and open platform for NFV. It is also a Linux Foundation project. The project aims to work with many upstream open source projects (such as OpenStack, OpenDaylight and Open vSwitch), and to integrate and test them for various use cases. What the industry gets is an integrated and tested platform that can serve as a base for NFV deployments, thereby enabling faster adoption.

OPNFV brings together various independent open source technologies, and provides a complete platform that covers the various architectural blocks shown in ETSI's reference architecture.
