This article is about the next-generation Docker clustering and distributed system, which comes with built-in features such as orchestration, self-healing, self-organisation, resilience and security.

Docker is an open platform designed to help both developers and systems administrators to build, ship and run distributed applications by using containers. Docker allows the developer to package an application with all the parts it needs, such as libraries and other dependencies, and to ship it all out as one package. This ensures that the application will run on any other Linux machine, regardless of any customised settings that the machine might have, which could differ from the machine used for writing and testing the code.

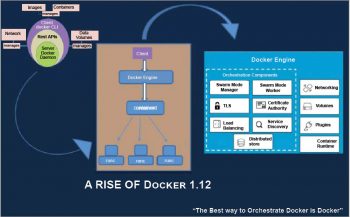

Docker Engine 1.12 can rightly be called the next-generation Docker clustering and distributed system. One of the major highlights of this release is Docker Swarm Mode, which provides a powerful yet optional ability to create coordinated groups of decentralised Docker engines. Swarm Mode combines your engines into swarms of any scale. It is self-organising and self-healing, and it enables an infrastructure-agnostic topology. The new version democratises orchestration with out-of-the-box capabilities for multi-container, multi-host app deployments, as shown in Figure 1.

Built as a uniform building block for a self-organising, self-healing group of engines, Swarm Mode makes orchestration accessible to every developer and operations user. It adopts a decentralised architecture rather than the centralised one (built around an external key-value store) seen in earlier Swarm releases. Swarm Mode uses the Raft consensus algorithm to perform leader election and to maintain the cluster’s state.
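Raft only makes progress while a majority of the participating engines can reach each other, so the number of engines taking part in consensus determines how many failures the cluster can survive. The arithmetic can be sketched in a few lines of Python. The function names are illustrative and are not part of any Docker API.

```python
def raft_quorum(members: int) -> int:
    """Smallest majority of a Raft group with the given number of members."""
    if members < 1:
        raise ValueError("a Raft group needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority still remains."""
    return members - raft_quorum(members)


# Print the quorum size and fault tolerance for a few group sizes.
for n in (1, 3, 4, 5, 7):
    print(f"members={n} quorum={raft_quorum(n)} tolerated={tolerated_failures(n)}")
```

An even-sized group adds no extra fault tolerance: four members tolerate one failure, exactly as three do.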

In Swarm Mode, all Docker engines unite into a cluster with a management tier. It is basically a master-slave system, but all the engines are united and together maintain the cluster state. Instead of running a single container, you declare a desired state for your application (which may mean multiple containers), and the engines themselves maintain that state.

Additionally, a new ‘docker service’ feature has been added in this release. The ‘docker service create’ command is expected to be an evolution of ‘docker run’, which is an imperative command that simply gets a container up and running. The new ‘docker service create’ command is declarative instead: you declare a service, which can run one or more containers, and the state you declare is kept by the engines in the distributed store based on the Raft consensus protocol. That brings in desired state reconciliation. Whenever a node in the cluster goes down, the swarm itself recognises that the actual state has deviated from the desired state, and it brings up new instances to restore it.
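Desired state reconciliation is easiest to see in a toy model. The sketch below is not Docker's implementation: the ToySwarm class, its fields and its least-loaded placement rule are assumptions made purely for illustration. It shows only the idea of comparing the declared replica count with what is actually running, and correcting the difference.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToySwarm:
    nodes: set                                   # names of healthy nodes
    desired: int                                 # declared number of replicas
    tasks: dict = field(default_factory=dict)    # task id -> node name
    _ids: count = field(default_factory=lambda: count(1), init=False, repr=False)

    def _load(self, node):
        # Number of tasks currently placed on the given node.
        return sum(1 for n in self.tasks.values() if n == node)

    def reconcile(self):
        # 1. Forget tasks that were running on nodes that no longer exist.
        self.tasks = {t: n for t, n in self.tasks.items() if n in self.nodes}
        # 2. Start replacement tasks on the least-loaded surviving node.
        while len(self.tasks) < self.desired and self.nodes:
            node = min(sorted(self.nodes), key=self._load)
            self.tasks[next(self._ids)] = node
        # 3. Stop surplus tasks if the desired count was lowered.
        while len(self.tasks) > self.desired:
            self.tasks.pop(max(self.tasks))

    def fail_node(self, node):
        # Simulate a node going down, then let the swarm correct itself.
        self.nodes.discard(node)
        self.reconcile()


swarm = ToySwarm(nodes={"node-1", "node-2", "node-3"}, desired=3)
swarm.reconcile()
print(swarm.tasks)          # three tasks, one per node
swarm.fail_node("node-2")
print(swarm.tasks)          # still three tasks, none on node-2
```

The caller never starts or stops a task directly. It changes only the declared state (the replica count or the set of healthy nodes), and the reconcile step works out what has to happen.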

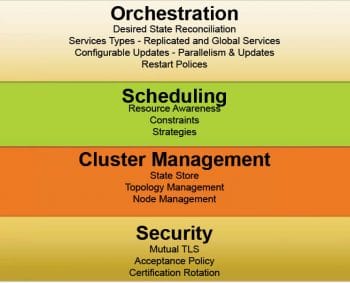

Docker Swarm Mode is used to orchestrate distributed systems at any scale. It includes primitives for node discovery, Raft-based consensus, task scheduling and much more. Let’s look at the features Docker Swarm Mode adds to Docker Cluster functionality, as shown in Figure 2.

Looking at these features, Docker Swarm Mode brings the following benefits.

- Distributed: Swarm Mode uses the Raft consensus algorithm to coordinate, so decision making does not depend on a single point of failure.

- Secure: Node communication and membership within a swarm are secure out of the box. Swarm Mode uses mutual TLS for node authentication, role authorisation and transport encryption, and it automates both certificate issuance and rotation.

- Simple: Swarm Mode is operationally simple and minimises infrastructure dependencies. It does not need an external database to operate, because it uses its own internal distributed state store.

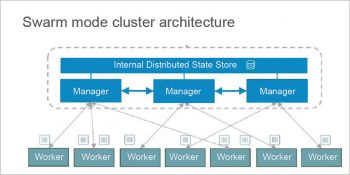

Figure 3 depicts the Swarm Mode cluster architecture. Fundamentally, it’s a master and slave architecture. Every node in a swarm is a Docker host running a Docker engine. Some of the nodes have a privileged role called the manager, and these manager nodes participate in the Raft consensus group. As shown in Figure 3, the components in blue share the internal distributed state store of the cluster, while the green boxes are worker nodes. The worker nodes receive work instructions from the manager group, as indicated by the dashed lines.

Getting started with Docker Engine 1.12

In this section, we will cover the following aspects:

- Initialising the Swarm Mode

- Creating the services and tasks

- Scaling the service

- Rolling updates

- Promoting a node to the manager group

To test drive Swarm Mode, I used a four-node cluster on Google Compute Engine, with all nodes running the latest stable Ubuntu 16.04 system, as shown in Figure 4.

Initialising the Swarm Mode

At the time of writing, Docker 1.12 is still at the release candidate stage. Setting up Docker 1.12-rc2 on all the nodes should be simple enough with the following command, run as root:

#curl -fsSL https://test.docker.com/ | sh

Run the ‘docker swarm init’ command (as shown in Figure 5) to initialise Swarm Mode on the master node.

The node listing on the Docker Swarm master node is shown in Figure 6.

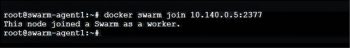

Let us add the first Swarm agent node (worker node) as shown in Figure 7.

Let’s go back to the Swarm master node to see the latest Swarm Mode status.

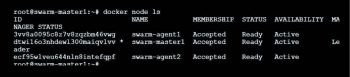

Similarly, we can add other Swarm agent nodes to the swarm cluster and see the listings, as shown in Figure 8.

Creating services and tasks

Let’s create a single service called collab, which uses the busybox image from Docker Hub. All it does is ping the collabnix.com website.
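For readers who script their clusters, the same service can be expressed as an argument list for the Docker CLI. This is only a sketch: it assumes the ‘--name’ and ‘--replicas’ flags of ‘docker service create’, the helper function service_create_argv is hypothetical, and the exact command captured in the figures may differ.

```python
import shlex


def service_create_argv(name, image, command, replicas=1):
    """Build the argument list for `docker service create` (hypothetical helper)."""
    return [
        "docker", "service", "create",
        "--name", name,                 # service name, here "collab"
        "--replicas", str(replicas),    # desired number of tasks
        image,                          # image pulled from Docker Hub
        *command,                       # command run inside each container
    ]


argv = service_create_argv("collab", "busybox", ["ping", "collabnix.com"])

# Print the command line instead of executing it, so the sketch is safe to run anywhere.
print(" ".join(shlex.quote(arg) for arg in argv))
```

On a manager node, the list could be passed to subprocess.run(argv, check=True) to create the service for real.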

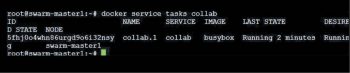

A task is the atomic unit of a service. Whenever we add a new service, we actually create at least one task for it. For example, as shown in Figure 11, creating the collab service has created a task called collab.

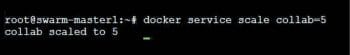

Scaling the service

To scale the service to 5, run the command shown in Figure 12.

Now you can see that there are five different containers running across the cluster, as shown in Figure 13.

The command in Figure 12 declares a desired state on your swarm of five busybox containers, reachable as a single, internally load-balanced service from any node in your swarm.
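The idea of five containers behind a single service name can be pictured with a very small model. The sketch below assumes plain round-robin selection and uses illustrative task names. It is a way to reason about the behaviour, not a description of how the engine balances traffic internally.

```python
from itertools import cycle, islice

# Five replicas of the collab service (names are illustrative).
tasks = [f"collab.{slot}" for slot in range(1, 6)]

# A round-robin picker: each request goes to the next task in turn.
next_backend = cycle(tasks)

# Route seven consecutive requests and record which task served each one.
served_by = list(islice(next_backend, 7))
print(served_by)
```

After the fifth request the picker wraps around, so the sixth and seventh requests land on the first and second tasks again.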

Rolling updates

Updating a service is pretty simple. The ‘docker service update’ command is feature-rich and provides plenty of options for changing a running service. Let’s try updating the redis container from 3.0.6 to 3.0.7 with a 10s delay and a parallelism count of 2.
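To see what a delay of 10s and a parallelism of 2 mean in practice, the update can be laid out as a schedule of batches. The sketch below assumes a redis service with five tasks (the replica count and task names are hypothetical) and reports only the earliest moment each batch can start. It ignores the time each task takes to restart on the new image.

```python
def rolling_update_plan(tasks, parallelism, delay_s):
    """Split tasks into batches and give each batch its earliest start offset in seconds."""
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    plan = []
    for i in range(0, len(tasks), parallelism):
        batch_number = i // parallelism
        # Each batch waits delay_s seconds after the previous batch has started.
        plan.append((batch_number * delay_s, tasks[i:i + parallelism]))
    return plan


redis_tasks = [f"redis.{slot}" for slot in range(1, 6)]   # assumed: five replicas
plan = rolling_update_plan(redis_tasks, parallelism=2, delay_s=10)

for offset, batch in plan:
    print(f"t+{offset}s: update {', '.join(batch)}")
```

With these assumed numbers the update runs in three batches, so at most two tasks are being replaced at any moment while the others keep serving.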

Promoting a node to the manager group

Let’s try to promote Swarm Agent Node-1 to the manager group, as shown in Figure 14.

To summarise, Docker Engine 1.12 comes with a built-in distributed platform that makes multi-host, multi-container orchestration easy. It has new API objects, such as services and nodes, that let you use the Docker API to deploy and manage apps on a group of Docker engines, and it provides service scaling, rolling updates and node promotion.
