Linux containers are an operating-system-level virtualisation method used to run multiple isolated Linux systems on a control host with a single Linux kernel.

If you look up ‘container’ in a dictionary, you will find something like ‘an object for holding and transporting something’. In a similar spirit, Linux containers (often abbreviated as LXC) package software and provide virtualisation at the level of the Linux operating system. Tough to comprehend? Wait! Suppose your enterprise needs to move code from one environment to another, or you want to keep your machines and servers tidy and clean. Whether those systems are used for development or production, or you simply want to try out a different application or development stack on your regular machine without contaminating it, the solution is containers. Linux containers let enterprises and companies wrap and isolate their products together with all the files needed to run them. This makes it easy to move containerised code between environments while retaining its full functionality. Technically, containers are created for the services that your server provides, and all the containers share the underlying hardware resources and kernel.

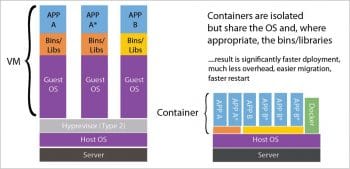

Linux containers are often mistaken for virtual machines (VMs). A virtual machine is an emulation of a real computer that acts and responds like a physical machine. The main difference is that containers share the host machine’s kernel with all the other containers running on it, whereas a hypervisor lets one machine run several VMs, each with its own complete operating system and kernel. Sharing the kernel makes containers lightweight compared to VMs and lets them use memory more efficiently. Containers have a number of benefits, some of which are listed below:

1. Containers usually need only megabytes of memory, whereas a virtual machine can occupy several gigabytes. As a result, a single server can host far more containers than VMs.

2. Since containers are lightweight and have no operating system kernel of their own to boot, they start up quickly and can be shut down almost instantly. Virtual machines take much longer to boot up and shut down.

3. Containers also make development more modular. Instead of running an entire application in a single container, you can split it into modules, such as the front-end and the database, and run each module in its own container. This can improve an application's performance and also makes the code easier to maintain.
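The density argument in point 1 is, at heart, simple arithmetic. The sketch below estimates how many instances fit in a server's memory. Every figure in it is a hypothetical placeholder rather than a measurement, and it deliberately ignores CPU, disk I/O and memory overcommit, all of which affect real-world density.

```python
def max_instances(host_ram_mib: int, reserved_mib: int, per_instance_mib: int) -> int:
    """Number of instances that fit in the memory left after the host's reservation."""
    usable = host_ram_mib - reserved_mib
    if usable <= 0 or per_instance_mib <= 0:
        return 0
    return usable // per_instance_mib


# Hypothetical figures, for illustration only.
HOST_RAM_MIB = 64 * 1024   # a 64 GiB server
RESERVED_MIB = 4 * 1024    # kept back for the host operating system
VM_MIB = 2 * 1024          # a VM carries a whole guest OS plus the application
CONTAINER_MIB = 128        # a container carries only the application and its files

vms = max_instances(HOST_RAM_MIB, RESERVED_MIB, VM_MIB)
containers = max_instances(HOST_RAM_MIB, RESERVED_MIB, CONTAINER_MIB)
print(f"VMs: {vms}, containers: {containers}")  # VMs: 30, containers: 480
```

Replacing the placeholders with measured memory footprints from your own workloads turns this into a rough first estimate of how many containers a host can carry.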

Because of these benefits, Linux containers are gaining significant ground, and many corporations now use containerisation in their software development and testing cycles. Container infrastructure is also growing quickly in cloud computing and cloud-based services.

History of Linux containers

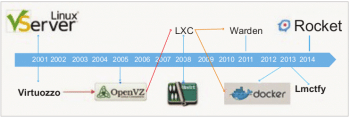

Container technology evolved from a number of earlier innovations and ideas. A lot of work has been done on containerisation since 1979, and it has given birth to present-day container management systems like Docker.

- 1979 – UNIX V7: During the development of UNIX V7 in 1979, the chroot system call was introduced. It changes the root directory of a process and its children to a new location in the file system. The idea was to give each process an isolated view of the file system. This was the first step towards containerisation.

- 2000 – FreeBSD Jails: About 20 years later, in 2000, Derrick T. Woolworth, the owner of R&D Associates Inc., came up with one of the earliest container technologies and named it FreeBSD Jails. Its purpose was to partition a computer system into several independent, smaller systems called jails. Each jail could be assigned its own IP address, software installations and configuration.

- 2001 – Linux VServer: Similar to FreeBSD’s jail mechanism, Linux VServer could securely partition resources on a computer system by means of a patched Linux kernel. Each partition is called a security context, and the virtualised system within it is called a virtual private server. Its last stable patch was released in 2006.

- 2004 – Oracle Solaris container: Sun Microsystems (acquired by Oracle in 2010) released the first public beta of Solaris Containers in 2004, followed by the full release in 2005. Solaris Containers combined system resource controls with the boundary separation provided by zones. Each zone acts as a completely isolated server within a single operating system instance.

- 2005 – OpenVZ (Open Virtuozzo): Like Solaris Containers, OpenVZ provides operating-system-level virtualisation, but it does so on Linux, using a patched Linux kernel for isolation, resource management and virtualisation. Each container has its own isolated file system, users and user groups, devices, networks, etc.

- 2006 – Process containers: Google introduced process containers in 2006 to limit and isolate the resource usage of a collection of processes. They were later renamed control groups (cgroups) to avoid confusion with the term ‘container’ as used in the Linux kernel, and were ultimately merged into Linux kernel 2.6.24.

- 2008 – LXC: LXC was the first and most complete implementation of a Linux container manager. It was built on cgroups and Linux namespaces, and it works on the vanilla Linux kernel without requiring any patches. It is widely used, and it provides bindings for its API in several languages, such as Java, Ruby and Python 3.

- 2011 – Warden: Cloud Foundry developed Warden in 2011. Warden was not limited to the Linux operating system; it was designed to isolate environments on any OS. In its early stages it was based on LXC, but it later replaced LXC with its own implementation. It runs as a daemon that isolates environments and provides an API for managing the containers.

- 2013 – LMCTFY: LMCTFY, or ‘Let Me Contain That For You’, can be thought of as an open source version of Google’s container stack. The aim of the project was to provide Linux application containers with high utilisation of shared resources, so as to get the maximum performance out of the containers. The project became a benchmark in the field, and many organisations have built tools as a result of it. After Google contributed LMCTFY’s core concepts to libcontainer, work on the project stopped in 2015.

- 2013 – Docker: When Docker appeared in 2013, it was the most efficient container management system, and it is still regarded as the market leader. Docker began as a project at dotCloud, a platform-as-a-service company that later renamed itself Docker Inc. Like Warden, it initially used LXC, but it later switched to its own container library, libcontainer. What set it apart from earlier container management systems was that it offered a complete ecosystem for handling containers.

- 2016 – Windows containers: Seeing the popularity of containers on Linux, Microsoft added container support to Windows with the release of Windows Server 2016.
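Two entries in the timeline, control groups and namespaces, remain the kernel features that LXC, Docker and most other Linux container runtimes build on: namespaces limit what a process can see, and cgroups limit what it can use. The Python sketch below is our own illustration, not LXC's code. It reads a process's namespaces from /proc and creates a resource-limited cgroup. It assumes Linux, a cgroup v2 hierarchy mounted at /sys/fs/cgroup with the memory and pids controllers enabled for child groups, and root privileges for the cgroup part. Note that the 2.6.24 kernel mentioned above shipped the original (v1) cgroup interface; the v2 files used here (memory.max, pids.max, cgroup.procs) arrived later, with Linux 4.5. Creating new namespaces (for example with the unshare(2) system call, exposed in Python 3.12 as os.unshare) needs privileges, so the sketch only inspects existing ones. The group name and limits in the usage note are hypothetical.

```python
from __future__ import annotations

import os
from pathlib import Path


# --- Namespaces: what a process can see ----------------------------------
def namespace_ids(pid: int | str = "self") -> dict[str, str]:
    """Map each namespace type of a process to its identifier.

    Each entry in /proc/<pid>/ns is a symbolic link such as 'uts:[4026531838]';
    two processes share a namespace exactly when their links are identical.
    """
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name))
            for name in sorted(os.listdir(ns_dir))}


def shared_namespaces(pid_a: int | str, pid_b: int | str) -> list[str]:
    """Namespace types that two processes have in common."""
    a, b = namespace_ids(pid_a), namespace_ids(pid_b)
    return [name for name, ident in a.items() if b.get(name) == ident]


# --- cgroups: what a process can use -------------------------------------
# Assumption: cgroup v2 mounted here, with the memory and pids controllers
# listed in the parent group's cgroup.subtree_control.
CGROUP_ROOT = Path("/sys/fs/cgroup")


def create_limited_group(name: str, memory_max_bytes: int, pids_max: int,
                         root: Path = CGROUP_ROOT) -> Path:
    """Create a child cgroup that caps memory use and the number of processes."""
    group = root / name
    group.mkdir(exist_ok=True)  # on cgroupfs the kernel creates the control files
    (group / "memory.max").write_text(f"{memory_max_bytes}\n")
    (group / "pids.max").write_text(f"{pids_max}\n")
    return group


def add_process(group: Path, pid: int) -> None:
    """Move a process into the group; children it forks later start there too."""
    (group / "cgroup.procs").write_text(f"{pid}\n")
```

Run as root, `add_process(create_limited_group("demo", 256 * 1024 * 1024, 64), os.getpid())` would confine the current process to 256 MiB of memory and 64 processes. Passing a temporary directory as `root` lets you try the file layout without touching the real hierarchy, and comparing `namespace_ids()` inside and outside a container shows which namespaces the runtime has replaced.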

Security of containers

The security of Linux containers is of paramount importance, especially when you are dealing with sensitive data, such as in banking. Since different software is installed in different containers, it is very important to secure each container properly to prevent intrusion attempts. Also, all the containers share the same Linux kernel, so a vulnerability in the kernel affects every container running on it. This is why some people consider virtual machines far more secure than Linux containers. VMs are not completely secure either, because the hypervisor can itself be attacked, but a hypervisor is less vulnerable than a full kernel because of its limited functionality. A lot of progress has been made in making containers safe and secure. Docker and other management systems now let administrators sign container images and enforce those signatures, so that untrusted images are not deployed.

Here are some of the ways to make your containers more secure.

Updating the kernel: The kernel is one of the most vulnerable components in container management, since it is shared among all the containers. So take special care to keep it up to date, applying updates as soon as they are available. Updating a kernel, however, is a two-step process: first install the updated kernel, and then reboot so that the new kernel is actually running. Live-patching software like Ksplice can update a running kernel without a reboot.
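A common slip is completing the first step but not the second, leaving the old kernel running. A quick check is to compare the running kernel's release against a minimum version. The Python sketch below assumes Linux, and its threshold is hypothetical; take the real one from your vendor's security advisory. Distributions also backport fixes without changing the upstream version number, so this comparison is only a first approximation of the package-version check a real update policy would use.

```python
from __future__ import annotations

import os
import re


def kernel_version(release: str) -> tuple[int, int, int]:
    """Parse (major, minor, patch) from a release such as '5.15.0-91-generic'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    major, minor, patch = match.groups(default="0")
    return int(major), int(minor), int(patch)


def running_kernel_at_least(minimum: tuple[int, int, int]) -> bool:
    """Check the kernel that is actually running, not the one merely installed.

    Inside a container, os.uname() reports the host's kernel, because the
    kernel is shared.
    """
    return kernel_version(os.uname().release) >= minimum


# Hypothetical threshold: take the real one from your vendor's advisory.
REQUIRED = (5, 15, 0)
if not running_kernel_at_least(REQUIRED):
    print("Running kernel is older than required: install updates and reboot")
```

Because every container reports the host's kernel release, running the same check inside any container on a host tells you about the kernel that all of them depend on.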

Access controls: A good understanding of two kinds of control, discretionary access control (DAC) and mandatory access control (MAC), also helps keep containers safe. As the name suggests, under DAC the owner of a resource decides, at their discretion, who may access it. File system permissions are a typical example of DAC: if the owner of a file sets its mode to 0600, no other user can read or write it. DAC is easy to use, which makes it a good first line of protection for containers and their contents. MAC, on the other hand, enforces system-wide policies (for example, SELinux or AppArmor profiles) that users cannot override; they can only be bypassed by changing the policy or disabling MAC entirely. These controls can be harder to troubleshoot, since their effects are less visible on the system than ordinary file permissions.
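The 0600 example can be reproduced with Python's standard `os` and `stat` modules. This sketch creates a throwaway temporary file, prints its permission string before and after `chmod`, and checks whether the group or other users have any permission bits. Keep in mind that root, or more precisely a process with the CAP_DAC_OVERRIDE capability, bypasses these checks, which is one reason MAC is layered on top of DAC.

```python
import os
import stat
import tempfile


def others_can_access(path: str) -> bool:
    """True if the file's group or 'other' users have any permission bit set."""
    mode = os.stat(path).st_mode
    return bool(mode & (stat.S_IRWXG | stat.S_IRWXO))


fd, secret = tempfile.mkstemp()  # a throwaway file owned by the current user
os.close(fd)

os.chmod(secret, 0o644)  # owner: read/write; group and others: read
print(stat.filemode(os.stat(secret).st_mode), others_can_access(secret))
# -rw-r--r-- True

os.chmod(secret, 0o600)  # owner: read/write; group and others: nothing
print(stat.filemode(os.stat(secret).st_mode), others_can_access(secret))
# -rw------- False

os.remove(secret)
```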

Securing system calls: Another way to secure container systems is to restrict the system calls (syscalls) that processes may make, so that unwanted calls are never executed. On Linux this is done with seccomp. Whenever a process makes a call that the policy does not allow, the call is blocked or the process is killed, and the security of the system is maintained.
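Docker applies a default seccomp profile to containers and also accepts custom profiles through `docker run --security-opt seccomp=<file>`. The Python sketch below builds an allowlist profile in the JSON layout those profiles use (`defaultAction` plus a list of `syscalls` rules), and a small function that models the filter's decision for a single syscall name. The allowlist is hypothetical and deliberately tiny: a real container needs many more syscalls, and with `SCMP_ACT_KILL` as the default action it would be terminated almost immediately. Check Docker's documentation for the full schema before using a generated file.

```python
import json


def allowlist_profile(allowed_syscalls, default_action="SCMP_ACT_KILL"):
    """Build a seccomp profile in the JSON layout used by Docker's profiles.

    Syscalls named in `allowed_syscalls` are allowed; any other syscall gets
    `default_action` (SCMP_ACT_KILL terminates the offending thread,
    SCMP_ACT_ERRNO makes the call fail with an error instead).
    """
    return {
        "defaultAction": default_action,
        "syscalls": [
            {"names": sorted(set(allowed_syscalls)), "action": "SCMP_ACT_ALLOW"},
        ],
    }


def action_for(profile, syscall):
    """Model the decision the filter makes for one syscall name."""
    for rule in profile["syscalls"]:
        if syscall in rule["names"]:
            return rule["action"]
    return profile["defaultAction"]


# Hypothetical, deliberately tiny allowlist; a real workload needs many more.
profile = allowlist_profile(["read", "write", "exit_group"])
print(action_for(profile, "write"))  # SCMP_ACT_ALLOW
print(action_for(profile, "mount"))  # SCMP_ACT_KILL
print(json.dumps(profile, indent=2))
```

Choosing `SCMP_ACT_ERRNO` as the default is gentler than killing: the disallowed call simply fails, which makes it easier to discover which syscalls an application really needs.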

Advantages and disadvantages of using containers

Advantages:

- Running different servers on one machine minimises the hardware resources used and the power consumption.

- Reduces time to market for the product since less time is required in development, deployment and testing of services.

- Containers provide great opportunities for DevOps and CI/CD.

- Space occupied by container based virtualisation is much less than that occupied by virtual machines.

- Containers start in a few seconds, so data centres can quickly launch more of them when the load increases.

Disadvantages:

- Security is the main bottleneck in implementing and using container technology.

- Efficient networking is also required to connect containers deployed in isolated locations.

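- Containers offer less choice of operating system than virtual machines: because every container shares the host’s kernel, a Linux host cannot natively run containers that need a different kernel, such as Windows.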

The road ahead

The future of Linux containers looks very promising, since more and more organisations are moving towards them and away from virtual machines, due to the lower cost and greater flexibility. Tech giants like Google and Microsoft have also contributed to the container space, and used container technology to provide support for their products.

Host clusters are another emerging trend in the IT world. The technology is still in its early stages, but it should become more stable and robust in the coming years. A host cluster runs containers across several hosts so that there is no single point of failure. Open source projects in this space include Apache Mesos, Kubernetes and Docker Swarm.
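The core idea, keeping the replicas of a service on different hosts so that one failure cannot take them all down, can be shown with a toy round-robin placement. This is a hypothetical Python sketch, not how Mesos, Kubernetes or Docker Swarm actually schedule; their schedulers also weigh resource requests, placement constraints and host health. The host names are made up.

```python
from __future__ import annotations

from itertools import cycle


def spread_replicas(replicas: int, hosts: list[str]) -> dict[str, list[int]]:
    """Place replica numbers on hosts round-robin.

    With at least as many hosts as replicas, no two replicas share a host,
    so losing any single host takes down at most one replica.
    """
    if not hosts:
        raise ValueError("need at least one host")
    placement: dict[str, list[int]] = {host: [] for host in hosts}
    for replica, host in zip(range(replicas), cycle(hosts)):
        placement[host].append(replica)
    return placement


def survivors_after_failure(placement: dict[str, list[int]], failed_host: str) -> int:
    """Count the replicas still running if `failed_host` goes down."""
    return sum(len(reps) for host, reps in placement.items() if host != failed_host)


# Hypothetical host names.
plan = spread_replicas(3, ["node-a", "node-b", "node-c"])
print(plan)                                     # {'node-a': [0], 'node-b': [1], 'node-c': [2]}
print(survivors_after_failure(plan, "node-b"))  # 2
```

Packing all three replicas onto one host would use the same resources, but a single host failure would then leave zero survivors instead of two.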

Containers are also being used in cloud computing to provide cloud-based services. Container-based infrastructure is a fast-evolving cloud technology because containers are lightweight and perform well.

It can now safely be said that Linux containers will replace virtual machines for many workloads pretty soon.