Linux containers (LXC) are a form of OS-level virtualisation that runs several isolated applications on a single control host. Through containerisation, developers can package applications together with their dependencies so that the apps run consistently on other host systems.

Linux containers run applications in isolation from the host system they are running on. Containers allow developers to package an application with the libraries and dependencies it needs and ship it as one package. For developers and sysadmins, this makes moving code from development environments into production fast and repeatable.

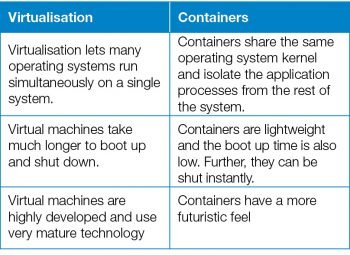

Containers are similar to virtual machines, but with containers one does not have to replicate a whole operating system. A container only needs the individual components its application requires in order to run, which improves performance and keeps the application's footprint small.

A perfect example to understand containers

To understand containers better, just imagine that you are developing an application. You do your work on a laptop and your environment has a specific configuration, which might be different from that of other developers. The application you’re developing relies on that configuration and is dependent on specific files. Meanwhile, your business has test and production environments which are standardised with their own configurations and their own sets of supporting files. You want to emulate those environments as much as possible locally but without all of the overhead of recreating the server environments. So, how do you make your app work across these environments, pass quality assurance and get your app deployed without massive headaches, rewriting or break-fixing? The answer is: containers.

The container that holds your application has the necessary configuration (and files) so that you can move it from development, to testing and on to production seamlessly. So a crisis is averted and everyone is happy!

That’s a basic example, but Linux containers can be applied to many different problems that call for portability, configurability and isolation. Whether the infrastructure is on-premises, in the cloud or a hybrid of the two, containers can help. Of course, choosing the right container platform is just as important as the containers themselves.

How do I orchestrate containers?

Orchestrators manage the nodes and the containers that run the workload, with the goal of maintaining a stable and scalable environment. This includes auto-scaling and self-healing capabilities, such as taking nodes offline when abnormal behaviour is detected and restarting failed containers. It also includes the ability to set resource constraints so that your cluster's capacity is used efficiently. Popular orchestration platforms include Kubernetes, Docker Swarm, Docker EE and Red Hat OpenShift.
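The control loop behind auto-scaling and self-healing can be illustrated with a toy model. The Python sketch below is hypothetical and is not the API of Kubernetes or any other orchestrator. `Node`, `Container` and `reconcile` are invented names, and CPU requests in millicores stand in for resource constraints. A node is simply flagged as unhealthy rather than detected by real health checks. Each call compares the actual state with the desired number of replicas and corrects the difference.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    cpu_request: int      # resource constraint declared for the container (millicores)
    running: bool = True


@dataclass
class Node:
    name: str
    cpu_capacity: int     # CPU the node can offer (millicores)
    healthy: bool = True
    containers: list = field(default_factory=list)

    def cpu_used(self) -> int:
        return sum(c.cpu_request for c in self.containers)


def reconcile(nodes, desired_replicas, cpu_request, app="web"):
    """One pass of a toy control loop; returns the number of replicas now placed."""
    # Self-healing: containers on a node flagged as unhealthy are lost
    # (the node is taken out of service) and are rescheduled below.
    for node in nodes:
        if not node.healthy:
            node.containers.clear()
    healthy = [n for n in nodes if n.healthy]
    # Self-healing: restart any container that has stopped.
    for node in healthy:
        for container in node.containers:
            container.running = True
    current = sum(len(n.containers) for n in healthy)
    # Scale up, placing each replica on the least-loaded node where it still fits.
    while current < desired_replicas:
        fits = [n for n in healthy if n.cpu_used() + cpu_request <= n.cpu_capacity]
        if not fits:
            break  # not enough capacity left; the remaining replicas stay unscheduled
        target = min(fits, key=lambda n: n.cpu_used())
        target.containers.append(Container(f"{app}-{current}", cpu_request))
        current += 1
    # Scale down by removing surplus replicas from the busiest node.
    while current > desired_replicas:
        busiest = max(healthy, key=lambda n: len(n.containers))
        busiest.containers.pop()
        current -= 1
    return current


if __name__ == "__main__":
    cluster = [Node("node-a", cpu_capacity=1000), Node("node-b", cpu_capacity=1000)]
    print(reconcile(cluster, desired_replicas=3, cpu_request=400))  # → 3
    cluster[0].healthy = False  # node-a starts behaving abnormally
    print(reconcile(cluster, desired_replicas=3, cpu_request=400))  # → 2 (node-b is full)
```

In a real cluster this comparison runs continuously. A production orchestrator would also keep the replicas it cannot place in a pending state and report them, rather than just stopping as this sketch does.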

Linux containers help reduce friction between your development and operations teams by separating areas of responsibility. Developers can concentrate on their apps, and the operations team can focus on the infrastructure. Because Linux containers are built on open source technology, you get new advances as soon as they’re available. Container technologies, including CRI-O, Kubernetes and Docker, help your team simplify, speed up and orchestrate application development and deployment.

But isn’t this just virtualisation?

Yes and no. Here’s an easy way to think about the two. Virtualisation lets several operating systems run simultaneously on a single hardware system, each inside a virtual machine with its own kernel. Containers, by contrast, share the host's Linux kernel and isolate only the application processes from the rest of the system.
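Because containers share the host's kernel, their isolation comes from kernel features, chiefly namespaces (with cgroups used for resource limits). The Linux-only Python sketch below prints the kernel release and the namespace identifiers of the current process. `namespace_ids` is a hypothetical helper name. If you run the script on the host and then inside a container, the kernel release should be identical. Most of the namespace identifiers (for example `pid`, `mnt` and `net`) will typically differ.

```python
import os
import platform


def namespace_ids(pid="self"):
    """Return the kernel namespace identifiers of a process (Linux only).

    Each entry under /proc/<pid>/ns is a symbolic link such as 'pid:[4026531836]';
    two processes with the same link target share that namespace.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, no such process, or /proc is not mounted
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            pass  # other users' processes may be unreadable without privileges
    return ids


if __name__ == "__main__":
    # The kernel release reported inside a container is the host's,
    # because containers do not bring their own kernel.
    print("kernel:", platform.release())
    for name, ident in namespace_ids().items():
        print(f"{name:20} {ident}")
```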

Security of containers

The security of Linux containers is of paramount importance, especially if you are dealing with sensitive data, as in banking. Since different software runs in different containers, it is very important to secure each container properly to guard against attacks. Also, all the containers on a host share the same Linux kernel, so a vulnerability in the kernel itself can affect every container running on it. This is why some people consider virtual machines far more secure than Linux containers.

VMs are not completely secure either, since the hypervisor can itself be attacked. However, a hypervisor is less vulnerable than a shared kernel because its functionality is limited. A lot of progress has been made in making containers safe and secure. Docker and other container management systems now let administrators sign container images and enforce signature checks, so that untrusted images are not deployed.

Here are some of the ways to make your containers more secure:

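- Keeping the host kernel updated, since every container depends on it

- Access controls (for example, mandatory access control with SELinux or AppArmor)

- Restricting the system calls that containers can make (for example, with seccomp profiles)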
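As an illustration of these measures, the Python sketch below assembles a `docker run` command line with some commonly used hardening options. These include running as a non-root user, dropping all Linux capabilities, blocking privilege escalation, a read-only root filesystem, resource limits and, optionally, a custom seccomp profile for restricting system calls. The helper name `hardened_run_args`, the image name and the user ID are assumptions for illustration. Keeping the host kernel updated happens outside Docker and is not shown. Note that Docker already applies a default seccomp profile when none is specified.

```python
import shlex


def hardened_run_args(image, user="1000:1000", seccomp_profile=None,
                      memory="256m", pids_limit=100):
    """Return a `docker run` argument list with common hardening options applied."""
    args = [
        "docker", "run", "--rm",
        "--user", user,                         # run as a non-root UID:GID inside the container
        "--cap-drop", "ALL",                    # drop every Linux capability
        "--security-opt", "no-new-privileges",  # stop setuid binaries from gaining privileges
        "--read-only",                          # mount the container's root filesystem read-only
        "--memory", memory,                     # cap memory usage
        "--pids-limit", str(pids_limit),        # cap the number of processes
    ]
    if seccomp_profile is not None:
        # Replace Docker's default seccomp profile with a custom JSON profile on the host.
        args += ["--security-opt", f"seccomp={seccomp_profile}"]
    args.append(image)
    return args


if __name__ == "__main__":
    cmd = hardened_run_args("example/app:1.0")  # hypothetical image name
    print(shlex.join(cmd))
    # To start the container on a host with Docker installed, pass `cmd`
    # to subprocess.run(cmd, check=True).
```

Many applications need some capabilities or writable paths. In practice you would add back only what the application requires, for example with `--cap-add` or a `--tmpfs` mount.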

Advantages and disadvantages of using containers

Advantages:

- Running several isolated services on one machine minimises hardware usage and power consumption.

- Reduces time to market for the product, since less time is required for developing, testing and deploying services.

- Containers provide great opportunities for DevOps and CI/CD.

- Container-based virtualisation takes up much less space than virtual machines.

- Containers start in a few seconds, so in data centres they can be brought up quickly to handle higher loads.

Disadvantages:

- Security is the main concern when implementing and using container technology.

- Efficient networking is required to connect containers deployed in isolated locations.

- Containers offer less choice of operating system than virtual machines, because every container must run on the host's kernel.

The most important thing about containers is the process of using them, not the containers themselves. This process is heavily automated: you are no longer required to install software by sitting in front of a console and clicking ‘Next’ every five minutes. The process is also heavily integrated, with software deployment coupled to the development process itself. This is why cloud native applications are being driven by application developers. They write both the applications and the software abstractions of the infrastructure those applications are deployed into. When they check in code to GitHub, other code (which they also wrote) notices the change and starts testing, building, packaging and deploying it. If you want, the entire process can be fully automated, so that new code is pushed to production as a direct result of being checked in.
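The check-in-to-production flow described above can be sketched as an ordered list of stages that stops at the first failure. This is a toy Python model, not any particular CI/CD product. The stage functions are hypothetical placeholders; a real pipeline would invoke a test runner, a container image build and a deployment to the orchestrator. The trigger from a GitHub push, typically a webhook, is not modelled.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that takes a commit ID and reports success.
Stage = Tuple[str, Callable[[str], bool]]


def run_pipeline(commit: str, stages: List[Stage]) -> List[str]:
    """Run the stages in order for one commit and return the names that succeeded."""
    completed = []
    for name, step in stages:
        if not step(commit):
            print(f"{commit}: stage '{name}' failed; later stages are skipped")
            break
        completed.append(name)
    return completed


# Hypothetical placeholder stages.
def run_tests(commit: str) -> bool:
    return not commit.startswith("broken")  # pretend some commits fail their tests


def build_image(commit: str) -> bool:
    return True


def package_image(commit: str) -> bool:
    return True


def deploy(commit: str) -> bool:
    return True


PIPELINE: List[Stage] = [
    ("test", run_tests),
    ("build", build_image),
    ("package", package_image),
    ("deploy", deploy),
]

if __name__ == "__main__":
    print(run_pipeline("a1b2c3", PIPELINE))     # ['test', 'build', 'package', 'deploy']
    print(run_pipeline("broken-42", PIPELINE))  # [] (after the failure message)
```

Because deployment is simply the last stage, a commit reaches production only if every earlier stage succeeds. With no manual step in between, checking in code is enough to trigger a release.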