Among container orchestration technologies, Kubernetes and Amazon ECS lead the pack. However, on what basis should systems administrators choose one over the other? This article will help them make an informed choice.

Shipping containers are ubiquitous and standardised: they can be handled anywhere in the world by following simple, well-defined procedures. Software containers work in a somewhat similar manner. Packing a software container involves defining everything your application needs in order to work. This includes the base operating system files, library files, configuration files, application binaries and other components of the technology stack.

A container image is used to create containers that can run in almost any environment, from standard desktop systems to data centres and the cloud. Containers are highly efficient, because many of them can run on the same machine, allowing full use of its resources. The ideas behind container technology date back to the late 1970s, but containers became extremely popular only after Docker was introduced in 2013. Since then, container technology has drastically changed the DevOps landscape and the way applications are developed, shipped and run.

A single container ordinarily runs a single application. Running many containers together, coordinated across multiple hosts, is made practical by container orchestration.

The importance of containers

- Effective DevOps and continuous delivery: When an application consists of multiple containers with clear interfaces between them, it is simple to update one container, assess the impact and, if necessary, roll back to an earlier version. With multiple containers, the same capability makes it possible to update every container in turn without interrupting the service.

- Environment replication: With containers, it is fairly easy to instantiate identical copies of the full application stack and its configuration. New developers and new support teams can then use these copies to research and experiment safely, in total isolation.

- Testing: Containers make it easy to run stress tests on a replica of the environment, to confirm that the environment meets normal operational requirements.

- Scalability: When an application is built from multiple container instances, adding more containers scales out capacity and throughput. Similarly, containers can be removed when demand falls. Orchestration frameworks such as Kubernetes and Apache Mesos further simplify elastic scaling.

- Performance: Compared to virtual machines, containers are very lightweight, because they share the host's kernel instead of running a full guest operating system, so their impact on performance is minimal.

- High availability: Deploying multiple containers builds redundancy into the application. If any container fails, its peers take over and continue to provide the service.
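To make elastic scaling concrete: the Kubernetes Horizontal Pod Autoscaler documents its basic rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The following is a minimal Python sketch of that arithmetic only, assuming a single metric such as average CPU utilisation. The function name and the `min_replicas`/`max_replicas` bounds are illustrative; the `tolerance` of 0.1 mirrors the autoscaler's default, and the real controller also applies stabilisation windows that are not modelled here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the Horizontal Pod Autoscaler's core scaling rule."""
    ratio = current_metric / target_metric
    # Inside the tolerance band (default 10%), leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the illustrative min/max bounds.
    return max(min_replicas, min(max_replicas, desired))


# 3 replicas averaging 90% CPU against a 60% target: scale out to 5.
print(desired_replicas(3, current_metric=90.0, target_metric=60.0))  # 5
```

Scaling in uses the same formula: when the metric falls well below the target, the ratio drops below 1 and the replica count shrinks, down to the configured minimum.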

Container orchestration

Orchestration, in general, is the automated arrangement, coordination and management of computer systems, middleware and services. Container orchestration applies this to containers, automating how they are deployed, connected, scaled and managed.

Orchestration is closely linked with service-oriented architecture, virtualisation, provisioning, converged infrastructure and dynamic data centres. It aligns business requests with the applications, data and infrastructure, and defines policies and service levels through automated workflows, provisioning and change management. The result is an application-aligned infrastructure that can be scaled up or down depending on application needs. Orchestration also reduces the time and effort needed to deploy multiple instances of a single application.

Container orchestration tools offer powerful and effective solutions for creating, managing and updating multiple containers in coordination across multiple hosts. Orchestration also allows services to share data with each other and to process tasks asynchronously.

Orchestration tools typically offer the following features:

- Provisioning hosts

- Instantiating a set of containers

- Rescheduling failed containers

- Linking containers together through simple administration-oriented interfaces, such as GUIs

- Exposing services to machines outside of the cluster

- Scaling the cluster in or out by adding or removing containers at any time
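Features such as instantiating a set of containers and rescheduling failed ones are commonly built on a desired-state (reconciliation) loop: the orchestrator compares what should be running with what is running and closes the gap. The sketch below is a toy, in-memory illustration of that idea; `Cluster`, `reconcile` and the container IDs are hypothetical and do not correspond to any real orchestrator's API.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy in-memory cluster: container ID -> status ("running" or "failed")."""
    containers: dict = field(default_factory=dict)
    next_id: int = 0

    def start(self) -> str:
        cid = f"c{self.next_id}"
        self.next_id += 1
        self.containers[cid] = "running"
        return cid

    def stop(self, cid: str) -> None:
        del self.containers[cid]


def reconcile(cluster: Cluster, desired: int) -> None:
    """One pass of a hypothetical desired-state loop."""
    # Remove failed containers so that they can be replaced.
    for cid, status in list(cluster.containers.items()):
        if status == "failed":
            cluster.stop(cid)
    # Start containers until the desired count is reached (no-op if already there).
    for _ in range(desired - len(cluster.containers)):
        cluster.start()
    # Stop surplus containers if there are more than desired (scale in).
    for cid in list(cluster.containers)[desired:]:
        cluster.stop(cid)


cluster = Cluster()
reconcile(cluster, desired=3)          # starts c0, c1, c2
cluster.containers["c1"] = "failed"    # simulate a crash
reconcile(cluster, desired=3)          # removes c1, starts c3
print(sorted(cluster.containers))      # ['c0', 'c2', 'c3']
```

A real orchestrator runs such a loop continuously and reacts to events, but the principle is the same: users declare the desired state, and the system repeatedly drives the actual state towards it.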

Consider an IT infrastructure made up of Apache, PHP, Python, Ruby, Node.js and React apps running on a few containers connected to a replicated database. Such a setup does not need container orchestration. But as the application keeps growing, it demands ever more CPU, RAM and other resources. At that point, it needs to be split into smaller chunks, in the style of microservices, where each chunk is responsible for an independent task and is maintained by its own team. A caching layer and a queuing system are now also needed, to improve performance, synchronise tasks effectively and share data among the services.

This scenario brings several operational challenges, such as service discovery, load balancing, configuration management, storage management, regular system health checks, auto-configuration of nodes and zero-downtime deployments.
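Service discovery and load balancing, the first two challenges listed above, can be illustrated together. In this hypothetical sketch, a registry maps a service name to the endpoints of its containers and hands them out in round-robin order. Real orchestrators do this with DNS, virtual IPs and proxies; the class, service names and addresses here are illustrative only.

```python
import itertools
from collections import defaultdict


class ServiceRegistry:
    """Toy registry: service name -> container endpoints, served round-robin."""

    def __init__(self) -> None:
        self._endpoints = defaultdict(list)  # e.g. "cache" -> ["10.0.0.5:6379", ...]
        self._cursors = {}                   # service name -> itertools.cycle iterator

    def _reset_cursor(self, service: str) -> None:
        # Rebuild the rotation whenever the endpoint list changes.
        self._cursors[service] = itertools.cycle(list(self._endpoints[service]))

    def register(self, service: str, endpoint: str) -> None:
        self._endpoints[service].append(endpoint)
        self._reset_cursor(service)

    def deregister(self, service: str, endpoint: str) -> None:
        self._endpoints[service].remove(endpoint)
        if self._endpoints[service]:
            self._reset_cursor(service)
        else:
            # No endpoints left: forget the service entirely.
            del self._endpoints[service]
            del self._cursors[service]

    def resolve(self, service: str) -> str:
        """Service discovery plus load balancing: return the next endpoint."""
        if service not in self._cursors:
            raise LookupError(f"no endpoints registered for {service!r}")
        return next(self._cursors[service])


registry = ServiceRegistry()
registry.register("cache", "10.0.0.5:6379")
registry.register("cache", "10.0.0.6:6379")
print([registry.resolve("cache") for _ in range(3)])
# ['10.0.0.5:6379', '10.0.0.6:6379', '10.0.0.5:6379']
```

Callers only need the service name; as containers come and go, the registry's endpoint list changes, but the callers do not.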

Container orchestration provides effective solutions to these demands, and there are many players in the market, such as Kubernetes, AWS ECS and Docker Swarm. The rest of this article compares Kubernetes with AWS ECS to help IT sysadmins choose the better of the two.

Kubernetes

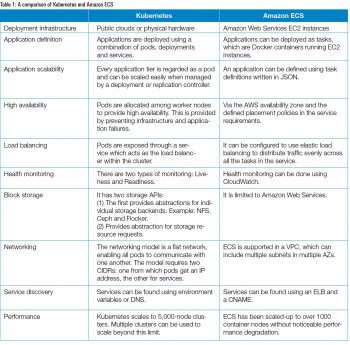

Kubernetes is an open source platform originally designed by Google and now maintained by the Cloud Native Computing Foundation. It is regarded as the most feature-rich and widely used orchestration framework for automating the deployment, scaling and operation of application containers across clusters of hosts, and it provides container-centric infrastructure. It works with a range of container tools, including Docker.

Kubernetes is designed to work in multiple environments, including bare metal, on-premises VMs and public clouds.

With Kubernetes, one can perform the following operations:

- Orchestrate containers across multiple hosts.

- Use hardware effectively, maximising the resources available to run all sorts of enterprise apps.

- Control and automate application deployments and updates.

- Mount and add storage to stateful apps.

- Scale containerised applications and their resources on the fly.

- Manage services so that deployed apps keep running in the way they were specified when deployed.

- Perform regular health checks and self-heal apps through automatic placement, restart, replication and scaling.
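Health checks in Kubernetes are configured as probes. For a liveness probe, the kubelet restarts the container after `failureThreshold` consecutive failed checks (3 by default). The sketch below simulates only that counting rule over a hypothetical sequence of probe results; the function name is illustrative, and it ignores `initialDelaySeconds`, `periodSeconds` and the restart back-off that the kubelet applies.

```python
from __future__ import annotations


def run_liveness_checks(results: list[bool], failure_threshold: int = 3) -> list[int]:
    """Return the probe rounds at which a restart would be triggered.

    Models only the consecutive-failure rule of a liveness probe; timing
    (initialDelaySeconds, periodSeconds) and restart back-off are ignored.
    """
    restarts = []
    consecutive_failures = 0
    for round_no, healthy in enumerate(results):
        if healthy:
            # Any successful probe resets the failure count.
            consecutive_failures = 0
            continue
        consecutive_failures += 1
        if consecutive_failures >= failure_threshold:
            restarts.append(round_no)
            # The restarted container starts with a clean slate.
            consecutive_failures = 0
    return restarts


# Two failures, a recovery, then three failures in a row: one restart, at round 6.
print(run_liveness_checks([True, False, False, True, False, False, False]))  # [6]
```

Requiring several consecutive failures, rather than one, keeps a single slow response from restarting an otherwise healthy container.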

Kubernetes architecture

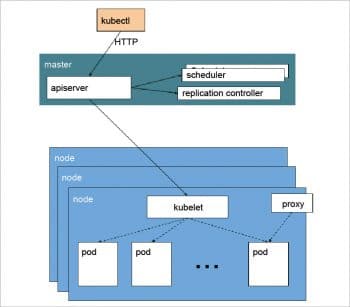

Kubernetes follows a master/worker architecture: a control plane (the master) manages a set of worker nodes.

The following are the components of Kubernetes’ architecture.

- Kubernetes master: This is the main controlling unit of the cluster. It manages workloads and directs communication across the entire system, and it controls all nodes connected to the cluster.

- API server: This key component serves the Kubernetes API using JSON over HTTP, and provides both the internal and the external interfaces to Kubernetes.

- Scheduler: This assigns pods to nodes. It tracks the resource utilisation of every node to ensure that no workload is scheduled in excess of the available resources, and it takes into account resource requirements, availability, and a variety of user-provided constraints and policy directives, such as QoS and data locality.

- Replication controller: This ensures that the specified number of identical copies of a pod is running on the cluster at all times.

- Nodes: These are the machines that run the tasks assigned to them. The Kubernetes master controls all nodes connected to the cluster.

- Kubelet: This agent runs on each node and is responsible for the node's state and health. It starts, stops and maintains application containers as directed by the control plane.

- Proxy: This implements the network proxy and load balancer, supporting the service abstraction along with other networking operations. It routes traffic to the appropriate container based on the IP address and port number of the incoming request.

- Pod: This is a group of one or more containers deployed on a single node. All containers in a pod share an IP address, IPC namespace, host name and other resources. Pods abstract network and storage away from the underlying containers, which makes it easier to move containers around the cluster.
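The Kubernetes scheduler works in two broad phases: it first filters out nodes that cannot run a pod, then scores the remaining nodes and picks the best one. The Python sketch below mimics that shape under simplifying assumptions: nodes are described only by free CPU (millicores), free memory (MiB) and labels, a `node_selector` stands in for user-provided constraints, and the scoring rule (prefer the node with the most CPU, then memory, left over) is an illustrative heuristic rather than the scheduler's actual plugin set. All names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu_m: int                      # free CPU in millicores
    free_mem_mi: int                     # free memory in MiB
    labels: dict[str, str] = field(default_factory=dict)


def schedule(nodes: list[Node], cpu_m: int, mem_mi: int,
             node_selector: dict[str, str] | None = None) -> str | None:
    """Pick a node for a pod: filter infeasible nodes, then score the rest."""
    node_selector = node_selector or {}
    # Filtering phase: enough free resources and all required labels present.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= cpu_m
        and n.free_mem_mi >= mem_mi
        and all(n.labels.get(k) == v for k, v in node_selector.items())
    ]
    if not feasible:
        return None  # no node fits; in Kubernetes the pod would stay Pending
    # Scoring phase (illustrative): most CPU left over, then most memory.
    best = max(feasible, key=lambda n: (n.free_cpu_m - cpu_m, n.free_mem_mi - mem_mi))
    return best.name


nodes = [
    Node("node-a", 500, 1024, {"disk": "hdd"}),
    Node("node-b", 2000, 4096, {"disk": "ssd"}),
    Node("node-c", 1500, 8192, {"disk": "ssd"}),
]
print(schedule(nodes, cpu_m=1000, mem_mi=2048))                                 # node-b
print(schedule(nodes, cpu_m=1000, mem_mi=2048, node_selector={"disk": "hdd"}))  # None
```

Separating filtering from scoring keeps hard constraints (the pod must fit) apart from soft preferences (which fitting node is best), which is also why the real scheduler makes both phases pluggable.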

Amazon ECS (Elastic Container Service)

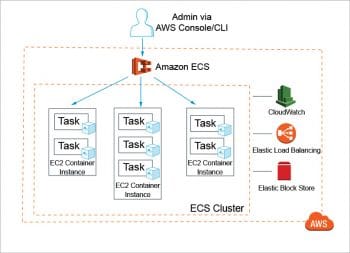

Amazon Elastic Container Service (Amazon ECS) is a highly scalable, fast container management service provided by Amazon Web Services (AWS). It supports Docker containers and allows users to run applications on a cluster of Amazon EC2 instances, eliminating the need for end users to install, operate and scale their own cluster management infrastructure.

It allows users to launch and stop container-based applications with simple API calls, and to retrieve the state of the cluster from a centralised service. Amazon ECS also schedules the placement of containers across the cluster based on resource needs, isolation policies and availability requirements.
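ECS exposes placement strategies such as `binpack` (place tasks on the instance with the least available CPU or memory that still fits, to minimise the number of instances in use) and `spread` (distribute tasks evenly, for example across Availability Zones). The following sketch imitates those two behaviours for memory and zones only. The `Instance` class, instance IDs and zone names are hypothetical, and this is not how the ECS scheduler is implemented internally.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Instance:
    instance_id: str
    availability_zone: str
    free_memory_mib: int


def place_task(instances: list[Instance], tasks_per_zone: Counter,
               memory_mib: int, strategy: str) -> str:
    """Choose a container instance for one task (toy model, memory only)."""
    candidates = [i for i in instances if i.free_memory_mib >= memory_mib]
    if not candidates:
        raise RuntimeError("no container instance has enough free memory")
    if strategy == "binpack":
        # Least free memory that still fits: keeps fewer instances busy.
        chosen = min(candidates, key=lambda i: i.free_memory_mib)
    elif strategy == "spread":
        # Zone currently running the fewest tasks: spreads risk across zones.
        chosen = min(candidates, key=lambda i: tasks_per_zone[i.availability_zone])
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return chosen.instance_id


instances = [
    Instance("i-aaa", "zone-a", 4096),
    Instance("i-bbb", "zone-b", 1024),
    Instance("i-ccc", "zone-b", 2048),
]
tasks_per_zone = Counter({"zone-a": 1, "zone-b": 3})
print(place_task(instances, tasks_per_zone, 512, "binpack"))  # i-bbb
print(place_task(instances, tasks_per_zone, 512, "spread"))   # i-aaa
```

The two strategies pull in opposite directions: `binpack` favours cost by packing tasks tightly, while `spread` favours availability by keeping tasks apart.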

Amazon ECS can be used to create a consistent build and deployment experience, to manage and scale batch and extract-transform-load (ETL) workloads, and to build sophisticated application architectures on a microservices model. Its main components are listed below.

- Task definition: The task definition is a text file in JSON format that describes the containers that together form an application. It specifies various parameters for the application, such as container image repositories, ports and storage.

- Tasks and the scheduler: A task is an instance of a task definition, created at runtime on a container instance within the cluster. The task scheduler is responsible for placing tasks on container instances.

- Service: A service is a group of tasks that is created and maintained as instances of a task definition. The scheduler maintains the desired count of tasks in the service. A service can optionally run behind a load balancer, which distributes traffic across the tasks that are associated with the service.

- Cluster: A cluster is a logical grouping of EC2 instances on which ECS tasks are run.

- Container agent: The container agent runs on each EC2 instance within an ECS cluster. The agent sends telemetry data about the instance’s tasks and resource utilisation to Amazon ECS. It will also start and stop tasks based on requests from ECS.

- Image repository: Amazon ECS downloads container images from container registries, which may exist within or outside of AWS, such as an accessible private Docker registry or Docker Hub. Figure 2 highlights the architecture of Amazon ECS.
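Because a task definition is just JSON, it can be generated programmatically. The sketch below builds a minimal single-container task definition using real ECS field names (`family`, `containerDefinitions`, `portMappings` and so on); the function name, family name, image and resource values are illustrative assumptions.

```python
import json


def make_task_definition(family: str, image: str, container_port: int) -> dict:
    """Build a minimal ECS task definition as a dict (illustrative values)."""
    return {
        "family": family,
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "cpu": 256,          # CPU units (1024 units = one vCPU)
                "memory": 512,       # hard memory limit in MiB
                "essential": True,   # if this container stops, the task stops
                "portMappings": [
                    {
                        "containerPort": container_port,
                        "hostPort": container_port,
                        "protocol": "tcp",
                    }
                ],
            }
        ],
    }


task_def = make_task_definition("web-app", "nginx:1.25", 80)
print(json.dumps(task_def, indent=2))
```

If the printed JSON were saved to a file, say `taskdef.json` (a hypothetical name), it could be registered with `aws ecs register-task-definition --cli-input-json file://taskdef.json`, after which a service can run tasks from it.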

The AWS services that are used with ECS are:

- Elastic Load Balancing: Routes traffic to containers.

- Amazon Elastic Block Store (EBS): Provides persistent block storage for ECS tasks.

- CloudWatch: Collects metrics from ECS.

- Amazon Virtual Private Cloud (VPC): The ECS cluster runs within a virtual private cloud.

- CloudTrail: Logs ECS API calls.

The advantages of Kubernetes over Amazon ECS are:

- Can be deployed on physical hardware, on public clouds or even on an end user's machine.

- Supports a wide range of storage options, such as SANs and public cloud storage.

- Is backed by both Google and Red Hat.

- Has a large, active community.

The final verdict

Kubernetes is generally regarded as the better container orchestration tool of the two: it can be deployed anywhere, and it offers more hybrid-friendly features for scalability and health checks than Amazon ECS.