ChatGPT has taken the world by storm. But there is a lot more happening in the world of AI. We take a look at that, and also peek into the strengths and a few shortcomings of ChatGPT-3.

Generative AI refers to the use of artificial intelligence algorithms to generate new data, text, images, or other content that is similar to existing data or content. It involves the use of machine learning techniques to create models that can generate new data based on patterns found in existing data.
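Real generative models such as GPT rely on large neural networks, but the core idea of learning patterns from existing text and then sampling new text from those patterns can be illustrated with a toy word-level bigram model. This is only a minimal sketch; the corpus and the function names are made up for the example.

```python
import random
from collections import defaultdict


def train_bigrams(text: str) -> dict[str, list[str]]:
    """Record, for every word, the words that follow it in the training text."""
    words = text.split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(model: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Generate new text by repeatedly sampling a word that followed the previous one."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        choices = model.get(output[-1])
        if not choices:  # no known continuation: stop early
            break
        output.append(rng.choice(choices))
    return output


corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 8)))
```

Every word pair in the output also appears in the training text, yet the sentence as a whole can be new. That is the "similar to existing data" property described above, on a very small scale.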

OpenAI developed a generative AI architecture called the generative pre-trained transformer (GPT). ChatGPT, referred to in this article as ChatGPT-3 because it is built on OpenAI’s GPT-3.5 series of models, is an example of a generative AI model used for natural language processing tasks such as chatbots, language translation, and text summarisation. It is a deep learning model capable of generating human-like text responses based on the input it receives.

The model is trained on a massive data set of text from the internet, which includes a wide range of topics and writing styles. This allows it to generate responses that are contextually relevant and stylistically appropriate for a given conversation. One of the key benefits of generative AI models like ChatGPT-3 is that they can be improved over time: feedback from users and additional data can be used to fine-tune later versions of the model, making its responses more accurate and effective. The deployed model does not, however, learn or update itself in the middle of a conversation.

However, there are also concerns about the potential for generative AI models to generate biased or misleading content, particularly when they are used to create news articles or other forms of content that could impact public opinion.

Generative AI has the potential to revolutionise many industries and applications, including chatbots, content creation, and even scientific research. As technology continues to advance and data becomes more widely available, it is likely that we will see even more powerful and sophisticated generative AI models like ChatGPT-3 emerge in the coming years.

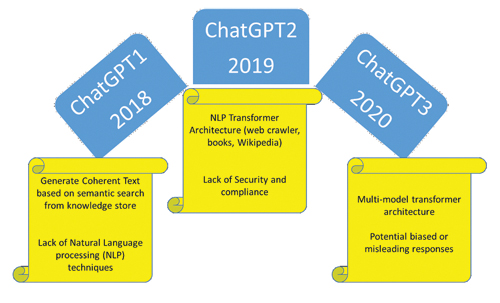

History and development of ChatGPT solutions

ChatGPT is a chatbot built on a large language model developed by OpenAI that utilises the GPT architecture. It was released to the public as a research preview in November 2022 and has since become one of the most widely used natural language processing tools in the world.

GPT-1 was the first model in the GPT series and was introduced by OpenAI in 2018. It was pre-trained on BooksCorpus, a data set of roughly 7,000 unpublished books, and was capable of generating coherent text based on a given prompt. However, with about 117 million parameters and a relatively small training data set, its performance was limited compared with later models.

In 2019, OpenAI released GPT-2, which was a significant improvement over GPT-1 in terms of performance and capabilities. GPT-2 was trained on WebText, a much larger data set of text from about 8 million web pages, and with 1.5 billion parameters it was more than ten times the size of GPT-1. Like GPT-1, it used the Transformer architecture; its gains came largely from this increase in scale, which enabled it to generate more coherent and contextually relevant text.

However, due to concerns over the potential misuse of GPT-2 for malicious purposes, such as generating fake news or propaganda, OpenAI initially decided to withhold the full version of the model from the public. Instead, they released only a smaller, less powerful version of the model, known as GPT-2 117M.

In November 2022, OpenAI released ChatGPT, which is built on the same transformer architecture as its predecessors but is specifically designed for conversational applications. It was fine-tuned from a model in the GPT-3.5 series, which builds on GPT-3 (made available through OpenAI’s API in June 2020), and like them it was pre-trained on a massive data set of text drawn largely from the internet. This enables it to generate human-like responses to a wide variety of prompts and questions.

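One of the key innovations of ChatGPT is the way it was trained for dialogue. After pre-training, the model was fine-tuned on example conversations written by human trainers and then refined further with reinforcement learning with human feedback (RLHF), in which people ranked alternative responses to steer the model towards more helpful answers. The version released in 2022 works with text only; it does not generate images, audio, or video.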

In addition to its technical capabilities, ChatGPT also represents a significant milestone in the history of artificial intelligence and natural language processing. It has demonstrated the potential for machines to generate human-like text and engage in meaningful conversations with humans.

However, as with any powerful technology, there are also concerns about the potential misuse of ChatGPT. Its ability to generate convincing fake news, propaganda, or other types of malicious content could be used to manipulate public opinion or spread disinformation.

Reinforcement learning with human feedback

Reinforcement learning with human feedback (RLHF) is a powerful technique in the field of artificial intelligence (AI) that combines human judgement with machine learning. In this approach, the machine learns from human feedback to refine its decision-making capabilities and improve its overall performance. RLHF has numerous applications across a wide range of domains, including robotics, gaming, finance, and healthcare.

RLHF builds on reinforcement learning (RL), a type of machine learning that enables an agent to learn from its own experience through trial and error. The agent is rewarded or penalised based on its actions in a given environment, with the goal of maximising its cumulative reward over time, and it uses this feedback to adjust its behaviour and make better decisions in the future.
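The trial-and-error loop of plain reinforcement learning, before any human feedback is involved, can be sketched with an epsilon-greedy agent on a multi-armed bandit, the simplest RL setting. The agent occasionally tries a random action (exploration) and otherwise picks the action it currently believes pays best (exploitation). The reward probabilities and the `epsilon` value below are arbitrary assumptions chosen for illustration.

```python
import random


def run_bandit(true_rewards: list[float], steps: int = 2000, epsilon: float = 0.1, seed: int = 0):
    """Epsilon-greedy agent that learns by trial and error which action pays best."""
    rng = random.Random(seed)
    n_actions = len(true_rewards)
    estimates = [0.0] * n_actions  # agent's running estimate of each action's reward
    counts = [0] * n_actions       # how often each action has been tried
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        # The environment rewards (1) or penalises (0) the action at random,
        # succeeding with probability true_rewards[action].
        reward = 1.0 if rng.random() < true_rewards[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate towards the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, counts, total_reward


estimates, counts, total = run_bandit([0.2, 0.5, 0.8])
print("most-chosen action:", counts.index(max(counts)))
```

Over many steps the agent settles on the action with the highest reward probability, which is the "maximising cumulative reward" behaviour described above.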

However, in many real-world applications, it can be difficult to define the rewards and penalties that the agent should receive. In these cases, RLHF lets humans provide feedback on the agent’s behaviour, helping to guide its learning and improve its performance. This feedback can take many forms, including explicit rewards or penalties, rankings of alternative outputs, natural language instructions, or even implicit signals such as facial expressions or brain activity.
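For language models such as ChatGPT, OpenAI has described collecting human rankings of alternative model responses and using them to train a reward model, which then supplies the reward signal for reinforcement learning. The sketch below shows that idea under strong simplifying assumptions: each response is reduced to a hypothetical hand-made feature vector (real systems use a neural network over the full text), and a linear reward model is fitted to pairwise preferences with the Bradley-Terry loss, which treats the probability that a human prefers response A over B as the sigmoid of the difference in their rewards.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def reward(w: list[float], x: list[float]) -> float:
    """Linear reward model: a score for a response described by features x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(preferences, n_features: int, lr: float = 0.1, epochs: int = 200) -> list[float]:
    """Fit w so that responses humans preferred receive higher rewards.

    preferences: list of (chosen_features, rejected_features) pairs.
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in preferences:
            # Probability the model assigns to the human's choice.
            p = sigmoid(reward(w, chosen) - reward(w, rejected))
            # Gradient ascent on log p: raise the chosen score, lower the rejected one.
            for i in range(n_features):
                w[i] += lr * (1.0 - p) * (chosen[i] - rejected[i])
    return w


# Hypothetical features: [is_helpful, is_rude]; each pair is (chosen, rejected).
preferences = [([1, 0], [0, 0]), ([1, 0], [1, 1]), ([0, 0], [0, 1])]
w = train_reward_model(preferences, n_features=2)
print(w)
```

The learned weights reward helpfulness and penalise rudeness purely from which response people picked, without anyone writing the reward function by hand.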

One key advantage of RLHF is its ability to leverage the unique strengths of both humans and machines. Humans can provide rich, nuanced feedback that is difficult for machines to generate on their own, while machines can process vast amounts of data and make decisions at speeds far beyond what humans are capable of. By combining these two approaches, RLHF can accelerate the learning process and achieve higher levels of performance than either approach alone.

There are several technical challenges associated with RLHF, including how to integrate human feedback into the learning process, how to handle noisy or inconsistent feedback, and how to ensure that the agent does not become overly dependent on human guidance. One common approach is to use a hybrid reinforcement learning model that incorporates both human feedback and traditional RL techniques, such as Q-learning or policy gradient methods.
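A minimal sketch of such a hybrid model, using tabular Q-learning on a hypothetical five-cell corridor where the agent must walk right to reach a goal. The `human_feedback` function is a hypothetical stand-in for a person approving or disapproving each move, and the weight `beta` that blends it with the environment’s own reward is an assumption; real human feedback is noisier and far sparser than this.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # move left or right


def env_step(state: int, action: int) -> tuple[int, float, bool]:
    """Environment: reward 1.0 only when the goal cell is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def human_feedback(state: int, action: int) -> float:
    """Hypothetical human trainer who approves of moves towards the goal."""
    return 0.1 if action == +1 else -0.1


def q_learning(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, beta: float = 1.0, seed: int = 0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                a = max(range(2), key=lambda i: q[state][i])  # exploit
            nxt, r_env, done = env_step(state, ACTIONS[a])
            # Hybrid reward: environment reward plus weighted human feedback.
            r = r_env + beta * human_feedback(state, ACTIONS[a])
            # Standard Q-learning update towards the bootstrapped target.
            target = r if done else r + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


q = q_learning()
policy = ["right" if row[1] > row[0] else "left" for row in q[:-1]]
print(policy)
```

Setting `beta` to 0 recovers ordinary Q-learning; larger values make the agent lean more on human guidance, which is exactly the over-dependence trade-off mentioned above.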

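To implement RLHF, developers typically combine general-purpose deep learning frameworks such as TensorFlow, Keras, and PyTorch, which are used to build and train the models, with toolkits such as OpenAI Gym (now maintained as Gymnasium), which provides standard environments for training and evaluating reinforcement learning agents. Together these offer a rich set of APIs and libraries for building RLHF systems, training and evaluating them, and deploying them in real-world applications.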

RLHF has been applied or explored in several domains. In robotics, it can help train robots to perform complex tasks such as grasping and manipulating objects, navigation, and control. In gaming, it can be used to develop more intelligent and responsive game agents that learn from player behaviour and adapt to changing game environments. In finance, it has been explored for portfolio optimisation, fraud detection, and risk management. In healthcare, it has been explored for the diagnosis and treatment of diseases, prediction of patient outcomes, and drug discovery.

RLHF is a powerful technique that combines the strengths of human and machine learning to achieve higher levels of performance in a wide range of applications. With the continued development of new tools and frameworks, RLHF is poised to become an increasingly important approach to AI in the years ahead.

Architecture of ChatGPT-3

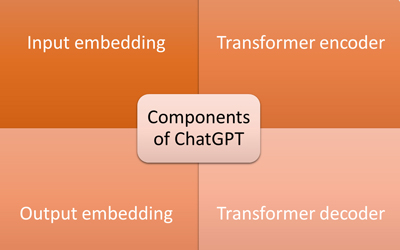

ChatGPT-3 has a complex architecture that involves several layers of artificial neural networks. It is based on the Transformer architecture, which was introduced in a 2017 paper by Vaswani et al. This architecture is a type of neural network that is designed to process sequential data, such as natural language text. The main components of the ChatGPT-3 architecture include:

Input embedding: The input text is split into tokens, and each token is converted into a vector that can be processed by the neural network. These vectors are called embeddings; a positional embedding is added to each one so that the model knows the order of the tokens.

Transformer decoder stack: The embeddings are passed through a stack of identical transformer decoder layers. Each layer applies masked (causal) self-attention, in which every token can attend only to the tokens before it, followed by a feed-forward network, producing a sequence of hidden representations.

Output layer: The final hidden representation is projected back onto the vocabulary to give a probability for every possible next token. One token is chosen, appended to the input, and the process repeats until the response is complete.

Unlike the original Transformer of Vaswani et al., GPT models have no separate encoder: they are decoder-only. The number of decoder layers varies with the size of the model; the largest GPT-3 model, with 175 billion parameters, has 96 layers.
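GPT models are decoder-only, and the step that lets them generate text left to right is masked (causal) self-attention. A single-head, pure-Python sketch with made-up 2-dimensional vectors; in a real model the queries, keys, and values are produced by learned projection matrices, and there are many heads and far larger dimensions.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: lists of vectors, one per token. Token i may only attend to
    tokens 0..i, so no position can see tokens that come after it.
    """
    d = len(q[0])
    outputs, weights = [], []
    for i in range(len(q)):
        # Scores against the current and earlier positions only (the causal mask).
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in range(i + 1)]
        w = softmax(scores)
        weights.append(w + [0.0] * (len(q) - i - 1))  # future positions get weight 0
        # Output for token i: attention-weighted mix of the visible value vectors.
        outputs.append([sum(w[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))])
    return outputs, weights


# Three toy tokens with arbitrary 2-dimensional query/key/value vectors.
q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out, attn = causal_self_attention(q, k, v)
for row in attn:
    print([round(x, 3) for x in row])
```

The printed attention matrix is lower-triangular: the first token attends only to itself, and the last token can attend to all three.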

Overall, the architecture of ChatGPT-3 is highly complex and requires a significant amount of computational resources to train and run. However, this complexity allows the model to generate highly realistic and coherent text, making it a powerful tool for natural language processing tasks.
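To give a sense of the scale involved, the 175-billion figure can be roughly reproduced from the configuration published for the largest GPT-3 model (96 layers, hidden size 12,288, a 50,257-token vocabulary and a 2,048-token context), using the common approximation of about 12 × d² weights per layer. This is a back-of-the-envelope sketch, not an exact count: biases and layer-norm parameters are ignored.

```python
def approx_gpt_params(n_layers: int, d_model: int, vocab_size: int, context_len: int) -> int:
    """Rough parameter count for a GPT-style decoder-only Transformer.

    Each layer has about 4*d^2 weights in attention (query, key, value and
    output projections) and 8*d^2 in the feed-forward block (d -> 4d -> d).
    Biases and layer norms are ignored.
    """
    per_layer = 12 * d_model ** 2
    token_embeddings = vocab_size * d_model
    position_embeddings = context_len * d_model
    return n_layers * per_layer + token_embeddings + position_embeddings


total = approx_gpt_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)
print(f"{total / 1e9:.1f} billion parameters")  # prints: 174.6 billion parameters
```

Almost all of the parameters sit in the 96 decoder layers; the embeddings account for well under one per cent.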

Generative AI and RLHF

Generative AI, reinforcement learning with human feedback (RLHF), and generative adversarial networks (GANs) are all related to the field of AI. Generative AI is a subset of AI that focuses on creating new data, such as images, videos, and text, using machine learning algorithms. These algorithms can create original content by learning patterns and structures from existing data.

RLHF is a subfield of reinforcement learning that uses human feedback to train AI models. RLHF is a more interactive form of machine learning, where the AI model is given feedback from human experts to improve its performance.

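GANs are a type of generative AI model that uses two neural networks trained against each other. The first network, the generator, creates new data, while the second, the discriminator, tries to tell the generated data apart from real data. As the discriminator gets better at spotting fakes, the generator is pushed to produce more realistic output.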
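In the original formulation by Goodfellow et al. (2014), this contest is written as a minimax game. The discriminator D outputs the probability that its input is real; it is trained to score real samples x highly and generated samples G(z) low, where z is random noise, while the generator G is trained to make D(G(z)) as large as possible:

```latex
\min_{G}\,\max_{D}\;
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\!\left[\log\bigl(1 - D(G(z))\bigr)\right]
```

The first term rewards the discriminator for recognising real data; the second rewards it for rejecting generated data, and it is this second term that the generator works to push down.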

ChatGPT-3 is a state-of-the-art generative AI model that uses a large neural network to generate text based on the input it receives. It can create responses to questions, write essays, and even generate code. The model was trained on a massive amount of data, and has been praised for its ability to generate coherent and useful text.

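RLHF is already part of how ChatGPT-3 was trained, and it can continue to improve the model: human feedback on its generated text is used to fine-tune later versions so that its responses improve over time. GANs have been most successful at generating images. They are harder to apply to text, because text is made up of discrete tokens, and ChatGPT-3 itself is not trained as a GAN, although researchers have explored adversarial training as a way to make generated text more realistic and diverse.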

Generative AI, RLHF, GANs, and ChatGPT-3 can be used together to create more advanced and sophisticated AI models.

Risks in Generative AI and ChatGPT-3

Disruption risk: AI is evolving rapidly and has the potential to disrupt existing business models and diverse markets on a very large scale. Jobs ranging from trucking and customer care to high-end roles such as financial trading analysts, or medical roles such as radiologists, are at risk. A March 2023 report from Goldman Sachs estimated that ChatGPT-like generative AI could expose the equivalent of as many as 300 million full-time jobs to automation; exposure does not necessarily mean elimination, since many jobs are expected to be partly automated rather than replaced. Other estimates suggest that around 19 per cent of workers could see at least half of their tasks affected. Either way, over the next few years the job market is likely to take on a different face, with entirely new skill requirements.

Cyber security risk: Cyber attacks increased by a massive 38 per cent in 2022, and some projections suggest the rate of increase could reach 44-48 per cent by the end of 2024. AI can heighten this risk, for example by making phishing emails more convincing and easier to produce at scale, while the constant evolution of deepfake and voice-cloning technologies adds to these risks every day.

Reputation risk: After the launch of ChatGPT, Google and other IT giants entered the fray with their own AI products, such as Google Bard. However, AI does not always give the expected results, which puts the reputation of the companies deploying it at stake. According to recent reports by Forrester, 75 per cent of customers face issues in real-time interactions with chatbots, and 30 per cent of businesses have moved to other technologies rather than AI-driven solutions, which are still at a very young stage and prone to errors.

Risk of legal consequences: Governments of many countries are drafting laws to tackle the diverse issues raised by AI. In January 2023, the US-based National Institute of Standards and Technology (NIST) released an AI Risk Management Framework to help corporate and other leaders handle risks that originate from AI. In addition, the EU has proposed an AI Act that would ban certain uses of AI, such as some forms of real-time biometric identification in public spaces, and require deepfakes to be disclosed as such. Many more regulations are expected in the coming months to safeguard citizens and the functioning of governments.

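Operational risk: One of the most debated risks of ChatGPT and generative AI is operational risk, such as confidential data leaking through the prompts that employees submit. In 2023, tech giant Samsung banned its employees from using ChatGPT on company devices after some of its trade secrets, including internal source code, were leaked this way.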

ChatGPT is a good example of advanced AI, but it is still in its testing phase, and a large number of users are reporting shortcomings.

Software development using ChatGPT-3

ChatGPT is an extremely powerful tool with the potential to transform many fields. To leverage its power, we need to integrate it into everyday workflows, and software development is one of the best places to start.

With a proper understanding of its strengths and limits, developers can use ChatGPT to improve the software development life cycle: to sharpen their coding skills, catch errors earlier, and cut the time it takes to deliver the final product to customers. Its output can still contain mistakes, so generated code needs to be reviewed and tested like any other code.

To build ChatGPT into their own tools, developers can use OpenAI’s API, while libraries such as Hugging Face’s Transformers or spaCy can handle related natural language processing tasks with other models.
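As a minimal sketch of the first option, the snippet below builds a request for OpenAI’s Chat Completions HTTP endpoint using only Python’s standard library rather than the official `openai` package. The model name `gpt-3.5-turbo` and the `OPENAI_API_KEY` environment variable are assumptions for illustration; available models and request details are defined by OpenAI’s API documentation and may change.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for a single user message."""
    body = json.dumps({
        "model": model,  # assumed model name; check OpenAI's current model list
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def ask_chatgpt(prompt: str) -> str:
    """Send the prompt and return the first reply (needs network access and OPENAI_API_KEY)."""
    req = build_chat_request(prompt, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


# Example (requires network access and an API key):
# print(ask_chatgpt("Write a Python function that reverses a string."))
```

Keeping request construction separate from sending makes it easy to inspect or log exactly what is sent to the API, which also matters for the data-leakage risk discussed earlier.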

ChatGPT can revolutionise software development in the following ways.

Code completion and optimisation: Code completion is one of the most useful applications of ChatGPT in software development. It can suggest code, or write complete code from a plain-language description of the requirement and the target language. Developers can use it for:

a. Autocomplete: ChatGPT can complete a partly written line of code or function definition, including its arguments and return types.

b. Predictive code: It can predict what a developer is likely to write next and, given the basic idea, generate the rest of the code.

c. Translation: Developers can ask ChatGPT to convert code from one programming language to another, although the result needs to be checked and tested.

d. Optimisation: ChatGPT can point out errors and inefficiencies in code, suggest improvements, and propose a corrected version; its suggestions should still be reviewed, because it can miss bugs or introduce new ones.

- Example prompt: “Given the code snippet, generate the next line of code that completes the functionality.”

Software documentation: Writing documentation is a long and tedious process, but with proper guidance ChatGPT can produce simple, clear, concise, and audience-specific documentation.

- Example prompt: “Given a set of requirements, generate a technical specification document that outlines the software solution and its components.”

Bug detection and automated testing: ChatGPT can help improve code quality considerably and support automated testing.

a. Bug detection: ChatGPT can help identify bugs by analysing the code it is given and flagging potential issues at specific lines, although it can both miss real bugs and report problems that are not there.

b. Automated testing: Testing is time-consuming and never-ending. ChatGPT can help developers design test cases and generate tests covering a wide range of scenarios.

- Example prompts: “Given the expected output and input parameters, detect any differences in the actual output and provide possible reasons for the error.”

- “Given the code block, generate an automated test that checks for expected output and provides feedback for any unexpected behaviour.”
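To make the second prompt concrete, here is the kind of test suite it might produce for a small function. Both the `average` function and its tests are hypothetical illustrations, not actual ChatGPT output; generated tests should be read and run before they are trusted, since a model can just as easily encode a wrong expectation.

```python
import unittest


def average(values: list[float]) -> float:
    """Hypothetical function under test: the mean of a non-empty list."""
    if not values:
        raise ValueError("average() of an empty list")
    return sum(values) / len(values)


class TestAverage(unittest.TestCase):
    def test_typical_input(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_single_value(self):
        self.assertEqual(average([5]), 5)

    def test_floats(self):
        # Floating-point sums are inexact, so compare approximately.
        self.assertAlmostEqual(average([0.1, 0.2]), 0.15)

    def test_empty_list_raises(self):
        with self.assertRaises(ValueError):
            average([])


# Save as test_average.py and run: python -m unittest test_average.py
```

Edge cases such as the empty list are where generated tests earn their keep, because they force a decision about behaviour that the original code may have left undefined.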

Natural language processing: ChatGPT is built for natural language processing. It analyses the text that users enter and responds accordingly. It has the potential to improve accuracy in NLP applications, generating relevant responses and carrying out tasks such as text classification and language translation.

- Example prompts: “Given a product review, determine the overall sentiment of the review as positive, negative, or neutral.”

- “Generate a list of possible responses to a customer inquiry based on previous interactions.”
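In an application, the sentiment prompt would typically be sent to the model once per review, and the free-text reply mapped back onto a fixed set of labels. A minimal sketch of the prompt-building and parsing steps, assuming the reply arrives as plain text; the actual API call is left out so the sketch stays self-contained, and the helper names are hypothetical.

```python
LABELS = ("positive", "negative", "neutral")


def sentiment_prompt(review: str) -> str:
    """Wrap the example prompt so the reply is constrained to a single label."""
    return (
        "Given a product review, determine the overall sentiment of the review "
        "as positive, negative, or neutral. Reply with exactly one word.\n\n"
        f"Review: {review}"
    )


def parse_sentiment(reply: str) -> str:
    """Map the model's reply onto one of the allowed labels.

    Models do not always follow formatting instructions, so anything
    unrecognised is returned as 'unknown' rather than guessed.
    """
    word = reply.strip().lower().rstrip(".!")
    return word if word in LABELS else "unknown"


print(sentiment_prompt("Great battery life, but the screen scratches easily."))
print(parse_sentiment(" Positive.\n"))
```

Returning `unknown` instead of forcing a label keeps malformed replies visible, so they can be retried or reviewed rather than silently skewing the results.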