Explore the surprising capabilities of large language models as they evolve to approach problems more thoughtfully, mirroring aspects of human cognition.

When confronted with a problem, the brain can proceed in one of two ways: quickly and intuitively, or slowly and methodically. These two modes of processing are known as System 1 and System 2 or, in the words of Nobel Prize-winning psychologist Daniel Kahneman, “fast” and “slow” thinking. Large language models such as ChatGPT operate quickly by default. When presented with a question, they tend to answer immediately, and those quick answers are not always accurate. This is characteristic of fast, System 1-type processing.

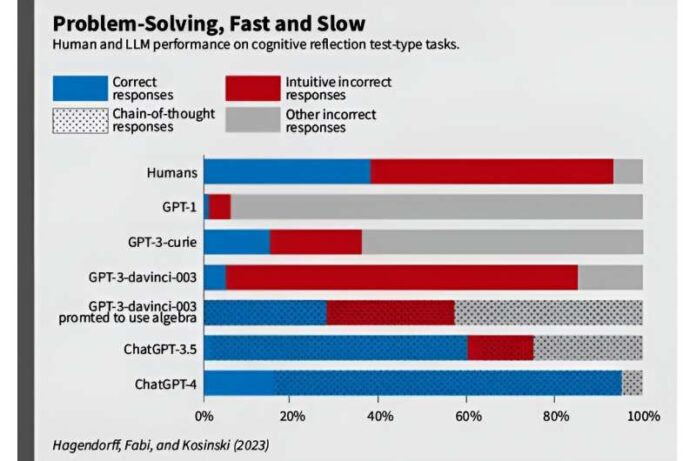

As these models continue to develop, they may adopt a more deliberate approach, breaking problems down into steps and thereby reducing the inaccuracies that hasty responses can introduce.

Stanford psychologist Michal Kosinski and his two collaborators, philosopher Thilo Hagendorff and psychologist Sarah Fabi, subjected 10 generations of OpenAI LLMs to a series of tasks designed to elicit rapid System 1 responses. Initially, the team set out to determine whether these LLMs would display the same cognitive biases as people who rely on automatic thinking. They noted that early models such as GPT-1 and GPT-2 “struggled to grasp the situation,” and that their responses became “increasingly System 1-like” as the tests grew more intricate. “Their responses closely resembled those of humans,” Kosinski added.

It came as no surprise that large language models (LLMs), designed primarily to predict text sequences, could not reason independently. These models have no internal reasoning mechanism; they cannot spontaneously slow down, reflect, or examine their own assumptions. All they can do is intuit the next word in a sentence. However, the researchers made an unexpected discovery with later iterations of GPT and ChatGPT, which took a more strategic and thoughtful approach to the problems they were given. GPT-3 suddenly exhibited the ability to tackle these tasks, almost instantaneously and without retraining or developing new neural connections. This underscores that these models can learn rapidly, much as humans do.

Slow Down, You’re Moving Too Fast

Fewer than 40% of the human participants who attempted these types of problems answered them correctly. The earlier generative pre-trained transformer (GPT) models that preceded ChatGPT fared even worse. GPT-3, however, was able to reach the correct solutions by using more elaborate “chain-of-thought” reasoning when the researchers gave it positive reinforcement and feedback.

When presented with the tasks alone, GPT-3 solved fewer than 5% of them correctly and never used step-by-step reasoning. But when given specific guidance, such as an instruction to use algebra to solve the problem, it consistently reasoned step by step and reached an accuracy of approximately 30%, a remarkable 500% increase. The share of System 1 responses fell from around 80% to approximately 25%, indicating that even when GPT-3 made an error, it was less prone to intuitive mistakes. GPT-4, when using chain-of-thought reasoning, reached the correct answer in nearly 80% of these test scenarios. The researchers also found that even when ChatGPT was prevented from engaging in System 2 reasoning, it still outperformed humans. Kosinski suggests this is evidence that the intuitive capabilities of LLMs might exceed those of humans.
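A quick worked check of the GPT-3 figures reported in this section follows. It takes 5% as the baseline accuracy; because the baseline is reported only as “fewer than 5%,” the true relative gain is at least this large. The second line computes the corresponding relative drop in System 1 responses from the reported 80% and 25%.

$$
\begin{aligned}
\text{accuracy gain} &= \frac{30\% - 5\%}{5\%} = \frac{25}{5} = 5 = 500\% \\
\text{drop in System 1 responses} &= \frac{80\% - 25\%}{80\%} = \frac{55}{80} \approx 69\%
\end{aligned}
$$

In other words, with guidance GPT-3 answered roughly six times as many problems correctly, and the share of its System 1-type responses fell by nearly 70%.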

AI’s Unique Cognitive Abilities

The neural networks underpinning these language models, which resemble human brains in certain respects, keep displaying emergent properties that go beyond their initial training. These models are not thinking; nonetheless, the researchers ask: if the capacity for reasoning can emerge spontaneously in these models, why couldn’t other abilities emerge in the same way? While AI often produces outputs that resemble those of humans, it typically operates on fundamentally different principles.