Machine learning is everywhere these days, so it is no wonder that people from diverse backgrounds want to acquire ML skills. However, getting started is hard for those who lack know-how in certain underlying concepts. Many libraries and frameworks have recently made ML more user-friendly. Ml5.js is one such library; it lets you get started with machine learning without many prerequisites. Let’s explore it.

The emergence of machine learning (ML) has challenged the traditional linear, micromanaged approach to software development. The traditional approach required the developer to provide a micro-level sequence of instructions, which was a big barrier to solving certain types of problems such as object recognition and speech recognition. With the ML-based approach, solving these problems has become possible at a manageable level of complexity.

As machine learning becomes more popular across a spectrum of domains, users from diverse backgrounds want to acquire ML skills. Since some of them may lack formal training in subjects such as probability and statistics, there is a need for libraries that simplify the process by hiding the underlying complexities. Several powerful libraries of this kind have evolved recently, TensorFlow and Keras among them. To this list, we can now add Ml5.js as well.

Ml5.js

The primary objective of Ml5.js is to make ML user-friendly and accessible to a diverse audience. Ml5.js adds another layer on top of TensorFlow.js to make it even simpler. Its primary advantage is that it can be used in the browser itself, without installing complicated dependencies. Ml5.js is funded and supported by a Google Education grant at NYU’s ITP/IMA programme.

Ml5.js is available as open source software. It provides a high-level interface to the powerful TensorFlow.js library. If you have some exposure to TensorFlow.js, you might be aware that it is both easy to use and very powerful. Because Ml5.js adds another layer on top of TensorFlow.js, it aims to be simpler still.

The browser based approach is one of the important attributes of Ml5.js. An excellent video that introduces Ml5.js is available at https://youtu.be/jmznx0Q1fP0. It will certainly help you to get started with Ml5.js, as the official documentation itself has recommended this video.

Ml5.js requires no installation, as it is browser based. To get started, open a code editor, type in the code and run it in a Web browser to see ML in action. Let’s go through the steps one by one.

As a first step, write the following code to link to the Ml5.js library from the browser, and to print the Ml5 version in the console log window.

<!DOCTYPE html>

<html lang="en">

<head>

<title>Getting Started with ml5.js</title>

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<script src="https://unpkg.com/ml5@0.4.3/dist/ml5.min.js"></script>

</head>

<body>

<script>

console.log('ml5 version:', ml5.version);

</script>

</body>

</html>

The CDN link to Ml5.js is provided as shown below:

<script src="https://unpkg.com/ml5@0.4.3/dist/ml5.min.js"></script>

If you open this page in a browser and look at the console log, you will see the version printed, similar to what’s given below:

ml5 version: 0.4.3

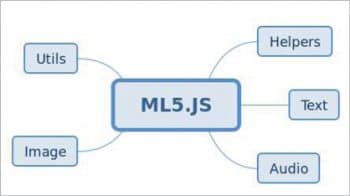

Ml5.js has support for the following categories:

- Helpers

- Image

- Sound

- Text

- Utils

The image, sound and text categories contain models that work on data of the respective type. The helpers category includes components such as NeuralNetwork and FeatureExtractor; the latter can be used to perform transfer learning.

An example of a simple neural network with Ml5.js

Try the following code to get the basic neural network functionality in Ml5.js:

<!DOCTYPE html>

<html lang="en">

<head>

<title>Getting Started with ml5.js</title>

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<script src="https://unpkg.com/ml5@0.4.3/dist/ml5.min.js"></script>

</head>

<body>

<script>

// Initialize the neural network with one input and one output

const neuralNetwork = ml5.neuralNetwork(1, 1);

// add in some data

for(let i = 0; i < 100; i+=1){

const x = i;

const y = i * 2;

neuralNetwork.data.addData( [x], [y])

}

// normalize your data

neuralNetwork.data.normalize();

// train your model

neuralNetwork.train(finishedTraining);

// when it is done training, run .predict()

function finishedTraining(){

neuralNetwork.predict( [60], (err, results) => {

console.log(results);

})

}

</script>

</body>

</html>

If you execute this code in the browser, you will get the following output:

{value: 120.65793764591217, label: "output0"}

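The training data pairs each input x with the output 2x, so the network learns to approximately double its input. For the input 60, the predicted value is about 120.66, which is close to the exact answer of 120. The exact figure will vary slightly from one training run to the next.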
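To make the normalization step in the example above more concrete, here is a minimal sketch of min-max scaling in plain JavaScript. It is not Ml5.js code: the helper names `minMaxNormalize` and `denormalize` are hypothetical, and the assumption that scaling to the range 0 to 1 resembles what `normalize()` does internally is ours, not a statement from the Ml5.js documentation. The sketch also computes how far the prediction quoted above is from the exact answer.

```javascript
// Min-max scaling: map each value into the range [0, 1].
// (Hypothetical helper; Ml5.js may normalize differently internally.)
function minMaxNormalize(values) {
  const min = Math.min(...values);
  const max = Math.max(...values);
  const range = max - min || 1; // avoid dividing by zero when all values are equal
  return { min, max, scaled: values.map((v) => (v - min) / range) };
}

// Inverse mapping: turn a scaled value back into the original units.
function denormalize(scaledValue, min, max) {
  return scaledValue * (max - min) + min;
}

// Same training data as the example above: x = 0..99, y = 2x.
const xs = Array.from({ length: 100 }, (_, i) => i);
const ys = xs.map((x) => x * 2);

const normX = minMaxNormalize(xs);
const normY = minMaxNormalize(ys);

// Relative error of the prediction quoted in the article for x = 60.
const predicted = 120.65793764591217;
const expected = 60 * 2;
const relativeError = Math.abs(predicted - expected) / expected;

console.log('x range:', normX.min, 'to', normX.max);
console.log('y range:', normY.min, 'to', normY.max);
console.log('relative error:', relativeError);
```

Scaling inputs and outputs to a small, common range generally helps a neural network train more reliably, which is why the example normalizes before calling `train()`.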

Using a pre-trained model

The FeatureExtractor component reuses the features already learned by an existing model such as MobileNet, so that you only need to train a small classifier on top of them.

A simple example that loads MobileNet, retrains it with a labelled image and classifies another image is shown below:

// Extract the already learned features from MobileNet

const featureExtractor = ml5.featureExtractor("MobileNet", modelLoaded);

// When the model is loaded

function modelLoaded() {

console.log("Model Loaded!");

}

// Create a new classifier using those features and with a video element

// (`video` is assumed to be a <video> element that already exists on the page)

const classifier = featureExtractor.classification(video, videoReady);

// Triggers when the video is ready

function videoReady() {

console.log("The video is ready!");

}

// Add a new image with a label

classifier.addImage(document.getElementById("dogA"), "dog");

// Retrain the network

classifier.train(function(lossValue) {

console.log("Loss is", lossValue);

});

// Get a prediction for that image

classifier.classify(document.getElementById("dogB"), function(err, result) {

console.log(result); // Should output 'dog'

});

You will have noticed that the steps are simple. A live demo with the code and output is available at https://editor.p5js.org/ml5/sketches/FeatureExtractor_Image_Regression.
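One practical detail in the fragment above is timing: classification only makes sense once training has finished. The sketch below assumes, as the Ml5.js example sketches for this version suggest, that the `train()` callback receives a loss value while training is in progress and an empty (null) value when it is done. The helper `makeTrainingCallback` is a hypothetical name, and the loss values are made up so that the code runs without the library.

```javascript
// Build a callback that could be passed to classifier.train().
// It remembers the latest loss and calls onDone(finalLoss) once a
// null/undefined loss arrives (assumed to signal the end of training).
function makeTrainingCallback(onDone) {
  let lastLoss = null;
  return function whileTraining(lossValue) {
    if (lossValue !== null && lossValue !== undefined) {
      lastLoss = lossValue; // training still in progress
    } else {
      onDone(lastLoss); // training finished
    }
  };
}

// Simulated run with made-up loss values, so the sketch works without ml5.
let finalLoss;
const trainingCallback = makeTrainingCallback((loss) => {
  finalLoss = loss;
});
[0.9, 0.4, 0.1, null].forEach((loss) => trainingCallback(loss));

console.log('Final loss:', finalLoss);
```

In a browser page, the same helper could be used as `classifier.train(makeTrainingCallback(() => { /* call classifier.classify(...) here */ }))`, so that the prediction is requested only after retraining has completed.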

Human pose estimation

Even complicated tasks such as human pose estimation can be performed in a few simple steps with Ml5.js. A code fragment using ml5.poseNet is shown below:

const video = document.getElementById("video");

// Holds the most recent pose results

let poses = [];

// Create a new poseNet method

const poseNet = ml5.poseNet(video, modelLoaded);

// When the model is loaded

function modelLoaded() {

console.log("Model Loaded!");

}

// Listen to new 'pose' events

poseNet.on("pose", function(results) {

poses = results;

});

A live demo of pose estimation is available at https://editor.p5js.org/ml5/sketches/PoseNet_image_single. A sample output is shown in Figure 2.
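Once the `pose` event has delivered results, a typical next step is to keep only the body parts that were detected with reasonable confidence. The sketch below assumes the result shape used by PoseNet in this version of Ml5.js: an array of objects, each with a `pose.keypoints` list whose entries carry `part`, `score` and `position`. The helper name `confidentKeypoints`, the 0.5 threshold and the sample data are all hypothetical.

```javascript
// Return the keypoints whose detection score is at least minScore,
// flattened across all detected poses.
function confidentKeypoints(poses, minScore = 0.5) {
  return poses.flatMap((result) =>
    result.pose.keypoints
      .filter((kp) => kp.score >= minScore)
      .map((kp) => ({ part: kp.part, x: kp.position.x, y: kp.position.y }))
  );
}

// Made-up sample in the assumed result shape, for illustration only.
const samplePoses = [
  {
    pose: {
      score: 0.8,
      keypoints: [
        { part: 'nose', score: 0.99, position: { x: 120, y: 80 } },
        { part: 'leftEye', score: 0.3, position: { x: 110, y: 70 } },
        { part: 'rightWrist', score: 0.75, position: { x: 200, y: 240 } },
      ],
    },
    skeleton: [],
  },
];

const visibleParts = confidentKeypoints(samplePoses);
console.log(visibleParts.map((kp) => kp.part));
```

The filtered list can then be drawn on a canvas over the video, which is roughly what the live demos do.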

Sound classification

The ml5.soundClassifier method can be used to classify pre-defined sounds. The following example uses the SpeechCommands18w model, which can detect the spoken digits ‘zero’ to ‘nine’ as well as certain command words such as ‘down’, ‘left’ and ‘right’.

// Options for the SpeechCommands18w model, the default probabilityThreshold is 0

const options = { probabilityThreshold: 0.7 };

const classifier = ml5.soundClassifier('SpeechCommands18w', options, modelReady);

function modelReady() {

// classify sound

classifier.classify(gotResult);

}

function gotResult(error, result) {

if (error) {

console.log(error);

return;

}

// log the result

console.log(result);

}
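The `result` passed to `gotResult` is, as far as we understand the library, an array of candidates that each have a `label` and a `confidence`. A minimal sketch of turning that array into a single command is given below. The helper name `topCommand`, the 0.7 cut-off (chosen to match the `probabilityThreshold` above) and the sample data are hypothetical.

```javascript
// Pick the most confident label, or null if nothing is confident enough.
function topCommand(results, minConfidence = 0.7) {
  if (!Array.isArray(results) || results.length === 0) {
    return null;
  }
  const best = results.reduce((a, b) => (b.confidence > a.confidence ? b : a));
  return best.confidence >= minConfidence ? best.label : null;
}

// Made-up sample in the assumed result shape, for illustration only.
const sampleResults = [
  { label: 'left', confidence: 0.12 },
  { label: 'down', confidence: 0.81 },
  { label: 'nine', confidence: 0.07 },
];

console.log(topCommand(sampleResults));
```

Inside `gotResult`, the call would be `topCommand(result)`, and the returned label could drive whatever action the page should take for that spoken command.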

Sentiment analysis

The ml5.sentiment() method is used to predict the sentiment of any given text. The following code fragment uses the movieReviews model:

// Create a new Sentiment method

const sentiment = ml5.sentiment('movieReviews', modelReady);

// When the model is loaded

function modelReady() {

// model is ready

console.log("Model Loaded!");

}

// make the prediction once the model has loaded

// (`text` is a string holding the sentence to analyse)

const prediction = sentiment.predict(text);

console.log(prediction);

A live interactive demo is available at https://editor.p5js.org/ml5/sketches/Sentiment_Interactive.
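The prediction returned by `sentiment.predict()` carries a `score` between 0 (negative) and 1 (positive). A small sketch of turning that score into a readable label follows. The helper name `describeSentiment`, the neutral band of 0.1 around 0.5 and the sample scores are hypothetical choices, not part of Ml5.js.

```javascript
// Map a sentiment score in [0, 1] to a coarse label.
// Scores within `margin` of 0.5 are treated as neutral.
function describeSentiment(prediction, margin = 0.1) {
  const { score } = prediction;
  if (score >= 0.5 + margin) {
    return 'positive';
  }
  if (score <= 0.5 - margin) {
    return 'negative';
  }
  return 'neutral';
}

// Made-up scores, for illustration only.
console.log(describeSentiment({ score: 0.95 }));
console.log(describeSentiment({ score: 0.1 }));
console.log(describeSentiment({ score: 0.5 }));
```

In the fragment above, `describeSentiment(prediction)` could replace the raw `console.log(prediction)` when a label is more useful than a number.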

The official documentation (https://learn.ml5js.org/docs/#/) has detailed information about the features of Ml5.js as well as links to live working demos of various use cases. If you are trying to incorporate ML abilities into a Web application, then you should explore Ml5.js.