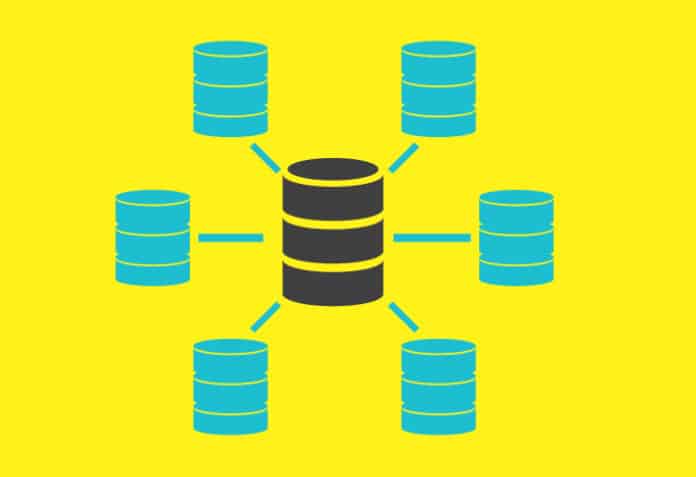

Distributed databases make it possible to store and analyse very large data sets while providing fault tolerance and protecting data integrity. We take a look at the computational components of distributed databases, as well as the relationship between these databases and polyglot persistence.

As per Wikipedia: “A distributed database is a database in which data is stored across different physical locations. It may be stored in multiple computers located in the same physical location (e.g., a data centre); or may be dispersed over a network of interconnected computers. Unlike parallel systems, in which the processors are tightly coupled and constitute a single database system, a distributed database system consists of loosely coupled sites that share no physical components.”

The need to extract insight from data has made it necessary to store and process increasingly large and diverse data sets, and to ensure high availability, scalability, and fault tolerance while doing so.

Let’s begin by exploring the key stages in the evolution of distributed databases.

Centralised databases: In the early days of computing, databases were typically centralised, with all data stored and managed on a single machine. This approach limited scalability and created a single point of failure.

Client-server model: With the advent of networking and the client-server model, databases became more distributed. Multiple client machines could connect to a centralised database server to access and manipulate data. While this improved scalability to some extent, it still relied on a single server for data storage and processing.

Replication: To enhance fault tolerance and availability, replication techniques were introduced. Data was replicated across multiple database servers, allowing for redundancy and increased reliability. However, this approach often faced challenges in maintaining consistency between replicas.
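One widely used way to keep replicas consistent enough for reads is quorum replication, as in Dynamo-style systems: with N replicas, a write must be acknowledged by W of them and a read must consult R of them, and choosing R + W > N guarantees that every read quorum overlaps every write quorum. The sketch below is a minimal in-memory illustration under stated assumptions: `QuorumStore` is a hypothetical class, replicas are plain dicts, and the caller supplies version numbers and picks which replicas are reachable.

```python
class QuorumStore:
    """Hypothetical in-memory store with N replicas, write quorum W and read quorum R."""

    def __init__(self, n: int, w: int, r: int):
        # R + W > N guarantees every read quorum overlaps every write quorum.
        if r + w <= n:
            raise ValueError("R + W must be greater than N")
        self.w, self.r = w, r
        self.replicas = [{} for _ in range(n)]

    def write(self, key, value, version, targets):
        """Store (version, value) on the replica indices in `targets` (at least W)."""
        targets = set(targets)
        if len(targets) < self.w:
            raise RuntimeError("write quorum not reached")
        for i in targets:
            self.replicas[i][key] = (version, value)

    def read(self, key, targets):
        """Query at least R replicas and return the value with the highest version."""
        targets = set(targets)
        if len(targets) < self.r:
            raise RuntimeError("read quorum not reached")
        found = [self.replicas[i][key] for i in targets if key in self.replicas[i]]
        return max(found)[1] if found else None


store = QuorumStore(n=3, w=2, r=2)
store.write("x", "v1", version=1, targets=[0, 1, 2])
store.write("x", "v2", version=2, targets=[0, 1])  # replica 2 is now stale
print(store.read("x", targets=[1, 2]))  # v2: replica 1 is in both quorums
```

Even though replica 2 missed the second write, any two replicas chosen for a read include at least one that received it, so the highest version wins.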

Partitioning and sharding: As data sets continued to grow, databases started employing partitioning and sharding techniques. Partitioning divides the data into smaller subsets, which can then be stored on different servers. Sharding is a form of horizontal partitioning in which rows are distributed across servers according to a shard key, such as customer ID or geographical location. These techniques improve scalability and performance by allowing queries to be processed in parallel across multiple servers.
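To make the shard-key idea concrete, here is a minimal sketch of two common placement strategies: hashing the customer ID, and a lookup table by region. The function names, the three-shard layout, and the region table are hypothetical and chosen only for illustration.

```python
import hashlib


def hash_shard(shard_key: str, num_shards: int) -> int:
    """Hash-based sharding: map a shard key (e.g. a customer ID) to a shard number.

    Uses SHA-256 rather than Python's built-in hash(), which is salted per process
    for strings and so would not give every node the same answer.
    """
    digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Lookup-based sharding by geography; this region-to-shard table is hypothetical.
REGION_TO_SHARD = {"eu": 0, "us": 1, "apac": 2}


def region_shard(region: str) -> int:
    return REGION_TO_SHARD[region]


shards = {i: [] for i in range(3)}
for customer_id in ["c-1001", "c-1002", "c-1003", "c-1004"]:
    shards[hash_shard(customer_id, 3)].append(customer_id)
print(shards)
```

With the modulo approach, changing `num_shards` remaps most keys to different shards, which is why many systems use consistent hashing or a directory of key ranges instead.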

Peer-to-peer databases: Peer-to-peer (P2P) databases emerged as an alternative to the client-server model. In P2P databases, all participating nodes can act as both clients and servers. Each node contributes storage and processing capabilities, forming a decentralised network. P2P databases offer greater fault tolerance and scalability, as there is no single point of failure.

NoSQL and new database models: NoSQL databases, such as document-oriented, key-value, and wide-column databases, introduced new data models better suited to distributed environments. Many of them prioritise scalability and availability by relaxing the strict consistency guarantees of traditional relational databases, often offering eventual consistency instead.

Distributed database management systems (DDBMS): Modern distributed databases are built on distributed database management systems. DDBMSs provide a framework for managing data across multiple nodes, handling data partitioning, replication, consistency, and query processing in a distributed fashion. They often incorporate techniques like distributed transactions, consensus protocols, and replication strategies to ensure data integrity and fault tolerance.

Cloud databases: Cloud computing and storage technologies have revolutionised the way databases are deployed and managed. Cloud databases leverage the scalability, elasticity, and accessibility of cloud infrastructure to provide distributed database services. They offer features like automated data replication, load balancing, and flexible scalability, making it easier for organisations to handle large-scale distributed data.

Blockchain and distributed ledgers: Blockchain technology introduced a new paradigm for distributed databases. A blockchain is a decentralised, append-only ledger that uses consensus algorithms to keep a network of nodes in agreement on its contents. Each block contains a cryptographic hash of the previous block, so altering historical data is detectable; this makes the ledger tamper-evident and, in practice, immutable. While initially associated with cryptocurrencies, blockchain concepts are being explored for various applications beyond finance.
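The hash-linking that makes a ledger tamper-evident can be sketched in a few lines. This is a minimal illustration only: the `Block` layout and helper functions are hypothetical, and it deliberately omits consensus, signatures, and proof-of-work.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    data: str
    prev_hash: str  # digest of the previous block; links the chain together

    def digest(self) -> str:
        payload = json.dumps([self.index, self.data, self.prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(chain, data):
    prev = chain[-1].digest() if chain else "0" * 64  # genesis block links to zeros
    chain.append(Block(len(chain), data, prev))


def verify(chain) -> bool:
    """Check that every block still points at the current digest of its predecessor."""
    return all(cur.prev_hash == prev.digest() for prev, cur in zip(chain, chain[1:]))


chain = []
for tx in ["alice->bob:5", "bob->carol:2", "carol->dave:1"]:
    append(chain, tx)
print(verify(chain))  # True
chain[1].data = "bob->carol:200"  # tamper with history
print(verify(chain))  # False
```

Editing the newest block would only be caught by comparing against a separately agreed head hash, which is the job the consensus algorithm performs in a real network.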

These are some of the significant stages in the evolution of distributed databases. The field continues to evolve, with ongoing research and advancements in areas like distributed query optimisation, data replication strategies, fault tolerance, and distributed consensus algorithms.

With event-driven architectures and microservices becoming popular, another important topic for distributed databases and cloud platforms is the relationship between distributed databases and polyglot persistence.

Computational Components of a Distributed Database

Distributed databases consist of several computational components that work together to provide data storage, processing, and management across multiple nodes or servers. Here are the key computational components of a distributed database.

Data storage layer: The data storage layer is responsible for storing and managing the actual data within the distributed database. It includes the mechanisms and algorithms for data partitioning, replication, and distribution across multiple nodes. Each node in the distributed database typically stores a subset of the data, and the storage layer places data across nodes in a way that supports both scalability and fault tolerance.

Query processing and optimisation: The query processing and optimisation component handles the execution of queries or data manipulation operations on the distributed database. It receives user queries, breaks them down into subqueries, and optimises their execution across multiple nodes. The goal is to minimise data transfer between nodes and maximise query performance by leveraging parallel processing and data locality.
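Two standard ways to minimise data transfer are predicate pushdown (filter rows on the node that stores them) and partial aggregation (send per-node summaries instead of raw rows). The sketch below assumes a hypothetical `sales` table split across three nodes and shows how `AVG` must be rebuilt from partial sums and counts rather than by averaging per-node averages.

```python
from collections import defaultdict

# Hypothetical data: each node holds its own partition of (region, amount) rows.
NODE_PARTITIONS = {
    "node-a": [("eu", 120.0), ("us", 80.0), ("eu", 40.0)],
    "node-b": [("us", 200.0), ("apac", 50.0)],
    "node-c": [("eu", 10.0), ("apac", 70.0), ("us", 5.0)],
}


def local_subquery(rows, min_amount):
    """Runs on each node: filter locally (pushdown), then partially aggregate."""
    partial = defaultdict(lambda: [0.0, 0])  # region -> [sum, count]
    for region, amount in rows:
        if amount >= min_amount:
            partial[region][0] += amount
            partial[region][1] += 1
    return dict(partial)


def merge_partials(partials):
    """Runs on the coordinator: combine partial sums and counts into averages."""
    totals = defaultdict(lambda: [0.0, 0])
    for partial in partials:
        for region, (s, c) in partial.items():
            totals[region][0] += s
            totals[region][1] += c
    return {region: s / c for region, (s, c) in totals.items()}


# Equivalent of: SELECT region, AVG(amount) FROM sales WHERE amount >= 20 GROUP BY region
partials = [local_subquery(rows, 20.0) for rows in NODE_PARTITIONS.values()]
print(merge_partials(partials))  # {'eu': 80.0, 'us': 140.0, 'apac': 60.0}
```

Only a handful of (sum, count) pairs cross the network, regardless of how many rows each node stores.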

Distributed transaction manager: The distributed transaction manager ensures that transactions, which consist of multiple operations that must be executed atomically, consistently, and reliably, are properly managed in a distributed environment. It coordinates the execution of distributed transactions across multiple nodes, handles concurrency control to prevent conflicts, and ensures the durability and consistency of the data.
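A common protocol a transaction manager uses to commit atomically across nodes is two-phase commit (2PC): the coordinator first asks every participant to prepare and vote, then commits everywhere only if all voted yes, and aborts everywhere otherwise. The sketch below shows only that decision logic; `Participant` is a hypothetical stand-in, and the durable logging, timeouts, and recovery a real implementation needs are omitted.

```python
from enum import Enum


class Vote(Enum):
    YES = "yes"
    NO = "no"


class Participant:
    """Hypothetical resource manager on one node."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "init"

    def prepare(self) -> Vote:
        # A real participant would durably log its prepared state before voting YES.
        if self.can_commit:
            self.state = "prepared"
            return Vote.YES
        self.state = "aborted"
        return Vote.NO

    def commit(self):
        self.state = "committed"

    def abort(self):
        self.state = "aborted"


def two_phase_commit(participants) -> bool:
    # Phase 1 (voting): ask every participant to prepare.
    votes = [p.prepare() for p in participants]
    # Phase 2 (completion): commit only on a unanimous YES, otherwise abort everywhere.
    if all(v is Vote.YES for v in votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.abort()
    return False


nodes = [Participant("orders-db"), Participant("inventory-db", can_commit=False)]
print(two_phase_commit(nodes), [p.state for p in nodes])  # False ['aborted', 'aborted']
```

A known weakness of 2PC is that prepared participants must wait if the coordinator fails before announcing its decision.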

Distributed query coordinator: The distributed query coordinator acts as the central point of control for processing distributed queries. It receives and coordinates queries from clients, breaks them down into subqueries that can be executed on individual nodes, and gathers and merges the results from various nodes to form the result set. The query coordinator also handles query optimisation and data distribution decisions.
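The coordinator's split-and-merge role is often described as scatter-gather. The sketch below runs a hypothetical "top 3 orders by amount" query: each shard returns only its own top k rows, and the coordinator merges the already-sorted partial lists. The shard contents and function names are illustrative assumptions, and threads stand in for network calls.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from itertools import islice

# Hypothetical shards, each holding (order_id, amount) rows.
SHARDS = [
    [(1, 250), (4, 40), (7, 900)],
    [(2, 500), (5, 75)],
    [(3, 120), (6, 610), (8, 15)],
]


def run_subquery(shard, limit):
    """Per-shard subquery: the `limit` largest orders by amount, sorted descending."""
    return sorted(shard, key=lambda row: row[1], reverse=True)[:limit]


def coordinator_top_k(shards, k):
    # Scatter: send the subquery to every shard in parallel.
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partial_results = list(pool.map(lambda s: run_subquery(s, k), shards))
    # Gather: each partial list is already sorted, so a k-way merge is enough.
    merged = heapq.merge(*partial_results, key=lambda row: row[1], reverse=True)
    return list(islice(merged, k))


print(coordinator_top_k(SHARDS, 3))  # [(7, 900), (6, 610), (2, 500)]
```

Asking each shard for only k rows is safe because any row in the global top k must also be in its own shard's top k.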

Consensus and coordination protocols: In a distributed database, consensus and coordination protocols play a crucial role in achieving consistency and fault tolerance. These protocols enable nodes to agree on the state of the system, coordinate data replication, handle failures, and ensure that data modifications are properly replicated across nodes. Examples include the Paxos and Raft consensus algorithms, as well as the two-phase commit (2PC) protocol, which coordinates the atomic commitment of distributed transactions rather than solving consensus in general.
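To show how majority agreement turns into a commit decision, here is a simplified sketch of one rule from Raft: the leader may advance its commit index to the highest log index N that is stored on a majority of servers and whose entry belongs to the current term. Elections, log matching, and messaging are all omitted, and the function name and argument layout are assumptions made for this example.

```python
def advance_commit_index(match_index, log_terms, current_term, commit_index):
    """Simplified Raft leader rule for advancing commitIndex.

    match_index:  highest log index known to be stored on each server,
                  including the leader itself.
    log_terms:    log_terms[i] is the term of log entry i (index 0 is a sentinel).
    current_term: the leader's current term.
    commit_index: the leader's current commitIndex.
    """
    n = len(match_index)
    # After sorting descending, the value at position n // 2 is held by at least
    # n // 2 + 1 servers, which is a strict majority.
    majority_index = sorted(match_index, reverse=True)[n // 2]
    # Raft only commits by counting replicas for entries from the current term;
    # earlier entries become committed indirectly along with them.
    for candidate in range(majority_index, commit_index, -1):
        if log_terms[candidate] == current_term:
            return candidate
    return commit_index


# Five servers; entries 1-2 are from term 1, entries 3-5 from term 2.
print(advance_commit_index([5, 5, 3, 2, 1], [0, 1, 1, 2, 2, 2], 2, 0))  # 3
```

The current-term check prevents a new leader from committing an older entry that could still be overwritten, a subtle case described in the Raft paper.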

Distributed lock manager: The distributed lock manager is responsible for managing locks on data items to ensure proper concurrency control in a distributed environment. It coordinates lock acquisition and release across multiple nodes to prevent conflicts and maintain data integrity. The lock manager also handles deadlock detection and resolution to avoid resource contention issues.
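A standard way to detect deadlocks is to look for a cycle in a wait-for graph, where an edge from T1 to T2 means transaction T1 is waiting for a lock that T2 holds. In a distributed setting the detector first has to collect the local wait-for edges from each node; this sketch assumes that has already been done and shows only the cycle search, using a depth-first traversal. The function name and graph format are hypothetical.

```python
def find_deadlock(wait_for):
    """Return the transactions forming a cycle in the wait-for graph, or None.

    wait_for maps a transaction ID to the set of transaction IDs it is waiting on.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on current DFS path, fully explored
    color = {}
    path = []

    def visit(txn):
        color[txn] = GREY
        path.append(txn)
        for holder in wait_for.get(txn, ()):
            state = color.get(holder, WHITE)
            if state == GREY:  # back edge: holder is already on the path
                return path[path.index(holder):]
            if state == WHITE:
                cycle = visit(holder)
                if cycle:
                    return cycle
        path.pop()
        color[txn] = BLACK
        return None

    for txn in list(wait_for):
        if color.get(txn, WHITE) == WHITE:
            cycle = visit(txn)
            if cycle:
                return cycle
    return None


# T1 waits for T2, T2 for T3, and T3 for T1: a deadlock spanning three transactions.
print(find_deadlock({"T1": {"T2"}, "T2": {"T3"}, "T3": {"T1"}}))  # ['T1', 'T2', 'T3']
```

Once a cycle is found, the lock manager typically resolves it by aborting one transaction in the cycle (for example, the youngest) so the others can proceed.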

Data replication and synchronisation: Data replication is an important aspect of distributed databases for fault tolerance and data availability. The data replication and synchronisation component ensures that data updates are propagated to replicated copies across different nodes, maintaining consistency and durability. It handles mechanisms like replication protocols, conflict resolution, and data synchronisation algorithms.
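Conflict resolution first requires knowing whether two replica versions conflict at all. One common mechanism, used by Dynamo-style stores, is the vector clock: each replica keeps a counter per node, and comparing two clocks tells you whether one update happened before the other or whether they were concurrent. The sketch below uses plain dicts for clocks; the helper names are hypothetical, and how a detected conflict is merged is left to the application.

```python
def increment(vc, node):
    """Return a copy of vector clock `vc` with `node`'s counter bumped by one."""
    updated = dict(vc)
    updated[node] = updated.get(node, 0) + 1
    return updated


def merge(vc_a, vc_b):
    """Element-wise maximum: the clock of a version that has seen both histories."""
    return {n: max(vc_a.get(n, 0), vc_b.get(n, 0)) for n in set(vc_a) | set(vc_b)}


def compare(vc_a, vc_b):
    """Return 'equal', 'before', 'after', or 'concurrent' for clock A relative to B."""
    nodes = set(vc_a) | set(vc_b)
    a_le_b = all(vc_a.get(n, 0) <= vc_b.get(n, 0) for n in nodes)
    b_le_a = all(vc_b.get(n, 0) <= vc_a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"


base = increment({}, "A")           # {'A': 1}, replicated to A and B
on_a = increment(base, "A")         # replica A updates independently
on_b = increment(base, "B")         # replica B updates independently
print(compare(on_a, on_b))          # concurrent -> a conflict to resolve
resolved = increment(merge(on_a, on_b), "A")
print(compare(on_a, resolved))      # before
```

Simpler systems use last-writer-wins timestamps instead, which avoids storing clocks but silently discards one of two concurrent updates.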

Distributed monitoring and management: Distributed databases require monitoring and management tools to oversee the health, performance, and configuration of the distributed system. These tools collect metrics, monitor node status, detect and resolve failures, and provide insights into system behaviour. They may include distributed monitoring agents, dashboards, and alerting systems.
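Failure detection in such monitoring tools is commonly based on heartbeats: each node reports periodically, and a node that stays silent longer than a timeout is flagged. The sketch below is a minimal, hypothetical `HeartbeatMonitor`; it reports nodes as *suspected* rather than failed because a timeout cannot distinguish a crashed node from a slow network. The clock is injectable so the example can use simulated time.

```python
import time


class HeartbeatMonitor:
    """Suspects a node has failed if no heartbeat arrived within `timeout` seconds."""

    def __init__(self, timeout: float, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock   # injectable so the example can use a fake clock
        self.last_seen = {}  # node name -> time of its latest heartbeat

    def heartbeat(self, node: str) -> None:
        self.last_seen[node] = self.clock()

    def suspected(self) -> list:
        now = self.clock()
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout)


fake_now = [0.0]
monitor = HeartbeatMonitor(timeout=5.0, clock=lambda: fake_now[0])
monitor.heartbeat("node-a")
monitor.heartbeat("node-b")
fake_now[0] = 3.0
monitor.heartbeat("node-a")  # node-b stays silent
fake_now[0] = 7.0
print(monitor.suspected())   # ['node-b']
```

Choosing the timeout is a trade-off: a short one detects crashes quickly but produces more false suspicions during load spikes or network delays.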

The specific implementation and architecture of a distributed database may vary based on the underlying technologies, database models (e.g., relational, NoSQL), and the specific requirements of the application or system using the distributed database.

Understanding Polyglot Persistence and Distributed Databases

A polyglot database architecture, often called polyglot persistence, uses multiple specialised databases or data storage technologies within an application or system to handle different types of data or workloads. Each database is chosen for its strengths in managing specific data types or use cases. For example, a polyglot architecture may use a document-oriented database for semi-structured data, a graph database for managing relationships, and a relational database for structured data.
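The routing idea can be sketched as a single service that sends each kind of data to the store suited to it. In this minimal illustration, `DocumentStore` and `GraphStore` are hypothetical in-memory stand-ins for a document database and a graph database, while the relational side uses Python's built-in `sqlite3` module; a real deployment would use separate database servers and client libraries.

```python
import sqlite3
from collections import defaultdict


class DocumentStore:
    """Stand-in for a document database: schemaless records keyed by ID."""

    def __init__(self):
        self.docs = {}

    def put(self, doc_id, doc):
        self.docs[doc_id] = doc

    def get(self, doc_id):
        return self.docs.get(doc_id)


class GraphStore:
    """Stand-in for a graph database: directed edges between entity IDs."""

    def __init__(self):
        self.edges = defaultdict(set)

    def relate(self, src, dst):
        self.edges[src].add(dst)

    def neighbours(self, node):
        return sorted(self.edges[node])


class ShopService:
    """Hypothetical service that routes each kind of data to a suitable store."""

    def __init__(self):
        self.catalogue = DocumentStore()           # varied product attributes
        self.social = GraphStore()                 # customer relationships
        self.orders = sqlite3.connect(":memory:")  # structured, transactional data
        self.orders.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
        )

    def add_product(self, sku, attributes):
        self.catalogue.put(sku, attributes)

    def follow(self, customer, other):
        self.social.relate(customer, other)

    def place_order(self, customer, total):
        with self.orders:  # commits on success, rolls back on an exception
            self.orders.execute(
                "INSERT INTO orders (customer, total) VALUES (?, ?)", (customer, total)
            )

    def customer_spend(self, customer):
        row = self.orders.execute(
            "SELECT COALESCE(SUM(total), 0) FROM orders WHERE customer = ?", (customer,)
        ).fetchone()
        return row[0]
```

Each store can then be scaled or distributed independently, which is where polyglot persistence meets the distributed designs described above.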

In a distributed database environment, data is distributed across multiple nodes or servers, and the processing of queries and transactions is distributed as well. Distributed databases are designed to provide scalability, fault tolerance, and high availability by spreading the data and workload across multiple machines.

The relationship between the two is that distributed architectures readily accommodate polyglot persistence. In a distributed system, different types of databases can be used for different portions of the data or for specific tasks. For example, some nodes or services may use a NoSQL database for storing and querying unstructured data, while others use a traditional relational database for structured data.

The combination of distributed databases and polyglot databases allows for a more flexible and efficient data management approach. By leveraging the strengths of different database technologies, organisations can optimise their data storage and processing for specific use cases or data types. This can result in improved performance, scalability, and overall system efficiency.

It is worth noting that although distributed architectures can support polyglot persistence, not every distributed database is polyglot. A distributed database can use a single type of database technology, such as a distributed relational database or a distributed key-value store, and still benefit from distribution for scalability and fault tolerance.