The I/O schedulers in Linux manage the order in which the I/O requests of processes are handled by the disk subsystem. This article compares the performance of different I/O schedulers in Linux virtual machines and Docker containers, offering insights into optimising disk access for improved system performance.

Process schedulers are essential to the functionality and responsiveness of Linux systems and are well-known to the majority of users. Their primary role is to manage the distribution of system resources among processes, including the CPU, memory, and I/O hardware. The process scheduler’s objective is to maximise system throughput, reduce response time, and ensure fairness among all active processes.

In addition to process schedulers, Linux also has I/O schedulers that manage the order in which processes’ I/O requests are handled by the disk subsystem. The I/O scheduler plays a role similar to that of the process scheduler, but it arbitrates access to the disk rather than to the CPU. When multiple I/O requests from different processes compete for disk access, the I/O scheduler is crucial to the system’s overall performance.

Despite the use of flash and solid-state storage, disk access remains the most expensive form of data access, being slower than data access from RAM. I/O schedulers optimise disk access requests to improve performance.

This article compares the efficiency of different I/O schedulers when SSDs are used, and the results are particularly relevant for processes that involve intensive disk access. The benchmarks were run in two environments: directly on an Ubuntu 20.04 virtual machine, and inside a Docker container (where the files must first be copied into the container before the benchmarks can run).

“Prior to Ubuntu 19.04 with Linux 5.0 or Ubuntu 18.04.3 with Linux 4.15, the multiqueue I/O scheduling was not enabled by default and just the deadline, cfq and noop I/O schedulers were available by default. For Ubuntu 19.10 with Linux 5.0 or Ubuntu 18.04.3 with Linux 5.0 onwards, multiqueue is enabled by default providing the bfq, kyber, mq-deadline and none I/O schedulers. For Ubuntu 19.10 with Linux 5.3 the deadline, cfq and noop I/O schedulers are deprecated.” (Ubuntu Wiki, 2019)

The above statement can be taken as true for Ubuntu 20.04 too. Hence, for the comparison only the following I/O schedulers were taken into account:

- none I/O scheduler

- mq-deadline I/O scheduler

- bfq I/O scheduler

- kyber I/O scheduler

I/O scheduler commands

To check the available I/O schedulers, run the following command:

$ cat /sys/block/sda/queue/scheduler

The output would be something like:

[mq-deadline] none

This means that mq-deadline is the currently selected I/O scheduler (shown in square brackets) and that none is also available (Ubuntu Wiki, 2019). Usually, mq-deadline and none are available by default. If the other I/O schedulers are missing, you can load their kernel modules with the following commands:

$ sudo modprobe bfq

$ sudo modprobe kyber-iosched

Now run the following command to check the available I/O schedulers:

$ cat /sys/block/sda/queue/scheduler

The output would be something like:

[mq-deadline] none bfq kyber
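The bracket convention in this output is easy to parse in a script. The following is a minimal sketch, not part of the original benchmark; the function name `active_scheduler` is hypothetical, and it assumes the single-line format shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the active scheduler, i.e. the entry in
# square brackets, from a scheduler line supplied on standard input.
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# Typical use on a real system (device name sda as used in this article):
#   active_scheduler < /sys/block/sda/queue/scheduler
```

Reading from standard input keeps the helper independent of the device name, so the same function can be pointed at any block device's queue/scheduler file.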

Let’s now move on to the benchmarks.

Test 1: writes-starving-reads

This test evaluates how efficiently each I/O scheduler completes read operations, without starving them, while a write operation runs continuously in the background. To make the schedulers easier to compare, the test reads files of increasing size, from 10MB to 100MB.

You can perform the write operation in the background, using the following command:

$ while true; do dd if=/dev/zero of=file bs=1M; done &

The command is an infinite while loop that repeatedly writes to a file with dd; the ‘&’ symbol at the end runs it in the background.

Once the benchmarks are complete, remember to kill this background process using its process ID.
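One way to keep track of the process ID is to capture `$!` immediately after starting the background job. The sketch below is an illustration under stated assumptions: the function names, the `WRITER_PID` variable, the target path argument and the `count=16` limit are all hypothetical additions (the command in this article has no count and keeps writing until the disk fills up).

```shell
#!/bin/sh
# Start the background writer and remember its PID in WRITER_PID.
# Assumption: count=16 bounds each dd run; the article's command has no count.
start_writer() {
    target=$1
    (
        # Exit cleanly on SIGTERM once the dd run in progress has finished.
        trap 'exit 0' TERM
        while true; do
            dd if=/dev/zero of="$target" bs=1M count=16 2>/dev/null
        done
    ) &
    WRITER_PID=$!
}

# Stop the writer by its PID, wait for it to exit, and remove the scratch file.
stop_writer() {
    target=$1
    kill "$WRITER_PID" 2>/dev/null
    wait "$WRITER_PID" 2>/dev/null
    rm -f "$target"
}

# Example:
#   start_writer ./file
#   ... run the timed reads ...
#   stop_writer ./file
```

Waiting for the loop to exit before deleting the file avoids a leftover scratch file from a dd run that was still in progress when the signal arrived.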

The code for changing to the none scheduler and then running Benchmark Test 1 is given below.

Step 1: Perform the write operation in the background.

Step 2: Measure how long it takes to read files of various sizes.

$ echo none

$ while true; do dd if=/dev/zero of=file bs=1M; done &

$ echo none > /sys/block/sda/queue/scheduler

$ time cat 10MB.txt > /dev/null

$ time cat 20MB.txt > /dev/null

$ time cat 30MB.txt > /dev/null

$ time cat 40MB.txt > /dev/null

$ time cat 50MB.txt > /dev/null

$ time cat 60MB.txt > /dev/null

$ time cat 70MB.txt > /dev/null

$ time cat 80MB.txt > /dev/null

$ time cat 90MB.txt > /dev/null

$ time cat 100MB.txt > /dev/null
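The ten timed reads can also be expressed as a loop. The sketch below is not the script used for the published results; the function name `read_benchmark` is hypothetical, it assumes the files are named 10MB.txt to 100MB.txt as above, and it measures elapsed time with GNU `date +%s.%N` rather than the shell's `time` keyword so that the output is one line per file.

```shell
#!/bin/sh
# Hypothetical helper: read each <N>MB.txt file in a directory and print
# the elapsed wall-clock time in seconds, one line per file.
# Assumes GNU date (for the %N nanoseconds field) and awk are available.
read_benchmark() {
    dir=$1
    for size in 10 20 30 40 50 60 70 80 90 100; do
        f="$dir/${size}MB.txt"
        [ -f "$f" ] || continue          # skip sizes that are not present
        start=$(date +%s.%N)
        cat "$f" > /dev/null
        end=$(date +%s.%N)
        awk -v s="$start" -v e="$end" -v n="$size" \
            'BEGIN { printf "%sMB %.3f\n", n, e - s }'
    done
}

# Example: read_benchmark .
```

Printing one "size time" pair per line makes it straightforward to paste the results into a table like the ones that follow.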

We executed the read and write operations test cases in a virtual machine and Docker container, and the results are tabulated in Tables 1 to 4 for various schedulers.

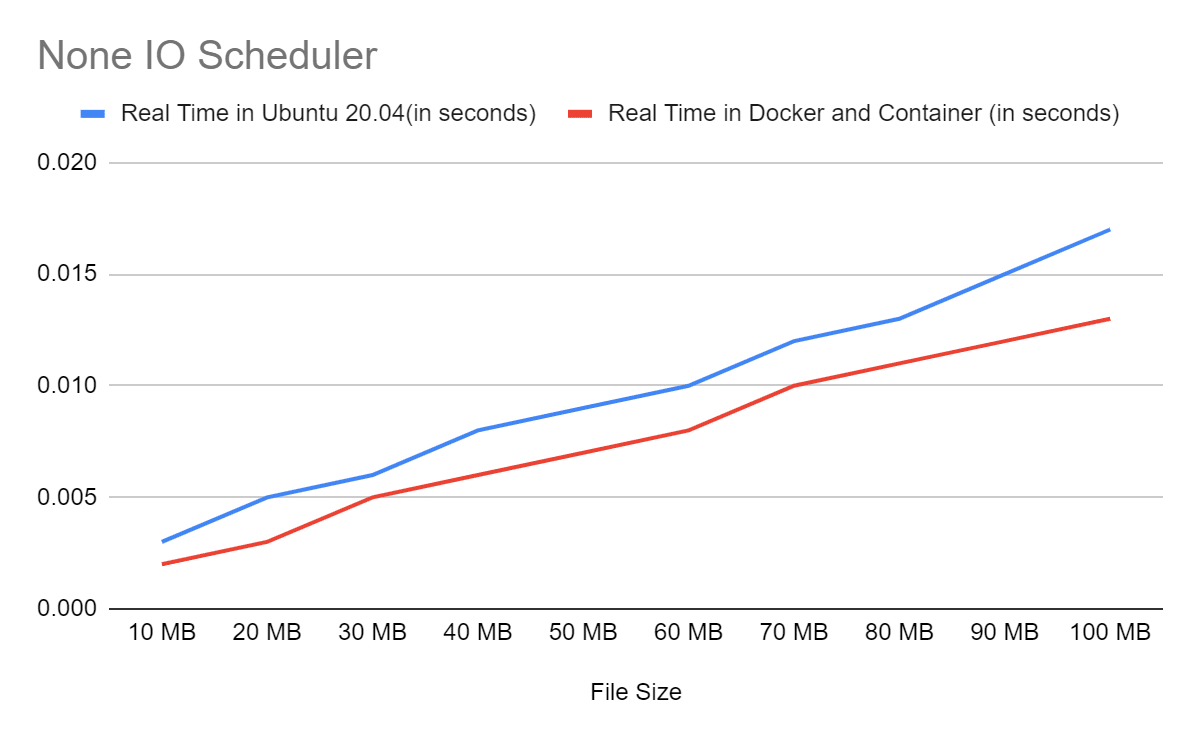

Table 1: none I/O scheduler results

| File size | Real time in Ubuntu 20.04 (s) | Real time in Docker container (s) | Improvement (%) |
| --- | --- | --- | --- |
| 10MB | 0.003 | 0.002 | 33.33 |
| 20MB | 0.005 | 0.003 | 40 |
| 30MB | 0.006 | 0.005 | 16.67 |
| 40MB | 0.008 | 0.006 | 25 |
| 50MB | 0.009 | 0.007 | 22.22 |
| 60MB | 0.01 | 0.008 | 20 |
| 70MB | 0.012 | 0.01 | 16.67 |
| 80MB | 0.013 | 0.011 | 15.38 |
| 90MB | 0.015 | 0.012 | 20 |
| 100MB | 0.017 | 0.013 | 23.53 |
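The percentage improvement values in Tables 1 to 4 are consistent with the formula (VM time − container time) / VM time × 100. A minimal sketch of that calculation follows; the function name `pct_improvement` is hypothetical and awk is used only for the floating-point arithmetic.

```shell
#!/bin/sh
# Hypothetical helper: percentage improvement of the container time ($2)
# over the VM time ($1), relative to the VM time, to two decimal places.
pct_improvement() {
    awk -v vm="$1" -v ct="$2" 'BEGIN { printf "%.2f\n", (vm - ct) / vm * 100 }'
}

# Example, using the 10MB row of Table 1:
#   pct_improvement 0.003 0.002    # prints 33.33
```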

Figure 1 shows the performance of the none I/O scheduler in a virtual machine versus a Docker container.

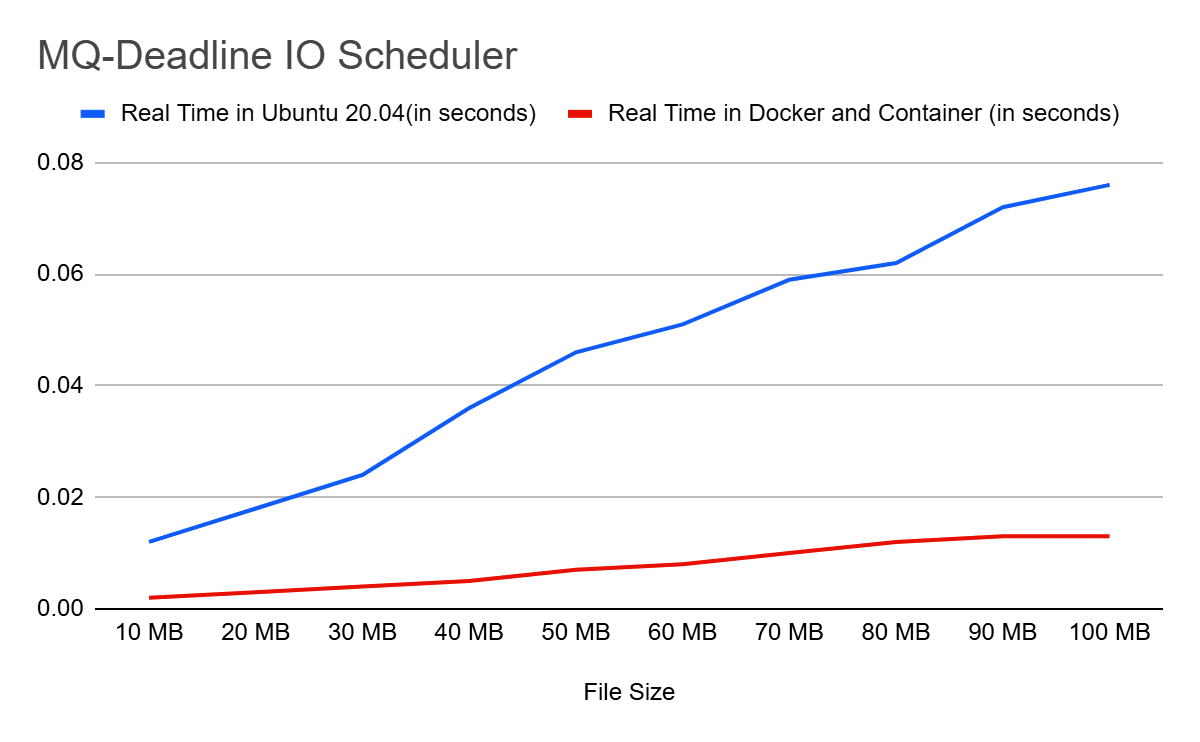

Figure 2 shows the performance of the mq-deadline I/O scheduler in a virtual machine versus a Docker container.

Table 2: mq-deadline I/O scheduler results

| File size | Real time in Ubuntu 20.04 (s) | Real time in Docker container (s) | Improvement (%) |
| --- | --- | --- | --- |
| 10MB | 0.012 | 0.002 | 83.33 |
| 20MB | 0.018 | 0.003 | 83.33 |
| 30MB | 0.024 | 0.004 | 83.33 |
| 40MB | 0.036 | 0.005 | 86.11 |
| 50MB | 0.046 | 0.007 | 84.78 |
| 60MB | 0.051 | 0.008 | 84.31 |
| 70MB | 0.059 | 0.01 | 83.05 |
| 80MB | 0.062 | 0.012 | 80.65 |
| 90MB | 0.072 | 0.013 | 81.94 |
| 100MB | 0.076 | 0.013 | 82.89 |

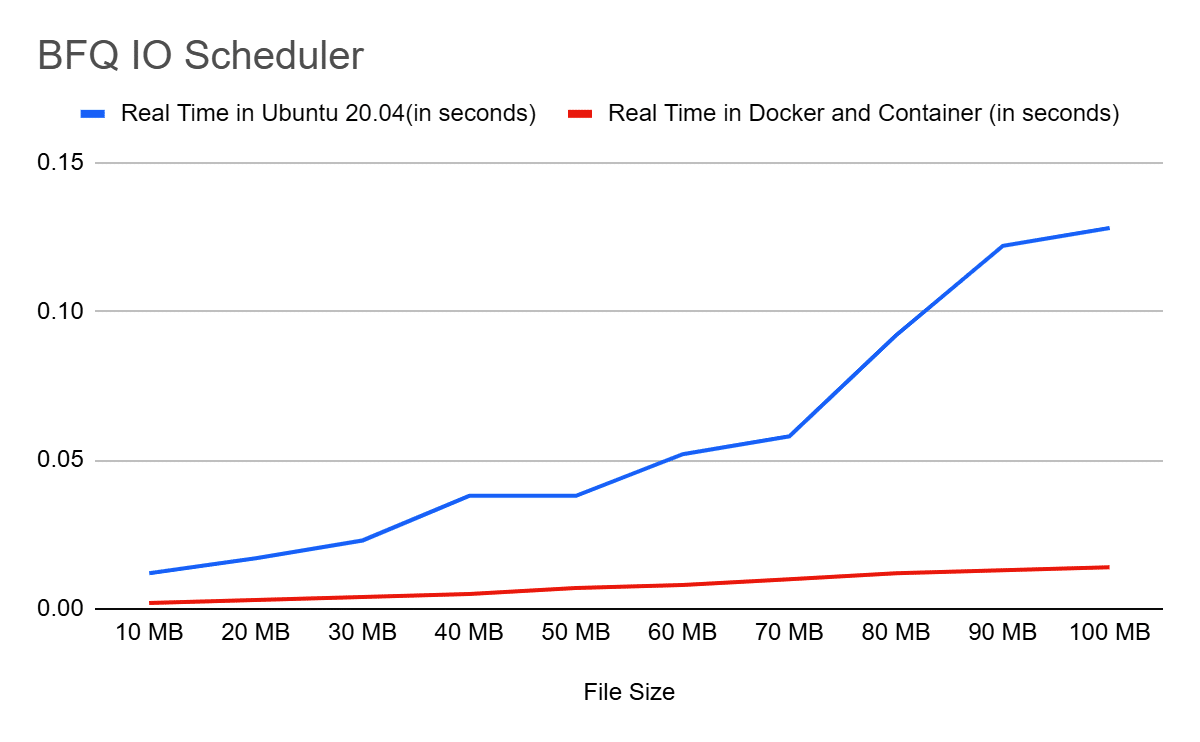

Figure 3 shows the performance of the bfq I/O scheduler in a virtual machine versus a Docker container.

Table 3: bfq I/O scheduler results

| File size | Real time in Ubuntu 20.04 (s) | Real time in Docker container (s) | Improvement (%) |
| --- | --- | --- | --- |
| 10MB | 0.012 | 0.002 | 83.33 |
| 20MB | 0.017 | 0.003 | 82.35 |
| 30MB | 0.023 | 0.004 | 82.61 |
| 40MB | 0.038 | 0.005 | 86.84 |
| 50MB | 0.038 | 0.007 | 81.58 |
| 60MB | 0.052 | 0.008 | 84.62 |
| 70MB | 0.058 | 0.01 | 82.76 |
| 80MB | 0.092 | 0.012 | 86.96 |
| 90MB | 0.122 | 0.013 | 89.34 |
| 100MB | 0.128 | 0.014 | 89.06 |

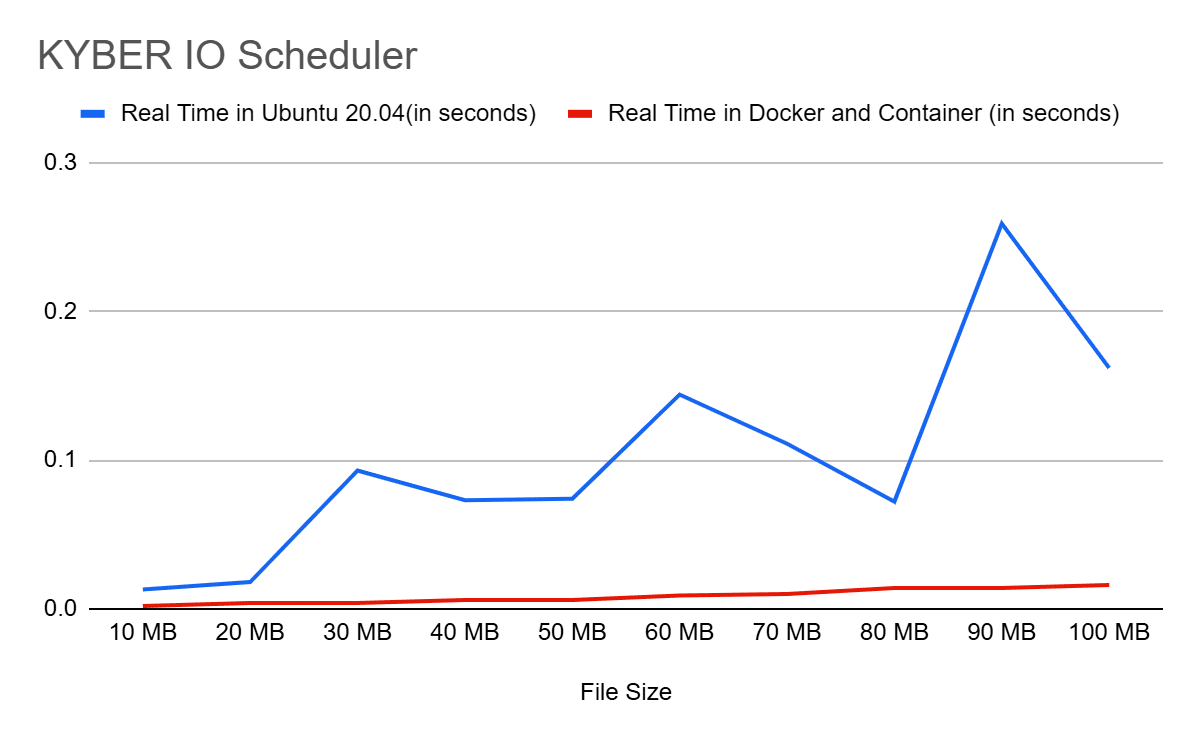

Figure 4 shows the performance of the kyber I/O scheduler in a virtual machine versus a Docker container.

Table 4: kyber I/O scheduler results

| File size | Real time in Ubuntu 20.04 (s) | Real time in Docker container (s) | Improvement (%) |
| --- | --- | --- | --- |
| 10MB | 0.013 | 0.002 | 84.62 |
| 20MB | 0.018 | 0.004 | 77.78 |
| 30MB | 0.093 | 0.004 | 95.7 |
| 40MB | 0.073 | 0.006 | 91.78 |
| 50MB | 0.074 | 0.006 | 91.89 |
| 60MB | 0.144 | 0.009 | 93.75 |
| 70MB | 0.111 | 0.01 | 90.99 |
| 80MB | 0.072 | 0.014 | 80.56 |
| 90MB | 0.259 | 0.014 | 94.59 |
| 100MB | 0.162 | 0.016 | 90.12 |

Test 2: Effects of high read latency

This benchmark checks how well the I/O scheduler works when reading several small files while also reading a large file.

The code for changing to the none scheduler and then running Benchmark Test 2 is given below.

Step 1: Perform the read operation in the background.

Step 2: Record the time taken to read every file in the Android SDK.

$ while true; do cat BigFile.txt > /dev/null; done &

$ echo

$ echo none

$ echo none > /sys/block/sda/queue/scheduler

$ time find ../../../Android/Sdk/. -type f -exec cat '{}' ';' > /dev/null # path to Android Sdk
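To repeat the same measurement for every available scheduler, the scheduler list can be read from the sysfs file and iterated over. The following is a rough sketch rather than the procedure used for the results below; the function names `list_schedulers` and `for_each_scheduler` are hypothetical, and writing to the real scheduler file requires root privileges.

```shell
#!/bin/sh
# Hypothetical helper: print each scheduler name on its own line,
# dropping the square brackets that mark the active one.
list_schedulers() {
    tr -d '[]' | tr ' ' '\n' | sed '/^$/d'
}

# Hypothetical helper: select each scheduler in turn by writing its name
# to the scheduler file ($1), then run the given command (remaining arguments).
for_each_scheduler() {
    sched_file=$1
    shift
    for s in $(list_schedulers < "$sched_file"); do
        printf '%s\n' "$s" > "$sched_file"
        echo "== $s =="
        "$@"
    done
}

# Example (as root), with the timed command passed as arguments:
#   for_each_scheduler /sys/block/sda/queue/scheduler \
#       bash -c 'time find ../../../Android/Sdk/. -type f -exec cat {} \; > /dev/null'
```

The scheduler file path is taken as a parameter so that the same loop can be applied to a device other than sda.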

The test results obtained for various I/O schedulers are given in Table 5.

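Table 5: Test results obtained for various I/O schedulers

| I/O scheduler | VM (s) | Container (s) | Improvement (%, relative to container time) |
| --- | --- | --- | --- |
| none | 94.133 | 79.805 | 17.95 |
| mq-deadline | 99.765 | 82.228 | 21.33 |
| kyber | 100.315 | 86.747 | 15.64 |
| bfq | 114.214 | 92.577 | 23.37 |

Note that the percentages in Table 5 are computed relative to the container time, whereas those in Tables 1 to 4 are relative to the VM time. Relative to the VM time, the improvements in Table 5 are 15.22, 17.58, 13.53 and 18.94 per cent for none, mq-deadline, kyber and bfq, respectively.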

The four I/O schedulers (none, kyber, bfq and mq-deadline) were evaluated, and the none I/O scheduler outperformed the others in both Test 1 and Test 2, making it the most suitable choice for the given test conditions. Interestingly, every I/O scheduler performed better inside a container than in the virtual machine.

These findings are particularly relevant for processes that involve intensive disk access. In the tests above, reads inside a Docker container were consistently faster than in the virtual machine. For workloads with numerous read and write operations, such as reading a .csv file for data analytics and storing the results in a file, the none I/O scheduler can be particularly useful in enhancing performance.