Tired of spending long hours in debugging your code? The logging system in Python is designed to help you keep an eye on these bugs and improve the quality of your code substantially. Let’s see how it works.

Tired of spending long hours in debugging your code? The logging system in Python is designed to help you keep an eye on these bugs and improve the quality of your code substantially. Let’s see how it works.

Log calls from the program indicate that certain events have occurred during the timestamp, may be from the IP address, and also give some additional information. The events hold the descriptive message — for example, dynamic or static values, importance of message (debug, info, warn, error), etc.

Python provides a logging system as a part of its standard library, making it easier for you to add it to your application.

Logging module

The logging module that helps add logs is easy and readily

available, as shown below:

import logging

logging.debug(‘This is a debug message’) logging.info(‘This is an info message’) logging.warning(‘This is a warning message’) logging.error(‘This is an error message’) logging.critical(‘This is a critical message’)

The output is:

WARNING:root:This is a warning message ERROR:root:This is an error message CRITICAL:root:This is a critical message

As seen from the code, there are five levels of logging, which indicate the severity of events. Each level has a corresponding severity associated. The levels defined in Table 1 are based on the severity in increasing order.

| Level | Used for |

| Debug | Detailed messages, usually for diagnosing problems |

| Info | Confirmation or facts of working as expected |

| Warning | Alarming for future breaks, but until now the application is working |

| Error | Due to a serious problem in the function |

| Critical | Due to a serious problem, the program failed to continue to execute |

Table 1: Usage of each log level

From the output, it can be seen that the debug and info messages are not being logged, as the default severity is set to ‘warning’. So messages will be printed for the warning level and above.

Let us now see how the severity levels can be changed by changing the configurations. We will also look at how to change the format of the output, later on.

| Parameter | Description |

| level | Root logger will be set to the specified severity level |

| filename | Name of the file logs should be stored |

| filemode | Mode in which the log file should be opened; default is ‘a’ append mode |

| format | Pattern for the log message to be written |

| datefmt | Format in which date should be written |

Table 2: Commonly used log parameters

Several configurations can be done.

Basic configuration

We can use the method given below to configure the logging:

import logging logging.basicConfig(level=logging.DEBUG, filename=’/project/log/app.log’, filemode=’w’, format=’%(asctime)s - %(message)s’, datefmt=’%d-%b-%y %H:%M:%S’)

logging.debug(‘This is a debug message’) logging.info(‘This is an info message’) logging.warning(‘This is a warning message’) logging.error(‘This is an error message’) logging.critical(‘This is a critical message’)

The output (from app.log) is:

27-Nov-21 07:28:09 - This is a debug message 27-Nov-21 07:28:09 - This is an info message 27-Nov-21 07:28:09 - This is a warning message 27-Nov-21 07:28:09 - This is an error message 27-Nov-21 07:28:09 - This is a critical message

Through this basic configuration we observe that:

- Debug and info messages are logged, as the logging level is now ‘debug’ and above.

- Log contents are not displayed in stdout; rather, they are saved in the file called app.log.

- Message format is different, because it works as per the format given in the format parameter.

- The date format is also different.

File configuration

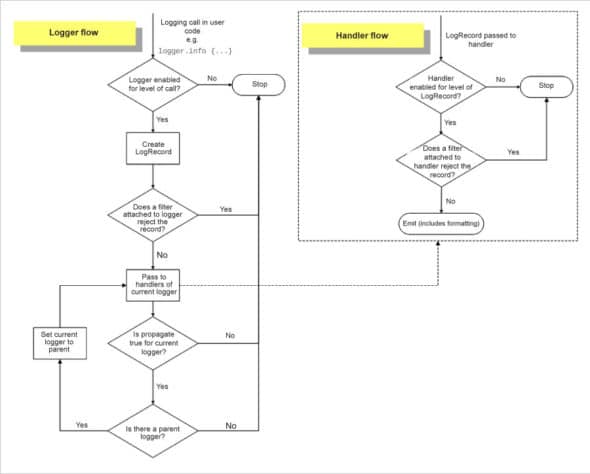

Before we get to know the file configuration, let’s look at how logging works.

Logging flow: So far, we have seen the default logger named root, which is used by the logging module whenever its functions are called directly, like logging.debug(). But it is preferable to define your own logger.

The logging module follows the modular approach that splits functionalities into components.

1. Logger: This is exposed to application code for direct usage, and the logging is performed by calling methods on instances of the Logger class. This class performs three duties.

- Exposes several methods to application code so that applications can log messages at runtime. A good convention is to use the module name to name the loggers.

logger = logging.getLogger(__name__)

- Logger objects determine which log messages to act upon based upon the severity or filter objects.

Logger.setLevel() Logger.addFilter()and Logger.removeFilter()

- Logger objects pass along relevant log messages to all interested log handlers.

Logger.addFilter()and Logger.removeFilter()

2. LogRecord: Loggers automatically create LogRecord objects that have all the information related to the event being logged, like the name of the logger, function, line number, message, and more.

3. Filter: This provides a finer grained facility for determining which log records to output. We will later see an example that uses filters.

4. Handler: This sends the LogRecords (created by loggers) to the appropriate destination. For example, it could be file, stdout, http, etc. For each destination, handler has a subclass such as file: FileHandler, stdout: StreamHandler, http: HTTPHandler. Logger objects can add zero or more handler objects to themselves with an addHandler() method.

5. Formatter: This specifies the format of LogRecord by mentioning it in the list of attributes that need to be logged in order to be written in the final output.

We can configure logging in three ways:

1. By creating loggers, handlers, and formatters explicitly using Python code that calls the configuration methods. In the example given below, though a logger is created with the same module name, the console handler is created with the debug level set and the formatter is created with time, module name, level and message.

import logging # create logger logger = logging.getLogger(__name__) logger.setLevel(logging.DEBUG) # create console handler and set level to debug ch = logging.StreamHandler() ch.setLevel(logging.DEBUG) # create formatter formatter = logging.Formatter(‘%(asctime)s - %(name)s - %(levelname)s - %(message)s’) # add formatter to ch ch.setFormatter(formatter) # add ch to logger logger.addHandler(ch) # ‘application’ code logger.debug(‘debug message’) logger.info(‘info message’) logger.warning(‘warn message’) logger.error(‘error message’) logger.critical(‘critical message’)

The output is:

$ python handlerconfig.py 2021-11-28 14:45:45,096 - __main__ - DEBUG - debug message 2021-11-28 14:45:45,097 - __main__ - INFO - info message 2021-11-28 14:45:45,097 - __main__ - WARNING - warn message 2021-11-28 14:45:45,097 - __main__ - ERROR - error message 2021-11-28 14:45:45,097 -__main__- CRITICAL- critical message

2. By creating a logging config file and reading it using the fileConfig()function.

Shown below is an example where a logger is created with the ‘simpleExample’ logger name, a console handler with the debug level set, and file handler with info level set, making sure that the debug log will not be printed. Note that both the configurations are taken from the logging.conf file and it’s important to have the handler and logger set for ‘root’.

- In file handler:

- The propagate entry is set to 1 to indicate that messages must propagate to handlers higher up the logger hierarchy from this logger, or 0 to indicate that messages are not propagated to handlers up the hierarchy. The qualname entry is the hierarchical channel name of the logger – it is the name used by the application to get the logger.

- In file rotate handler:

- Here we have used file rotate handler to avoid logging all the content in one huge file. We have configured maxBytes=10, which means about 10 bytes written will be rotated. backupcount=5 indicates how many rotated files need to be kept, and then the remaining are cleaned.

3. By creating formatter with time, module name, level and message:

fileconfig.py import logging import logging.config logging.config.fileConfig(‘logging.conf’) # create logger logger1 = logging.getLogger(‘simpleExample’) logger = logging.getLogger() # ‘application’ code logger.debug(‘debug message’) logger1.info(‘info message’) logger1.warning(‘warn message’) logger1.error(‘error message’) logger1.critical(‘critical message’) loggin.conf [loggers] keys=root,simpleExample [handlers] keys=consoleHandler,simpleFileHandler [formatters] keys=simpleFormatter [logger_root] level=DEBUG handlers=consoleHandler [logger_simpleExample] level=INFO handlers=simpleFileHandler qualname=simpleExample propagate=0 [handler_consoleHandler] class=StreamHandler formatter=simpleFormatter args=(sys.stdout,) [handler_simpleFileHandler] class=handlers.RotatingFileHandler formatter=simpleFormatter maxBytes=10 args=(‘test.log’,’a’,10,5) [formatter_simpleFormatter] format=%(asctime)s - %(name)s - %(levelname)s - %(message)s

The output is:

$ python fileconfig.py 2021-11-28 17:47:26,858 - root - DEBUG - debug message $ tail test.log* ==> test.log <== 2021-11-28 18:48:38,520-simpleExample - CRITICAL - critical message ==> test.log.1 <== 2021-11-28 18:48:38,519 - simpleExample - ERROR - error message ==> test.log.2 <== 2021-11-28 18:48:38,518 - simpleExample - WARNING - warn message ==> test.log.3 <== 2021.11.28 8:48:38,517 - simpleExample - INFO - info message

In the next example, let’s see how to create a dictionary of configuration information and pass it to the dictConfig() function. This is simple and can be done using the yaml format as configuration. It creates a logger with the same module name, a console handler with the debug level set and a formatter with time, module name, level and message. The dictConfig expects input to be in the dict object, so that dict can be defined and the logger method called.

dict_filelog.py

import logging

import logging.config

import yaml

with open(‘dict_config.yaml’, ‘r’) as f:

config = yaml.safe_load(f.read())

logging.config.dictConfig(config)

logger = logging.getLogger(__name__)

logger.debug(‘This is a debug message’)

logger.info(‘This is a info message’)

logger.error(‘This is a error message’)

dict_config.yaml

version: 1

formatters:

simple:

format: ‘%(asctime)s - %(name)s - %(levelname)s - %(message)s’

handlers:

console:

class: logging.StreamHandler

level: INFO

formatter: simple

stream: ext://sys.stdout

loggers:

sampleLogger:

level: DEBUG

handlers: [console]

propagate: no

root:

level: DEBUG

handlers: [console]

The output is:

2021-11-28 19:25:00,508 -_main_-DEBUG-This is a debug message

2021-11-28 19:25:00,508 -_main_-INFO - This is a info message

2021-11-28 19:25:00,509 -_main_-ERROR-This is a error message

| Note: When no configuration is provided, the events become an output using a handler of last resort, which is stored in logging.lastResort. This acts as StreamHandler, which writes messages to sys.stderr. Handler’s level is set to ‘warning’ and the behaviour of the logging package in these circumstances is dependent on the Python version. In this article we are using Python 3.2 and above. |

Logging from multiple modules

- Calling the same getLogger(“NAME”) multiple times will return a reference to the same object. This can be used across modules to get the same object but requires the same Python interpreter process.

- The application code can define and configure a parent logger in one module, and can only create but not configure a child logger in a separate module. All logger calls to the child will pass up to the parent. The parent and child mapping is done by the name of the logger. Here is an example in the getLogger method, where all the configuration is inherited from the parent.

parent: getLogger(‘test_app’) child: getLogger(‘test_app.sub_app’) File: main_app.py import sys import logging import sub_app # Create a custom logger with “test_app” logger = logging.getLogger(“test_app”) logger.setLevel(logging.DEBUG) # Create handlers # create console handler with debug log level c_handler = logging.StreamHandler(sys.stdout) # create file handler with error log level f_handler = logging.FileHandler(‘file.log’, ‘w’) f_handler.setLevel(logging.WARNING) # Create formatters and add it to handlers c_format = logging.Formatter(‘%(name)s - %(levelname)s - %(message)s’) f_format = logging.Formatter(‘%(asctime)s - %(name)s - %(levelname)s - %(message)s’) c_handler.setFormatter(c_format) f_handler.setFormatter(f_format) # Add handlers to the logger logger.addHandler(c_handler) logger.addHandler(f_handler) print(c_handler) print(logger) logger.debug(‘This is a debug from main module’) logger.info(‘This is a info from main module’) logger.warning(‘This is a warning from main module’) logger.error(‘This is an error from main module’) logger.info(“Before calling the sub_app_function”) sub_app.sub_app_function() logger.info(“After calling the sub_app_function”) File: sub_app.py import logging # create logger module_logger = logging.getLogger(‘test_app.sub_app’) def sub_app_function(): module_logger.error(“Debug message from sub_app”)The output is:

% python multiple_modules_logger.py <StreamHandler <stdout> (NOTSET)> <Logger test_app (DEBUG)> test_app - DEBUG - This is a debug from main module test_app - INFO - This is a info from main module test_app - WARNING - This is a warning from main module test_app - ERROR - This is an error from main module test_app - INFO - Before calling the sub_app_function test_app.sub_app - ERROR - Debug message from sub_app test_app - INFO - After calling the sub_app_function % cat file.log 2021-11-28 23:26:04,332 - test_app - WARNING - This is a warning from main module 2021-11-28 23:26:04,332 - test_app - ERROR - This is an error from main module 2021-11-28 23:26:04,332 - test_app.sub_app - ERROR - Debug message from sub_app

Logging from multiple threads

Logging from multiple threads is as simple as the normal one. To differentiate the logs generated from each thread, threadName can be used. An example of logging from the main thread and another thread is shown below.import logging import threading import time def worker(arg): while not arg[‘stop’]: logging.debug(‘Hi from thread 2’) time.sleep(0.5) def main(): logging.basicConfig(level=logging.DEBUG, format=’%(asctime)s - %(name)s - %(levelname)s - %(threadName)s - %(message)s’) info = {‘stop’: False} thread = threading.Thread(target=worker, args=(info,)) thread.start() while True: try: logging.debug(‘Hello from main thread’) time.sleep(1.0) except KeyboardInterrupt: info[‘stop’] = True break thread.join() if __name__ == ‘__main__’: main()The output is:

% python threadlogger.py 2021-11-28 22:38:54,207 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:54,207 - root - DEBUG - MainThread - Hello from main thread 2021-11-28 22:38:54,712 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:55,212 - root - DEBUG - MainThread - Hello from main thread 2021-11-28 22:38:55,214 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:55,716 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:56,217 - root - DEBUG - MainThread - Hello from main thread 2021-11-28 22:38:56,220 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:56,725 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:57,221 - root - DEBUG - MainThread - Hello from main thread 2021-11-28 22:38:57,229 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:57,734 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:58,223 - root - DEBUG - MainThread - Hello from main thread 2021-11-28 22:38:58,236 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:58,738 - root - DEBUG - Thread-1 - Hi from thread 2 2021-11-28 22:38:59,226 - root - DEBUG - MainThread - Hello from main thread 2021-11-28 22:38:59,239 - root - DEBUG - Thread-1 - Hi from thread

Context logging and filter

- LoggerAdapter: Sometimes the logging output is needed to contain contextual information in addition to the parameters passed to the logging call. For example, in a networked application, it may be desirable to log client-specific information in the log (e.g., remote client’s user name, or IP address). An easy way in which you can pass contextual information to the output along with the logging event information is to use the LoggerAdapter class. In the example that follows we are adding the host_ip to all the log events.

- Filter: A filter can be used for both the logger and handler levels, as it can stop certain information from being passed. It will also inject additional information into the LogRecord, which will be logged. In our example below, we are using filters to allow only certain levels to be logged. Though the log level is set to ‘debug’, we are only allowing ‘info’ and ‘error’ to be logged.

| Note: These are fictional examples, as use cases in real life vary. |

import sys

import logging

host_ip = “localhost”

class StdoutFilter(logging.Filter):

def filter(self, record):

return record.levelno in (logging.INFO, logging.ERROR)

logger = logging.getLogger(‘sampleApp’)

logger.setLevel(logging.DEBUG)

handler = logging.StreamHandler(sys.stdout)

handler.addFilter(StdoutFilter())

formatter = logging.Formatter(‘%(host_ip)s - %(asctime)s - %(name)s - %(levelname)s - %(message)s’)

handler.setFormatter(formatter)

logger.addHandler(handler)

logger = logging.LoggerAdapter(logger, {‘host_ip’: host_ip})

print(logger)

logger.debug(‘This is a debug from main module’)

logger.info(‘This is a info from main module’)

logger.warning(‘This is a warning from main module’)

logger.error(‘This is an error from main module’)

The output is:

<LoggerAdapter sampleApp (DEBUG)> localhost - 2021-11-29 00:26:18,566 - sampleApp - INFO - This is a info from main module localhost - 2021-11-29 00:26:18,567 - sampleApp - ERROR - This is an error from main module

Best practices

- Create loggers using the getlogger function

- Use appropriate log levels in different regions

- Create module level loggers

- Use rotating file handler

- Avoid opening the same log file multiple times

- Do not pass logger as parameter in class or methods

- Include timestamp in logs; it’s preferable to use the standard format called ISO-8601. For example:

import logging logging.basicConfig(format=’%(asctime)s %(message)s’) logging.info(‘Example of logging with ISO-8601 timestamp’)

As seen, the Python logging module is very flexible, customisable and easy to use. It has many more features such as context based logging, customisable log records, logging to multiple destinations and servers, multiple processes logging to the same file log, etc. The ready code is available in the Python cookbook URL at https://docs.python.org/3/howto/logging-cookbook.html.

If you haven’t been using logging in your applications yet, it is a good time to begin doing so. You may be surprised at how much it enhances the quality of the code being written.